Collaborative Integration, Publishing and Analysis of Distributed Scholarly Metadata

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Avoiding Greenwashing in Event Marketing: an Exploration of Concepts, Literature and Methods

Journal of Management and Sustainability; Vol. 7, No. 4; 2017 ISSN 1925-4725 E-ISSN 1925-4733 Published by Canadian Center of Science and Education Avoiding Greenwashing in Event Marketing: An Exploration of Concepts, Literature and Methods Kai-Michael Griese1, Kim Werner1 & Johannes Hogg2 1 School of Management, University of Applied Science, Osnabrück, Germany 2 School of Management, Fresenius University of Applied Science, Hamburg, Germany Correspondence: Kai-Michael Griese, School of Management, University of Applied Science, Osnabrück, Germany, Caprivistraße 30a, Tel: 49-541-969-3880. E-mail: [email protected] Received: August 25, 2017 Accepted: September 20, 2017 Online Published: October 25, 2017 doi:10.5539/jms.v7n4p1 URL: http://doi.org/10.5539/jms.v7n4p1 Abstract Greenwashing, defined by the Oxford Dictionary as “disinformation disseminated by an organization so as to present an environmentally responsible public image” can cause multifarious problems for companies. The phenomenon of greenwashing has, however, not attracted much attention in the event marketing literature to date. The purpose of this paper is twofold. It first describes and analyses the specific characteristics and features of greenwashing in event marketing. It then seeks to identify the current fundamental approaches of how to avoid greenwashing in event marketing and to assess their potential. A two-step literature analysis with complementary search approaches served as a methodical framework. First, journals related to event marketing were screened for the keywords “greenwashing” and “greenwash”. Next, the general literature was consulted for the same keywords. The results clearly demonstrate that the subject of greenwashing has been widely neglected in the event literature. -

Introduction to Sustainability Sustainability Essentials

SUSTAINABILITY ESSENTIALS A SERIES OF PRACTICAL GUIDES FOR THE OLYMPIC MOVEMENT INTRODUCTION TO SUSTAINABILITY SUSTAINABILITY ESSENTIALS SUSTAINABILITY ESSENTIALS Sustainability is one of the most pressing • The IOC as an organisation: To embrace challenges of our time across a wide sustainability principles and to include spectrum of social, environmental and sustainability in its day-to-day operations. economic matters. Major issues such as climate change, economic inequality and • The IOC as owner of the Olympic social injustice are affecting people Games: To take a proactive and leadership throughout the world. These are also role on sustainability and ensure that it is pressing concerns for the sports community, included in all aspects of the planning and both for managing its day-to-day affairs and staging of the Olympic Games. for its responsibilities towards young people and future generations. We also recognise • The IOC as leader of the Olympic that sport has an unrivalled capacity to Movement: To engage and assist Olympic motivate and inspire large numbers of Movement stakeholders in integrating people. This is why we believe that the sustainability within their own organisations Olympic Movement has both a duty and an and operations. opportunity to contribute actively to global sustainability in line with our vision: “Building Following on from Olympic Agenda 2020, a better world through sport”. we issued the IOC Sustainability Strategy in December 2016. The Strategy is based on It is therefore logical that sustainability forms our three spheres of responsibility and five one of the key elements of Olympic Agenda focus areas, as illustrated below. 2020, the Olympic Movement’s strategic roadmap adopted in December 2014. -

Building a Visual Editor for Wikipedia

Building a Visual Editor for Wikipedia Trevor Parscal and Roan Kattouw Wikimania D.C. 2012 Trevor Parscal Roan Kattouw Rob Moen Lead Designer and Engineer Data Model Engineer User Interface Engineer Wikimedia Wikimedia Wikimedia Inez Korczynski Christian Williams James Forrester Edit Surface Engineer Edit Surface Engineer Product Analyst Wikia Wikia Wikimedia The People Wikimania D.C. 2012 Parsoid Team Gabriel Wicke Subbu Sastry Lead Parser Engineer Parser Engineer Wikimedia Wikimedia The People Wikimania D.C. 2012 The Complexity Problem Wikimania D.C. 2012 Active Editors 20k 0 2001 2007 Today Growth Stagnation The Complexity Problem Wikimania D.C. 2012 just messing around Testing testing 123... The Complexity Problem Wikimania D.C. 2012 The Review Problem Wikimania D.C. 2012 Balancing the ecosystem Difficulty Editing Reviewing The Review Problem Wikimania D.C. 2012 Balancing the ecosystem Difficulty Editing Reviewing The Review Problem Wikimania D.C. 2012 Balancing the ecosystem Difficulty Editing Reviewing The Review Problem Wikimania D.C. 2012 Balancing the ecosystem Difficulty Editing Reviewing The Review Problem Wikimania D.C. 2012 Wikitext enthusiasts CC-BY-SA-3.0, http://commons.wikimedia.org/wiki/File:Usfa-heston.gif The Expert Problem Wikimania D.C. 2012 Exit strategy 100% Preference for Wikitext Capabilities of visual tools 0% The Expert Problem Wikimania D.C. 2012 To what extent? CC-BY-SA-3.0, http://commons.wikimedia.org/wiki/File:TriMet_MAX_Green_Line_Train_on_Portland_Transit_Mall.jpg The Expert Problem Wikimania D.C. 2012 To what extent? CC-BY-SA-3.0, http://commons.wikimedia.org/wiki/File:TriMet_MAX_Green_Line_Train_on_Portland_Transit_Mall.jpgCC-BY-SA-3.0, http://commons.wikimedia.org/wiki/File:TriMet_1990_Gillig_bus_carrying_bike.jpg The Expert Problem Wikimania D.C. -

Implementation Plan for ISO 14001:2004 Environmental Management in Illco Factory”

University of Azuay Faculty of Law School of International Studies “Implementation plan for ISO 14001:2004 Environmental Management in Illco Factory” Graduate work to obtain the degree of International Studies with biligual honors in Foreign Trade Author: Caroly Alexandra Beltrán Vázquez Director: Engineer Juan Manuel Maldonado Matute Cuenca-Ecuador 2015 DEDICATION This research paper is dedicated to God, the guide of my life, for giving me the opportunity to complete my studies. To my parents, for all their efforts, love and support received, without them I would not be the person I try to be every day. To my teachers for their love and dedication to educate me and help me become an excellent professional. ii ACKNOWLEDGEMENT I thank my advisor Juan Maldonado for his time and patience and for giving me guidance during the development of this study. To the owners of Illco Factory, for giving me the necessary information and trust to develop this thesis. iii INDEX OF CONTENTS DEDICATION .................................................................................................................. ii ACKNOWLEDGEMENT ...............................................................................................iii ABSTRACT .................................................................................................................... vii INTRODUCTION............................................................................................................. 1 1.1 Generalities ............................................................................................................ -

Insights Risk Resilience

Insights Risk Resilience ISSUE 01 / APRIL 2016 When Standards MatterMatter. Insights 22 Intro ISSUE 01 / APRIL 2016 They say that there is nothing more important than being fully equipped with the right knowledge, expertise, and resources to provide your customers satisfactory products and/ or services. But what is more important is the recognition of the dedication and hard work your 28 organization invests day-in and 10 day-out to not only meet customer expectations, but also exceed them. Everyday PECB is one step closer 34 to our vision of a world where best practices are widely disseminated, accessible, affordable, known and used. Our mission to enhance the accessibility of standards, compliance and education for people and organizations by 04 Why Risk Resilience? reducing the certification costs and widening the range for education and certification programs is 06 Risk Assessment in project Management gradually being accomplished. We will continue our journey to 10 Information Security in Banks and Financial Institutions support worldwide professionals that want to differentiate themselves, and follow best 14 Replacing OHSAS 18001 what will ISO 45001 Bring? practices based on internationally recognized standards. 18 Are You Metting Customer Expectations? Eric Lachapelle 22 Social Engineering and Risk From Cyber-Attacks CEO at PECB 14 28 Risk Assessment in Different Disciplines 30 Business Continuity with Cybersecurity 32 The Weakest Link in Information Security 34 News Flash 18 35 What’s Happening on Twitter In recent years, a rapid Before entering such market, it is very important to understand the needs, rules and strategies increase in foreign which help you to grow and increase profitability. -

Uci Sustainability Guidelines June 2021 Table of Contents

UCI SUSTAINABILITY GUIDELINES JUNE 2021 TABLE OF CONTENTS 01 | PAGE 03 05 | PAGE 51 HOW TO USE THESE SUSTAINABLE CYCLING GUIDELINES EVENT CHECKLISTS 02 | PAGE 04 06 | PAGE 80 INTRODUCTION ADVOCACY CHECKLISTS 03 | PAGE 09 07 | PAGE 88 SUSTAINABILITY ACKNOWLEDGEMENTS OVERVIEW & REFERENCES 04 | PAGE 23 08 | PAGE 90 SUSTAINABILITY IN ABOUT CYCLING FOCUS AREAS THE UCI PAGE 2 HOW TO USE 01 THESE GUIDELINES These guidelines aim to provide simple, practical and essential information on key aspects of sustainability. It is a tool that will help National Federations, Event Organisers, Teams, and other cycling stakeholders navigate this subject’s complexities and start the journey to developing effective sustainability programmes. Introduces the UCI’s commitment to sustainability and its four pillars, Section 1 which are the foundation for transforming our sport, and outlines the role cycling can play in an increasingly challenging world. Gives a broad overview of sustainability, the United Nations Sustainable Section 2 Development Goals, the ISO 20121 management system, and where to start with taking climate action. Contains short overviews, providing a reference point for cycling’s Section 3 essential focus areas, with case studies of sustainability in practice from cycling organisations around the world. Provides checklists highlighting best practice to help organisers deliver sustainable events that maximise positive and minimise negative impacts on people and the planet. These actions are neither exhaustive nor Section 4 compulsory. Organisers must think about what is important and realistic for events according to specific local conditions and plan to address priority objectives. Contains tools and resources to advocate for cycling as a mode of Section 5 transport and encourage better inclusion within our sport. -

ISO 14001: Current Status and Future Challenges Dick Hortensius NEN

ISO 14001: current status and future challenges Dick Hortensius NEN Management Systems Titel presentatie 1 Agenda • Background and current status ISO 14000 series • Looking into the future: – ISO SAG-MSS and JTCG – Study group on future challenges for EMS and ISO 14001 • Some future challenges for ISO 14001: – Integration of management systems – Value chain management – Social responsibility • The ISO answer 2 The origin of ISO 14001 • 1990: Preparation of UNCED; The Business Council for Sustainable Development expressed a need for global standards for environmental performance • 1991-1993: ISO Strategic Advisory Group on Environment (SAGE) • 1993: Establishment of ISO/TC 207 Environmental management • 1996: 1st edition of ISO 14001 published 3 ISO 14001 is about….: Environmental policy EMS A I S M P P E A C C T T S S Environmental performance Company Environment ….managing environmental aspects 4 Scope of ISO 14001 Plan Control Act Do Influence Influence Check Environmental management system 5 Vision of ISO/TC 207 ISO/TC 207 is recognized as a leading world-forum for the development of International Standards that lead to improvements in environmental performance of organizations and their products, facilitate world trade and contribute to sustainable development 6 The ISO 14000 series 14046 14067 Water footprint 14064 Carbon footprint GHG monitoring Plan 14040 series Act Do14020 series LCA Environmental labelling Check 14062 14063 14050 14006 Ecodesign 14015 7 ISO 14000 toolbox for environmental management Description of Prioritizing env. The ISO 14040 – series aspects environmental Life Cycle Assessment performance of products ISO 14001 ISO 14001 Improvement of Integration of env. ISO 14062/14006 and 14004 Design for Environment environmental and 14004 aspects in design performance of and development products The ISO 14020 series Environmental labels and Information about Act Plan Declarations environmental ISO 14067 on carbon FP aspects of products Communicating 14046 on water FP env. -

Working-With-Mediawiki-Yaron-Koren.Pdf

Working with MediaWiki Yaron Koren 2 Working with MediaWiki by Yaron Koren Published by WikiWorks Press. Copyright ©2012 by Yaron Koren, except where otherwise noted. Chapter 17, “Semantic Forms”, includes significant content from the Semantic Forms homepage (https://www. mediawiki.org/wiki/Extension:Semantic_Forms), available under the Creative Commons BY-SA 3.0 license. All rights reserved. Library of Congress Control Number: 2012952489 ISBN: 978-0615720302 First edition, second printing: 2014 Ordering information for this book can be found at: http://workingwithmediawiki.com All printing of this book is handled by CreateSpace (https://createspace.com), a subsidiary of Amazon.com. Cover design by Grace Cheong (http://gracecheong.com). Contents 1 About MediaWiki 1 History of MediaWiki . 1 Community and support . 3 Available hosts . 4 2 Setting up MediaWiki 7 The MediaWiki environment . 7 Download . 7 Installing . 8 Setting the logo . 8 Changing the URL structure . 9 Updating MediaWiki . 9 3 Editing in MediaWiki 11 Tabs........................................................... 11 Creating and editing pages . 12 Page history . 14 Page diffs . 15 Undoing . 16 Blocking and rollbacks . 17 Deleting revisions . 17 Moving pages . 18 Deleting pages . 19 Edit conflicts . 20 4 MediaWiki syntax 21 Wikitext . 21 Interwiki links . 26 Including HTML . 26 Templates . 27 3 4 Contents Parser and tag functions . 30 Variables . 33 Behavior switches . 33 5 Content organization 35 Categories . 35 Namespaces . 38 Redirects . 41 Subpages and super-pages . 42 Special pages . 43 6 Communication 45 Talk pages . 45 LiquidThreads . 47 Echo & Flow . 48 Handling reader comments . 48 Chat........................................................... 49 Emailing users . 49 7 Images and files 51 Uploading . 51 Displaying images . 55 Image galleries . -

Editing Wikipedia: a Guide to Improving Content on the Online Encyclopedia

wikipedia globe vector [no layers] Editing Wikipedia: A guide to improving content on the online encyclopedia Wikimedia Foundation 1 Imagine a world in which every single human wikipedia globebeing vector [no layers] can freely share in the sum of all knowledge. That’s our commitment. This is the vision for Wikipedia and the other Wikimedia projects, which volunteers from around the world have been building since 2001. Bringing together the sum of all human knowledge requires the knowledge of many humans — including yours! What you can learn Shortcuts This guide will walk you through Want to see up-to-date statistics about how to contribute to Wikipedia, so Wikipedia? Type WP:STATS into the the knowledge you have can be freely search bar as pictured here. shared with others. You will find: • What Wikipedia is and how it works • How to navigate Wikipedia The text WP:STATS is what’s known • How you can contribute to on Wikipedia as a shortcut. You can Wikipedia and why you should type shortcuts like this into the search • Important rules that keep Wikipedia bar to pull up specific pages. reliable In this brochure, we designate shortcuts • How to edit Wikipedia with the as | shortcut WP:STATS . VisualEditor and using wiki markup • A step-by-step guide to adding content • Etiquette for interacting with other contributors 2 What is Wikipedia? Wikipedia — the free encyclopedia that anyone can edit — is one of the largest collaborative projects in history. With millions of articles and in hundreds of languages, Wikipedia is read by hundreds of millions of people on a regular basis. -

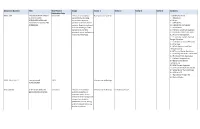

Document Number Title Most Recent Publication Date Scope Sector 1 Sector 2 Sector 3 Sector 4 Contents

Document Number Title Most Recent Scope Sector 1 Sector 2 Sector 3 Sector 4 Contents Publication Date ARINC 654 ENVIRONMENTAL DESIGN 9/12/1994 Refers electromagnetic Transportation Systems 1.0 INTRODUCTION GUIDELINES FOR compatibility, shielding, 1.1 Objectives INTEGRATED MODULAR thermal management, 1.2 Scope AVIONICS PACKAGING AND vibration and shock of IMA 1.3 References INTERFACES systems. Emphasis is placed 2.0 VIBRATION AND SHOCK both on the design of IMA 2.1 Introduction components and their 2.2 Vibration and Shock Isolation electrical, optical and electro- 3.0 THERMAL CONSIDERATIONS mechanical interfaces. 3.1 Thermal Management 3.1.1 Electronic System Thermal Design Objectives 3.1.2 Design Condition Definitions 3.1.3 Air Flow 3.1.4 Fully Enclosed and Flow- Through Cooling 3.1.5 Thermal Design Conditions 3.1.6 Cooling Hole Sizes - Limit Cases 3.2 Electronic Parts Application 3.3 Ambient Temperatures 3.4 Equipment Sidewall Temperature 3.5 LRM Thermal Appraisal 3.6 Thermal Interface Information 3.7 Materials for Thermal Design 4.0 DESIGN LIFE 4.1 Operational Design Life 4.2 Failure Modes ARINC 666 ITEM 7.0 Encryption and 2002 Information Technology f l/ Authentication ATIS 0300100 IP NETWORK DISASTER 1/12/2009 Pertains to enumerate Information Technology Emergency Services RECOVERY FRAMEWORK potential proactive or automatic policy-driven network traffic management actions that should be performed prior to, during, and immediately following disaster conditions. ATIS 0300202 Internetwork Operations 1/11/2009 Describes the cooperative Information Technology Emergency Services communications Guidelines for Network network management Management of the Public actions (that may be) Telecommunications required of interconnected Networks under Disaster network operators during Conditions emergency conditions associated with disasters that threaten life or property and case congestion in the public telecommunications networks. -

International Standard Iso 20121:2012(E)

INTERNATIONAL ISO STANDARD 20121 First edition 2012-06-15 Event sustainability management systems — Requirements with guidance for use Systèmes de management responsable appliqués à l'activité événementielle — Exigences et recommandations de mise en œuvre Reference number ISO 20121:2012(E) Licensed to Mrs. Cavanna ISO Store order #: 10-1304363/Downloaded: 2012-11-20 Single user licence only, copying and networking prohibited © ISO 2012 ISO 20121:2012(E) COPYRIGHT PROTECTED DOCUMENT © ISO 2012 All rights reserved. Unless otherwise specified, no part of this publication may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying and microfilm, without permission in writing from either ISO at the address below or ISO's member body in the country of the requester. ISO copyright office Case postale 56 CH-1211 Geneva 20 Tel. + 41 22 749 01 11 Fax + 41 22 749 09 47 E-mail [email protected] Web www.iso.org Published in Switzerland Licensed to Mrs. Cavanna ISO Store order #: 10-1304363/Downloaded: 2012-11-20 Single user licence only, copying and networking prohibited ii © ISO 2012 – All rights reserved ISO 20121:2012(E) Contents Page Foreword ............................................................................................................................................................ iv Introduction ......................................................................................................................................................... v 1 Scope ..................................................................................................................................................... -

ISO 9001, Quality Management Systems — Requirements

Task Name [1] ISO 9001, Quality management systems — Requirements [2] ISO 9004, Quality management systems — Guidelines for performance improvements [3] ISO 10002, Quality management — Customer satisfaction — Guidelines for complaints handling in organ. [4] ISO 10005, Quality management systems — Guidelines for quality plans [5] ISO 10006, Quality management systems — Guidelines for quality management in projects [6] ISO 10007, Quality management systems — Guidelines for configuration management [7] ISO 10012, Measurement management sys — Requirements for measu. processes and measuring equipment [8] ISO/TR 10013, Guidelines for quality management system documentation [9] ISO/TR 10014, Guidelines for managing the economics of quality [10] ISO 10015, Quality management — Guidelines for training [11] ISO/TR 10017, Guidance on statistical techniques for ISO 9001:2000 [12] ISO 19011, Guidelines for quality and/or environmental management systems auditing [13] ISO/IEC Guide 62, General requirements for bodies operating assessment and certification/registration TSE ISO Guide 73 Risc Management SA 8000 Social Accountability Standard ISO 8402:1994, Quality management and quality assurance-Vocabulary. ISO 10011-1:1990, Guidelines for auditing quality system-Part 1: Auditing. ISO/IEC Guide 2:1996, Standardization and related activities-General vocabulary. ISO/IEC Guide 7:1994, Guidelines for drafting of standards suitable for use for conformity assessment. ISO/IEC Guide 23:1982, Methods of indicating conformity with standards for third-party certification systems. ISO/IEC Guide 25:1990, General requirements for the competence of calibration and testing laboratories. ISO/IEC Guide 27:1983, Guidelines for corrective action to be taken by a certification body in the event of misuse of its mark of conformity.