Boulder Lake

Total Pages: 16

File Type: PDF, Size: 1020 Kb

Recommended publications

A Dual Core Processor Has Two Cores (Essentially, Two CPUs on One Chip)

Faculty of Engineering & Information Technology, Al-Azhar University-Gaza. Dr. Mohammad Aqel. Microprocessors and Interfacing (ITCC 3301), Homework (1). Intel Core is a brand name used for various mid-range to high-end consumer and business microprocessors made by Intel. In general, processors sold as Core are more powerful variants of the same processors marketed as entry-level Celeron and Pentium. Similarly, identical or more capable versions of Core processors are also sold as Xeon processors for the server market. The current lineup of Core processors includes the latest Intel Core i7, Intel Core i5 and Intel Core i3, and the older Intel Core 2 Solo, Intel Core 2 Duo, Intel Core 2 Quad, and Intel Core 2 Extreme lines. 1. The original Core brand refers to Intel's 32-bit dual-core x86 CPUs. 2. Unlike the Intel Core, Intel Core 2 is a 64-bit processor, supporting Intel 64. The Core brand comprised two branches: the Duo (dual-core) and the Solo (a Duo with one core disabled). A dual-core processor has two cores (essentially, two CPUs on a single silicon die, that is, one integrated circuit). Core 2 Duo is a specific dual-core processor design. Thus, all Core 2 Duo CPUs are dual-core CPUs, but not all Intel dual-core CPUs are Core 2 Duo designs. Core 2 is a brand encompassing a range of Intel's consumer 64-bit x86-64 single-, dual-, and quad-core microprocessors based on the Core microarchitecture. The single- and dual-core models are single-die, whereas the quad-core models comprise two dies, each containing two cores, packaged in a multi-chip module.

Microcode Revision Guidance August 31, 2019 MCU Recommendations

Microcode Revision Guidance, August 31, 2019. MCU Recommendations.

Section 1 – Planned microcode updates
• Provides details on Intel microcode updates currently planned or available and corresponding to Intel-SA-00233 published June 18, 2019.
• Changes from prior revision(s) will be highlighted in yellow.

Section 2 – No planned microcode updates
• Products for which Intel does not plan to release microcode updates. This includes products previously identified as such.

LEGEND: Production Status:
• Planned – Intel is planning on releasing an MCU at a future date.
• Beta – Intel has released this production-signed MCU under NDA for all customers to validate.
• Production – Intel has completed all validation and is authorizing customers to use this MCU in a production environment.

ASRock G41C-VS Motherboard, a Reliable Motherboard Produced Under ASRock's Consistently Stringent Quality Control

G41C-VS User Manual. Version 1.0. Published October 2009. Copyright©2009 ASRock Inc. All rights reserved. Copyright Notice: No part of this manual may be reproduced, transcribed, transmitted, or translated in any language, in any form or by any means, except duplication of documentation by the purchaser for backup purpose, without written consent of ASRock Inc. Products and corporate names appearing in this manual may or may not be registered trademarks or copyrights of their respective companies, and are used only for identification or explanation and to the owners’ benefit, without intent to infringe. Disclaimer: Specifications and information contained in this manual are furnished for informational use only and subject to change without notice, and should not be construed as a commitment by ASRock. ASRock assumes no responsibility for any errors or omissions that may appear in this manual. With respect to the contents of this manual, ASRock does not provide warranty of any kind, either expressed or implied, including but not limited to the implied warranties or conditions of merchantability or fitness for a particular purpose. In no event shall ASRock, its directors, officers, employees, or agents be liable for any indirect, special, incidental, or consequential damages (including damages for loss of profits, loss of business, loss of data, interruption of business and the like), even if ASRock has been advised of the possibility of such damages arising from any defect or error in the manual or product. This device complies with Part 15 of the FCC Rules. Operation is subject to the following two conditions: (1) this device may not cause harmful interference, and (2) this device must accept any interference received, including interference that may cause undesired operation.

MBP4ASG41M-VS3.Pdf

ASRock G41M-VS3 (Motherboard Series): Overview & Specifications

■ Supports FSB1333/1066/800/533 MHz CPUs
■ Supports Dual Channel DDR3 1333(OC)
■ Intel® Graphics Media Accelerator X4500, Pixel Shader 4.0, DirectX 10, Max. shared memory 1759MB
■ EuP Ready
■ Supports ASRock XFast RAM, XFast LAN, XFast USB Technologies
■ Supports Instant Boot, Instant Flash, OC DNA, ASRock OC Tuner (Up to 158% CPU frequency increase)
■ Supports Intelligent Energy Saver (Up to 20% CPU Power Saving)
■ Free Bundle: CyberLink DVD Suite - OEM and Trial; Creative Sound Blaster X-Fi MB - Trial

This model may not be sold worldwide. Please contact your local dealer for the availability of this model in your region.

Product Specifications

General
- LGA 775 for Intel® Core™ 2 Extreme / Core™ 2 Quad / Core™ 2 Duo / Pentium® Dual Core / Celeron® Dual Core / Celeron, supporting Penryn Quad Core Yorkfield and Dual Core Wolfdale processors
- Supports FSB1333/1066/800/533 MHz CPU
- Supports Hyper-Threading Technology
- Supports Untied Overclocking Technology
- Supports EM64T CPU
- Northbridge: Intel® G41 Chipset
- Southbridge: Intel® ICH7

Memory
- Dual Channel DDR3 memory technology
- 2 x DDR3 DIMM slots
- Supports DDR3 1333(OC)/1066/800 non-ECC, un-buffered memory
- Max. capacity of system memory: 8GB*

*Due to the operating system limitation, the actual memory size available under a Windows® 32-bit OS may be less than 4GB, because part of the address space is reserved for system usage. For a Windows® 64-bit OS with a 64-bit CPU, there is no such limitation.

Toshiba Hardware Portfolio – Microsoft Support by Family

Date created: 6/17/2020 Toshiba Hardware Portfolio – Microsoft Support by Family Windows 10 Windows 10 Windows 10 Toshiba Windows 10 Toshiba IoT IoT Enterprise Intel Platform Machine Intel Processor Model IoT Enterprise Family Enterprise LTSC 2019 Type LTSB 2015 LTSB 2016 Core i3 9100TE 777 TCx™ 700 4900 Core i5 9500T 787 9th Gen Core Core i7 9700T 797 (Coffee Lake Not Supported Refresh) Core i3 9100TE 371 TCx™ 300 4810 Core i5 9500T 381 Core i7 9700T 391 8th Gen Core TCx™ 300 4810 361 Celeron 4900T (Coffee Lake) TCx™ 700 4900 767 107 Available now and Core i7 7600U 117 supported until 137 Not Supported Jan 9, 2029 105 Core i5 7300U 115 7th Gen Core 135 TCx™ 800 6200 Available (Kaby Lake) 103 now and Core i3 7100U 113 supported until 133 Oct 13, 2026 10C Celeron 3965U 11C 13C N/A 4750 AMD GX-218GL D10 SoC Family Basics Celeron J1900 4818 T10 (Bay Trail) Quad Not Supported 6th Gen Core 145 TCxWave™ 6140 Core i5 6300U (Skylake/Q170) 155 Date created: 6/17/2020 14C Celeron 3955U 15C SoC Family Celeron J1900 A3R (Bay Trail) Quad Core i5 4950S 786 Available Core i3 4330 C86 now and TCx™ 700 4900 Celeron G1820 supported until 746 Oct 14, 2025 4th Gen Core Celeron G1820TE (Haswell/Q87) C46 Core i5 4570TE 380 TCx™ 300 4810 Core i3 4330TE 370 Celeron G1820TE 360 3rd Gen Core + TCxWave ™ 6140 Core i3 3217UE 120 2nd Gen PCH SurePOS (IvyBridge CPU + 4852 Core i5 3550S 580 CougarPoint PCH) 500 Toshiba Toshiba Limited TCxWave ™ 6140 Celeron 847E 100 Limited Support SurePOS Celeron G540 785 Support 2nd Gen Core 4900 700 Core i3 2120 C85 (SandyBridge/Q67) -

Class-Action Lawsuit

Case 3:20-cv-00863-SI Document 1 Filed 05/29/20 Page 1 of 279

Steve D. Larson, OSB No. 863540, Email: [email protected]; Jennifer S. Wagner, OSB No. 024470, Email: [email protected]. STOLL STOLL BERNE LOKTING & SHLACHTER P.C., 209 SW Oak Street, Suite 500, Portland, Oregon 97204. Telephone: (503) 227-1600. Attorneys for Plaintiffs. [Additional Counsel Listed on Signature Page.]

UNITED STATES DISTRICT COURT, DISTRICT OF OREGON, PORTLAND DIVISION

BLUE PEAK HOSTING, LLC, PAMELA GREEN, TITI RICAFORT, MARGARITE SIMPSON, and MICHAEL NELSON, on behalf of themselves and all others similarly situated, Plaintiffs, v. INTEL CORPORATION, a Delaware corporation, Defendant.

Case No.

CLASS ACTION ALLEGATION COMPLAINT

DEMAND FOR JURY TRIAL

Plaintiffs Blue Peak Hosting, LLC, Pamela Green, Titi Ricafort, Margarite Sampson, and Michael Nelson, individually and on behalf of the members of the Class defined below, allege the following against Defendant Intel Corporation (“Intel” or “the Company”), based upon personal knowledge with respect to themselves and on information and belief derived from, among other things, the investigation of counsel and review of public documents as to all other matters.

INTRODUCTION

1. Despite Intel’s intentional concealment of specific design choices that it long knew rendered its central processing units (“CPUs” or “processors”) unsecure, it was only in January 2018 that it was first revealed to the public that Intel’s CPUs have significant security vulnerabilities that gave unauthorized program instructions access to protected data.

2. A CPU is the “brain” in every computer and mobile device and processes all of the essential applications, including the handling of confidential information such as passwords and encryption keys.

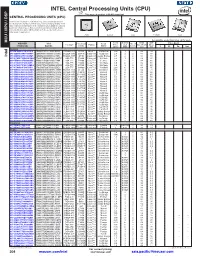

INTEL Central Processing Units (CPU) This Page of Product Is RoHS Compliant

INTEL Central Processing Units (CPU). This page of product is RoHS compliant.

CENTRAL PROCESSING UNITS (CPU)

Intel Processor families include the most powerful and flexible Central Processing Units (CPUs) available today. Utilizing industry leading 22nm device fabrication techniques, Intel continues to pack greater processing power into smaller spaces than ever before, providing desktop, mobile, and embedded products with maximum performance per watt across a wide range of applications.

Families: Atom, Celeron, Core, Pentium, Xeon. For quantities greater than listed, call for quote.

Desktop

| Mouser Stock No. | Intel Part No. | Package | Processor Series | Family | Core Code Name | Freq. (GHz) | Cache Size (MB) | No. of Cores | Data Bus Width (bit) | TDP (Max) (W) |
|---|---|---|---|---|---|---|---|---|---|---|
| 607-DF8064101211300Y | DF8064101211300S R0VY | FCBGA-559 | D2550 | Atom™ | Cedarview | 1.86 | 1 | 2 | 64 | 10 |
| 607-CM8063701444901S | CM8063701444901S R10K | FCLGA-1155 | G1610 | Celeron® | Ivy Bridge | 2.6 | 2 | 2 | 64 | 55 |
| 607-CM8062301046804S | CM8062301046804S R05J | FCLGA-1155 | G540 | Celeron® | Sandy Bridge | 2.5 | 2 | 2 | 64 | 65 |
| 607-AT80571RG0641MLS | AT80571RG0641MLS LGTZ | LGA-775 | E3400 | Celeron® | Wolfdale | 2.6 | 1 | 2 | 64 | 65 |
| 607-HH80557PG0332MS | HH80557PG0332MS LA99 | LGA-775 | E4300 | Core™ 2 | Conroe | 1.8 | 2 | 2 | 64 | 65 |
| 607-AT80570PJ0806MS | AT80570PJ0806MS LB9J | LGA-775 | E8400 | Core™ 2 | Wolfdale | 3.0 | 6 | 2 | 64 | 65 |
| 607-AT80571PH0723MLS | AT80571PH0723MLS LGW3 | LGA-775 | E7400 | Core™ 2 | Wolfdale | 2.8 | 3 | 2 | 64 | 65 |
| 607-AT80580PJ0676MS | AT80580PJ0676MS LB6B | LGA-775 | Q9400 | Core™ 2 | Yorkfield | 2.66 | 6 | 4 | 64 | 95 |
| 607-CM80616003060AES | CM80616003060AES LBTD | FCLGA-1156 | | | | | | | | |

Multiprocessing Contents

Multiprocessing: Contents

1 Multiprocessing
  1.1 Pre-history
  1.2 Key topics
    1.2.1 Processor symmetry
    1.2.2 Instruction and data streams
    1.2.3 Processor coupling
    1.2.4 Multiprocessor Communication Architecture
  1.3 Flynn’s taxonomy
    1.3.1 SISD multiprocessing
    1.3.2 SIMD multiprocessing
    1.3.3 MISD multiprocessing
    1.3.4 MIMD multiprocessing
  1.4 See also
  1.5 References
2 Computer multitasking
  2.1 Multiprogramming
  2.2 Cooperative multitasking
  2.3 Preemptive multitasking
  2.4 Real time
  2.5 Multithreading
  2.6 Memory protection
  2.7 Memory swapping
  2.8 Programming
  2.9 See also
  2.10 References

CISC Processor - Intel x86

Architecture of Computers and Parallel Systems, Part 4: Intel x86 History. Ing. Petr Olivka, [email protected], Department of Computer Science, FEI VSB-TUO.

CISC Processor - Intel x86

This chapter introduces the evolution of CISC processors. We will illustrate the history using one typical processor, because comparing several processors at once would not be clear for readers. Selecting one typical processor is complicated, however, by the variety of products and manufacturers over the past 30 years. We have decided to describe in this presentation one of the best-known and longest mass-produced processor lines in existence. We definitely do not want to say that it is the best technology or that these are the processors with the highest performance! The Intel x86 processors are the selected product line.

Intel 8080 (Year-Technology-Transistors-Frequency-Data bus-Address bus) Y: 1974 T: NMOS 6μm Tr: 6000 F: 2MHz D: 8b A: 16b

This 8-bit processor is not strictly the first member of the x86 series, but it cannot be skipped. It is one of the first commercially successful microprocessors. This microprocessor became the basis for a number of the first single-board computers, and its instruction set inspired other manufacturers to develop 8-bit processors.
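As a small illustration of what the address-bus figure in the 8080 spec line implies, the sketch below computes how many bytes a processor can address directly from its address-bus width. The function name `addressable_bytes` is hypothetical and chosen only for this example, and the calculation assumes one byte per address, which holds for the byte-addressed 8080.

```python
def addressable_bytes(address_bus_bits: int) -> int:
    """Return the number of distinct byte addresses for a given bus width.

    Assumes a byte-addressed memory: each address selects one byte.
    """
    if address_bus_bits < 0:
        raise ValueError("address bus width must be non-negative")
    # Each extra address line doubles the number of reachable addresses.
    return 1 << address_bus_bits


# Intel 8080: 16-bit address bus, taken from the spec line in the excerpt.
i8080_bytes = addressable_bytes(16)
print(i8080_bytes, "bytes =", i8080_bytes // 1024, "KiB")
```

For the 8080's 16 address lines this gives 65536 bytes, that is, 64 KiB of directly addressable memory.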

Intel® Celeron® Processor 900 Series and Ultra Low Voltage 700 Series

Intel® Celeron® Processor 900 Series and Ultra Low Voltage 700 Series Datasheet For platforms based on Mobile Intel® 4 Series Chipset family February 2010 Document Number: 320389-003 INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. UNLESS OTHERWISE AGREED IN WRITING BY INTEL, THE INTEL PRODUCTS ARE NOT DESIGNED NOR INTENDED FOR ANY APPLICATION IN WHICH THE FAILURE OF THE INTEL PRODUCT COULD CREATE A SITUATION WHERE PERSONAL INJURY OR DEATH MAY OCCUR. Intel may make changes to specifications and product descriptions at any time, without notice. Designers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined.” Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them. The information here is subject to change without notice. Do not finalize a design with this information. The products described in this document may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order. -

HC19.21.810.45nm Next Generation Intel® Core™ Microarchitecture

45nm Next Generation Intel® Core™ Microarchitecture (Penryn) HOT CHIPS 2007 Varghese George Principal Engineer, Intel Corp Legal Disclaimer Today’s presentation may contain forward-looking statements. All statements made that are not historical facts are subject to a number of risks and uncertainties, and actual results may differ materially. Please refer to our most recent Earnings Release and our most recent Form 10-Q or 10-K filing available on our website for more information on the risk factors that could cause actual results to differ. INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL’S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. Intel products are not intended for use in medical, life saving, or life sustaining applications. Intel may make changes to specifications and product descriptions at any time, without notice. Designers must not rely on the absence or characteristics of any features or instructions marked "reserved" or "undefined." Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them. The Intel® Core™ Microarchitecture, Intel® Pentium, Intel® Pentium II, Intel® Pentium III, Intel® Pentium 4, Intel® Pentium Pro, Intel® Pentium D, Intel® Pentium M , Itanium®, Xeon® may contain design defects or errors known as errata which may cause the product to deviate from published specifications. -

The Intel x86 Microarchitectures Map Version 2.0

The Intel x86 Microarchitectures Map Version 2.0 P6 (1995, 0.50 to 0.35 μm) 8086 (1978, 3 µm) 80386 (1985, 1.5 to 1 µm) P5 (1993, 0.80 to 0.35 μm) NetBurst (2000 , 180 to 130 nm) Skylake (2015, 14 nm) Alternative Names: i686 Series: Alternative Names: iAPX 386, 386, i386 Alternative Names: Pentium, 80586, 586, i586 Alternative Names: Pentium 4, Pentium IV, P4 Alternative Names: SKL (Desktop and Mobile), SKX (Server) Series: Pentium Pro (used in desktops and servers) • 16-bit data bus: 8086 (iAPX Series: Series: Series: Series: • Variant: Klamath (1997, 0.35 μm) 86) • Desktop/Server: i386DX Desktop/Server: P5, P54C • Desktop: Willamette (180 nm) • Desktop: Desktop 6th Generation Core i5 (Skylake-S and Skylake-H) • Alternative Names: Pentium II, PII • 8-bit data bus: 8088 (iAPX • Desktop lower-performance: i386SX Desktop/Server higher-performance: P54CQS, P54CS • Desktop higher-performance: Northwood Pentium 4 (130 nm), Northwood B Pentium 4 HT (130 nm), • Desktop higher-performance: Desktop 6th Generation Core i7 (Skylake-S and Skylake-H), Desktop 7th Generation Core i7 X (Skylake-X), • Series: Klamath (used in desktops) 88) • Mobile: i386SL, 80376, i386EX, Mobile: P54C, P54LM Northwood C Pentium 4 HT (130 nm), Gallatin (Pentium 4 Extreme Edition 130 nm) Desktop 7th Generation Core i9 X (Skylake-X), Desktop 9th Generation Core i7 X (Skylake-X), Desktop 9th Generation Core i9 X (Skylake-X) • Variant: Deschutes (1998, 0.25 to 0.18 μm) i386CXSA, i386SXSA, i386CXSB Compatibility: Pentium OverDrive • Desktop lower-performance: Willamette-128