A Model for Deliberation, Action, and Introspection

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Logging Songs of the Pacific Northwest: a Study of Three Contemporary Artists Leslie A

Florida State University Libraries Electronic Theses, Treatises and Dissertations The Graduate School 2007 Logging Songs of the Pacific Northwest: A Study of Three Contemporary Artists Leslie A. Johnson Follow this and additional works at the FSU Digital Library. For more information, please contact [email protected] THE FLORIDA STATE UNIVERSITY COLLEGE OF MUSIC LOGGING SONGS OF THE PACIFIC NORTHWEST: A STUDY OF THREE CONTEMPORARY ARTISTS By LESLIE A. JOHNSON A Thesis submitted to the College of Music in partial fulfillment of the requirements for the degree of Master of Music Degree Awarded: Spring Semester, 2007 The members of the Committee approve the Thesis of Leslie A. Johnson defended on March 28, 2007. Charles E. Brewer, Professor Directing Thesis; Denise Von Glahn, Committee Member; Karyl Louwenaar-Lueck, Committee Member. The Office of Graduate Studies has verified and approved the above named committee members. ACKNOWLEDGEMENTS I would like to thank those who have helped me with this manuscript and my academic career: my parents, grandparents, other family members and friends for their support; a handful of really good teachers from every educational and professional venture thus far, including my committee members at The Florida State University; a variety of resources for the project, including Dr. Jens Lund from Olympia, Washington; and the subjects themselves and their associates. TABLE OF CONTENTS ABSTRACT -

Flowers for Algernon.Pdf

SHORT STORY Flowers for Algernon by Daniel Keyes When is knowledge power? When is ignorance bliss? QuickWrite Why might a person hesitate to tell a friend something upsetting? Write down your thoughts. 52 Unit 1 • Collection 1 SKILLS FOCUS Literary Skills Understand subplots and parallel episodes. Reading Skills Track story events. Reader/Writer Notebook Use your RWN to complete the activities for this selection. Subplots and Parallel Episodes A long short story, like the one that follows, sometimes has a complex plot, a plot that consists of intertwined stories. A complex plot may include • subplots: less important plots that are part of the larger story • parallel episodes: deliberately repeated plot events As you read “Flowers for Algernon,” watch for new settings, characters, or conflicts that are introduced into the story. These may signal that a subplot is beginning. To identify parallel episodes, take note of similar situations or events that occur in the story. Literary Perspectives Apply the literary perspective described on page 55 as you read this story. Vocabulary misled (mihs LEHD) v.: fooled; led to believe something wrong. Joe and Frank misled Charlie into believing they were his friends. regression (rih GREHSH uhn) n.: return to an earlier or less advanced condition. After its regression, the mouse could no longer find its way through a maze. obscure (uhb SKYOOR) v.: hide. He wanted to obscure the fact that he was losing his intelligence. deterioration (dih tihr ee uh RAY shuhn) n. used as an adj: worsening; declining. Charlie could predict mental deterioration syndromes by using his formula. -

Ep #375: Do Hard Things Full Episode Transcript Brooke Castillo

Ep #375: Do Hard Things Full Episode Transcript With Your Host Brooke Castillo The Life Coach School Podcast with Brooke Castillo Ep #375: Do Hard Things You are listening to The Life Coach School Podcast with Brooke Castillo, episode number 375. Welcome to The Life Coach School Podcast, where it’s all about real clients, real problems and real coaching. And now your host, Master Coach Instructor, Brooke Castillo. Hello, my friends. Okay, I might have a little rant. I might have a little talking-to today. You telling me that things are hard doesn’t mean anything. What does it mean that things are hard? It’s an irrelevant thing to say. People say things are hard to me as if that’s a reason not to do them. That is the reason to do them. Hard things are what make us stronger. We can’t get stronger if we don’t do hard things. So, if you come to me and you say, “But it’s so hard...” I don’t know what you mean. It’s like when people say to me, “But it’s so expensive.” Of course, it’s expensive. Of course, I charge a lot for what I do. It’s so valuable. Of course, it’s hard. It’s going to make you into who you want to be. You can’t become who you want to be on easy street. You can’t make things easy and then expect yourself to grow from doing easy things. You don’t want people to go, “Oh, she always takes the easy road.” When you hear someone talk about someone else like that, it’s not impressive, my friends. -

Song & Music in the Movement

Transcript: Song & Music in the Movement A Conversation with Candie Carawan, Charles Cobb, Bettie Mae Fikes, Worth Long, Charles Neblett, and Hollis Watkins, September 19 – 20, 2017. Tuesday, September 19, 2017 Song_2017.09.19_01TASCAM Charlie Cobb: [00:41] So the recorders are on and the levels are okay. Okay. This is a fairly simple process here and informal. What I want to get, as you all know, is conversation about music and the Movement. And what I'm going to do—I'm not giving elaborate introductions. I'm going to go around the table and name who's here for the record, for the recorded record. Beyond that, I will depend on each one of you in your first, in this first round of comments to introduce yourselves however you wish. To the extent that I feel it necessary, I will prod you if I feel you've left something out that I think is important, which is one of the prerogatives of the moderator. [Laughs] Other than that, it's pretty loose going around the table—and this will be the order in which we'll also speak—Chuck Neblett, Hollis Watkins, Worth Long, Candie Carawan, Bettie Mae Fikes. I could say things like, from Carbondale, Illinois and Mississippi and Worth Long: Atlanta. Cobb: Durham, North Carolina. Tennessee and Alabama, I'm not gonna do all of that. You all can give whatever geographical description of yourself within the context of discussing the music. What I do want in this first round is, since all of you are important voices in terms of music and culture in the Movement—to talk about how you made your way to the Freedom Singers and freedom singing. -

The Nature of Mid-Life Introspection with the Goal of Understanding the Fullness, Or Essence, of the Experience

Copyright 2014, Lee DeRemer Abstract I explore the nature of mid-life introspection with the goal of understanding the fullness, or essence, of the experience. I use research from interviews with nine people who have had deeply personal, highly intentional mid-life introspection experiences. In these interviews I seek to appreciate the motivation of the individuals, the dynamics of the experience, the role of others, lessons learned, and the impact on interviewees’ approach to questions of meaning and purpose. I also interview the spouses of six of the participants to obtain additional perspective on each introspection experience. I employ a transcendental phenomenological methodology to discover whether any aspects of the mid-life introspection experience are common, regardless of differences in world view, background, gender, occupation, education level, or socioeconomic status. By learning the stories of each of these mid-life introspection experiences, I discover the nature of mid-life introspection and develop the following definition of mid-life introspection: a deeply personal quest for a fresh understanding of one’s identity, purpose, values, goals, and life direction, influenced but not necessarily constrained by one’s life history and present circumstances. This research will enable the reader to conclude, “I understand the nature of mid-life introspection and what to expect from a mid-life introspection experience.” The research also reveals a framework that depicts the mid-life introspection experience graphically and a collection of questions that can guide one’s own mid-life introspection. iii Dedication This paper is dedicated to three people. I am who I am because of them, and I am forever grateful. -

Holiness: Why Is It So Hard?

HOLINESS: WHY IS IT SO HARD? The movie, A League of Their Own, presents a Hollywood version of the All-American Girls Professional Baseball League during WWII. In one dramatic scene Dottie Hinson, the best player (portrayed by Geena Davis) is torn between playing in their first World Series and returning home to Oregon with her wounded veteran husband. Her priority was family and she recognized that baseball is “only a game.” When challenged by her manager Jimmy Dugan (played by Tom Hanks) she lamented, “It just got too hard.” Dugan retorted: “It’s supposed to be hard. If it wasn’t hard everyone would do it. It’s the hard that makes it great.” The “hard” in baseball comes from the unique skill it takes to hit major league pitching or to pitch successfully at the major league level. My friend Walt was a terrific third baseman who played triple-A ball in the Boston Red Sox system. During one spring training, he was brought up to the big team and went into the batter’s box to hit under the watchful eye of his idol, Ted Williams. Afterwards, he approached Ted and asked, “What do you think Mr. Williams?” Ted shook his head and replied, “Slow bat, kid, slow bat.” Walt realized that his dream of playing major league baseball was over. As good as he played the game, Walt simply lacked the extraordinary hand-eye coordination needed to hit major league pitching. So, is the “hard” of holiness similar in that it is the discovery that very few of us are spiritual prodigies like the canonized saints? The answer is a resounding, “NO!” In the first place, there are no spiritual prodigies who have the innate exceptional skills they can develop into major league holiness. -

Exploring the Dual Language Program Implementation And

A TALE OF TWO CITIES: EXPLORING THE DUAL LANGUAGE PROGRAM IMPLEMENTATION AND BILITERACY TRAJECTORIES AT TWO SCHOOLS Alexandra Babino Dissertation Prepared for the Degree of DOCTOR OF PHILOSOPHY UNIVERSITY OF NORTH TEXAS August 2015 APPROVED: Carol Wickstrom, Major Professor Sadaf Munshi, Committee Member Ricardo González-Carriedo, Committee Member Endia Lindo, Committee Member Jim Laney, Chair of the Department of Teacher Education and Administration Jerry Thomas, Dean of the College of Education Costas Tsatsoulis, Interim Dean of the Toulouse Graduate School Babino, Alexandra. A Tale of Two Cities: Exploring the Dual Language Program Implementation and Biliteracy Trajectories at Two Schools. Doctor of Philosophy (Language and Literacy Studies), August 2015, 229 pp., 38 tables, 21 figures, 245 numbered references. With the increase in emergent bilinguals and higher standards for all, the challenge for educational stakeholders is to fully utilize dual language programs as a prominent means toward meeting and surpassing rigorous state and national standards. Part of maximizing dual language programs’ impact, and the purpose of this study, was to provide detailed analyses of program models and student biliteracy development. Thus, the research questions sought to explore the level of understanding and implementation of dual language programs in general and the biliteracy component in particular at each campus, before documenting the second through fifth grade English and Spanish reading biliteracy trajectories of students at each school. Both campuses experienced more challenges in the implementation of the program structure, staff quality, and professional development rather than in curriculum and instruction. Furthermore, although both campuses’ students experienced positive trajectories towards biliteracy by the end of fifth grade, each campus was characterized by different rates and correlation between English and Spanish reading growth in each grade. -

Thanksgiving Point Dance Championships Director’S Packet

Thanksgiving Point Dance Championships Director’s packet Website: www.americaonstage.org | e-mail: [email protected] | Phone (801) 224-8334 We are SO HAPPY to have you and your dancers dancing with us. Finally, back on stage!!!! This year we are PACKED. SOLD OUT COMPLETELY! THREE COMPLETELY FULL DAYS. It's going to be awesome! Directors! We are so excited to get you and your dancers back on stage. A few notes: Our first priority is to your dancers and to you. Getting you all back on stage. Safely, happily, and with the best experience we can give everyone. With that in mind, we have several things to do to make sure we can get everyone on stage, including spaced out studio blocks, awards done in studio times, spread out schedules, mask requirements when not on stage, and limits on the number of people who can gather inside facilities as well as audience limitations. We appreciate your understanding in all these things. Doing them means we get to dance on stage. And that's the most important thing to us all. Now, let's get ready to rock those stages. COVID - 19 Adjustments We're going to dance! We're going to DANCE!!! We're so excited! After the year of 2020 in which so many things prevented us from dancing, we're going to make it work. And we're going to do all we can to make it safe, awesome, and working for everyone. Here's how: STUDIO BLOCKS To make sure each studio dances in as safe an environment as possible, we are going to dance in STUDIO BLOCKS. -

Why Was Japan Hit So Hard by the Global Financial Crisis?

ADBI Working Paper Series Why was Japan Hit So Hard by the Global Financial Crisis? Masahiro Kawai and Shinji Takagi No. 153 October 2009 Asian Development Bank Institute ADBI Working Paper 153 Kawai and Takagi Masahiro Kawai is the dean of the Asian Development Bank Institute. Shinji Takagi is a professor, Graduate School of Economics, Osaka University, Osaka, Japan. This is a revised version of the paper presented at the Samuel Hsieh Memorial Conference, hosted by the Chung-Hua Institution for Economic Research, Taipei,China 9–10 July 2009. The authors are thankful to Ainslie Smith for her editorial work. The views expressed in this paper are the views of the authors and do not necessarily reflect the views or policies of ADBI, the Asian Development Bank (ADB), its Board of Directors, or the governments they represent. ADBI does not guarantee the accuracy of the data included in this paper and accepts no responsibility for any consequences of their use. Terminology used may not necessarily be consistent with ADB official terms. The Working Paper series is a continuation of the formerly named Discussion Paper series; the numbering of the papers continued without interruption or change. ADBI’s working papers reflect initial ideas on a topic and are posted online for discussion. ADBI encourages readers to post their comments on the main page for each working paper (given in the citation below). Some working papers may develop into other forms of publication. Suggested citation: Kawai, M., and S. Takagi. 2009. Why was Japan Hit So Hard by the Global Financial Crisis? ADBI Working Paper 153. -

Summer Camp Song Book

Summer Camp Song Book 05-209-03/2017 TABLE OF CONTENTS Numbers: 3 Short Neck Buzzards (1); 18 Wheels (2). A: A Ram Sam Sam (2); Ah Ta Ka Ta Nu Va (3); Alive, Alert, Awake (3); All You Et-A (3); Alligator is My Friend (4); Aloutte (5); Aouettesky (5); Animal Fair (6); Annabelle (6); Ants Go Marching (6); Around the World (7); Auntie Monica (8); Austrian Went Yodeling -

Why Is It So Hard to Change? By: Rev

Full Circle Where Behavioral Health and Spirituality Meet April 2014 Vol. 11, Issue 1 Full Circle is a publication of Penn Foundation’s Pastoral Services Department. In This Issue: Why Is It So Hard to Change (pp. 1, 2, 4); The Spirituality of Change (pp. 1, 3, 5); 10 Quotes About Change (p. 4); Making Lasting Changes (p. 6). Why Is It So Hard To Change? By: Rev. Carl Yusavitz, Director of Pastoral Services “I do not understand my own behavior; I do not act as I mean to, but do the things that I hate. Though the will to do what is good is in me, the power to do it is not; the good thing I want to do, I never do; the evil thing which I do not want – that is what I do.” (Paul’s Letter to the Romans 7: 14-24, paraphrased by Gerald May, author of Addiction and Grace) Although St. Paul is referring to sin here, I sense that what is underneath his frustration is something we can all relate to - the difficulty of changing our behavior. Why is change so hard? It seems like we want it and fight it at the same time. Think for a moment of something you would like to change, but just can’t. The Spirituality of Change I sit in my chair preparing a class on the Spirituality of Change, and my mind wanders to the signatures in the back of my high school yearbook. It’s signed by teenagers who often assume the entire world locates itself within the halls of their own high school frozen in time and space. -

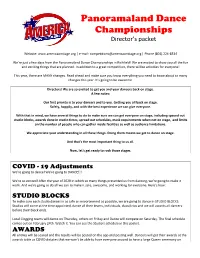

Director's Packets Please Pick up Your Director's Packet at the Registration Table Once at the Event

Panoramaland Dance Championships Director’s packet Website: www.americaonstage.org | e-mail: [email protected] | Phone (801) 224-8334 We're just a few days from the Panoramaland Dance Championships in Richfield! We are excited to show you all the fun and exciting things that are planned. In addition to a great competition, there will be activities for everyone! This year, there are MANY changes. Read ahead and make sure you know everything you need to know about so many changes this year. It’s going to be awesome. Directors! We are so excited to get you and your dancers back on stage. A few notes: Our first priority is to your dancers and to you. Getting you all back on stage. Safely, happily, and with the best experience we can give everyone. With that in mind, we have several things to do to make sure we can get everyone on stage, including spaced out studio blocks, awards done in studio times, spread out schedules, mask requirements when not on stage, and limits on the number of people who can gather inside facilities as well as audience limitations. We appreciate your understanding in all these things. Doing them means we get to dance on stage. And that's the most important thing to us all. Now, let's get ready to rock those stages. COVID - 19 Adjustments We're going to dance! We're going to DANCE!!! We're so excited! After the year of 2020 in which so many things prevented us from dancing, we're going to make it work.