Numerical Methods in Finance

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Trinomial Tree

Trinomial Tree • Set up a trinomial approximation to the geometric Brownian motion dS=S = r dt + σ dW .a • The three stock prices at time ∆t are S, Su, and Sd, where ud = 1. • Impose the matching of mean and that of variance: 1 = pu + pm + pd; SM = (puu + pm + (pd=u)) S; 2 2 2 2 S V = pu(Su − SM) + pm(S − SM) + pd(Sd − SM) : aBoyle (1988). ⃝c 2013 Prof. Yuh-Dauh Lyuu, National Taiwan University Page 599 • Above, M ≡ er∆t; 2 V ≡ M 2(eσ ∆t − 1); by Eqs. (21) on p. 154. ⃝c 2013 Prof. Yuh-Dauh Lyuu, National Taiwan University Page 600 * - pu* Su * * pm- - j- S S * pd j j j- Sd - j ∆t ⃝c 2013 Prof. Yuh-Dauh Lyuu, National Taiwan University Page 601 Trinomial Tree (concluded) • Use linear algebra to verify that ( ) u V + M 2 − M − (M − 1) p = ; u (u − 1) (u2 − 1) ( ) u2 V + M 2 − M − u3(M − 1) p = : d (u − 1) (u2 − 1) { In practice, we must also make sure the probabilities lie between 0 and 1. • Countless variations. ⃝c 2013 Prof. Yuh-Dauh Lyuu, National Taiwan University Page 602 A Trinomial Tree p • Use u = eλσ ∆t, where λ ≥ 1 is a tunable parameter. • Then ( ) p r + σ2 ∆t ! 1 pu 2 + ; 2λ ( 2λσ) p 1 r − 2σ2 ∆t p ! − : d 2λ2 2λσ p • A nice choice for λ is π=2 .a aOmberg (1988). ⃝c 2013 Prof. Yuh-Dauh Lyuu, National Taiwan University Page 603 Barrier Options Revisited • BOPM introduces a specification error by replacing the barrier with a nonidentical effective barrier. -

Here We Go Again

2015, 2016 MDDC News Organization of the Year! Celebrating 161 years of service! Vol. 163, No. 3 • 50¢ SINCE 1855 July 13 - July 19, 2017 TODAY’S GAS Here we go again . PRICE Rockville political differences rise to the surface in routine commission appointment $2.28 per gallon Last Week pointee to the City’s Historic District the three members of “Team him from serving on the HDC. $2.26 per gallon By Neal Earley @neal_earley Commission turned into a heated de- Rockville,” a Rockville political- The HDC is responsible for re- bate highlighting the City’s main po- block made up of Council members viewing applications for modification A month ago ROCKVILLE – In most jurisdic- litical division. Julie Palakovich Carr, Mark Pierzcha- to the exteriors of historic buildings, $2.36 per gallon tions, board and commission appoint- Mayor Bridget Donnell Newton la and Virginia Onley who ran on the as well as recommending boundaries A year ago ments are usually toward the bottom called the City Council’s rejection of same platform with mayoral candi- for the City’s historic districts. If ap- $2.28 per gallon of the list in terms of public interest her pick for Historic District Commis- date Sima Osdoby and city council proved Giammo, would have re- and controversy -- but not in sion – former three-term Rockville candidate Clark Reed. placed Matthew Goguen, whose term AVERAGE PRICE PER GALLON OF Rockville. UNLEADED REGULAR GAS IN Mayor Larry Giammo – political. While Onley and Palakovich expired in May. MARYLAND/D.C. METRO AREA For many municipalities, may- “I find it absolutely disappoint- Carr said they opposed Giammo’s ap- Giammo previously endorsed ACCORDING TO AAA oral appointments are a formality of- ing that politics has entered into the pointment based on his lack of qualifi- Newton in her campaign against ten given rubberstamped approval by boards and commission nomination cations, Giammo said it was his polit- INSIDE the city council, but in Rockville what process once again,” Newton said. -

A Fuzzy Real Option Model for Pricing Grid Compute Resources

A Fuzzy Real Option Model for Pricing Grid Compute Resources by David Allenotor A Thesis submitted to the Faculty of Graduate Studies of The University of Manitoba in partial fulfillment of the requirements of the degree of DOCTOR OF PHILOSOPHY Department of Computer Science University of Manitoba Winnipeg Copyright ⃝c 2010 by David Allenotor Abstract Many of the grid compute resources (CPU cycles, network bandwidths, computing power, processor times, and software) exist as non-storable commodities, which we call grid compute commodities (gcc) and are distributed geographically across organizations. These organizations have dissimilar resource compositions and usage policies, which makes pricing grid resources and guaranteeing their availability a challenge. Several initiatives (Globus, Legion, Nimrod/G) have developed various frameworks for grid resource management. However, there has been a very little effort in pricing the resources. In this thesis, we propose financial option based model for pricing grid resources by devising three research threads: pricing the gcc as a problem of real option, modeling gcc spot price using a discrete time approach, and addressing uncertainty constraints in the provision of Quality of Service (QoS) using fuzzy logic. We used GridSim, a simulation tool for resource usage in a Grid to experiment and test our model. To further consolidate our model and validate our results, we analyzed usage traces from six real grids from across the world for which we priced a set of resources. We designed a Price Variant Function (PVF) in our model, which is a fuzzy value and its application attracts more patronage to a grid that has more resources to offer and also redirect patronage from a grid that is very busy to another grid. -

Local Volatility Modelling

LOCAL VOLATILITY MODELLING Roel van der Kamp July 13, 2009 A DISSERTATION SUBMITTED FOR THE DEGREE OF Master of Science in Applied Mathematics (Financial Engineering) I have to understand the world, you see. - Richard Philips Feynman Foreword This report serves as a dissertation for the completion of the Master programme in Applied Math- ematics (Financial Engineering) from the University of Twente. The project was devised from the collaboration of the University of Twente with Saen Options BV (during the course of the project Saen Options BV was integrated into AllOptions BV) at whose facilities the project was performed over a period of six months. This research project could not have been performed without the help of others. Most notably I would like to extend my gratitude towards my supervisors: Michel Vellekoop of the University of Twente, Julien Gosme of AllOptions BV and Fran¸coisMyburg of AllOptions BV. They provided me with the theoretical and practical knowledge necessary to perform this research. Their constant guidance, involvement and availability were an essential part of this project. My thanks goes out to Irakli Khomasuridze, who worked beside me for six months on his own project for the same degree. The many discussions I had with him greatly facilitated my progress and made the whole experience much more enjoyable. Finally I would like to thank AllOptions and their staff for making use of their facilities, getting access to their data and assisting me with all practical issues. RvdK Abstract Many different models exist that describe the behaviour of stock prices and are used to value op- tions on such an underlying asset. -

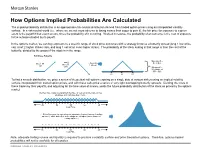

How Options Implied Probabilities Are Calculated

No content left No content right of this line of this line How Options Implied Probabilities Are Calculated The implied probability distribution is an approximate risk-neutral distribution derived from traded option prices using an interpolated volatility surface. In a risk-neutral world (i.e., where we are not more adverse to losing money than eager to gain it), the fair price for exposure to a given event is the payoff if that event occurs, times the probability of it occurring. Worked in reverse, the probability of an outcome is the cost of exposure Place content to the outcome divided by its payoff. Place content below this line below this line In the options market, we can buy exposure to a specific range of stock price outcomes with a strategy know as a butterfly spread (long 1 low strike call, short 2 higher strikes calls, and long 1 call at an even higher strike). The probability of the stock ending in that range is then the cost of the butterfly, divided by the payout if the stock is in the range. Building a Butterfly: Max payoff = …add 2 …then Buy $5 at $55 Buy 1 50 short 55 call 1 60 call calls Min payoff = $0 outside of $50 - $60 50 55 60 To find a smooth distribution, we price a series of theoretical call options expiring on a single date at various strikes using an implied volatility surface interpolated from traded option prices, and with these calls price a series of very tight overlapping butterfly spreads. Dividing the costs of these trades by their payoffs, and adjusting for the time value of money, yields the future probability distribution of the stock as priced by the options market. -

Pricing Options Using Trinomial Trees

Pricing Options Using Trinomial Trees Paul Clifford Yan Wang Oleg Zaboronski 30.12.2009 1 Introduction One of the first computational models used in the financial mathematics community was the binomial tree model. This model was popular for some time but in the last 15 years has become significantly outdated and is of little practical use. However it is still one of the first models students traditionally were taught. A more advanced model used for the project this semester, is the trinomial tree model. This improves upon the binomial model by allowing a stock price to move up, down or stay the same with certain probabilities, as shown in the diagram below. 2 Project description. The aim of the project is to apply the trinomial tree to the following problems: ² Pricing various European and American options ² Pricing barrier options ² Calculating the greeks More precisely, the students are asked to do the following: 1. Study the trinomial tree and its parameters, pu; pd; pm; u; d 2. Study the method to build the trinomial tree of share prices 3. Study the backward induction algorithms for option pricing on trees 4. Price various options such as European, American and barrier 1 5. Calculate the greeks using the tree Each of these topics will be explained very clearly in the following sections. Students are encouraged to ask questions during the lab sessions about certain terminology that they do not understand such as barrier options, hedging greeks and things of this nature. Answers to questions listed below should contain analysis of numerical results produced by the simulation. -

Tax Treatment of Derivatives

United States Viva Hammer* Tax Treatment of Derivatives 1. Introduction instruments, as well as principles of general applicability. Often, the nature of the derivative instrument will dictate The US federal income taxation of derivative instruments whether it is taxed as a capital asset or an ordinary asset is determined under numerous tax rules set forth in the US (see discussion of section 1256 contracts, below). In other tax code, the regulations thereunder (and supplemented instances, the nature of the taxpayer will dictate whether it by various forms of published and unpublished guidance is taxed as a capital asset or an ordinary asset (see discus- from the US tax authorities and by the case law).1 These tax sion of dealers versus traders, below). rules dictate the US federal income taxation of derivative instruments without regard to applicable accounting rules. Generally, the starting point will be to determine whether the instrument is a “capital asset” or an “ordinary asset” The tax rules applicable to derivative instruments have in the hands of the taxpayer. Section 1221 defines “capital developed over time in piecemeal fashion. There are no assets” by exclusion – unless an asset falls within one of general principles governing the taxation of derivatives eight enumerated exceptions, it is viewed as a capital asset. in the United States. Every transaction must be examined Exceptions to capital asset treatment relevant to taxpayers in light of these piecemeal rules. Key considerations for transacting in derivative instruments include the excep- issuers and holders of derivative instruments under US tions for (1) hedging transactions3 and (2) “commodities tax principles will include the character of income, gain, derivative financial instruments” held by a “commodities loss and deduction related to the instrument (ordinary derivatives dealer”.4 vs. -

On Trinomial Trees for One-Factor Short Rate Models∗

On Trinomial Trees for One-Factor Short Rate Models∗ Markus Leippoldy Swiss Banking Institute, University of Zurich Zvi Wienerz School of Business Administration, Hebrew University of Jerusalem April 3, 2003 ∗Markus Leippold acknowledges the financial support of the Swiss National Science Foundation (NCCR FINRISK). Zvi Wiener acknowledges the financial support of the Krueger and Rosenberg funds at the He- brew University of Jerusalem. We welcome comments, including references to related papers we inadvertently overlooked. yCorrespondence Information: University of Zurich, ISB, Plattenstr. 14, 8032 Zurich, Switzerland; tel: +41 1-634-2951; fax: +41 1-634-4903; [email protected]. zCorrespondence Information: Hebrew University of Jerusalem, Mount Scopus, Jerusalem, 91905, Israel; tel: +972-2-588-3049; fax: +972-2-588-1341; [email protected]. On Trinomial Trees for One-Factor Short Rate Models ABSTRACT In this article we discuss the implementation of general one-factor short rate models with a trinomial tree. Taking the Hull-White model as a starting point, our contribution is threefold. First, we show how trees can be spanned using a set of general branching processes. Secondly, we improve Hull-White's procedure to calibrate the tree to bond prices by a much more efficient approach. This approach is applicable to a wide range of term structure models. Finally, we show how the tree can be adjusted to the volatility structure. The proposed approach leads to an efficient and flexible construction method for trinomial trees, which can be easily implemented and calibrated to both prices and volatilities. JEL Classification Codes: G13, C6. Key Words: Short Rate Models, Trinomial Trees, Forward Measure. -

Bond Futures Calendar Spread Trading

Black Algo Technologies Bond Futures Calendar Spread Trading Part 2 – Understanding the Fundamentals Strategy Overview Asset to be traded: Three-month Canadian Bankers' Acceptance Futures (BAX) Price chart of BAXH20 Strategy idea: Create a duration neutral (i.e. market neutral) synthetic asset and trade the mean reversion The general idea is straightforward to most professional futures traders. This is not some market secret. The success of this strategy lies in the execution. Understanding Our Asset and Synthetic Asset These are the prices and volume data of BAX as seen in the Interactive Brokers platform. blackalgotechnologies.com Black Algo Technologies Notice that the volume decreases as we move to the far month contracts What is BAX BAX is a future whose underlying asset is a group of short-term (30, 60, 90 days, 6 months or 1 year) loans that major Canadian banks make to each other. BAX futures reflect the Canadian Dollar Offered Rate (CDOR) (the overnight interest rate that Canadian banks charge each other) for a three-month loan period. Settlement: It is cash-settled. This means that no physical products are transferred at the futures’ expiry. Minimum price fluctuation: 0.005, which equates to C$12.50 per contract. This means that for every 0.005 move in price, you make or lose $12.50 Canadian dollar. Link to full specification details: • https://m-x.ca/produits_taux_int_bax_en.php (Note that the minimum price fluctuation is 0.01 for contracts further out from the first 10 expiries. Not too important as we won’t trade contracts that are that far out.) • https://www.m-x.ca/f_publications_en/bax_en.pdf Other STIR Futures BAX are just one type of short-term interest rate (STIR) future. -

Volatility Curves of Incomplete Markets

Master's thesis Volatility Curves of Incomplete Markets The Trinomial Option Pricing Model KATERYNA CHECHELNYTSKA Department of Mathematical Sciences Chalmers University of Technology University of Gothenburg Gothenburg, Sweden 2019 Thesis for the Degree of Master Science Volatility Curves of Incomplete Markets The Trinomial Option Pricing Model KATERYNA CHECHELNYTSKA Department of Mathematical Sciences Chalmers University of Technology University of Gothenburg SE - 412 96 Gothenburg, Sweden Gothenburg, Sweden 2019 Volatility Curves of Incomplete Markets The Trinomial Asset Pricing Model KATERYNA CHECHELNYTSKA © KATERYNA CHECHELNYTSKA, 2019. Supervisor: Docent Simone Calogero, Department of Mathematical Sciences Examiner: Docent Patrik Albin, Department of Mathematical Sciences Master's Thesis 2019 Department of Mathematical Sciences Chalmers University of Technology and University of Gothenburg SE-412 96 Gothenburg Telephone +46 31 772 1000 Typeset in LATEX Printed by Chalmers Reproservice Gothenburg, Sweden 2019 4 Volatility Curves of Incomplete Markets The Trinomial Option Pricing Model KATERYNA CHECHELNYTSKA Department of Mathematical Sciences Chalmers University of Technology and University of Gothenburg Abstract The graph of the implied volatility of call options as a function of the strike price is called volatility curve. If the options market were perfectly described by the Black-Scholes model, the implied volatility would be independent of the strike price and thus the volatility curve would be a flat horizontal line. However the volatility curve of real markets is often found to have recurrent convex shapes called volatility smile and volatility skew. The common approach to explain this phenomena is by assuming that the volatility of the underlying stock is a stochastic process (while in Black-Scholes it is assumed to be a deterministic constant). -

Robust Option Pricing: the Uncertain Volatility Model

Imperial College London Department of Mathematics Robust option pricing: the uncertain volatility model Supervisor: Dr. Pietro SIORPAES Author: Hicham EL JERRARI (CID: 01792391) A thesis submitted for the degree of MSc in Mathematics and Finance, 2019-2020 El Jerrari Robust option pricing Declaration The work contained in this thesis is my own work unless otherwise stated. Signature and date: 06/09/2020 Hicham EL JERRARI 1 El Jerrari Robust option pricing Acknowledgements I would like to thank my supervisor: Dr. Pietro SIORPAES for giving me the opportunity to work on this exciting subject. I am very grateful to him for his help throughout my project. Thanks to his advice, his supervision, as well as the discussions I had with him, this experience was very enriching for me. I would also like to thank Imperial College and more particularly the \Msc Mathemat- ics and Finance" for this year rich in learning, for their support and their dedication especially in this particular year of pandemic. On a personal level, I want to thank my parents for giving me the opportunity to study this master's year at Imperial College and to be present and support me throughout my studies. I also thank my brother and my two sisters for their encouragement. Last but not least, I dedicate this work to my grandmother for her moral support and a tribute to my late grandfather. 2 El Jerrari Robust option pricing Abstract This study presents the uncertain volatility model (UVM) which proposes a new approach for the pricing and hedging of derivatives by considering a band of spot's volatility as input. -

The Hull-White Model • Hull and White (1987) Postulate the Following Model, Ds √ = R Dt + V Dw , S 1 Dv = Μvv Dt + Bv Dw2

The Hull-White Model • Hull and White (1987) postulate the following model, dS p = r dt + V dW ; S 1 dV = µvV dt + bV dW2: • Above, V is the instantaneous variance. • They assume µv depends on V and t (but not S). ⃝c 2014 Prof. Yuh-Dauh Lyuu, National Taiwan University Page 599 The SABR Model • Hagan, Kumar, Lesniewski, and Woodward (2002) postulate the following model, dS = r dt + SθV dW ; S 1 dV = bV dW2; for 0 ≤ θ ≤ 1. • A nice feature of this model is that the implied volatility surface has a compact approximate closed form. ⃝c 2014 Prof. Yuh-Dauh Lyuu, National Taiwan University Page 600 The Hilliard-Schwartz Model • Hilliard and Schwartz (1996) postulate the following general model, dS = r dt + f(S)V a dW ; S 1 dV = µ(V ) dt + bV dW2; for some well-behaved function f(S) and constant a. ⃝c 2014 Prof. Yuh-Dauh Lyuu, National Taiwan University Page 601 The Blacher Model • Blacher (2002) postulates the following model, dS [ ] = r dt + σ 1 + α(S − S ) + β(S − S )2 dW ; S 0 0 1 dσ = κ(θ − σ) dt + ϵσ dW2: • So the volatility σ follows a mean-reverting process to level θ. ⃝c 2014 Prof. Yuh-Dauh Lyuu, National Taiwan University Page 602 Heston's Stochastic-Volatility Model • Heston (1993) assumes the stock price follows dS p = (µ − q) dt + V dW1; (64) S p dV = κ(θ − V ) dt + σ V dW2: (65) { V is the instantaneous variance, which follows a square-root process. { dW1 and dW2 have correlation ρ.