Intraday Volatility Surface Calibration

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

On the Optionality and Fairness of Atomic Swaps

On the optionality and fairness of Atomic Swaps Runchao Han Haoyu Lin Jiangshan Yu Monash University and CSIRO-Data61 [email protected] Monash University [email protected] [email protected] ABSTRACT happened on centralised exchanges. To date, the DEX market volume Atomic Swap enables two parties to atomically exchange their own has reached approximately 50,000 ETH [2]. More specifically, there cryptocurrencies without trusted third parties. This paper provides are more than 250 DEXes [3], more than 30 DEX protocols [4], and the first quantitative analysis on the fairness of the Atomic Swap more than 4,000 active traders in all DEXes [2]. protocol, and proposes the first fair Atomic Swap protocol with However, being atomic does not indicate the Atomic Swap is fair. implementations. In an Atomic Swap, the swap initiator can decide whether to proceed In particular, we model the Atomic Swap as the American Call or abort the swap, and the default maximum time for him to decide is Option, and prove that an Atomic Swap is equivalent to an Amer- 24 hours [5]. This enables the the swap initiator to speculate without ican Call Option without the premium. Thus, the Atomic Swap is any penalty. More specifically, the swap initiator can keep waiting unfair to the swap participant. Then, we quantify the fairness of before the timelock expires. If the price of the swap participant’s the Atomic Swap and compare it with that of conventional financial asset rises, the swap initiator will proceed the swap so that he will assets (stocks and fiat currencies). -

Here We Go Again

2015, 2016 MDDC News Organization of the Year! Celebrating 161 years of service! Vol. 163, No. 3 • 50¢ SINCE 1855 July 13 - July 19, 2017 TODAY’S GAS Here we go again . PRICE Rockville political differences rise to the surface in routine commission appointment $2.28 per gallon Last Week pointee to the City’s Historic District the three members of “Team him from serving on the HDC. $2.26 per gallon By Neal Earley @neal_earley Commission turned into a heated de- Rockville,” a Rockville political- The HDC is responsible for re- bate highlighting the City’s main po- block made up of Council members viewing applications for modification A month ago ROCKVILLE – In most jurisdic- litical division. Julie Palakovich Carr, Mark Pierzcha- to the exteriors of historic buildings, $2.36 per gallon tions, board and commission appoint- Mayor Bridget Donnell Newton la and Virginia Onley who ran on the as well as recommending boundaries A year ago ments are usually toward the bottom called the City Council’s rejection of same platform with mayoral candi- for the City’s historic districts. If ap- $2.28 per gallon of the list in terms of public interest her pick for Historic District Commis- date Sima Osdoby and city council proved Giammo, would have re- and controversy -- but not in sion – former three-term Rockville candidate Clark Reed. placed Matthew Goguen, whose term AVERAGE PRICE PER GALLON OF Rockville. UNLEADED REGULAR GAS IN Mayor Larry Giammo – political. While Onley and Palakovich expired in May. MARYLAND/D.C. METRO AREA For many municipalities, may- “I find it absolutely disappoint- Carr said they opposed Giammo’s ap- Giammo previously endorsed ACCORDING TO AAA oral appointments are a formality of- ing that politics has entered into the pointment based on his lack of qualifi- Newton in her campaign against ten given rubberstamped approval by boards and commission nomination cations, Giammo said it was his polit- INSIDE the city council, but in Rockville what process once again,” Newton said. -

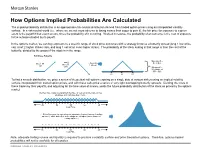

How Options Implied Probabilities Are Calculated

No content left No content right of this line of this line How Options Implied Probabilities Are Calculated The implied probability distribution is an approximate risk-neutral distribution derived from traded option prices using an interpolated volatility surface. In a risk-neutral world (i.e., where we are not more adverse to losing money than eager to gain it), the fair price for exposure to a given event is the payoff if that event occurs, times the probability of it occurring. Worked in reverse, the probability of an outcome is the cost of exposure Place content to the outcome divided by its payoff. Place content below this line below this line In the options market, we can buy exposure to a specific range of stock price outcomes with a strategy know as a butterfly spread (long 1 low strike call, short 2 higher strikes calls, and long 1 call at an even higher strike). The probability of the stock ending in that range is then the cost of the butterfly, divided by the payout if the stock is in the range. Building a Butterfly: Max payoff = …add 2 …then Buy $5 at $55 Buy 1 50 short 55 call 1 60 call calls Min payoff = $0 outside of $50 - $60 50 55 60 To find a smooth distribution, we price a series of theoretical call options expiring on a single date at various strikes using an implied volatility surface interpolated from traded option prices, and with these calls price a series of very tight overlapping butterfly spreads. Dividing the costs of these trades by their payoffs, and adjusting for the time value of money, yields the future probability distribution of the stock as priced by the options market. -

Tax Treatment of Derivatives

United States Viva Hammer* Tax Treatment of Derivatives 1. Introduction instruments, as well as principles of general applicability. Often, the nature of the derivative instrument will dictate The US federal income taxation of derivative instruments whether it is taxed as a capital asset or an ordinary asset is determined under numerous tax rules set forth in the US (see discussion of section 1256 contracts, below). In other tax code, the regulations thereunder (and supplemented instances, the nature of the taxpayer will dictate whether it by various forms of published and unpublished guidance is taxed as a capital asset or an ordinary asset (see discus- from the US tax authorities and by the case law).1 These tax sion of dealers versus traders, below). rules dictate the US federal income taxation of derivative instruments without regard to applicable accounting rules. Generally, the starting point will be to determine whether the instrument is a “capital asset” or an “ordinary asset” The tax rules applicable to derivative instruments have in the hands of the taxpayer. Section 1221 defines “capital developed over time in piecemeal fashion. There are no assets” by exclusion – unless an asset falls within one of general principles governing the taxation of derivatives eight enumerated exceptions, it is viewed as a capital asset. in the United States. Every transaction must be examined Exceptions to capital asset treatment relevant to taxpayers in light of these piecemeal rules. Key considerations for transacting in derivative instruments include the excep- issuers and holders of derivative instruments under US tions for (1) hedging transactions3 and (2) “commodities tax principles will include the character of income, gain, derivative financial instruments” held by a “commodities loss and deduction related to the instrument (ordinary derivatives dealer”.4 vs. -

Bond Futures Calendar Spread Trading

Black Algo Technologies Bond Futures Calendar Spread Trading Part 2 – Understanding the Fundamentals Strategy Overview Asset to be traded: Three-month Canadian Bankers' Acceptance Futures (BAX) Price chart of BAXH20 Strategy idea: Create a duration neutral (i.e. market neutral) synthetic asset and trade the mean reversion The general idea is straightforward to most professional futures traders. This is not some market secret. The success of this strategy lies in the execution. Understanding Our Asset and Synthetic Asset These are the prices and volume data of BAX as seen in the Interactive Brokers platform. blackalgotechnologies.com Black Algo Technologies Notice that the volume decreases as we move to the far month contracts What is BAX BAX is a future whose underlying asset is a group of short-term (30, 60, 90 days, 6 months or 1 year) loans that major Canadian banks make to each other. BAX futures reflect the Canadian Dollar Offered Rate (CDOR) (the overnight interest rate that Canadian banks charge each other) for a three-month loan period. Settlement: It is cash-settled. This means that no physical products are transferred at the futures’ expiry. Minimum price fluctuation: 0.005, which equates to C$12.50 per contract. This means that for every 0.005 move in price, you make or lose $12.50 Canadian dollar. Link to full specification details: • https://m-x.ca/produits_taux_int_bax_en.php (Note that the minimum price fluctuation is 0.01 for contracts further out from the first 10 expiries. Not too important as we won’t trade contracts that are that far out.) • https://www.m-x.ca/f_publications_en/bax_en.pdf Other STIR Futures BAX are just one type of short-term interest rate (STIR) future. -

On Volatility Swaps for Stock Market Forecast: Application Example CAC 40 French Index

Hindawi Publishing Corporation Journal of Probability and Statistics Volume 2014, Article ID 854578, 6 pages http://dx.doi.org/10.1155/2014/854578 Research Article On Volatility Swaps for Stock Market Forecast: Application Example CAC 40 French Index Halim Zeghdoudi,1,2 Abdellah Lallouche,3 and Mohamed Riad Remita1 1 LaPSLaboratory,Badji-MokhtarUniversity,BP12,23000Annaba,Algeria 2 Department of Computing Mathematics and Physics, Waterford Institute of Technology, Waterford, Ireland 3 Universite´ 20 Aout, 1955 Skikda, Algeria Correspondence should be addressed to Halim Zeghdoudi; [email protected] Received 3 August 2014; Revised 21 September 2014; Accepted 29 September 2014; Published 9 November 2014 Academic Editor: Chin-Shang Li Copyright © 2014 Halim Zeghdoudi et al. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. This paper focuses on the pricing of variance and volatility swaps under Heston model (1993). To this end, we apply this modelto the empirical financial data: CAC 40 French Index. More precisely, we make an application example for stock market forecast: CAC 40 French Index to price swap on the volatility using GARCH(1,1) model. 1. Introduction a price index. The underlying is usually a foreign exchange rate (very liquid market) but could be as well a single name Black and Scholes’ model [1]isoneofthemostsignificant equity or index. However, the variance swap is reliable in models discovered in finance in XXe century for valuing the index market because it can be replicated with a linear liquid European style vanilla option. -

OPTION-BASED EQUITY STRATEGIES Roberto Obregon

MEKETA INVESTMENT GROUP BOSTON MA CHICAGO IL MIAMI FL PORTLAND OR SAN DIEGO CA LONDON UK OPTION-BASED EQUITY STRATEGIES Roberto Obregon MEKETA INVESTMENT GROUP 100 Lowder Brook Drive, Suite 1100 Westwood, MA 02090 meketagroup.com February 2018 MEKETA INVESTMENT GROUP 100 LOWDER BROOK DRIVE SUITE 1100 WESTWOOD MA 02090 781 471 3500 fax 781 471 3411 www.meketagroup.com MEKETA INVESTMENT GROUP OPTION-BASED EQUITY STRATEGIES ABSTRACT Options are derivatives contracts that provide investors the flexibility of constructing expected payoffs for their investment strategies. Option-based equity strategies incorporate the use of options with long positions in equities to achieve objectives such as drawdown protection and higher income. While the range of strategies available is wide, most strategies can be classified as insurance buying (net long options/volatility) or insurance selling (net short options/volatility). The existence of the Volatility Risk Premium, a market anomaly that causes put options to be overpriced relative to what an efficient pricing model expects, has led to an empirical outperformance of insurance selling strategies relative to insurance buying strategies. This paper explores whether, and to what extent, option-based equity strategies should be considered within the long-only equity investing toolkit, given that equity risk is still the main driver of returns for most of these strategies. It is important to note that while option-based strategies seek to design favorable payoffs, all such strategies involve trade-offs between expected payoffs and cost. BACKGROUND Options are derivatives1 contracts that give the holder the right, but not the obligation, to buy or sell an asset at a given point in time and at a pre-determined price. -

Global Energy Markets & Pricing

11-FEB-20 GLOBAL ENERGY MARKETS & PRICING www.energytraining.ae 21 - 25 Sep 2020, London GLOBAL ENERGY MARKETS & PRICING INTRODUCTION OBJECTIVES This 5-day accelerated Global Energy Markets & Pricing training By the end of this training course, the participants will be course is designed to give delegates a comprehensive picture able to: of the global energy obtained through fossil fuels and renewal sources. The overall dynamics of global energy industry are • Gain broad perspective of global oil and refined explained with the supply-demand dynamics of fossil fuels, products sales business, supply, transportation, refining, their price volatility, and the associated geopolitics. marketing & trading • Boost their understanding on the fundamentals of oil The energy sources include crude oil, natural gas, LNG, refined business: quality, blending & valuation of crude oil for products, and renewables – solar, wind, hydro, and nuclear trade, freight and netback calculations, refinery margins energy. The focus on the issues to be considered on the calculations, & vessel chartering sales, marketing, trading and special focus on the price risk • Master the Total barrel economics, Oil market futures, management. hedging and futures, and price risk management • Evaluate the technical, commercial, legal and trading In this training course, the participants will gain the aspects of oil business with the International, US, UK, technical knowledge and business acumen on the key and Singapore regulations subjects: • Confidently discuss the technical, -

Options Strategy Guide for Metals Products As the World’S Largest and Most Diverse Derivatives Marketplace, CME Group Is Where the World Comes to Manage Risk

metals products Options Strategy Guide for Metals Products As the world’s largest and most diverse derivatives marketplace, CME Group is where the world comes to manage risk. CME Group exchanges – CME, CBOT, NYMEX and COMEX – offer the widest range of global benchmark products across all major asset classes, including futures and options based on interest rates, equity indexes, foreign exchange, energy, agricultural commodities, metals, weather and real estate. CME Group brings buyers and sellers together through its CME Globex electronic trading platform and its trading facilities in New York and Chicago. CME Group also operates CME Clearing, one of the largest central counterparty clearing services in the world, which provides clearing and settlement services for exchange-traded contracts, as well as for over-the-counter derivatives transactions through CME ClearPort. These products and services ensure that businesses everywhere can substantially mitigate counterparty credit risk in both listed and over-the-counter derivatives markets. Options Strategy Guide for Metals Products The Metals Risk Management Marketplace Because metals markets are highly responsive to overarching global economic The hypothetical trades that follow look at market position, market objective, and geopolitical influences, they present a unique risk management tool profit/loss potential, deltas and other information associated with the 12 for commercial and institutional firms as well as a unique, exciting and strategies. The trading examples use our Gold, Silver -

Analysis of the Trading Book Hypothetical Portfolio Exercise

Basel Committee on Banking Supervision Analysis of the trading book hypothetical portfolio exercise September 2014 This publication is available on the BIS website (www.bis.org). Grey underlined text in this publication shows where hyperlinks are available in the electronic version. © Bank for International Settlements 2014. All rights reserved. Brief excerpts may be reproduced or translated provided the source is stated. ISBN 978-92-9131-662-5 (print) ISBN 978-92-9131-668-7 (online) Contents Executive summary ........................................................................................................................................................................... 1 1. Hypothetical portfolio exercise description ......................................................................................................... 4 2. Coverage statistics .......................................................................................................................................................... 5 3. Key findings ....................................................................................................................................................................... 5 4. Technical background ................................................................................................................................................... 6 4.1 Data quality ............................................................................................................................................................. -

American Style Stock Options

AMERICAN STYLE OPTIONS ON EQUITY - CONTRACT SPECIFICATIONS – 100 SHARES OPTION STYLE American style, which can be exercised at any time. UNDERLYING Well-capitalized equity securities. INSTRUMENT CONTRACT SIZE Apart from exceptions or temporary adjustments for corporate actions, an equity option contract generally relates to 100 shares of the underlying equity security. The Contract Value is equal to the quoted option price in Euro multiplied by the number of underlying shares. MINIMUM The tick size of the premium quotation is equal to € 0.01 (€ 1 per contract). PRICE MOVEMENT (TICK SIZE AND VALUE) EXPIRY Euronext Paris will publish a list with the number of maturities listed per option class. The MONTHS option classes will be divided in 4 different groups. ■ Group I: 3 monthly, the following 3 quarterly, the following 4 half yearly and the following 2 yearly maturities are opened. Cycle Expiry Months Cycle Lifetime (Months) Monthly Every Month 1; 2; 3 Quarterly March, June, September, December 6; 9; 12 GROUP 1 Half-Yearly June, December 18; 24; 30; 36 Yearly December 48; 60 ■ Group II: 3 monthly, the following 3 quarterly and the following 2 half yearly maturities are opened. Cycle Expiry Months Cycle Lifetime (Months) Monthly Every Month 1; 2; 3 Quarterly March, June, September, December 6; 9; 12 GROUP 2 Half-Yearly June, December 18; 24 ■ Group III: 3 monthly and the following 3 quarterly maturities are opened. 3 Cycle Expiry Months Cycle Lifetime (Months) Monthly Every Month 1; 2; 3 GROUP Quarterly March, June, September, December 6; 9; 12 www.euronext.com AMERICAN STYLE OPTIONS ON EQUITY - CONTRACT SPECIFICATIONS – 100 SHARES ■ Group IV: 4 quarterly maturities are opened. -

1. BGC Derivative Markets, L.P. Contract Specifications

1. BGC Derivative Markets, L.P. Contract Specifications ......................................................................................................................................2 1.1 Product Descriptions ..................................................................................................................................................................................2 1.1.1 Mandatorily Cleared CEA 2(h)(1) Products as of 2nd October 2013 .............................................................................................2 1.1.2 Made Available to Trade CEA 2(h)(8) Products ..............................................................................................................................5 1.1.3 Interest Rate Swaps .........................................................................................................................................................................7 1.1.4 Commodities.....................................................................................................................................................................................27 1.1.5 Credit Derivatives .............................................................................................................................................................................30 1.1.6 Equity Derivatives .............................................................................................................................................................................37 1.1.6.1 Equity Index