Monitoring Malicious PowerShell Usage Through Log Analysis

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Resolving Issues with Network Connectivity

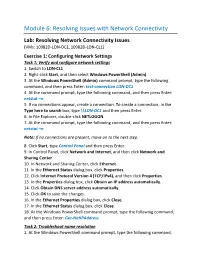

Module 6: Resolving Issues with Network Connectivity

Lab: Resolving Network Connectivity Issues (VMs: 10982D-LON-DC1, 10982D-LON-CL1)

Exercise 1: Configuring Network Settings

Task 1: Verify and configure network settings

1. Switch to LON-CL1.
2. Right-click Start, and then select Windows PowerShell (Admin).
3. At the Windows PowerShell (Admin) command prompt, type the following command, and then press Enter:
   test-connection LON-DC1
4. At the command prompt, type the following command, and then press Enter:
   netstat -n
5. If no connections appear, create one: in the Type here to search box, type \\LON-DC1, and then press Enter.
6. In File Explorer, double-click NETLOGON.
7. At the command prompt, type the following command, and then press Enter:
   netstat -n
   Note: If no connections are present, move on to the next step.
8. Click Start, type Control Panel, and then press Enter.
9. In Control Panel, click Network and Internet, and then click Network and Sharing Center.
10. In Network and Sharing Center, click Ethernet.
11. In the Ethernet Status dialog box, click Properties.
12. Click Internet Protocol Version 4 (TCP/IPv4), and then click Properties.
13. In the Properties dialog box, click Obtain an IP address automatically.
14. Click Obtain DNS server address automatically.
15. Click OK to save the changes.
16. In the Ethernet Properties dialog box, click Close.
17. In the Ethernet Status dialog box, click Close.
18. At the Windows PowerShell command prompt, type the following command, and then press Enter:
    Get-NetIPAddress

Task 2: Troubleshoot name resolution
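Steps 4 to 7 hinge on whether `netstat -n` shows a connection to LON-DC1. The check can be sketched in Python over captured `netstat -n` text. This is a minimal sketch, assuming the Windows column layout (Proto, Local Address, Foreign Address, State) and IPv4 addresses; the sample output and the 192.0.2.x addresses are hypothetical, not taken from the lab VMs.

```python
"""Sketch: look for an ESTABLISHED TCP connection to a host in `netstat -n` text.

Assumes the Windows `netstat -n` layout (Proto, Local Address, Foreign Address,
State) and IPv4 addresses. SAMPLE is hypothetical output, not from the lab.
"""
from typing import NamedTuple


class Connection(NamedTuple):
    proto: str
    local: str
    foreign: str
    state: str


def parse_netstat(text: str) -> list[Connection]:
    """Return one Connection per TCP data row; headers and blank lines are skipped."""
    rows = []
    for line in text.splitlines():
        parts = line.split()
        # TCP data rows have exactly four whitespace-separated columns.
        if len(parts) == 4 and parts[0].upper() == "TCP":
            rows.append(Connection(*parts))
    return rows


def has_connection_to(text: str, ip: str) -> bool:
    """True if any ESTABLISHED row's foreign address is `ip` (any port)."""
    for conn in parse_netstat(text):
        host = conn.foreign.rsplit(":", 1)[0]  # strip the ":port" suffix
        if host == ip and conn.state == "ESTABLISHED":
            return True
    return False


# Hypothetical sample output (documentation addresses, not the lab's).
SAMPLE = """
Active Connections

  Proto  Local Address          Foreign Address        State
  TCP    192.0.2.40:49712       192.0.2.10:445         ESTABLISHED
  TCP    192.0.2.40:49715       192.0.2.10:135         TIME_WAIT
"""

if __name__ == "__main__":
    print(has_connection_to(SAMPLE, "192.0.2.10"))  # True
```

In practice the text would come from running `netstat -n` and capturing its output; only ESTABLISHED rows are counted, because TIME_WAIT rows describe connections that are already closing.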

Beginning Portable Shell Scripting from Novice to Professional

Beginning Portable Shell Scripting: From Novice to Professional
Peter Seebach

Copyright © 2008 by Peter Seebach
All rights reserved. No part of this work may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage or retrieval system, without the prior written permission of the copyright owner and the publisher.

ISBN-13 (pbk): 978-1-4302-1043-6
ISBN-10 (pbk): 1-4302-1043-5
ISBN-13 (electronic): 978-1-4302-1044-3
ISBN-10 (electronic): 1-4302-1044-3

Printed and bound in the United States of America 9 8 7 6 5 4 3 2 1

Trademarked names may appear in this book. Rather than use a trademark symbol with every occurrence of a trademarked name, we use the names only in an editorial fashion and to the benefit of the trademark owner, with no intention of infringement of the trademark.

Lead Editor: Frank Pohlmann
Technical Reviewer: Gary V. Vaughan
Editorial Board: Clay Andres, Steve Anglin, Ewan Buckingham, Tony Campbell, Gary Cornell, Jonathan Gennick, Michelle Lowman, Matthew Moodie, Jeffrey Pepper, Frank Pohlmann, Ben Renow-Clarke, Dominic Shakeshaft, Matt Wade, Tom Welsh
Project Manager: Richard Dal Porto
Copy Editor: Kim Benbow
Associate Production Director: Kari Brooks-Copony
Production Editor: Katie Stence
Compositor: Linda Weidemann, Wolf Creek Press
Proofreader: Dan Shaw
Indexer: Broccoli Information Management
Cover Designer: Kurt Krames
Manufacturing Director: Tom Debolski

Distributed to the book trade worldwide by Springer-Verlag New York, Inc., 233 Spring Street, 6th Floor, New York, NY 10013.

Transaction Insight Reference Manual Contents I Admin - Filters - Partner Filter

TIBCO Foresight® Transaction Insight® Reference Manual
Software Release 5.2
September 2017
Two-second advantage®

Important Information
SOME TIBCO SOFTWARE EMBEDS OR BUNDLES OTHER TIBCO SOFTWARE. USE OF SUCH EMBEDDED OR BUNDLED TIBCO SOFTWARE IS SOLELY TO ENABLE THE FUNCTIONALITY (OR PROVIDE LIMITED ADD-ON FUNCTIONALITY) OF THE LICENSED TIBCO SOFTWARE. THE EMBEDDED OR BUNDLED SOFTWARE IS NOT LICENSED TO BE USED OR ACCESSED BY ANY OTHER TIBCO SOFTWARE OR FOR ANY OTHER PURPOSE.

USE OF TIBCO SOFTWARE AND THIS DOCUMENT IS SUBJECT TO THE TERMS AND CONDITIONS OF A LICENSE AGREEMENT FOUND IN EITHER A SEPARATELY EXECUTED SOFTWARE LICENSE AGREEMENT, OR, IF THERE IS NO SUCH SEPARATE AGREEMENT, THE CLICKWRAP END USER LICENSE AGREEMENT WHICH IS DISPLAYED DURING DOWNLOAD OR INSTALLATION OF THE SOFTWARE (AND WHICH IS DUPLICATED IN LICENSE.PDF) OR IF THERE IS NO SUCH SOFTWARE LICENSE AGREEMENT OR CLICKWRAP END USER LICENSE AGREEMENT, THE LICENSE(S) LOCATED IN THE "LICENSE" FILE(S) OF THE SOFTWARE. USE OF THIS DOCUMENT IS SUBJECT TO THOSE TERMS AND CONDITIONS, AND YOUR USE HEREOF SHALL CONSTITUTE ACCEPTANCE OF AND AN AGREEMENT TO BE BOUND BY THE SAME.

This document contains confidential information that is subject to U.S. and international copyright laws and treaties. No part of this document may be reproduced in any form without the written authorization of TIBCO Software Inc.

TIBCO and Two-Second Advantage, TIBCO Foresight EDISIM, TIBCO Foresight Instream, TIBCO Foresight Studio, and TIBCO Foresight Transaction Insight are either registered trademarks or trademarks of TIBCO Software Inc. in the United States and/or other countries.

SOAP / REST and IBM i

SOAP / REST and IBM i (1/11/18)
Tim Rowe, Business Architect, Application Development
© 2017 International Business Machines Corporation

Using a REST API with Watson: https://ibm-i-watson-test.mybluemix.net/

What is an API: Agenda
• What is an API
• What is a Web Service
• SOAP vs REST
  - What is SOAP
  - What is REST
  - Benefits
  - Drawbacks

[Diagram: Connections, Devices, Applications, Here, There]

API Definition: Application Programming Interface

APIs - Simple: A simple way to connect endpoints. Send a request and receive a response.

Example: Kitchen

Not just a buzzword, but rather the evolution of service-oriented IT. APIs give users, businesses, and partners the ability to interact in new and different ways, resulting in the growth (in some cases the revolution) of business.
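The "send a request and receive a response" idea can be sketched as a single REST-style round trip using only Python's standard library. This is an illustration under stated assumptions, not IBM i or Watson code: a throwaway local server answers `GET /menu/1` with JSON, and the `/menu` resource and its fields are hypothetical, chosen to echo the kitchen analogy. A SOAP service would instead wrap the payload in an XML envelope.

```python
"""Sketch: one REST-style request/response round trip with the stdlib only.

A local server answers GET /menu/<id> with JSON. The /menu resource and its
fields are hypothetical, chosen to echo the slide's kitchen analogy.
"""
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import urlopen

MENU = {"1": {"id": 1, "dish": "soup"}}  # hypothetical data


class MenuHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # The URL path identifies the resource: /menu/<id>
        parts = self.path.strip("/").split("/")
        item = MENU.get(parts[1]) if len(parts) == 2 and parts[0] == "menu" else None
        body = json.dumps(item if item else {"error": "not found"}).encode()
        self.send_response(200 if item else 404)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # silence the default per-request logging


def fetch(url: str) -> dict:
    """Send a GET request and decode the JSON response body."""
    with urlopen(url) as resp:
        return json.loads(resp.read())


def run_demo() -> dict:
    server = HTTPServer(("127.0.0.1", 0), MenuHandler)  # port 0: the OS picks a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        port = server.server_address[1]
        return fetch(f"http://127.0.0.1:{port}/menu/1")
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(run_demo())  # {'id': 1, 'dish': 'soup'}
```

The client only needs the URL and the response format; how the "kitchen" prepares the answer stays hidden behind the interface, which is the point the slides make about APIs.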

Shell Variables

Shell: Using the command line
Orna Agmon, ladypine at vipe.technion.ac.il
Haifux

TOC
- Various shells
- Customizing the shell
- Getting help and information
- Combining simple and useful commands
- Output redirection
- Lists of commands
- Job control
- Environment variables
- Remote shell
- Textual editors
- Textual clients
- References

What is the shell?
The shell is the wrapper around the system: a means of communication between the user and the system. It is how the user interacts with the system through the terminal. The shell is also a script interpreter; the simplest script is a sequence of shell commands. Shell scripts are used to boot the system, and users can also write and execute their own shell scripts.

Shell: which shell?
There are several kinds of shells, for example bash (Bourne Again Shell), csh, tcsh, zsh, and ksh (Korn Shell). The most important shell is bash, since it is available on almost every free Unix system, and the Linux system scripts use bash.
The user's default shell is set in the /etc/passwd file. Here is a line from this file, for example:
dana:x:500:500:Dana,,,:/home/dana:/bin/bash
This line means that user dana uses bash (located at /bin/bash) as her default shell.

Starting to work in another shell
If Dana wishes to use another shell temporarily, she can simply call it from the command line:
[dana@granada ~]$ bash
dana@granada:~$ #In bash now
dana@granada:~$ exit
[dana@granada ~]$ bash
dana@granada:~$ #In bash now, going to hit ctrl D
dana@granada:~$ exit
[dana@granada ~]$ #In original shell now
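Reading the default shell out of an /etc/passwd entry comes down to taking the seventh colon-separated field. A minimal Python sketch of that parsing, using the example line for user dana; the field names in `PASSWD_FIELDS` are descriptive labels chosen for this sketch.

```python
"""Sketch: read the login shell (7th field) from an /etc/passwd-style line."""

# Descriptive labels for the seven colon-separated passwd fields.
PASSWD_FIELDS = ("name", "password", "uid", "gid", "gecos", "home", "shell")


def parse_passwd_line(line: str) -> dict:
    """Split one passwd entry on ':' into its seven named fields."""
    values = line.rstrip("\n").split(":")
    if len(values) != len(PASSWD_FIELDS):
        raise ValueError(f"expected 7 fields, got {len(values)}: {line!r}")
    entry = dict(zip(PASSWD_FIELDS, values))
    entry["uid"] = int(entry["uid"])  # numeric user ID
    entry["gid"] = int(entry["gid"])  # numeric primary group ID
    return entry


if __name__ == "__main__":
    entry = parse_passwd_line("dana:x:500:500:Dana,,,:/home/dana:/bin/bash")
    print(entry["shell"])  # /bin/bash
```

On a live Unix system, the standard `pwd` module returns the same field directly, e.g. `pwd.getpwnam("dana").pw_shell`, provided the user exists.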

View the Slides (Smith)

Network Shells
Michael Smith
Image: https://commons.wikimedia.org/wiki/File:Network-connections.png

What does a Shell give us?
● A REPL
● Repeatability
● Direct access to system operations
● User-focused design
● Hierarchical context & sense of place
Image: https://upload.wikimedia.org/wikipedia/commons/8/84/Bash_demo.png

Management at a distance (netsh)
Netsh: Configure DHCP servers with netsh
netsh -r RemoteMachine -u domain\username          (Location)
[RemoteMachine] netsh>interface                    (Hierarchical context)
[RemoteMachine] netsh interface>ipv6               (Simpler commands)
[RemoteMachine] netsh interface ipv6>show interfaces
Reference: https://docs.microsoft.com/en-us/windows-server/networking/technologies/netsh/netsh-contexts

Management at a distance (WSMan)
WSMan (in PowerShell): Manage Windows remotely with
Set-Location -Path WSMan:\SERVER01
Get-ChildItem -Path .
Set-Item Client\TrustedHosts *.domain2.com -Concatenate
Reference: https://docs.microsoft.com/en-us/powershell/module/microsoft.wsman.management/about/about_wsman_provider
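The "hierarchical context & sense of place" idea behind the netsh prompts can be sketched as a stack of context names that drives the prompt. This is a toy model, not netsh: the context tree below is a small hypothetical subset, and using `..` to move up one level is this sketch's convention.

```python
"""Sketch: a netsh-style hierarchical prompt driven by a stack of contexts.

The context tree is a small hypothetical subset, not netsh's real command set;
'..' moves up one level in this sketch.
"""
from typing import Optional

CONTEXTS = {
    "interface": {"ipv4": {}, "ipv6": {}},
    "dhcp": {},
}


class ContextShell:
    def __init__(self, tree: dict, root: str = "netsh", host: Optional[str] = None):
        self.tree = tree
        self.root = root
        self.host = host          # remote machine name shown in brackets, if any
        self.path: list[str] = []  # current position in the context tree

    def _node(self) -> dict:
        """Walk the tree down to the current context."""
        node = self.tree
        for name in self.path:
            node = node[name]
        return node

    def prompt(self) -> str:
        text = " ".join([self.root, *self.path]) + ">"
        return f"[{self.host}] {text}" if self.host else text

    def enter(self, word: str) -> bool:
        """Move into a child context, or up one level with '..'; False if unknown."""
        if word == "..":
            if self.path:
                self.path.pop()
            return True
        if word in self._node():
            self.path.append(word)
            return True
        return False


if __name__ == "__main__":
    shell = ContextShell(CONTEXTS, host="RemoteMachine")
    for word in ("interface", "ipv6"):
        shell.enter(word)
    print(shell.prompt())  # [RemoteMachine] netsh interface ipv6>
```

Because the current context is remembered, each command typed at a level can stay short, which is the "simpler commands" callout on the slide.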

Netsh Commands William John Holden 20140411 (Version 2) Interface Configuration Configure an IPv4 Address with Subnet Mask and Default Gateway

Netsh Commands
William John Holden
20140411 (version 2)

Interface Configuration

Configure an IPv4 address with subnet mask and default gateway. An omitted netmask implies classful addressing.
netsh int ipv4 set address "Local Area Connection" static 192.168.1.3 255.255.255.0 192.168.1.1

Remove an IPv4 address and default gateway from an interface.
netsh int ipv4 del address "Local Area Connection" 192.168.1.3 192.168.1.1

You can add more than one IP address to an interface. Additional addresses don't show up in ipconfig without /all.
netsh int ipv4 add address "Local Area Connection" 192.168.1.4

Add a global unicast IP with prefix. The prefix is optional and defaults to /64.
netsh int ipv6 set address "Local Area Connection" 2001:beef::1/64

Add a link-local IP to an interface. See the similarity to the command above?
netsh int ipv6 add address "Local Area Connection" fe80::6

Delete the IP. Remove a link-local IP the same way.
netsh int ipv6 del address "Local Area Connection" 2001:beef::1

Set an IPv6 default route.
netsh int ipv6 add route ::/0 "Local Area Connection" fe80::3

Delete the default route.
netsh int ipv6 delete route ::/0 "Local Area Connection" fe80::3

Reset Configuration

Reset interface configuration completely (requires restart):
netsh int ipv6 reset all
netsh int ipv4 reset all
shutdown /r /t 0

Verification ("show" commands)

netsh has several commands that are very similar to ipconfig, route print (netstat -r), netstat -a, and getmac. Poke around netsh int ipv4 show ? and you'll find lots of interesting stuff.
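The note that an omitted netmask implies classful addressing can be made concrete with Python's standard `ipaddress` module. This sketch models the classful rule itself, assuming netsh applies the plain class A/B/C defaults; it does not call netsh. It also checks that the gateway from the first example sits inside the resulting subnet. The helper names are hypothetical.

```python
"""Sketch: classful default masks, plus an on-link check for the gateway.

Models the historical class A/B/C rules; helper names are hypothetical and
nothing here calls netsh.
"""
import ipaddress
from typing import Optional


def classful_prefix(addr: str) -> int:
    """Default prefix length under the class A/B/C rules."""
    first_octet = ipaddress.IPv4Address(addr).packed[0]
    if first_octet < 128:   # class A: leading bit 0
        return 8
    if first_octet < 192:   # class B: leading bits 10
        return 16
    if first_octet < 224:   # class C: leading bits 110
        return 24
    raise ValueError(f"{addr} is class D/E; no classful unicast mask")


def interface_for(addr: str, mask: Optional[str] = None) -> ipaddress.IPv4Interface:
    """Build address/mask, falling back to the classful default when mask is omitted."""
    if mask is None:
        mask = str(classful_prefix(addr))
    return ipaddress.IPv4Interface(f"{addr}/{mask}")


def gateway_on_link(iface: ipaddress.IPv4Interface, gateway: str) -> bool:
    """A default gateway normally lies inside the interface's own subnet."""
    return ipaddress.IPv4Address(gateway) in iface.network


if __name__ == "__main__":
    iface = interface_for("192.168.1.3")          # no mask given: class C default
    print(iface.netmask)                          # 255.255.255.0
    print(gateway_on_link(iface, "192.168.1.1"))  # True
```

For 192.168.1.3 the classful default happens to equal the explicit 255.255.255.0 in the netsh example; for an address such as 10.1.2.3 an omitted mask would give a /8, which is rarely what a modern network intends.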

UNIX X Command Tips and Tricks David B

SESUG Paper 122-2019
UNIX X Command Tips and Tricks
David B. Horvath, MS, CCP

ABSTRACT
SAS® provides the ability to execute operating system level commands from within your SAS code, generically known as the "X Command". This session explores the various commands, the advantages and disadvantages of each, and their alternatives. The focus is on UNIX/Linux, but much of the same applies to Windows as well. Under SAS EG, any issued commands execute on the SAS engine, not necessarily on the PC.
- X
- %sysexec
- Call system
- Systask command
- Filename pipe
- &SYSRC
- Waitfor
Alternatives will also be addressed: how to handle NOXCMD being the default for your installation, saving results, and error checking.

INTRODUCTION
In this paper I will cover some of the basics of the functionality within SAS that allows you to execute operating system commands from within your program. There are multiple ways to do so: external to DATA steps, within DATA steps, and within macros. All of these, along with error checking, will be covered.

RELEVANT OPTIONS
Execution of any of the SAS System command execution commands depends on one option's setting:
XCMD    Enables the X command in SAS.
This option can only be set at startup:

options xcmd;
        ____
        30
WARNING 30-12: SAS option XCMD is valid only at startup of the SAS System. The SAS option is ignored.

Unfortunately, if NOXCMD is set at startup time, you're out of luck. Sorry! You might want to have a conversation with your system administrators to determine why, and whether you can get it changed.
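The paper's examples are SAS and are not reproduced here. As a rough analogue in a swapped-in language (Python, not the paper's method), `subprocess.run` plays the part of issuing an X command or CALL SYSTEM, its `returncode` plays the part the paper lists for &SYSRC, and capturing stdout resembles reading command output through a FILENAME PIPE. The helper name `run_command` is hypothetical.

```python
"""Sketch (Python analogue, not SAS): run an OS command, check its return code,
and capture its output. run_command is a hypothetical helper name.
"""
import subprocess
import sys


def run_command(args: list[str]) -> tuple[int, str]:
    """Run a command without a shell; return (exit status, captured stdout)."""
    result = subprocess.run(args, capture_output=True, text=True)
    return result.returncode, result.stdout


if __name__ == "__main__":
    # Use the current Python interpreter as a portable stand-in for an OS command.
    rc, out = run_command([sys.executable, "-c", "print('hello')"])
    print(rc, out.strip())  # 0 hello

    rc, _ = run_command([sys.executable, "-c", "import sys; sys.exit(3)"])
    if rc != 0:  # a failing command does not raise here; the caller must check
        print(f"command failed with return code {rc}")  # command failed with return code 3
```

As with the return-code checking the paper covers, a non-zero status is only noticed if the program looks for it; passing the command as a list also avoids shell quoting problems.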

How to Cheat at Windows System Administration Using Command Line Scripts

How to Cheat at Windows System Administration Using Command Line Scripts
Pawan K. Bhardwaj

Syngress Publishing, Inc., the author(s), and any person or firm involved in the writing, editing, or production (collectively "Makers") of this book ("the Work") do not guarantee or warrant the results to be obtained from the Work. There is no guarantee of any kind, expressed or implied, regarding the Work or its contents. The Work is sold AS IS and WITHOUT WARRANTY. You may have other legal rights, which vary from state to state. In no event will Makers be liable to you for damages, including any loss of profits, lost savings, or other incidental or consequential damages arising out from the Work or its contents. Because some states do not allow the exclusion or limitation of liability for consequential or incidental damages, the above limitation may not apply to you.

You should always use reasonable care, including backup and other appropriate precautions, when working with computers, networks, data, and files.

Syngress Media®, Syngress®, "Career Advancement Through Skill Enhancement®," "Ask the Author UPDATE®," and "Hack Proofing®" are registered trademarks of Syngress Publishing, Inc. "Syngress: The Definition of a Serious Security Library"™, "Mission Critical™," and "The Only Way to Stop a Hacker is to Think Like One™" are trademarks of Syngress Publishing, Inc. Brands and product names mentioned in this book are trademarks or service marks of their respective companies.

1. Run Nslookup to Obtain the IP Address of a Web Server in Europe

1. Run nslookup to obtain the IP address of a Web server in Europe.

frigate:Desktop drb$ nslookup home.web.cern.ch
Server:   130.215.32.18
Address:  130.215.32.18#53

Non-authoritative answer:
home.web.cern.ch  canonical name = drupalprod.cern.ch.
Name:     drupalprod.cern.ch
Address:  137.138.76.28

Note that the #53 denotes that the DNS service is running on port 53.

2. Run nslookup to determine the authoritative DNS servers for a university in Asia.

frigate:Desktop drb$ nslookup -type=NS tsinghua.edu.cn
Server:   130.215.32.18
Address:  130.215.32.18#53

Non-authoritative answer:
tsinghua.edu.cn  nameserver = dns2.tsinghua.edu.cn.
tsinghua.edu.cn  nameserver = dns.tsinghua.edu.cn.
tsinghua.edu.cn  nameserver = dns2.edu.cn.
tsinghua.edu.cn  nameserver = ns2.cuhk.edu.hk.

Authoritative answers can be found from:
dns2.tsinghua.edu.cn  internet address = 166.111.8.31
ns2.cuhk.edu.hk  internet address = 137.189.6.21
ns2.cuhk.edu.hk  has AAAA address 2405:3000:3:6::15
dns2.edu.cn  internet address = 202.112.0.13
dns.tsinghua.edu.cn  internet address = 166.111.8.30

Note that there can be multiple authoritative servers. The response we got back was from a cached record, which is why it is labeled "Non-authoritative answer". To confirm the authoritative DNS servers, we send the same query to one of the servers that can provide authoritative answers:

frigate:Desktop drb$ nslookup -type=NS tsinghua.edu.cn dns.tsinghua.edu.cn
Server:   dns.tsinghua.edu.cn
Address:  166.111.8.30#53

tsinghua.edu.cn  nameserver = dns2.edu.cn.
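The two things the notes read off nslookup's output (the server's `address#port` and the list of NS records) can be extracted with a small Python parser. This is a sketch that assumes the line layout shown in step 2; nslookup's text format is not a stable interface, so a script would normally query DNS directly with a DNS library (for example dnspython). `SAMPLE` reproduces part of the step 2 output; the function names are hypothetical.

```python
"""Sketch: extract the DNS server's port and the NS records from nslookup text.

Assumes the output layout shown in step 2; function names are hypothetical.
"""
import re

SERVER_RE = re.compile(r"^Address:\s+(\S+)#(\d+)$")   # e.g. "Address: 1.2.3.4#53"
NS_RE = re.compile(r"^(\S+)\s+nameserver = (\S+)$")   # e.g. "zone nameserver = ns."


def parse_server(output: str) -> tuple[str, int]:
    """Return (server address, port) from the first 'Address: host#port' line."""
    for line in output.splitlines():
        m = SERVER_RE.match(line.strip())
        if m:
            return m.group(1), int(m.group(2))
    raise ValueError("no 'Address: host#port' line found")


def parse_ns(output: str) -> dict[str, list[str]]:
    """Map each zone to its nameservers, dropping the trailing root dot."""
    zones: dict[str, list[str]] = {}
    for line in output.splitlines():
        m = NS_RE.match(line.strip())
        if m:
            zones.setdefault(m.group(1), []).append(m.group(2).rstrip("."))
    return zones


SAMPLE = """\
Server:   130.215.32.18
Address:  130.215.32.18#53

Non-authoritative answer:
tsinghua.edu.cn  nameserver = dns2.tsinghua.edu.cn.
tsinghua.edu.cn  nameserver = dns.tsinghua.edu.cn.
tsinghua.edu.cn  nameserver = dns2.edu.cn.
tsinghua.edu.cn  nameserver = ns2.cuhk.edu.hk.
"""

if __name__ == "__main__":
    print(parse_server(SAMPLE))                # ('130.215.32.18', 53)
    print(len(parse_ns(SAMPLE)["tsinghua.edu.cn"]))  # 4
```

The `#53` suffix is split off as the port, matching the note that the DNS service runs on port 53.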

VNC User Guide: About This Guide

VNC® User Guide
Version 5.3, December 2015

Trademarks
RealVNC, VNC and RFB are trademarks of RealVNC Limited and are protected by trademark registrations and/or pending trademark applications in the European Union, United States of America and other jurisdictions. Other trademarks are the property of their respective owners.
Protected by UK patent 2481870; US patent 8760366

Copyright
Copyright © RealVNC Limited, 2002-2015. All rights reserved. No part of this documentation may be reproduced in any form or by any means or be used to make any derivative work (including translation, transformation or adaptation) without explicit written consent of RealVNC.

Confidentiality
All information contained in this document is provided in commercial confidence for the sole purpose of use by an authorized user in conjunction with RealVNC products. The pages of this document shall not be copied, published, or disclosed wholly or in part to any party without RealVNC's prior permission in writing, and shall be held in safe custody. These obligations shall not apply to information which is published or becomes known legitimately from some source other than RealVNC.

Contact
RealVNC Limited
Betjeman House
104 Hills Road
Cambridge CB2 1LQ
United Kingdom
www.realvnc.com

Contents
About This Guide 7
Chapter 1: Introduction 9
  Principles of VNC remote control 10
  Getting two computers ready to use 11
  Connectivity and feature matrix 13
  What to read next 17
Chapter 2: Getting Connected 19
  Step 1: Ensure VNC Server is running on the host computer 20
  Step 2: Start VNC

How Will You Troubleshoot the Issue? What Are the Steps to Be Followed?

1. A user in a corporate network contacts the service desk saying he/she has lost network connectivity. How will you troubleshoot the issue? What are the steps to be followed?
A. First, I will check whether the network cable is plugged in. Then I will check the network connections and whether an IP address has been assigned. Then I will check whether the computer can connect to a website, and whether there is an IP address conflict.

2. A user calls in and complains that her computer and network are running very slowly. How would you go about troubleshooting it?
A.

3. How would you create an email account for a user already in AD?
A. If you are using Office 2000, open Microsoft Outlook and click the "Tools" menu. Go to "Email Accounts". There you will find two options, Email and Directory. Click "Add a new Account" and click Next. If you are using Exchange Server, select it there; this depends on the particular organization, so choose according to its setup. If you are using a POP3 server, the next dialog asks for your name, email address, POP3 and SMTP server IP addresses, password, etc. After that, click Next and then Finish.

4. A PC did not receive an update from SMS. What steps would you take to resolve this?
A. If the SMS update did not reach the client system:
   1. Check whether the system is getting an IP address.
   2. Check whether the system is in the domain.
   3. Ensure that Windows Firewall is off.

5. How do you set the IP address by using the command prompt?
A.
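The order of checks in answer 1 (cable, then IP assignment, then conflicts and reachability) can be sketched as a small decision function. This is a hypothetical helper over inputs a script would have to gather separately (link state, the adapter's IPv4 address, and whether Windows flagged the address as a duplicate); it adds one well-known check: an address in 169.254.0.0/16 is a self-assigned APIPA address, meaning DHCP did not answer.

```python
"""Sketch: the first-line connectivity checks from answer 1 as a decision function.

diagnose() and its inputs are hypothetical; gathering link state, the adapter's
address, and the duplicate flag is left to other tools.
"""
import ipaddress
from typing import Optional


def diagnose(link_up: bool, ipv4: Optional[str], duplicate: bool = False) -> str:
    if not link_up:
        return "check cable / link"
    if ipv4 is None:
        return "no IPv4 address assigned"
    addr = ipaddress.IPv4Address(ipv4)
    if addr.is_link_local:  # 169.254.0.0/16: Windows APIPA, DHCP did not respond
        return "self-assigned (APIPA) address: DHCP unreachable"
    if duplicate:
        return "IP address conflict"
    return "local config looks OK: test reachability next"


if __name__ == "__main__":
    print(diagnose(True, "169.254.10.20"))  # self-assigned (APIPA) address: DHCP unreachable
```

The cheap physical check comes first because every later test depends on a working link; the APIPA check separates "no DHCP lease" from a genuine address problem before any website or ping test is attempted.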