Scribe Notes

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

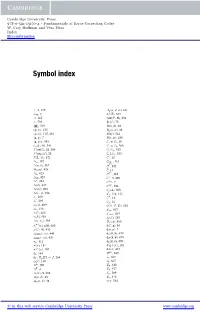

Symbol Index

Cambridge University Press 978-0-521-13170-4 - Fundamentals of Error-Correcting Codes W. Cary Huffman and Vera Pless Index More information Symbol index ⊥,5,275 Aq (n, d,w), 60 n ⊥H ,7 A (F), 517 , 165 Aut(P, B), 292 , 533 Bi (C), 75 a , p , 219 B(n d), 48 (g(x)), 125 Bq (n, d), 48 g(x) , 125, 481 B(G), 564 x, y ,7 B(t, m), 439 x, y T , 383 C1 ⊕ C2,18 αq (δ), 89, 541 C1 C2, 368 Aut(C), 26, 384 Ci /C j , 353 AutPr(C), 28 Ci ⊥C j , 353 (L, G), 521 C∗,13 μ, 187 C| , 116 Fq (n, k), 567 Cc, 145 (q), 426 C,14 2, 423 C(L), 565 ⊥ 24, 429 C ,5,469 ∗ ⊥ , 424 C H ,7 C ⊥ ( ), 427 C T , 384 C 4( ), 503 Cq (A), 308 x, y ( ), 196 Cs , 114, 122 λ i , 293 CT ,14 λ j i , 295 CT ,16 λ i (x), 609 C(X , P, D), 535 μ a , 138 d2m , 367 ν C ( ), 465 d free, 563 ν P ( ), 584 dr (C), 283 ν s , s ( i j ), 584 Dq (A), 309 π (n) C, i (x), 608, 610 d( x), 65 ρ(C), 50, 432 d(x, y), 7 , ρBCH(t, m), 440 dE (x y), 470 , ρRM(r, m), 437 dH (x y), 470 , σp, 111 dL (x y), 470 σ (x), 181 deg f (x), 101 σ (μ)(x), 187 det , 423 Dext φs , 164 , 249 φs : Fq [I] → I, 164 e7, 367 ω(x), 190 e8, 367 A , 309 E8, 428 T A ,4 Eb, 577 E Ai (C), 8, 252 n, 209 A(n, d), 48 Es , 573 Aq (n, d), 48 evP , 534 © in this web service Cambridge University Press www.cambridge.org Cambridge University Press 978-0-521-13170-4 - Fundamentals of Error-Correcting Codes W. -

Sequence Distance Embeddings

Sequence Distance Embeddings by Graham Cormode Thesis Submitted to the University of Warwick for the degree of Doctor of Philosophy Computer Science January 2003 Contents List of Figures vi Acknowledgments viii Declarations ix Abstract xi Abbreviations xii Chapter 1 Starting 1 1.1 Sequence Distances . ....................................... 2 1.1.1 Metrics ............................................ 3 1.1.2 Editing Distances ...................................... 3 1.1.3 Embeddings . ....................................... 3 1.2 Sets and Vectors ........................................... 4 1.2.1 Set Difference and Set Union . .............................. 4 1.2.2 Symmetric Difference . .................................. 5 1.2.3 Intersection Size ...................................... 5 1.2.4 Vector Norms . ....................................... 5 1.3 Permutations ............................................ 6 1.3.1 Reversal Distance ...................................... 7 1.3.2 Transposition Distance . .................................. 7 1.3.3 Swap Distance ....................................... 8 1.3.4 Permutation Edit Distance . .............................. 8 1.3.5 Reversals, Indels, Transpositions, Edits (RITE) . .................... 9 1.4 Strings ................................................ 9 1.4.1 Hamming Distance . .................................. 10 1.4.2 Edit Distance . ....................................... 10 1.4.3 Block Edit Distances . .................................. 11 1.5 Sequence Distance Problems . ................................. -

Reed-Solomon Codes

Foreword This chapter is based on lecture notes from coding theory courses taught by Venkatesan Gu- ruswami at University at Washington and CMU; by Atri Rudra at University at Buffalo, SUNY and by Madhu Sudan at MIT. This version is dated May 8, 2015. For the latest version, please go to http://www.cse.buffalo.edu/ atri/courses/coding-theory/book/ The material in this chapter is supported in part by the National Science Foundation under CAREER grant CCF-0844796. Any opinions, findings and conclusions or recomendations ex- pressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation (NSF). ©Venkatesan Guruswami, Atri Rudra, Madhu Sudan, 2013. This work is licensed under the Creative Commons Attribution-NonCommercial- NoDerivs 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/3.0/ or send a letter to Creative Commons, 444 Castro Street, Suite 900, Mountain View, California, 94041, USA. Chapter 5 The Greatest Code of Them All: Reed-Solomon Codes In this chapter, we will study the Reed-Solomon codes. Reed-Solomoncodeshavebeenstudied a lot in coding theory. These codes are optimal in the sense that they meet the Singleton bound (Theorem 4.3.1). We would like to emphasize that these codes meet the Singleton bound not just asymptotically in terms of rate and relative distance but also in terms of the dimension, block length and distance. As if this were not enough, Reed-Solomon codes turn out to be more versatile: they have many applications outside of coding theory. -

Bilinear Forms Over a Finite Field, with Applications to Coding Theory

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Elsevier - Publisher Connector JOURNAL OF COMBINATORIAL THEORY, Series A 25, 226-241 (1978) Bilinear Forms over a Finite Field, with Applications to Coding Theory PH. DELSARTE MBLE Research Laboratory, Brussels, Belgium Communicated by J. H. can Lint Received December 7, 1976 Let 0 be the set of bilinear forms on a pair of finite-dimensional vector spaces over GF(q). If two bilinear forms are associated according to their q-distance (i.e., the rank of their difference), then Q becomes an association scheme. The characters of the adjacency algebra of Q, which yield the MacWilliams transform on q-distance enumerators, are expressed in terms of generalized Krawtchouk polynomials. The main emphasis is put on subsets of s2 and their q-distance structure. Certain q-ary codes are attached to a given XC 0; the Hamming distance enumerators of these codes depend only on the q-distance enumerator of X. Interesting examples are provided by Singleton systems XC 0, which are defined as t-designs of index 1 in a suitable semilattice (for a given integer t). The q-distance enumerator of a Singleton system is explicitly determined from the parameters. Finally, a construction of Singleton systems is given for all values of the parameters. 1. INTRODUCTION Classical coding theory may be introduced as follows. Let r denote a finite-dimensional vector space over the finite field K. The Hamming weight wt(f) of a vector f E r is the number of nonzero coordinates off in a fixed K-basis of lY Then (r, wt) is a normed space, called Hamming space; and a code simply is a nonempty subset of r endowed with the Hamming distance attached to wt. -

The Chromatic Number of the Square of the 8-Cube

The chromatic number of the square of the 8-cube Janne I. Kokkala∗ and Patric R. J. Osterg˚ard¨ † Department of Communications and Networking Aalto University School of Electrical Engineering P.O. Box 13000, 00076 Aalto, Finland Abstract A cube-like graph is a Cayley graph for the elementary abelian group of order 2n. In studies of the chromatic number of cube-like k graphs, the kth power of the n-dimensional hypercube, Qn, is fre- quently considered. This coloring problem can be considered in the k framework of coding theory, as the graph Qn can be constructed with one vertex for each binary word of length n and edges between ver- tices exactly when the Hamming distance between the corresponding k words is at most k. Consequently, a proper coloring of Qn corresponds to a partition of the n-dimensional binary Hamming space into codes with minimum distance at least k + 1. The smallest open case, the 2 chromatic number of Q8, is here settled by finding a 13-coloring. Such 13-colorings with specific symmetries are further classified. 1 Introduction arXiv:1607.01605v1 [math.CO] 6 Jul 2016 A cube-like graph is a Cayley graph for the elementary abelian group of order 2n. One of the original motivations for studying cube-like graphs was the fact that they have only integer eigenvalues [5]. Cube-like graphs also form a generalization of the hypercube. ∗Supported by Aalto ELEC Doctoral School, Nokia Foundation, Emil Aaltonen Foun- dation, and by Academy of Finland Project 289002. †Supported in part by Academy of Finland Project 289002. -

Fundamentals of Error-Correcting Codes W

Cambridge University Press 978-0-521-13170-4 - Fundamentals of Error-Correcting Codes W. Cary Huffman and Vera Pless Frontmatter More information Fundamentals of Error-Correcting Codes Fundamentals of Error-Correcting Codes is an in-depth introduction to coding theory from both an engineering and mathematical viewpoint. As well as covering classical topics, much coverage is included of recent techniques that until now could only be found in special- ist journals and book publications. Numerous exercises and examples and an accessible writing style make this a lucid and effective introduction to coding theory for advanced undergraduate and graduate students, researchers and engineers, whether approaching the subject from a mathematical, engineering, or computer science background. Professor W. Cary Huffman graduated with a PhD in mathematics from the California Institute of Technology in 1974. He taught at Dartmouth College and Union College until he joined the Department of Mathematics and Statistics at Loyola in 1978, serving as chair of the department from 1986 through 1992. He is an author of approximately 40 research papers in finite group theory, combinatorics, and coding theory, which have appeared in journals such as the Journal of Algebra, IEEE Transactions on Information Theory, and the Journal of Combinatorial Theory. Professor Vera Pless was an undergraduate at the University of Chicago and received her PhD from Northwestern in 1957. After ten years at the Air Force Cambridge Research Laboratory, she spent a few years at MIT’s project MAC. She joined the University of Illinois-Chicago’s Department of Mathematics, Statistics, and Computer Science as a full professor in 1975 and has been there ever since. -

Constructing Covering Codes

Research Collection Master Thesis Constructing Covering Codes Author(s): Filippini, Luca Teodoro Publication Date: 2016 Permanent Link: https://doi.org/10.3929/ethz-a-010633987 Rights / License: In Copyright - Non-Commercial Use Permitted This page was generated automatically upon download from the ETH Zurich Research Collection. For more information please consult the Terms of use. ETH Library Constructing Covering Codes Master Thesis Luca Teodoro Filippini Thursday 24th March, 2016 Advisors: Prof. Dr. E. Welzl, C. Annamalai Department of Theoretical Computer Science, ETH Z¨urich Abstract Given r, n N, the problem of constructing a set C 0,1 n such that every∈ element in 0,1 n has Hamming distance at⊆ most { }r from some element in C is called{ }the covering code construction problem. Con- structing a covering code of minimal size is such a hard task that even for r = 1, n = 10 we don’t know the exact size of the minimal code. Therefore, approximations are often studied and employed. Among the several applications that such a construction has, it plays a key role in one of the fastest 3-SAT algorithms known to date. The main contribution of this thesis is presenting a Las Vegas algorithm for constructing a covering code with linear radius, derived from the famous Monte Carlo algorithm of random codeword sampling. Our algorithm is faster than the deterministic algorithm presented in [5] by a cubic root factor of the polynomials involved. We furthermore study the problem of determining the covering radius of a code: it was already proven -complete for r = n/2, and we extend the proof to a wider range ofN radii. -

An Introduction to Coding Theory

An introduction to coding theory Adrish Banerjee Department of Electrical Engineering Indian Institute of Technology Kanpur Kanpur, Uttar Pradesh India Feb. 6, 2017 Lecture #7A: Bounds on the size of a code Adrish Banerjee Department of Electrical Engineering Indian Institute of Technology Kanpur Kanpur, Uttar Pradesh India An introduction to coding theory Outline of the lecture Hamming bound Adrish Banerjee Department of Electrical Engineering Indian Institute of Technology Kanpur Kanpur, Uttar Pradesh India An introduction to coding theory Outline of the lecture Hamming bound Perfect codes Adrish Banerjee Department of Electrical Engineering Indian Institute of Technology Kanpur Kanpur, Uttar Pradesh India An introduction to coding theory Outline of the lecture Hamming bound Perfect codes Singleton bound Adrish Banerjee Department of Electrical Engineering Indian Institute of Technology Kanpur Kanpur, Uttar Pradesh India An introduction to coding theory Outline of the lecture Hamming bound Perfect codes Singleton bound Maximum distance separable codes Adrish Banerjee Department of Electrical Engineering Indian Institute of Technology Kanpur Kanpur, Uttar Pradesh India An introduction to coding theory Outline of the lecture Hamming bound Perfect codes Singleton bound Maximum distance separable codes Plotkin Bound Adrish Banerjee Department of Electrical Engineering Indian Institute of Technology Kanpur Kanpur, Uttar Pradesh India An introduction to coding theory Outline of the lecture Hamming bound Perfect codes Singleton bound Maximum distance separable codes Plotkin Bound Gilbert-Varshamov bound Adrish Banerjee Department of Electrical Engineering Indian Institute of Technology Kanpur Kanpur, Uttar Pradesh India An introduction to coding theory Bounds on the size of a code The basic problem is to find the largest code of a given length, n and minimum distance, d. -

Optimal Codes and Entropy Extractors

Department of Mathematics Ph.D. Programme in Mathematics XXIX cycle Optimal Codes and Entropy Extractors Alessio Meneghetti 2017 University of Trento Ph.D. Programme in Mathematics Doctoral thesis in Mathematics OPTIMAL CODES AND ENTROPY EXTRACTORS Alessio Meneghetti Advisor: Prof. Massimiliano Sala Head of the School: Prof. Valter Moretti Academic years: 2013{2016 XXIX cycle { January 2017 Acknowledgements I thank Dr. Eleonora Guerrini, whose Ph.D. Thesis inspired me and whose help was crucial for my research on Coding Theory. I also thank Dr. Alessandro Tomasi, without whose cooperation this work on Entropy Extraction would not have come in the present form. Finally, I sincerely thank Prof. Massimiliano Sala for his guidance and useful advice over the past few years. This research was funded by the Autonomous Province of Trento, Call \Grandi Progetti 2012", project On silicon quantum optics for quantum computing and secure communications - SiQuro. i Contents List of Figures......................v List of Tables....................... vii Introduction1 I Codes and Random Number Generation7 1 Introduction to Coding Theory9 1.1 Definition of systematic codes.............. 16 1.2 Puncturing, shortening and extending......... 18 2 Bounds on code parameters 27 2.1 Sphere packing bound.................. 29 2.2 Gilbert bound....................... 29 2.3 Varshamov bound.................... 30 2.4 Singleton bound..................... 31 2.5 Griesmer bound...................... 33 2.6 Plotkin bound....................... 34 2.7 Johnson bounds...................... 36 2.8 Elias bound........................ 40 2.9 Zinoviev-Litsyn-Laihonen bound............ 42 2.10 Bellini-Guerrini-Sala bounds............... 43 iii 3 Hadamard Matrices and Codes 49 3.1 Hadamard matrices.................... 50 3.2 Hadamard codes..................... 53 4 Introduction to Random Number Generation 59 4.1 Entropy extractors................... -

Optimal Locally Repairable Codes of Distance 3 and 4 Via Cyclic Codes

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by CWI's Institutional Repository Optimal locally repairable codes of distance 3 and 4 via cyclic codes Yuan Luo,∗ Chaoping Xing and Chen Yuan y Abstract Like classical block codes, a locally repairable code also obeys the Singleton-type bound (we call a locally repairable code optimal if it achieves the Singleton-type bound). In the breakthrough work of [14], several classes of optimal locally repairable codes were constructed via subcodes of Reed-Solomon codes. Thus, the lengths of the codes given in [14] are upper bounded by the code alphabet size q. Recently, it was proved through extension of construction in [14] that length of q-ary optimal locally repairable codes can be q + 1 in [7]. Surprisingly, [2] presented a few examples of q-ary optimal locally repairable codes of small distance and locality with code length achieving roughly q2. Very recently, it was further shown in [8] that there exist q-ary optimal locally repairable codes with length bigger than q + 1 and distance propositional to n. Thus, it becomes an interesting and challenging problem to construct new families of q-ary optimal locally repairable codes of length bigger than q + 1. In this paper, we construct a class of optimal locally repairable codes of distance 3 and 4 with un- bounded length (i.e., length of the codes is independent of the code alphabet size). Our technique is through cyclic codes with particular generator and parity-check polynomials that are carefully chosen. -

Algebraic Geometric Codes: Basic Notions Mathematical Surveys and Monographs

http://dx.doi.org/10.1090/surv/139 Algebraic Geometric Codes: Basic Notions Mathematical Surveys and Monographs Volume 139 Algebraic Geometric Codes: Basic Notions Michael Tsfasman Serge Vladut Dmitry Nogin American Mathematical Society EDITORIAL COMMITTEE Jerry L. Bona Peter S. Landweber Michael G. Eastwood Michael P. Loss J. T. Stafford, Chair Our research while working on this book was supported by the French National Scien tific Research Center (CNRS), in particular by the Inst it ut de Mathematiques de Luminy and the French-Russian Poncelet Laboratory, by the Institute for Information Transmis sion Problems, and by the Independent University of Moscow. It was also supported in part by the Russian Foundation for Basic Research, projects 99-01-01204, 02-01-01041, and 02-01-22005, and by the program Jumelage en Mathematiques. 2000 Mathematics Subject Classification. Primary 14Hxx, 94Bxx, 14G15, 11R58; Secondary 11T23, 11T71. For additional information and updates on this book, visit www.ams.org/bookpages/surv-139 Library of Congress Cataloging-in-Publication Data Tsfasman, M. A. (Michael A.), 1954- Algebraic geometry codes : basic notions / Michael Tsfasman, Serge Vladut, Dmitry Nogin. p. cm. — (Mathematical surveys and monographs, ISSN 0076-5376 ; v. 139) Includes bibliographical references and index. ISBN 978-0-8218-4306-2 (alk. paper) 1. Coding theory. 2. Number theory. 3. Geometry, Algebraic. I. Vladut, S. G. (Serge G.), 1954- II. Nogin, Dmitry, 1966- III. Title. QA268 .T754 2007 003'.54—dc22 2007061731 Copying and reprinting. Individual readers of this publication, and nonprofit libraries acting for them, are permitted to make fair use of the material, such as to copy a chapter for use in teaching or research. -

ELEC 619A Selected Topics in Digital Communications: Information Theory

ELEC 519A Selected Topics in Digital Communications: Information Theory Hamming Codes and Bounds on Codes Single Error Correcting Codes 2 Hamming Codes • (7,4,3) Hamming code 1 0 0 0 0 1 1 0 1 0 0 1 0 1 GIP | 0 0 1 0 1 1 0 0 0 0 1 1 1 1 • (7,3,4) dual code 0 1 1 1 1 0 0 T HPI -| 1011010 1 1 0 1 0 0 1 3 • The minimum distance of a code C is equal to the minimum number of columns of H which sum to zero • For any codeword c h0 h T 1 cH [c,c,...,c01 n1 ] ch 0011 ch ...c n1n1 h 0 hn1 where ho, h1, …, hn-1 are the column vectors of H T • cH is a linear combination of the columns of H 4 Significance of H • For a codeword of weight w (w ones), cHT is a linear combination of w columns of H. • Thus there is a one-to-one mapping between weight w codewords and linear combinations of w columns of H that sum to 0. • The minimum value of w which results in cHT=0, i.e., codeword c with weight w, determines that dmin = w 5 Distance 1 and 2 Codes • (5,2,1) code 10100 10110 HG= 10010 = 01000 00001 • (5,2,2) codes 11100 10110 HG= 11010 = 01110 00001 6 (7,4,3) Code • A codeword with weight dmin = 3 is given by the first row of G: c = 1000011 • The linear combination of the first and last 2 columns in H gives T T T T (011) +(010) +(001) = (000) • Thus a minimum of 3 columns (= dmin) are required to get a zero value for cHT 7 Binary Hamming Codes Let m be an integer and H be an m (2m - 1) matrix with columns which are the non-zero distinct elements from Vm.