January 20, 2019 Brynne O'neal Professor Nathaniel Persily Free

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

The Truth Can Catch the Lie: The Flawed Understanding of Online Speech in In re Anonymous Online Speakers Musetta Durkee

THE TRUTH CAN CATCH THE LIE: THE FLAWED UNDERSTANDING OF ONLINE SPEECH IN IN RE ANONYMOUS ONLINE SPEAKERS
Musetta Durkee†

In the early years of the Internet, many cases seeking disclosure of anonymous online speakers involved large companies seeking to unveil identities of anonymous posters criticizing the companies on online financial message boards.1 In such situations, Internet service providers (ISPs)--or, less often, online service providers (OSPs)2--disclosed individually identifying information, often without providing defendants notice of this disclosure,3 and judges showed hostility towards defendants' motions to quash.4 Often these subpoenas were issued by parties alleging various civil causes of action, including defamation and tortious interference with business contracts.5 These cases occurred in a time when courts and the general public alike pictured the Internet as a wild west-like "frontier society,"6 devoid of governing norms, where anonymous personalities ran

© 2011 Musetta Durkee.
† J.D. Candidate, 2012, University of California, Berkeley School of Law.
1. Lyrissa Barnett Lidsky, Anonymity in Cyberspace: What Can We Learn from John Doe?, 50 B.C. L. Rev. 1373, 1373-74 (2009).
2. As will be discussed below, there has been some conflation of two separate services that allow users to access the Internet, on the one hand, and engage in various activities, speech, and communication, on the other. Most basically, this Note distinguishes between Internet Service Providers (ISPs) and Online Service Providers (OSPs) in order to separate those services and companies involved with providing subscribers access to the infrastructure of the Internet (ISPs, and their subscribers) and those services and companies involved with providing users with online applications, services, platforms, and spaces on the Internet (OSPs and their users).

Social Media Why You Should Care What Is Social Media? Social Network

Social Media: Why You Should Care. IST 331 - Olivier Georgeon, Frank Ritter, 31 Oct 15 • eMarketer (2007) estimated by 2011 one-half of all Internet users will use social networking regularly. • By 2015, 75% use

Examples • Facebook • YouTube • Myspace • Twitter • Del.icio.us • Digg • Etc…

What is Social Media? • Social Network • User Generated Content (UGC) • Social Bookmarking • …

Social Network • Online communities of people who share interests and activities, or who are interested in exploring the interests and activities of others. • Examples: Facebook, MySpace, LinkedIn, Orkut • Falls to analysis with tools in Ch. 9

User Generated Content (UGC) • or Consumer Generated Media (CGM) • Defined: Media content that is publicly available and produced by end-users (user). • Usually supported by a social network • Examples: Blogs, Micro-blogs, YouTube video, Flickr photos, Wiki content, Facebook wall posts, reddit, Second Life…

Social Bookmarking • A method for Internet users to store, organize, search, and manage bookmarks of web pages on the Internet with the help of metadata. • Based on communities; – The more people who bookmark a piece of content, the more value it is determined to have. • Examples: Digg, Del.icio.us, StumbleUpon, and reddit….and now combinations

Social Media Principles • Who you are – Personalization • Who you know – Browse network • What you do – Generate an activity stream

Generate an activity stream • Automatic – Google History, Google Analytics • Blog • Micro-blog – Twitter, yammer, identi.ca • Mailing groups

Network Overlap and Content Sharing on Social Media Platforms

Network Overlap and Content Sharing on Social Media Platforms

Jing Peng1, Ashish Agarwal2, Kartik Hosanagar3, Raghuram Iyengar3
1School of Business, University of Connecticut
2McCombs School of Business, University of Texas at Austin
3The Wharton School, University of Pennsylvania
[email protected] [email protected] {kartikh, riyengar}@wharton.upenn.edu
February 15, 2017

Acknowledgments. We benefited from feedback from session participants at 2013 Symposium on Statistical Challenges in eCommerce Research, 2014 International Conference on Information Systems, 2015 Workshop on Information in Networks, 2015 INFORMS Annual Meeting, and 2015 Workshop on Information Systems and Economics. The authors would like to thank Professors Christophe Van den Bulte, Paul Shaman, and Dylan Small for helpful discussions. This project was made possible by financial support extended to the first author through Mack Institute Research Fellowship, President Gutmann's Leadership Award, and Baker Retailing Center PhD Research Grant.

ABSTRACT
We study the impact of network overlap – the overlap in network connections between two users – on content sharing in directed social media platforms. We propose a hazards model that flexibly captures the impact of three different measures of network overlap (i.e., common followees, common followers and common mutual followers) on content sharing. Our results indicate a receiver is more likely to share content from a sender with whom they share more common followees, common followers or common mutual followers after accounting for other measures. Additionally, the effect of common followers and common mutual followers is positive when the content is novel but decreases, and may even become negative, when many others in the network have already adopted it.

Facebook Timeline

Facebook Timeline

2003
October
• Mark Zuckerberg releases Facemash, the predecessor to Facebook. It was described as a Harvard University version of Hot or Not.

2004
January
• Zuckerberg begins writing Facebook.
• Zuckerberg registers thefacebook.com domain.
February
• Zuckerberg launches Facebook on February 4. 650 Harvard students joined thefacebook.com in the first week of launch.
March
• Facebook expands to MIT, Boston University, Boston College, Northeastern University, Stanford University, Dartmouth College, Columbia University, and Yale University.
April
• Zuckerberg, Dustin Moskovitz, and Eduardo Saverin form Thefacebook.com LLC, a partnership.
June
• Facebook receives its first investment from PayPal co-founder Peter Thiel for US$500,000.
• Facebook incorporates into a new company, and Napster co-founder Sean Parker becomes its president.
• Facebook moves its base of operations to Palo Alto, California.
August
• To compete with growing campus-only service i2hub, Zuckerberg launches Wirehog. It is a precursor to Facebook Platform applications.
September
• ConnectU files a lawsuit against Zuckerberg and other Facebook founders, resulting in a $65 million settlement.
October
• Maurice Werdegar of WTI Partner provides Facebook a $300,000 three-year credit line.
December
• Facebook achieves its one millionth registered user.

2005
February
• Maurice Werdegar of WTI Partner provides Facebook a second $300,000 credit line and a $25,000 equity investment.
April
• Venture capital firm Accel Partners invests $12.7 million into Facebook. Accel’s partner and President Jim Breyer also puts up $1 million of his own money.

N. Lee, Facebook Nation, DOI: 10.1007/978-1-4614-5308-6, © Springer Science+Business Media New York 2013

Participatory Porn Culture Feminist Positions and Oppositions in the Internet Pornosphere Allegra W

from Paul G. Nixon and Isabel K. Düsterhöft (Eds.) Sex in the Digital Age. New York: Routledge, 2018.

CHAPTER 2
Participatory porn culture: Feminist positions and oppositions in the internet pornosphere
Allegra W. Smith

Introductions: pornography, feminisms, and ongoing debate

Though first-wave feminists had engaged in debate and advocacy surrounding sexual knowledge and obscenity since the late 19th century (Horowitz, 2003), American advocates did not begin to address pornography as a feminist concern until the 1970s, ostensibly beginning with the formation of the activist group Women Against Violence in Pornography and Media (WAVPM) in 1978. WAVPM was a reactionary response to the mainstreaming of hardcore pornography in the wake of American sexual liberation, which many second-wave feminists claimed “…encouraged rape and other acts of violence, threatening women’s safety and establishing a climate of terror that silenced women and perpetuated their oppression… [thus helping] create and maintain women’s subordinate status” (Bronstein, 2011, pp. 178–179). The resulting “porn wars” (or, more broadly, “sex wars”) of the 1980s and 1990s polarized second- and third-wave feminists into two camps: (1) anti-porn feminists, such as writer Andrea Dworkin (1981) and legal scholar Catharine MacKinnon (1984), who opposed the creation and distribution of pornographic images and videos on moral and political grounds; and (2) pro-porn or sex-positive feminists, such as anthropologist Gayle Rubin and feminist pornographer Candida Royalle, who advocated for freedom of sexual expression and representation. The rhetoric surrounding the social and cultural ills perpetuated by pornography was duly acrimonious, with one prominent feminist writing, “pornography is the theory, rape is the practice” (Morgan, 1978, p.

Criminalization Downloads Evil: Reexamining the Approach to Electronic Possession When Child Pornography Goes International

CRIMINALIZATION DOWNLOADS EVIL: REEXAMINING THE APPROACH TO ELECTRONIC POSSESSION WHEN CHILD PORNOGRAPHY GOES INTERNATIONAL
Asaf Harduf*

INTRODUCTION
I. THE LADDER OF CRIMINALIZATION
   A. The Matter of Criminalization
   B. The Rungs of the Ladder of Criminalization
      1. First Rung: Identifying the Conduct, Causation, and Harm
      2. Second Rung: Examining the Ability to Achieve Goals
      3. Third Rung: Examining Alternatives to Criminalization
      4. Fourth Rung: Assessing the Social Costs of Solutions and Striking a Balance
   C. Towards an Analysis of Child Pornography Possession
II. APPLICATION TO THE ELECTRONIC POSSESSION OF CHILD PORNOGRAPHY
   A. First Rung: The Offensive Conduct of Electronic Possession
      1. Conduct of Electronic Possession
      2. Harms to Children
      3. Causation: Four Possible Links
      4. Offensiveness: Summation
   B. Second Rung: Criminal Law’s Ability to Reduce Harm to Children

A Case Study of the Reddit Community

An Exploration of Discussion Threads in Social News Sites: A Case Study of the Reddit Community

Tim Weninger, Xihao Avi Zhu, Jiawei Han
Department of Computer Science, University of Illinois Urbana-Champaign, Urbana, Illinois 61801
Email: fweninge1, zhu40, [email protected]

Abstract: Social news and content aggregation Web sites have become massive repositories of valuable knowledge on a diverse range of topics. Millions of Web-users are able to leverage these platforms to submit, view and discuss nearly anything. The users themselves exclusively curate the content with an intricate system of submissions, voting and discussion. Furthermore, the data on social news Web sites is extremely well organized by its user-base, which opens the door for opportunities to leverage this data for other purposes just like Wikipedia data has been used for many other purposes. In this paper we study a popular social news Web site called Reddit. Our investigation looks at the dynamics of its discussion threads, and asks two main questions: (1) to what extent do discussion threads resemble a topical hierarchy? and (2) Can discussion threads be used to enhance Web search? We show interesting results for these questions on a very large snapshot of several sub-communities of the Reddit Web site.

submission. These comment threads provide a user-generated and user-curated commentary on the topic at hand. Unlike message board or Facebook-style comments that list comments in a flat, chronological order, or Twitter discussions that are person-to-person and oftentimes difficult to discern, comment threads in the social news paradigm are permanent (although editable), well-formed and hierarchical. The hierarchical nature of comment threads, where the discussion structure resembles a tree, is especially important because this allows divergent sub-topics resulting in a more robust overall discussion.

In this paper we explore the social news site Reddit in order to gain a deeper understanding on the social, temporal, and topical methods that allow these types of user-powered Web

Zach Christopherson PHIL 308 Term Paper March 22, 2009

Zach Christopherson
PHIL 308 Term Paper
March 22, 2009

Online Communities and the Ethics of Revolt

With the creation and widespread use of the Internet in the 1980s came Internet communities. They were originally started in newsgroups, message boards, and Internet forums. A regular group of members would consistently post and exchange ideas and information with one another. Relationships were formed and these communities became permanent entities, usually remaining on the sites where they were created. From time to time a community’s home becomes threatened. This can occur when a site is taken down by the owners, the site becomes obsolete and is replaced by a competitor, or the community migrates to a new home for reasons like censorship or discrimination. Some of the largest online communities exist on message board sites such as GenMay.com (General Mayhem), LiveJournal.com, and 4chan.org. These members share common likes and dislikes which bring them together for general socialization. Over the span of a community’s life differences can arise between the site administrators and the site community that can cause a revolt by the community against the website on which it was created.

One of the most popular social news websites on the Internet is Digg.com, which was launched on December 4, 2004 and has housed a strong Internet community since then. The purpose of Digg is to compile many user-submitted news stories and have the community either rate them up (Digg them) or rate them down (Bury them). These ratings are stored on the site and a running counter is shown to the left of the news article title.

PANAHI-.Pdf (1.237Mb)

© Copyright by Hesam Panahi, 2010

USER-GENERATED CONTENT AND REVOLUTIONS: TOWARDS A THEORIZATION OF WEB 2.0

A Dissertation Presented to The Faculty of the C.T. Bauer College of Business, University of Houston, In Partial Fulfillment Of the Requirements for the Degree Doctor of Philosophy
By Hesam Panahi
December, 2010

Introduction
   Dissertation's Research Objective
   Dissertation's Research Methodology
   References
Paper One: A Critical Study of Social Computing and Web 2.0: The Case of Digg
   Introduction
   Theoretical Background
      Critical Studies of IS
      A Critical Framework for Studying Social Computing
   Research Methods
      Why an Interpretive Approach

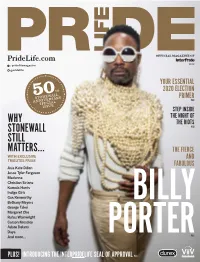

Why Stonewall Still Matters…

PrideLife Magazine 2019 / pridelifemagazine 2019 @pridelife

stonewall 50th anniversary special issue
YOUR ESSENTIAL 2020 ELECTION PRIMER P.68
STEP INSIDE THE NIGHT OF THE RIOTS P.50
WHY STONEWALL STILL MATTERS… WITH EXCLUSIVE TRIBUTES FROM Asia Kate Dillon, Jesse Tyler Ferguson, Madonna, Christian Siriano, Kamala Harris, Indigo Girls, Gus Kenworthy, Bethany Meyers, George Takei, Margaret Cho, Rufus Wainwright, Carson Kressley, Adore Delano, Daya, And more...
THE FIERCE AND FABULOUS BILLY PORTER P.46
PLUS! INTRODUCING THE INTERPRIDELIFE SEAL OF APPROVAL P.14

SO MUCH GOES INTO WHO I AM

Important Facts About DOVATO
This is only a brief summary of important information about DOVATO and does not replace talking to your healthcare provider about your condition and treatment.

What is the Most Important Information I Should Know about DOVATO?
If you have both human immunodeficiency virus-1 (HIV-1) and hepatitis B virus (HBV) infection, DOVATO can cause serious side

Tell your healthcare provider about all of your medical conditions, including if you: (cont’d)
• are breastfeeding or plan to breastfeed. Do not breastfeed if you take DOVATO.
  ° You should not breastfeed if you have HIV-1 because of the risk of passing HIV-1 to your baby.
  ° One of the medicines in DOVATO (lamivudine) passes into your breastmilk.
  ° Talk with your healthcare provider about the best way to feed your baby.

Will the Quants Invade Sand Hill Road? | Venturebeat

Will the quants invade Sand Hill Road? | VentureBeat http://venturebeat.com/2010/10/21/will-the-quants-invade-sand-...

Will the quants invade Sand Hill Road?
October 21, 2010 | Alex Salkever

Will the quants storm the clubby bastions of the venture capital world? That question has been coming up frequently of late. At the Demo 2010 conference, InLab Ventures rolled out a new venture capital program it dubbed VC3.0 that included elimination of carry fees and incentives for board members to spend time with invested startups. Most importantly, though, the InLab Ventures platform features back-tested screening technology that InLab claims can quickly and accurately identify which startups have a greater chance of success even before initial funding occurs. In other words, InLab says it has a mathematical and statistical model that can predict startup success. Apparently the technology is decent, as InLab general partner Greg Doyle told me that at least one major consulting firm doing research into VC markets has licensed the model for due diligence purposes.

If InLab’s magical model works and math can replace that vaunted gut judgment that to date has been a critical criterion for deciding whether to invest in a startup, then why should VCs be using their gut to pick investments at all? (a point raised by VC gadfly Paul Kedrosky). Why not let the quants, the propeller-heads that designed trading models for Wall Street and for other hard-to-value asset classes do the same for Silicon Valley?

30-Minute Social Media Marketing

30-MINUTE SOCIAL MEDIA MARKETING
Step-by-Step Techniques to Spread the Word About Your Business FAST AND FREE
Susan Gunelius

New York Chicago San Francisco Lisbon London Madrid Mexico City Milan New Delhi San Juan Seoul Singapore Sydney Toronto

To Scott, for supporting every new opportunity I pursue on and off the social Web and for sending me blog post ideas when I’m too busy to think straight. And to my family and friends for remembering me and welcoming me with open arms when I eventually emerge from behind my computer.

Copyright © 2011 by Susan Gunelius. All rights reserved. Except as permitted under the United States Copyright Act of 1976, no part of this publication may be reproduced or distributed in any form or by any means, or stored in a database or retrieval system, without the prior written permission of the publisher.

ISBN: 978-0-07-174865-0
MHID: 0-07-174865-2

The material in this eBook also appears in the print version of this title: ISBN: 978-0-07-174381-5, MHID: 0-07-174381-2.

All trademarks are trademarks of their respective owners. Rather than put a trademark symbol after every occurrence of a trademarked name, we use names in an editorial fashion only, and to the benefit of the trademark owner, with no intention of infringement of the trademark. Where such designations appear in this book, they have been printed with initial caps.

McGraw-Hill eBooks are available at special quantity discounts to use as premiums and sales promotions, or for use in corporate training programs.