Sleepcoacher: Combining Computational and Clinician-Generated Sleep Recommendations

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Extracts on Eggs, Nymphs and Adult Red Spider Mites, Tetranychus Spp. (Acari: Tetranychidae) on Tomatoes

African Journal of Agricultural Research Vol. 8(8), pp. 695-700, 8 March, 2013. Available online at http://www.academicjournals.org/AJAR. DOI: 10.5897/AJAR12.2143. ISSN 1991-637X. ©2013 Academic Journals. Full Length Research Paper. Efficacy of Syringa (Melia azedarach L.) extracts on eggs, nymphs and adult red spider mites, Tetranychus spp. (Acari: Tetranychidae) on tomatoes. Mwandila N. J. K.1, J. Olivier1*, D. Munthali2 and D. Visser3. 1 Department of Environmental Sciences, University of South Africa (UNISA), Florida 1710, South Africa. 2 Department of Crop Science and Production, Botswana College of Agriculture, Private Bag 0027, Gaborone, Botswana. 3 ARC-Roodeplaat Vegetable and Ornamental Plant Institute (VOPI), Private Bag X293, Pretoria 0001, South Africa. Accepted 18 February, 2013. This study evaluated the effect of Syringa (Melia azedarach) fruit and seed extracts (SSE) on red spider mite (Tetranychus spp.) eggs, nymphs and adults. Bioassay investigations were carried out at the Vegetable and Ornamental Plant Institute (VOPI) outside Pretoria in South Africa using different concentrations (0.1, 1, 10, 20, 50, 75 and 100%) of SSE. Mortalities were measured at 24, 48 and 72 h after treatment and compared to the effects of the synthetic acaricides abamectin, chlorfenapyr and profenofos. A completely randomized design (CRD) was used with 12 treatments. Analysis of variance (ANOVA) was used to test for effects of treatments. Differences in treatment means were identified using Fisher’s protected t-test least significant difference (LSD) at the 1% level of significance. Data were analysed using the statistical program GenStat (2003). The analyses revealed that the efficacy of SSE and commercial synthetic acaricides increased with exposure time.

'Jaws'. In: Hunter, IQ and Melia, Matthew, (Eds.) the 'Jaws' Book : New Perspectives on the Classic Summer Blockbuster

This is the accepted manuscript version of Melia, Matthew [Author] (2020) Relocating the western in 'Jaws'. In: Hunter, IQ and Melia, Matthew, (eds.) The 'Jaws' book : new perspectives on the classic summer blockbuster. London, U.K. For more details see: https://www.bloomsbury.com/uk/the-jaws-book-9781501347528/ 12 Relocating the Western in Jaws. Matthew Melia. Introduction. During the Jaws 40th Anniversary Symposium, Carl Gottlieb, the film’s screenwriter, refuted the suggestion that Jaws was a ‘Revisionist’ or ‘Post’ Western, and claimed that the influence of the Western genre had not entered the screenwriting or production processes. Yet the Western is such a ubiquitous presence in American visual culture that its narratives, tropes, style and forms can be broadly transposed across a variety of non-Western genre films, including Jaws. Star Wars (1977), for instance, a film with which Jaws shares a similar intermedial cultural position between the Hollywood Renaissance and the New Blockbuster, was a ‘Western movie set in Outer Space’. Matthew Carter has noted the ubiquitous presence of the frontier mythos in US popular culture and how contemporary ‘film scholars have recently taken account of the “migration” of the themes of frontier mythology from the Western into numerous other Hollywood genres’. This chapter will not claim that Jaws is a Western, but that the Western is a distinct yet largely unrecognised part of its extensive cross-generic hybridity. Gottlieb has admitted the influence of the ‘Sensorama’ pictures of proto-exploitation auteur William Castle (the shocking appearance of Ben Gardner’s head is testament to this) as well as The Thing from Another World (1951), while Spielberg suggested that they were simply trying to make a Roger Corman picture. Critical writing on Jaws has tended to exclude the Western from the film’s generic DNA.

Melia-Ebrochure.Pdf

SOHNA - THE BEST OF SUBURBIA AND THE CITY. Sohna, or South Gurgaon, is an idyllic retreat with sulphur water springs, a scenic lake and a charming bird sanctuary, just a stone's throw away. South of Gurgaon offers you the luxury of living in a chaos-free environment while enjoying Gurgaon's best amenities at an affordable price compared to Gurgaon. South of Gurgaon is well connected to Gurgaon and the National Capital by National Highway 248A, which will also soon be revamped to a 6-lane highway. The areas around the Gurgaon Sohna highway are set to emerge as the next axis of industrial and commercial development, like Manesar. The Haryana State Industrial & Infrastructure Development Corporation (HSIIDC) has acquired about 1,700 acres of land in Roz Ka Meo, nearly 26 km from Gurgaon, to establish a new industrial township on the lines of IMT Manesar. It will be well connected by the in-progress KMP (Kundli-Manesar-Palwal) and DMIC (Delhi Mumbai Industrial Corridor). Coming to localized connectivity, Sohna has a proposed 60 meter wide sector road that connects 5 sectors of Sohna. It will also have a 2 km elevated road between Vatika City and Badshahpur. South of Gurgaon is also rapidly accessible from Udyog Vihar, Cyber City, IFFCO Chowk, Rajeev Chowk, NH8, Subhash Chowk and Hero Honda Chowk. Infrastructure: South of Gurgaon has many other important facilities already in place, such as banks, ATMs and shops for daily needs nearby, all of which make living here extremely convenient. Hospitals like Medanta, Artemis, Paras, Fortis, Max, etc. are also located within 25-30 minutes.

PLANETARIAN Journal of the International Planetarium Society Vol

PLANETARIAN, Journal of the International Planetarium Society, Vol. 29, No. 4, December 2000.

Articles: Invitations for IPS 2004 (various); Creation of A New World of 1.7 Million Stars (Takayuki Ohira); We Make the Magic (Jack Dunn); Planetarium Partnerships (Carole Helper).

Features: Reviews (April S. Whitt); Forum: How Can IPS Serve You in Future? (Steve); Mobile News Network (Susan); What's New (Jim Manning); International News (Lars Broman); President's Message (Dale Smith); Minutes of IPS Council Meeting (Lee Ann Hennig); Jane's Corner (Jane Hastings).

North America Welcomes a Brilliant New Character in Star Shows: Zeiss Fiber Optics. With the dawn of the new millennium, visitors of the new planetariums in Oakland, CA and New York City will experience brilliant stars produced by the Carl Zeiss Universarium fiber optics systems, ... improve the quality of Star Shows for audiences of the Universarium. They are also offered with the Starmaster, the medium planetarium. Quality at the highest level which

Melian Nymphs Scholars Widely Assume That Hesiod Alludes to An

Melian Nymphs. Scholars widely assume that Hesiod alludes to an older tradition that human beings sprang from ash trees or their nymphs (West 1978, on WD 145-6; Most 1997, 109-110; Hardie 2000, 20; Yates 2004; Sistakou 2009, 253 n. 35): at Theog. 187, where the Meliae, like the Giants, are born from Gaia and Ouranos' blood, perhaps at Theog. 563, and at WD 145, where Zeus makes the Bronze Race from ash-trees. However, there really is no evidence for such a tradition before Hesiod; it is an interpretation of Hesiod's text. Hesiod narrates how Zeus withdrew fire at Theog. 563-4: οὐκ ἐδίδου μελίῃσι πυρὸς μένος ἀκαμάτοιο θνητοῖς ἀνθρώποις οἳ ἐπὶ χθονὶ ναιετάουσιν ("He was not giving the power of tireless fire to the ash-trees for the benefit of mortal men who live on the earth"). Ancient commentators took μελίῃσι as a synonym for ἀνθρώποις, as the scholia show: 563b.) μελίῃσιν: ἀντὶ τοῦ μελιηγενὴς ἢ ἀπό τινος Μελίας οὕτω καλουμένης νύμφης. ἄλλως. ἢ ὅτι ἐξ ἀρχῆς ἐκ Μελιῶν νυμφῶν ἐγεννῶντο οἱ ἄνθρωποι ἢ ὅτι γεννώμενοι ἐρρίπτοντο ὑπὸ ταῖς μελίαις, ἤτοι τοῖς δένδροις. ("Ash-trees: instead of "ash-born" or from a certain nymph who was named this way, Melia. Or because in the beginning people were born from the Melian nymphs or because at birth they were cast under ash-trees, or simply trees.") We cannot know why ancient scholars preferred this interpretation instead of the simpler one that Zeus transmitted fire into trees (ash is an excellent firewood).

Melia Azedarach L

Journal of Bacteriology & Mycology: Open Access. Research Article, Open Access. Volume 8 Issue 3 - 2020. Formulation of Allium sativum L. and Melia azedarach L. plant extracts and their effects on Myzus persicae Sulzer, 1776 (Hemiptera: Aphididae). Pervin ERDOGAN,1 Pelin AKSU,2 Gamze ESİN KILINC,2 Murat KAHYAOGLU,3 Numan E BABAROGLU2. 1 Department of Plant Protection, Sivas Science and Technology University, Turkey. 2 Plant Protection Central Research Institute, Turkey. 3 Agriculture and Chemical industry Trade Corporation, Turkey. Correspondence: Pervin ERDOGAN, Department of Plant Protection, Faculty of Agricultural Sciences and Technology, Sivas Science and Technology University, Turkey, Tel +90346 219 13 98. Abstract: Myzus persicae Sulzer, 1776 (Hem.: Aphididae) is one of the most important pests of vegetables cultivated in the world. Pesticides are used extensively to control this pest. Intensive use of chemical pesticides to control pests has caused various side effects, such as residues in the product and pest resistance, and poses a great risk for human health, nature and the environment. This study was undertaken to provide an alternative to chemical pesticides. For this purpose, extracts of Allium sativum L. (Liliaceae) and Melia azedarach L. (Meliaceae) were prepared. Formulation studies of these extracts were then carried out with several inert ingredients. The preparations obtained were subjected to quality control tests in the laboratory. Preparations that passed these tests were chosen for effectiveness studies on M. persicae. According to the results of laboratory studies, the highest dose found to be effective and the two doses above it (10, 15, 20 ml/L) were taken to examine the effect on M. persicae under greenhouse conditions.

(1756- 1791) Apollo Et Hyacinthus, K.38

WOLFGANG AMADEUS MOZART (1756-1791). APOLLO ET HYACINTHUS, K.38. Latin intermedium in three parts (1767). Libretto by RUFINUS WIDL (1731-1798).

Cast: OEBALUS, King of Laconia: ANDREW KENNEDY, tenor; MELIA, daughter of Oebalus: KLARA EK, soprano; HYACINTHUS, son of Oebalus: SOPHIE BEVAN, soprano; APOLLO, guest of Oebalus: LAWRENCE ZAZZO, countertenor; ZEPHYRUS, friend of Hyacinthus: CHRISTOPHER AINSLIE, countertenor; TWO PRIESTS OF APOLLO: MARCUS FARNSWORTH, baritone, and DAVID SHIPLEY, bass.

THE MOZARTISTS. Leader: Matthew Truscott. Continuo: Steven Devine (harpsichord), Joseph Crouch (cello), Cecelia Bruggemeyer (double bass). IAN PAGE, conductor.

PROLOGUS: 1 Intrada, 2’41; 2 Recitativo: Amice! iam parata sunt omnia (Hyacinthus, Zephyrus, Oebalus, Melia), 2’53; 3 No. 1, Chorus: Numen o Latonium (Chorus, Oebalus), 4’26; 4 Recitativo: Heu me! periimus! (Melia, Oebalus, Hyacinthus, Zephyrus), 1’24; 5 No. 2, Aria: Saepe terrent Numina (Hyacinthus), 7’48; 6 Recitativo: Ah Nate! vera loqueris (Oebalus, Apollo, Hyacinthus, Melia, Zephyrus), 3’04; 7 No. 3, Aria: Iam pastor Apollo (Apollo), 3’46.

CHORUS I: 8 Recitativo: Amare numquid Filia (Oebalus, Melia), 1’49; 9 No. 4, Aria: Laetari, iocari (Melia), 6’39; 10 Recitativo: Rex! de salute Filii (Zephyrus, Oebalus, Melia), 5’22; 11 No. 5, Aria: En! duos conspicis (Zephyrus), 3’08; 12 Recitativo: Heu! Numen! ecce! (Zephyrus, Melia, Apollo), 2’05; 13 No. 6, Duetto: Discede crudelis! (Melia, Apollo), 6’22.

CHORUS II: 14 Recitativo accompagnato: Non est – Quis ergo Nate! (Hyacinthus, Oebalus), 2’35; 15 No.

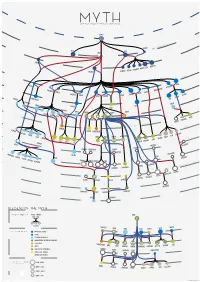

Legend of the Myth

[Figure: MYTH, a graph of Greek mythological figures, running from Chaos and the primordial deities through the Titans, the sea gods and nymphs, and the Dodekatheon (the twelve Olympians) to humans and demigods. Legend of the myth: Zeus family in the myth (father, mother, children); colors in the myth (primordial deities; Titans; sea gods and nymphs; Dodekatheon, the twelve Olympians; other gods; gods of the underworld; animals and hybrids; humans and demigods); circles in the myth (Google results, in bands 196 M - 8690 K, 8690 K - 2740 K, 2740 K - 1080 K, ...).]

The Efficacy of Enhanced Aqueous Extracts of Melia Azedarach Leaves

Bulletin of Insectology 56 (2): 269-275, 2003. ISSN 1721-8861. The efficacy of enhanced aqueous extracts of Melia azedarach leaves and fruits integrated with the Camptotylus reuteri releases against the sweetpotato whitefly nymphs. Chantal JAZZAR1, Efat ABOU-FAKHR HAMMAD2. 1 Institute of Plant Protection and Plant Diseases, Hannover University, Germany. 2 Faculty of Agricultural and Food Sciences, American University of Beirut, Lebanon. Abstract: Bioassays with aqueous extracts of different growth stages of Melia azedarach L. (chinaberry) leaves and fruits were conducted against 3rd - 4th instar nymphs of Bemisia tabaci (Gennadius) on tomato plants. Mortality of instars was assessed 15 days post-treatment. Results illustrated that every Melia extract produced a significantly greater mortality of B. tabaci than the controls. Extracts combined with the surfactant were significantly more toxic than those applied alone. Extracts with Tween-20 caused a mortality range of 34.6 to 67.9% and 53.5 to 74.1% for leaves and fruits, respectively. This compared with 15.6 to 31.4% and 11.4 to 18.7% for leaf and fruit extracts lacking the surfactant, respectively. The synchronous application of one indigenous adult mirid predator, Camptotylus reuteri (Jakovlev), introduced 30 min after spraying the Melia fruit extract for a period of 3 days, was not synergistic with the botanical extract. The potential insecticidal activity of Melia extracts appears to be enhanced by Tween-20, and they are safe to integrate with the C. reuteri biocontrol agent, as the extracts did not cause any mortality of the predator. Key words: Melia azedarach, Bemisia tabaci, Camptotylus reuteri, Tween-20, botanical insecticide.

Description of 'Candidatus Phytoplasma Meliae', a Phytoplasma Associated with Chinaberry (Melia Azedarach L.) Yellowing in S

International Journal of Systematic and Evolutionary Microbiology (2016), 66, 5244–5251. DOI 10.1099/ijsem.0.001503. Description of ‘Candidatus Phytoplasma meliae’, a phytoplasma associated with Chinaberry (Melia azedarach L.) yellowing in South America. Franco Daniel Fernandez,1 Ernestina Galdeano,2 Marcela Victoria Kornowski,3 Joel Demian Arneodo4 and Luis Rogelio Conci1. Correspondence: Luis Rogelio Conci, [email protected]. 1 Instituto de Patología Vegetal (IPAVE), CIAP-INTA, Camino 60 cuadras km 5 ½ (X5020ICA), Córdoba, Argentina. 2 Instituto de Botanica del Nordeste (CONICET-UNNE), Facultad de Ciencias Agrarias, Universidad Nacional del Nordeste, Sargento Cabral 2131 (3400), Corrientes, Argentina. 3 Estación Experimental Agropecuaria Montecarlo-INTA, Av. El Libertador 2472 (3384), Montecarlo, Argentina. 4 Instituto de Microbiología y Zoología Agrícola (IMyZA), INTA, Nicolas Repetto y de los Reseros s/n (1686), Hurlingham, Argentina. China tree yellows (ChTY) phytoplasma is associated with the yellowing disease of the China tree (Melia azedarach) in Argentina. According to partial 16S rRNA gene analysis, ChTY phytoplasma belongs to the 16SrXIII group, subgroup G. Strains of species of ChTY have 98–99 % 16S rDNA gene sequence similarity with 16SrXIII-group phytoplasmas, and less than 97.5 % when compared to all ‘Candidatus Phytoplasma’ described so far, except for the novel ‘Candidatus Phytoplasma hispanicum’. However, strains of species of ChTY are differentiated from the latter due to having additional molecular and biological attributes. The presence of unique features in the 16S rDNA sequence distinguishes ChTY from all species of ‘Candidatus Phytoplasma’ currently described. The in silico RFLP profile of 16S rDNA (1.2 kb) and rplV-rpsC (1.3 kb) genes distinguished ChTY, as in the 16SrXIII-G subgroup within the 16SrXIII group.

Greek Mythology / Apollodorus; Translated by Robin Hard

Great Clarendon Street, Oxford OX2 6DP. Oxford University Press is a department of the University of Oxford. It furthers the University’s objective of excellence in research, scholarship, and education by publishing worldwide in Oxford, New York, Athens, Auckland, Bangkok, Bogotá, Buenos Aires, Calcutta, Cape Town, Chennai, Dar es Salaam, Delhi, Florence, Hong Kong, Istanbul, Karachi, Kuala Lumpur, Madrid, Melbourne, Mexico City, Mumbai, Nairobi, Paris, São Paulo, Shanghai, Singapore, Taipei, Tokyo, Toronto, Warsaw, with associated companies in Berlin, Ibadan. Oxford is a registered trade mark of Oxford University Press in the UK and in certain other countries. Published in the United States by Oxford University Press Inc., New York. © Robin Hard 1997. The moral rights of the author have been asserted. Database right Oxford University Press (maker). First published as a World’s Classics paperback 1997. Reissued as an Oxford World’s Classics paperback 1998. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, without the prior permission in writing of Oxford University Press, or as expressly permitted by law, or under terms agreed with the appropriate reprographics rights organizations. Enquiries concerning reproduction outside the scope of the above should be sent to the Rights Department, Oxford University Press, at the address above. You must not circulate this book in any other binding or cover and you must impose this same condition on any acquirer. British Library Cataloguing in Publication Data: Data available. Library of Congress Cataloging in Publication Data: Apollodorus. [Bibliotheca. English] The library of Greek mythology / Apollodorus; translated by Robin Hard.

Greek American Wedding

2 COMMUNITY. THE NATIONAL HERALD, MARCH 15, 2008. GOINGS ON...

■ MARCH 17. WASHINGTON, D.C. – The President of the Alexander S. Onassis Public Benefit Foundation Mr. Anthony Papadimitriou cordially invites the community to a dramatic reading of selected passages “Thucydides’ History of the Peloponnesian War,” with commentary by Dr. Josiah Ober Professor of Classics and Political Science; Constantinos Mitsotakis Chair, School of Humanities and Sciences, Stanford University, California at The Shakespeare Theatre Company Lansburgh Theatre (450 7th Street NW) on Monday, March 17 at 7 PM. Dramatic reading by professional actors. Directed by David Muse, Associate Director, Shakespeare Theatre Company. The Spartans consider arguments for and against going to war with the Athenians, exposing timeless issues of foreign policy. Pericles delivers his great funeral oration setting forth the highest ideals of the civilization of Classical Athens.

...cation technology on that division. Their work in Cyprus began in 1994 as an extension of their study of the Greek American Community in Astoria. They have lectured throughout the world on the role of communication in sites of conflict and division. They have visited Cyprus at least 20 times and have published extensively as the result of their research. They have interviewed Presidents Clerides, Papadopolous and Christofias, as well as Turkish Cypriot community leaders Rauf Denktash and Mehmet Talat and other notable figures in Cyprus. They have conducted Media Literacy workshops for Greek and Turkish Cypriot participants. For more information, call Despina Axiotakis at 201-444-8237.

■ MAY 2 – JUNE 1. NEW YORK – Theatron Inc. cordially invite the community to the play “39