Nuno Filipe Correia De Almeida Interacção Multimodal

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Bibliography of Erik Wilde

dretbiblio dretbiblio Erik Wilde's Bibliography References [1] AFIPS Fall Joint Computer Conference, San Francisco, California, December 1968. [2] Seventeenth IEEE Conference on Computer Communication Networks, Washington, D.C., 1978. [3] ACM SIGACT-SIGMOD Symposium on Principles of Database Systems, Los Angeles, Cal- ifornia, March 1982. ACM Press. [4] First Conference on Computer-Supported Cooperative Work, 1986. [5] 1987 ACM Conference on Hypertext, Chapel Hill, North Carolina, November 1987. ACM Press. [6] 18th IEEE International Symposium on Fault-Tolerant Computing, Tokyo, Japan, 1988. IEEE Computer Society Press. [7] Conference on Computer-Supported Cooperative Work, Portland, Oregon, 1988. ACM Press. [8] Conference on Office Information Systems, Palo Alto, California, March 1988. [9] 1989 ACM Conference on Hypertext, Pittsburgh, Pennsylvania, November 1989. ACM Press. [10] UNIX | The Legend Evolves. Summer 1990 UKUUG Conference, Buntingford, UK, 1990. UKUUG. [11] Fourth ACM Symposium on User Interface Software and Technology, Hilton Head, South Carolina, November 1991. [12] GLOBECOM'91 Conference, Phoenix, Arizona, 1991. IEEE Computer Society Press. [13] IEEE INFOCOM '91 Conference on Computer Communications, Bal Harbour, Florida, 1991. IEEE Computer Society Press. [14] IEEE International Conference on Communications, Denver, Colorado, June 1991. [15] International Workshop on CSCW, Berlin, Germany, April 1991. [16] Third ACM Conference on Hypertext, San Antonio, Texas, December 1991. ACM Press. [17] 11th Symposium on Reliable Distributed Systems, Houston, Texas, 1992. IEEE Computer Society Press. [18] 3rd Joint European Networking Conference, Innsbruck, Austria, May 1992. [19] Fourth ACM Conference on Hypertext, Milano, Italy, November 1992. ACM Press. [20] GLOBECOM'92 Conference, Orlando, Florida, December 1992. IEEE Computer Society Press. http://github.com/dret/biblio (August 29, 2018) 1 dretbiblio [21] IEEE INFOCOM '92 Conference on Computer Communications, Florence, Italy, 1992. -

IT Acronyms.Docx

List of computing and IT abbreviations /.—Slashdot 1GL—First-Generation Programming Language 1NF—First Normal Form 10B2—10BASE-2 10B5—10BASE-5 10B-F—10BASE-F 10B-FB—10BASE-FB 10B-FL—10BASE-FL 10B-FP—10BASE-FP 10B-T—10BASE-T 100B-FX—100BASE-FX 100B-T—100BASE-T 100B-TX—100BASE-TX 100BVG—100BASE-VG 286—Intel 80286 processor 2B1Q—2 Binary 1 Quaternary 2GL—Second-Generation Programming Language 2NF—Second Normal Form 3GL—Third-Generation Programming Language 3NF—Third Normal Form 386—Intel 80386 processor 1 486—Intel 80486 processor 4B5BLF—4 Byte 5 Byte Local Fiber 4GL—Fourth-Generation Programming Language 4NF—Fourth Normal Form 5GL—Fifth-Generation Programming Language 5NF—Fifth Normal Form 6NF—Sixth Normal Form 8B10BLF—8 Byte 10 Byte Local Fiber A AAT—Average Access Time AA—Anti-Aliasing AAA—Authentication Authorization, Accounting AABB—Axis Aligned Bounding Box AAC—Advanced Audio Coding AAL—ATM Adaptation Layer AALC—ATM Adaptation Layer Connection AARP—AppleTalk Address Resolution Protocol ABCL—Actor-Based Concurrent Language ABI—Application Binary Interface ABM—Asynchronous Balanced Mode ABR—Area Border Router ABR—Auto Baud-Rate detection ABR—Available Bitrate 2 ABR—Average Bitrate AC—Acoustic Coupler AC—Alternating Current ACD—Automatic Call Distributor ACE—Advanced Computing Environment ACF NCP—Advanced Communications Function—Network Control Program ACID—Atomicity Consistency Isolation Durability ACK—ACKnowledgement ACK—Amsterdam Compiler Kit ACL—Access Control List ACL—Active Current -

Multimodal Input Fusion for Companion Technology a Thesis Submitted to Attain the Degree of Dr

Ulm University | 89069 Ulm | Germany Faculty of Engineering, Computer Sciences and Psychology Institute of Media Informatics Multimodal Input Fusion for Companion Technology A thesis submitted to attain the degree of Dr. rer. nat. of the Faculty of Engineering, Computer Sciences and Psychology at Ulm University Author: Felix Schüssel from Erlangen 2017 Version of November 28, 2017 c 2017 Felix Schüssel This work is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 3.0 License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-sa/3.0/de/ or send a letter to Creative Commons, 543 Howard Street, 5th Floor, San Francisco, California, 94105, USA. Typeset: PDF-LATEX 2" Date of Graduation: November 28, 2017 Dean: Prof. Dr. Frank Kargl Advisor: Prof. Dr.-Ing. Michael Weber Advisor: Prof. Dr. Dr.-Ing. Wolfgang Minker A Note on Copyrighted Material: [citation] For added clarity, literally adopted parts from own work published elsewhere are emphasized with markings in the page margins as exemplified on the left. These markings are made generously to minimize impacts on layout and readability. In consequence, they may occasionally contain information obviously not stemming from the original publication that are not exempted from the marking (headings, references within this thesis, etc.). The above applies to parts of this thesis that have already been published in the following articles given by bibliography reference and copyright notice as mandated by the respective publisher: [144]: Multimodal Pattern Recognition of Social Signals in Human-Computer-Interaction. Using the Transferable Belief Model for Multimodal Input Fusion in Companion Systems, vol. -

3GPP TR 22.977 Version 6.0.0 Release 6)

ETSI TR 122 977 V6.0.0 (2002-09) Technical Report Universal Mobile Telecommunications System (UMTS); Feasibility study for speech-enabled services (3GPP TR 22.977 version 6.0.0 Release 6) 3GPP TR 22.977 version 6.0.0 Release 6 1 ETSI TR 122 977 V6.0.0 (2002-09) Reference DTR/TSGS-0122977v600 Keywords UMTS ETSI 650 Route des Lucioles F-06921 Sophia Antipolis Cedex - FRANCE Tel.: +33 4 92 94 42 00 Fax: +33 4 93 65 47 16 Siret N° 348 623 562 00017 - NAF 742 C Association à but non lucratif enregistrée à la Sous-Préfecture de Grasse (06) N° 7803/88 Important notice Individual copies of the present document can be downloaded from: http://www.etsi.org The present document may be made available in more than one electronic version or in print. In any case of existing or perceived difference in contents between such versions, the reference version is the Portable Document Format (PDF). In case of dispute, the reference shall be the printing on ETSI printers of the PDF version kept on a specific network drive within ETSI Secretariat. Users of the present document should be aware that the document may be subject to revision or change of status. Information on the current status of this and other ETSI documents is available at http://portal.etsi.org/tb/status/status.asp If you find errors in the present document, please send your comment to one of the following services: http://portal.etsi.org/chaircor/ETSI_support.asp Copyright Notification No part may be reproduced except as authorized by written permission. -

W3C Standards for Multimodal Interaction

W3C Japan Day - 14th November 2003 WW33CC ssttaannddaarrddss ffoorr MMuullttiimmooddaall IInntteerraaccttiioonn Dave Raggett W3C/Canon W3C Activity lead for Voice & Multimodal Copyright © 2003 W3C Introduction o 10 years ago, the Mosaic browser unleashed the Web, stimulating massive growth on an unprecedented scale o Today the Web is pervasive and enables an incredible range of services o Mobile networks and falling hardware costs make in-car systems increasingly attractive o W3C is developing the vision and core technical standards for the multimodal Web 14 November 2003 2/20 Copyright © 2003 W3C The Need for Standards Bluetooth headset SonyEricsson P800 Lexus console 14 November 2003 3/20 Copyright © 2003 W3C Multimodal – Our Dream o Adapting the Web to allow multiple modes of interaction: – GUI, Speech, Vision, Pen, Gestures, Haptic interfaces o Augmenting human to computer and human to human interaction – Communication services involving multiple devices and multiple people o Anywhere, Any device, Any time – Services that dynamically adapt to the device, user preferences and environmental conditions o Accessible to all 14 November 2003 4/20 Copyright © 2003 W3C W3C Multimodal Interaction Working Group o Initial requirements study in W3C Voice Browser working group, followed by a joint workshop with the WAP Forum in 2000 o Working Group was formed in February 2002, and the following organizations are participating: Access, Alcatel, Apple, Aspect, AT&T, Avaya, BeVocal, Canon, Cisco, Comverse, EDS, Ericsson, France Telecom, Fraunhofer -

Stephen M. Watt 25 October 2010

Stephen M. Watt 25 October 2010 Professional Address Department of Computer Science MC 375 [email protected] University of Western Ontario http://www.csd.uwo.ca/~watt London Ontario, canada n6a 5b7 Tel. +1 519 661-4244 Fax. +1 519 661-3515 Personal Address 56 Doncaster Place [email protected] London Ontario, canada n6g 2a5 Tel. +1 519 471-1688 Fax. +1 519 204-9288 Table of Contents General Career and Education . 2 Professional Biography . 2 Prizes, Awards, Honours . .3 Leadership . 4 Research Research Summary . 6 Publications . 8 Major Software Productions . 20 Invited Presentations . 21 Research Grants . .27 Technology Transfer . 30 Teaching Teaching Philosophy . .31 Program Development . 31 Courses Taught . .32 Training of Highly Qualified Personnel . .34 Outreach and Coaching . .39 Service Institutional Service . 40 Professional Boards and Committees . 42 Conference Organization . 43 Evaluation . 47 Stephen M. Watt 2 1 Career and Education 1997-date Professor, Department of Computer Science, University of Western Ontario, canada Department Chair (1997-2002) Cross appointments to departments of Applied Mathematics and Mathematics 2001-date Member, Board of Directors, Descartes Systems Group (nasdaq dsgx, tse dsg) Chairman of the Board (2003-2007) 1998-2009 Member, Board of Directors, Maplesoft 1996-1998 Responsable Scientifique (Scientific Director), Projet safir Institut National de Recherche en Informatique et en Automatique, france 1995-1997 Professeur, Dept. d’Informatique, Universit´ede Nice–Sophia Antipolis, france Titularisation (tenure) awarded January 1996. 1984-1997 Research Staff Member, IBM T.J. Watson Research Center, Yorktown Heights ny, usa 1982-1984 Lecturer (during PhD), Dept. of Computer Science, University of Waterloo, canada 1981-1986 PhD, Computer Science, University of Waterloo, canada 1979-1981 MMath, Applied Mathematics, University of Waterloo, canada 1976-1979 BSc, Honours Mathematics and Honours Physics, U. -

Reducing the Description Amount in Authoring MMI Applications

Reducing the Description Amount in Authoring MMI Applications Kouichi Katsurada, Kazumine Aoki, Hirobumi Yamada and Tsuneo Nitta Graduate School of Knowledge-based Information Engineering Toyohashi University of Technology, Japan {katurada, nitta}@tutkie.tut.ac.jp, {aoki, yamada}@vox.tutkie.tut.ac.jp Abstract describes outline of XISL2.0 and comparison with XISL1.1. Although an MMI description language XISL1.1 (eXtensible After discussing some future issues in section 4, we conclude Interaction Scenario Language) developed for web-based our work and show some future work in section 5. MMI applications has some desirable features such as high extensibility and controllability of modalities, it has some 2. XISL1.1 and Its Issues issues concerning capabilities for describing slot-filling style dialogs, a large amount of description for modality 2.1. Outline of XISL1.1 combination of inputs, and complicated authoring when handling XML contents. In this paper we provide a new XISL1.1 [5][6] is an XML-based language for describing version of XISL (XISL2.0) that resolves these issues by using MMI between a user and a system. In XISL1.1, a scenario is VoiceXML-based syntax, modality-independent input composed of a sequence of exchanges that contains a set of description, and XForms-like data model. The results of prompts for a user, a set of user's multimodal inputs and a set comparison between XISL1.1 and XISL2.0 showed that of system's actions. Actions include outputs to a user, simple XISL2.0 reduced the amount of description by 40% as arithmetic operations, conditional branches, dialog transition, compared with XISL1.1, and XISL2.0 succeeded to mitigate and so on. -

(12) United States Patent (10) Patent No.: US 7,798,417 B2 Snyder Et Al

US007798417B2 (12) United States Patent (10) Patent No.: US 7,798,417 B2 Snyder et al. (45) Date of Patent: Sep. 21, 2010 (54) METHOD FOR DATA INTERCHANGE application No. 1 1/325,713, filed on Jan.5, 2006, now Pat. No. 7,118,040. (76) Inventors: David M. Snyder, 1110 Wenig Rd. NE., (60) Provisional application No. 60/294,375, filed on May Cedar Rapids, IA (US) 524.02: Bruce D. 30, 2001, provisional application No. 60/232,825, Melick, 4335 Cloverdale Rd. NE., Cedar filed on Sep. 15, 2000, provisional application No. Rapids, IA (US) 52411; Leslie D. 60/213.843, filed on Jun. 23, 2000, provisional appli Baych, 4315 Woodfield La. NE., Cedar cation No. 60/174.220, filed on Jan. 3, 2000, provi Rapids, IA (US) 524.02: Paul R. sional application No. 60/572,140, filed on May 18, Staman, 1600 G St., Amana, IA (US) 2004, provisional application No. 60/727,605, filed on 52203; Nicholas J. Peters, 3229 260' Oct. 18, 2005, provisional application No. 60/813,899, St., Williamsburg, IA (US) 52261; filed on Jun. 15, 2006, provisional application No. Gregory P. Probst, 531 Woodridge Ave., 60/834,523, filed on Aug. 1, 2006. Iowa City, IA (US) 5224.5 (51) Int. C. (*) Notice: Subject to any disclaimer, the term of this G06K 9/06 (2006.01) patent is extended or adjusted under 35 G06K 9/00 (2006.01) U.S.C. 154(b) by 390 days. (52) U.S. Cl. ....................................... 235/494; 235/487 (58) Field of Classification Search ................. 235/380, (21) Appl. -

Multimodal Presentation of Biomedical Data Željko Obrenovic, Dušan Starcevic, Emil Jovanov

Multimodal Presentation of Biomedical Data Željko Obrenovic, Dušan Starcevic, Emil Jovanov 1. INTRODUCTION ...............................................................................................................................................2 2. DEFINING TERMS ............................................................................................................................................2 3. PRESENTATION OF BIOMEDICAL DATA..................................................................................................3 3.1. EARLY BIOMEDICAL SIGNAL DETECTION AND PRESENTATION ..................................................................3 3.2. COMPUTER-BASED SIGNAL DETECTION AND PRESENTATION ....................................................................3 3.3. TYPES OF BIOMEDICAL DATA .......................................................................................................................4 4. MULTIMODAL PRESENTATION ..................................................................................................................6 4.1. COMPUTING PRESENTATION MODALITIES ...................................................................................................6 4.1.1. Visualization..........................................................................................................................................7 4.1.2. Audio Presentation................................................................................................................................9 4.1.3. Haptic Rendering............................................................................................................................... -

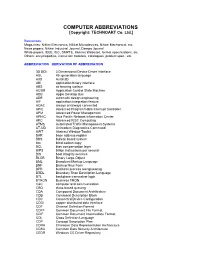

COMPUTER ABBREVIATIONS [Copyright: TECHNOART Co

COMPUTER ABBREVIATIONS [Copyright: TECHNOART Co. Ltd.] References: Magazines: Nikkei Electronics, Nikkei Microdevices, Nikkei Mechanical, etc. News papers: Nikkei Industrial Journal, Dempa Journal White papers: IEEE, IEC, SMPTE, Internet Websites, format specifications, etc. Others: encyclopedias, instruction booklets, catalogues, product spec., etc. ABBREVIATION DERIVATION OF ABBREVIATION 3D DDI 3 Dimensional Device Driver Interface 4GL 4th generation language A3D Aural 3D ABI application binary interface ABS air bearing surface ACSM Application Control State Machine ADB Apple Desktop Bus ADE automatic design engineering AIF application integration feature AOAC always on/always connected APIC Advanced Programmable Interrupt Controller APM Advanced Power Management APNIC Asia Pacific Network Information Center ARC Advanced RISC Computing ATMS Automated Traffic Management Systems AT-UD Unimodem Diagnostics Command AWT Abstract Window Toolkit BAR base address register BBS bulletin board system bcc blind carbon copy BCL bias compensation layer BIPS billion instructions per second BIS boot integrity services BLOB Binary Large Object BML Broadcast Markup Language BNF Backup Naur Form BPR business process reengineering BSDL Boundary Scan Description Language BTL backplane transceiver logic BTRON Business TRON C&C computer and communication CBQ class-based queuing CDA Compound Document Architecture CDB Command Description Block CDC Connected Device Configuration CDDI copper distributed data interface CDF Channel Definition Format CDFF Common -

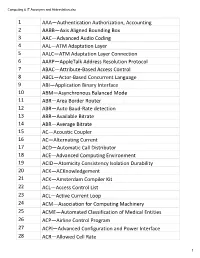

Computing & IT Acronyms and Abbreviation.Xlsx

Computing & IT Acronyms and Abbreviation.xlsx 1 AAA—Authentication Authorization, Accounting 2 AABB—Axis Aligned Bounding Box 3 AAC—Advanced Audio Coding 4 AAL—ATM Adaptation Layer 5 AALC—ATM Adaptation Layer Connection 6 AARP—AppleTalk Address Resolution Protocol 7 ABAC—Attribute-Based Access Control 8 ABCL—Actor-Based Concurrent Language 9 ABI—Application Binary Interface 10 ABM—Asynchronous Balanced Mode 11 ABR—Area Border Router 12 ABR—Auto Baud-Rate detection 13 ABR—Available Bitrate 14 ABR—Average Bitrate 15 AC—Acoustic Coupler 16 AC—Alternating Current 17 ACD—Automatic Call Distributor 18 ACE—Advanced Computing Environment 19 ACID—Atomicity Consistency Isolation Durability 20 ACK—ACKnowledgement 21 ACK—Amsterdam Compiler Kit 22 ACL—Access Control List 23 ACL—Active Current Loop 24 ACM—Association for Computing Machinery 25 ACME—Automated Classification of Medical Entities 26 ACP—Airline Control Program 27 ACPI—Advanced Configuration and Power Interface 28 ACR—Allowed Cell Rate 1 Computing & IT Acronyms and Abbreviation.xlsx 29 ACR—Attenuation to Crosstalk Ratio 30 AD—Active Directory 31 AD—Administrative Domain 32 ADC—Analog-to-Digital Converter 33 ADC—Apple Display Connector 34 ADB—Apple Desktop Bus 35 ADCCP—Advanced Data Communications Control Procedures 36 ADO—ActiveX Data Objects 37 ADSL—Asymmetric Digital Subscriber Line 38 ADT—Abstract Data Type 39 AE—Adaptive Equalizer 40 AES—Advanced Encryption Standard 41 AF—Anisotropic Filtering 42 AFP—Apple Filing Protocol 43 AGP—Accelerated Graphics Port 44 AH—Active Hub 45 AI—Artificial -

Page 1 Nov 19, 2008 1 Deutsche Telekom Laboratories the W3C

Deutsche Telekom Laboratories The W3C Multimodal Architecture ETSI Workshop: Multimodal Interaction on Mobile Devices, Ingmar Kliche (Nov 19, 2008) Nov 19, 2008 1 W3C Multimodal Architecture. Agenda. The W3C W3C Multimodal Interaction Working Group W3C Multimodal Architecture (overview) Upcoming web standards SCXML (State Chart XML) VoiceXML 3.0 EMMA (Extensible Multimodal Annotation Markup Language) Context awareness in multimodal applications A practical implementation of the W3C Multimodal Architecture Conclusion Deutsche Telekom Laboratories Nov 19, 2008 2 The W3C. W3C Multimodal Architecture. The W3C – Mission and Goals. Mission: To lead the World Wide Web to its full potential by developing protocols and guidelines that ensure long-term growth for the Web. The main goal: To ensure interoperabilit yyy of web standards , irrespective of special interests. Deutsche Telekom Laboratories Nov 19, 2008 4 W3C Multimodal Architecture. The W3C – Governance and Statistics. Web standards creation Since 1994 some 110 Web standards have been published. Infrastructure W3C Hosts and offices map International representation (ERCIM*, MIT**, Keio Uni.***) with 400 member organizations from 40 countries Accountability Vendor-neutral forum Public discussion with full consideration of public feedback Participation in working groups , as member or invited expert Patent policy Strong patent policy to protect company and public interests * European Research Consortium for Informatics and Mathematics (ERCIM) ** MIT Computer Science