Ethics out of Economics John Broome Index More Information

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Anintrapersonaladditionparadox

An Intrapersonal Addition Paradox Jacob M. Nebel December 1, 2017 Abstract I present a new problem for those of us who wish to avoid the repugnant conclu- sion. The problem is an intrapersonal, risky analogue of the mere addition paradox. The problem is important for three reasons. First, it highlights new conditions at least one of which must be rejected in order to avoid the repugnant conclusion. Some solu- tions to Parfit’s original puzzle do not obviously generalize to our intrapersonal puzzle in a plausible way. Second, it raises new concerns about how to make decisions un- der uncertainty for the sake of people whose existence might depend on what we do. Different answers to these questions suggest different solutions to the extant puzzles in population ethics. And, third, the problem suggests new difficulties for leading views about the value of a person’s life compared to her nonexistence. 1 The Mere Addition Paradox Many of us want to avoid what Parfit (1984) calls The Repugnant Conclusion: For any population of excellent lives, there is some population of mediocre lives whose existence would be better. By “better,” I mean something like what (Parfit 2011, 41) calls the impartial-reason-implying sense. One thing is better than another in this sense just in case, from an impartial point of view, we would have most reason to prefer the one to the other; two things are equally good in this sense just in case, from an impartial point of view, we would have most reason 1 to be indifferent between them. -

On the Nature of Moral Ideals PART II

12 On the Nature of Moral Ideals PART II In chapter 11 , we saw that Parfi t appealed to an Essentially Comparative View of Equality in presenting his Mere Addition Paradox, and that Parfi t now believes that his doing so was a big mistake. More generally, Parfi t now thinks that we should reject any Essentially Comparative View about the goodness of outcomes, which means that we must reject the Essentially Comparative View for all moral ideals relevant to assessing the goodness of outcomes. I noted my agreement with Parfi t that we should reject an Essentially Comparative View of Equality. But should we take the further step of rejecting an Essentially Comparative View for all moral ideals, in favor of the Internal Aspects View of Moral Ideals? Do we, in fact, open ourselves up to serious error if we allow our judgment about alternatives like A and A + to be infl uenced by whether or not A + involves Mere Addition? It may seem the answers to these questions must surely be “Yes!” Aft er all, as we saw in chapter 11 , adopting the Internal Aspects View would give us a way of reject- ing the Mere Addition Paradox. More important, it would enable us to avoid intran- sitivity in our all-things-considered judgments (in my wide reason-implying sense), allow the “all-things-considered better than” relation to apply to all sets of comparable outcomes, and license the Principle of Like Comparability for Equivalents. More- over, in addition to the unwanted implications of its denial, the Internal Aspects View has great appeal in its own right. -

Intransitivity and Future Generations: Debunking Parfit's Mere Addition Paradox

Journal of Applied Philosophy, Vol. 20, No. 2,Intransitivity 2003 and Future Generations 187 Intransitivity and Future Generations: Debunking Parfit’s Mere Addition Paradox KAI M. A. CHAN Duties to future persons contribute critically to many important contemporaneous ethical dilemmas, such as environmental protection, contraception, abortion, and population policy. Yet this area of ethics is mired in paradoxes. It appeared that any principle for dealing with future persons encountered Kavka’s paradox of future individuals, Parfit’s repugnant conclusion, or an indefensible asymmetry. In 1976, Singer proposed a utilitarian solution that seemed to avoid the above trio of obstacles, but Parfit so successfully demonstrated the unacceptability of this position that Singer abandoned it. Indeed, the apparently intransitivity of Singer’s solution contributed to Temkin’s argument that the notion of “all things considered better than” may be intransitive. In this paper, I demonstrate that a time-extended view of Singer’s solution avoids the intransitivity that allows Parfit’s mere addition paradox to lead to the repugnant conclusion. However, the heart of the mere addition paradox remains: the time-extended view still yields intransitive judgments. I discuss a general theory for dealing with intransitivity (Tran- sitivity by Transformation) that was inspired by Temkin’s sports analogy, and demonstrate that such a solution is more palatable than Temkin suspected. When a pair-wise comparison also requires consideration of a small number of relevant external alternatives, we can avoid intransitivity and the mere addition paradox without infinite comparisons or rejecting the Independence of Irrelevant Alternatives Principle. Disagreement regarding the nature and substance of our duties to future persons is widespread. -

The Ethical Consistency of Animal Equality

1 The ethical consistency of animal equality Stijn Bruers, Sept 2013, DRAFT 2 Contents 0. INTRODUCTION........................................................................................................................................ 5 0.1 SUMMARY: TOWARDS A COHERENT THEORY OF ANIMAL EQUALITY ........................................................................ 9 1. PART ONE: ETHICAL CONSISTENCY ......................................................................................................... 18 1.1 THE BASIC ELEMENTS ................................................................................................................................. 18 a) The input data: moral intuitions .......................................................................................................... 18 b) The method: rule universalism............................................................................................................. 20 1.2 THE GOAL: CONSISTENCY AND COHERENCE ..................................................................................................... 27 1.3 THE PROBLEM: MORAL ILLUSIONS ................................................................................................................ 30 a) Optical illusions .................................................................................................................................... 30 b) Moral illusions .................................................................................................................................... -

A Set of Solutions to Parfit's Problems

NOÛS 35:2 ~2001! 214–238 A Set of Solutions to Parfit’s Problems Stuart Rachels University of Alabama In Part Four of Reasons and Persons Derek Parfit searches for “Theory X,” a satisfactory account of well-being.1 Theories of well-being cover the utilitarian part of ethics but don’t claim to cover everything. They say nothing, for exam- ple, about rights or justice. Most of the theories Parfit considers remain neutral on what well-being consists in; his ingenious problems concern form rather than content. In the end, Parfit cannot find a theory that solves each of his problems. In this essay I propose a theory of well-being that may provide viable solu- tions. This theory is hedonic—couched in terms of pleasure—but solutions of the same form are available even if hedonic welfare is but one aspect of well-being. In Section 1, I lay out the “Quasi-Maximizing Theory” of hedonic well-being. I motivate the least intuitive part of the theory in Section 2. Then I consider Jes- per Ryberg’s objection to a similar theory. In Sections 4–6 I show how the theory purports to solve Parfit’s problems. If my arguments succeed, then the Quasi- Maximizing Theory is one of the few viable candidates for Theory X. 1. The Quasi-Maximizing Theory The Quasi-Maximizing Theory incorporates four principles. 1. The Conflation Principle: One state of affairs is hedonically better than an- other if and only if one person’s having all the experiences in the first would be hedonically better than one person’s having all the experiences in the second.2 The Conflation Principle sanctions translating multiperson comparisons into single person comparisons. -

Frick, Johann David

'Making People Happy, Not Making Happy People': A Defense of the Asymmetry Intuition in Population Ethics The Harvard community has made this article openly available. Please share how this access benefits you. Your story matters Citation Frick, Johann David. 2014. 'Making People Happy, Not Making Happy People': A Defense of the Asymmetry Intuition in Population Ethics. Doctoral dissertation, Harvard University. Citable link http://nrs.harvard.edu/urn-3:HUL.InstRepos:13064981 Terms of Use This article was downloaded from Harvard University’s DASH repository, and is made available under the terms and conditions applicable to Other Posted Material, as set forth at http:// nrs.harvard.edu/urn-3:HUL.InstRepos:dash.current.terms-of- use#LAA ʹMaking People Happy, Not Making Happy Peopleʹ: A Defense of the Asymmetry Intuition in Population Ethics A dissertation presented by Johann David Anand Frick to The Department of Philosophy in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the subject of Philosophy Harvard University Cambridge, Massachusetts September 2014 © 2014 Johann Frick All rights reserved. Dissertation Advisors: Professor T.M. Scanlon Author: Johann Frick Professor Frances Kamm ʹMaking People Happy, Not Making Happy Peopleʹ: A Defense of the Asymmetry Intuition in Population Ethics Abstract This dissertation provides a defense of the normative intuition known as the Procreation Asymmetry, according to which there is a strong moral reason not to create a life that will foreseeably not be worth living, but there is no moral reason to create a life just because it would foreseeably be worth living. Chapter 1 investigates how to reconcile the Procreation Asymmetry with our intuitions about another recalcitrant problem case in population ethics: Derek Parfit’s Non‑Identity Problem. -

Paradoxes Situations That Seems to Defy Intuition

Paradoxes Situations that seems to defy intuition PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Tue, 08 Jul 2014 07:26:17 UTC Contents Articles Introduction 1 Paradox 1 List of paradoxes 4 Paradoxical laughter 16 Decision theory 17 Abilene paradox 17 Chainstore paradox 19 Exchange paradox 22 Kavka's toxin puzzle 34 Necktie paradox 36 Economy 38 Allais paradox 38 Arrow's impossibility theorem 41 Bertrand paradox 52 Demographic-economic paradox 53 Dollar auction 56 Downs–Thomson paradox 57 Easterlin paradox 58 Ellsberg paradox 59 Green paradox 62 Icarus paradox 65 Jevons paradox 65 Leontief paradox 70 Lucas paradox 71 Metzler paradox 72 Paradox of thrift 73 Paradox of value 77 Productivity paradox 80 St. Petersburg paradox 85 Logic 92 All horses are the same color 92 Barbershop paradox 93 Carroll's paradox 96 Crocodile Dilemma 97 Drinker paradox 98 Infinite regress 101 Lottery paradox 102 Paradoxes of material implication 104 Raven paradox 107 Unexpected hanging paradox 119 What the Tortoise Said to Achilles 123 Mathematics 127 Accuracy paradox 127 Apportionment paradox 129 Banach–Tarski paradox 131 Berkson's paradox 139 Bertrand's box paradox 141 Bertrand paradox 146 Birthday problem 149 Borel–Kolmogorov paradox 163 Boy or Girl paradox 166 Burali-Forti paradox 172 Cantor's paradox 173 Coastline paradox 174 Cramer's paradox 178 Elevator paradox 179 False positive paradox 181 Gabriel's Horn 184 Galileo's paradox 187 Gambler's fallacy 188 Gödel's incompleteness theorems -

PERSONS in PROSPECT* III. Love And

PERSONS IN PROSPECT* III. Love and Non-Existence The birth of a child is an event about which we can have “before” and “after” value judgments that appear inconsistent. Consider, for example, a 14-year-old girl who decides to have a baby.1 We tend to think that the birth of a child to a 14-year-old mother would be a very unfortunate event, and hence that she should not decide to have a child. But once the child has been born, we are loath to say that it should not have been born. We may love the child, if we are one of the mother’s relatives or friends, and even if we don’t love it, we respect it as a member of the human family, whose existence is not to be regretted. Indeed, we now think that the birth is something to celebrate — once a year, on the child’s birthday. We may be tempted to explain our apparent change of mind as a product of better information. Before the birth, we didn’t know how things would turn out, and now that the baby has made its appearance, we know more. But the birth does not bring to light * The paper bears some similarity to Larry Temkin’s “Intransitivity and the Mere Addition Paradox”, Philosophy and Public Affairs 16 (1987): 138-87. Both seek to show that a combination of views about future persons is not as paradoxical as it seems. The difference between the papers is this. Temkin focuses on failures of transitivity among comparative judgments; I address a different problem, in which the value of a general state of affairs appears inconsistent with the values of all possible instances. -

List of Paradoxes 1 List of Paradoxes

List of paradoxes 1 List of paradoxes This is a list of paradoxes, grouped thematically. The grouping is approximate: Paradoxes may fit into more than one category. Because of varying definitions of the term paradox, some of the following are not considered to be paradoxes by everyone. This list collects only those instances that have been termed paradox by at least one source and which have their own article. Although considered paradoxes, some of these are based on fallacious reasoning, or incomplete/faulty analysis. Logic • Barbershop paradox: The supposition that if one of two simultaneous assumptions leads to a contradiction, the other assumption is also disproved leads to paradoxical consequences. • What the Tortoise Said to Achilles "Whatever Logic is good enough to tell me is worth writing down...," also known as Carroll's paradox, not to be confused with the physical paradox of the same name. • Crocodile Dilemma: If a crocodile steals a child and promises its return if the father can correctly guess what the crocodile will do, how should the crocodile respond in the case that the father guesses that the child will not be returned? • Catch-22 (logic): In need of something which can only be had by not being in need of it. • Drinker paradox: In any pub there is a customer such that, if he or she drinks, everybody in the pub drinks. • Paradox of entailment: Inconsistent premises always make an argument valid. • Horse paradox: All horses are the same color. • Lottery paradox: There is one winning ticket in a large lottery. It is reasonable to believe of a particular lottery ticket that it is not the winning ticket, since the probability that it is the winner is so very small, but it is not reasonable to believe that no lottery ticket will win. -

Born Free and Equal? on the Ethical Consistency of Animal Equality

Born free and equal? On the ethical consistency of animal equality Stijn Bruers Proefschrift voorgelegd tot het bekomen van de graad van Doctor in de Moraalwetenschappen Promotor: Prof. dr. Johan Braeckman Promotor Prof. dr. Johan Braeckman Vakgroep Wijsbegeerte en Moraalwetenschap Decaan Prof. dr. Marc Boone Rector Prof. dr. Anne De Paepe Nederlandse vertaling: Vrij en gelijk geboren? Over ethische consistentie en dierenrechten Faculteit Letteren & Wijsbegeerte Stijn Bruers Born free and equal? On the ethical consistency of animal equality Proefschrift voorgelegd tot het behalen van de graad van Doctor in de moraalwetenschappen 2014 Acknowledgements First of all, I would like to thank my supervisor Prof. Dr. Johan Braeckman. He allowed me to explore many new paths in ethics and philosophy and he took time to guide me through the research. His assistance and advice were precious. Second, I owe gratitude to Prof. Dr. Tom Claes and Tim De Smet for comments and Dianne Scatrine and Scott Bell for proofreading. Thanks to Gitte for helping me with the lay-out and cover. Thanks to the University of Ghent for giving opportunities, knowledge and assistance. From all philosophers I know, perhaps Floris van den Berg has ethical ideas closest to mine. I enjoyed our collaboration and meetings with him. Also the many discussions with animal rights activists of Bite Back, with the participants at the International Animal Rights Conferences and Gatherings and with the many meat eaters I encountered during the years allowed me to refine my theories. Furthermore, I am grateful to anonymous reviewers for some useful comments on my research papers. -

Impossibility and Uncertainty Theorems in AI Value Alignment

Impossibility and Uncertainty Theorems in AI Value Alignment or why your AGI should not have a utility function Peter Eckersley Partnership on AI & EFF [email protected] Abstract been able to avoid that problem in the first place are a tiny por- tion of possibility space, and one that has arguably received Utility functions or their equivalents (value functions, objec- 1 tive functions, loss functions, reward functions, preference a disproportionate amount of attention. orderings) are a central tool in most current machine learning There are, however, other kinds of AI systems for which systems. These mechanisms for defining goals and guiding ethical discussions about the value of lives, about who should optimization run into practical and conceptual difficulty when exist or keep existing, are more genuinely relevant. The clear- there are independent, multi-dimensional objectives that need est category is systems that are designed to make decisions to be pursued simultaneously and cannot be reduced to each affecting the welfare or existence of people in the future, other. Ethicists have proved several impossibility theorems rather than systems that only have to make such decisions in that stem from this origin; those results appear to show that highly atypical scenarios. Examples of such systems include: there is no way of formally specifying what it means for an outcome to be good for a population without violating strong • autonomous or semi-autonomous weapons systems (which human ethical intuitions (in such cases, the objective -

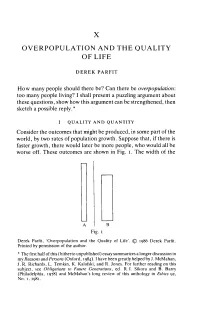

Overpopulation and the Quality of Life’

X OVERPOPULATION AND THE QUALITY OF LI FE DEREK PARFIT How many people should there be? Can there be overpopulation: too many people living? I shall present a puzzling argument about these questions, show how this argument can be strengthened, then sketch a possible reply.* I QUALITY AND QUANTITY Consider the outcomes that might be produced, in some part of the world, by two rates of population growth. Suppose that, if there is faster growth, there would later be more people, who would all be worse off. These outcomes are shown in Fig. i . The width of the Fig. i Derek Parfit, ‘Overpopulation and the Quality of Life’. © 1986 Derek Parfit. Printed by permission of the author. * The first half of this (hitherto unpublished) essay summarizes a longer discussion in my Reasons and Persons (Oxford, 1984). Ihave been greatly helped by J. McMahan, J. R. Richards, L. Temkin, K. Kalafski, and R. Jones. For further reading on this subject, see Obligations to Future Generations, ed. R. I. Sikora and B. Barry (Philadelphia, 1978) and McMahan's long review of this anthology in Ethics 92, No. 1, 1981. 146 DEREK PARFIT blocks shows the number of people living; the height shows how well off these people are. Compared with outcome A, outcome B would have twice as many people, who would all be worse off. To avoid irrelevant complications, I assume that in each outcome there would be no inequality: no one would be worse off than anyone else. I also assume that everyone’s life would be well worth living.