TURL: Table Understanding Through Representation Learning

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

1. Aabol Taabol Roy, Sukumar Kolkata: Patra Bharati 2003; 48P

1. Aabol Taabol. Roy, Sukumar. Kolkata: Patra Bharati, 2003; 48p. Rs.30. The famous rhyme collection of Bengali literature.
2. Aabol Taabol. Roy, Sukumar. Kolkata: National Book Agency, 2003; 60p. Rs.30. The most popular Bengali rhymes ever written.
3. Aabol Taabol. Roy, Sukumar. Kolkata: Dey's, 1990; 48p. Rs.10. The most famous rhyme collection of Bengali literature.
4. Aachin Paakhi. Dutta, Asit. Nikhil Bharat Shishu Sahitya, 2002; 48p. Rs.30. Eight stories, all bordering on humour, by a popular writer.
5. Aadhikar ke kake dei. Mukhophaya, Sutapa. Kolkata: A 'N' E Publishers, 1999; 28p. Rs.16. 8185136637. This book intends to inform readers of their rights and how to claim them.
6. Aagun - Pakhir Rahasya. Gangopadhyay, Sunil. Kolkata: Ananda Publishers, 1996; 119p. Rs.30. 8172153198. One of the most famous detective stories, together with a compilation of other fun stories.
7. Aajgubi Galpo. Bardhan, Adrish (ed.). Orient Longman, 1989; 117p. Rs.12. 861319699. A volume of interesting stories and detective stories by Adrish Bardhan.
8. Aamar banabas. Chakraborty, Amrendra. Swarnakhar Prakashani, 1993; 24p. Rs.12. Pleasant poetry for children written by Amarendra Chakraborty.
9. Aamar boi. Mitra, Premendra. Orient Longman, 1988; 40p. Rs.6. 861318080. Amar Boi is a famous primer-cum-beginner's book written by Premendra Mitra.
10. Aat Rahasya. Phukan, Bandita. New Delhi: Fantastic; 168p. Rs.27. A collection of eight humour and mystery stories.
12. Aatbhuture. Mitra, Khagendranath. Kolkata: Ashok Prakashan, 1996; 140p. Rs.25. A collection of detective stories full of wonder and surprise.
13. Abak Jalpan lakshmaner shaktishel jhalapala. Ray, Kumar. Kolkata: National Book Agency, 2003; 58p.

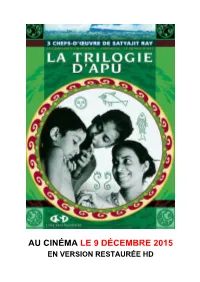

Satyajit Ray

IN CINEMAS 9 DECEMBER 2015 IN A RESTORED HD VERSION. Galeshka Moravioff presents THE APU TRILOGY (LA TRILOGIE D'APU): 3 MASTERPIECES BY SATYAJIT RAY.

LA COMPLAINTE DU SENTIER (PATHER PANCHALI - 1955), Prix du document humain - Festival de Cannes 1956. L'INVAINCU (APARAJITO - 1956), Golden Lion - Venice Film Festival 1957. LE MONDE D'APU (APUR SANSAR - 1959). Music by RAVI SHANKAR.

Photos and press kit downloadable at www.films-sans-frontieres.fr/trilogiedapu. Press and distribution: FILMS SANS FRONTIERES, Christophe CALMELS, 70, bd Sébastopol - 75003 Paris. Tel: 01 42 77 01 24 / 06 03 32 59 66. Fax: 01 42 77 42 66. Email: [email protected]

LA COMPLAINTE DU SENTIER (PATHER PANCHALI): SYNOPSIS

In a small village in Bengal, around 1910, Apu, a 7-year-old boy, lives in poverty with his family in the ancestral home. His father, taking refuge in his literary ambitions, lets his family sink into destitution. Apu then discovers the world, with its bereavements and its celebrations, its joys and its tragedies. Without ever sinking into despair, the childhood of the hero of the Apu Trilogy is told with moving simplicity. At once contemplative and realistic, this film is an enchantment thanks to the sincerity of the actors, the splendour of the cinematography and the beauty of Ravi Shankar's music. The revelation of the 1956 Cannes Film Festival, La Complainte du sentier (Pather Panchali) was a considerable success as soon as it was released. Proving that another kind of cinema, far from the big Hindi productions, was possible in India, the film also introduced the whole world to a major and now essential auteur: Satyajit Ray.

Download (Binaural and Ambisonics B-Format Files)

Cover Page. The handle http://hdl.handle.net/1887/47914 holds various files of this Leiden University dissertation. Author: Chattopadhyay, B. Title: Audible absence: searching for the site in sound production. Issue Date: 2017-03-09.

Part II: Articles. The following 6 articles are published in this dissertation in their original form (i.e. as they were published, accepted or submitted in peer-reviewed journals). I have chosen to insert short postscripts or comments on the first page of each article and besides added some comments here and there inside the articles on the basis of new insights gained throughout my research process, serving as clarification or to critically comment on them and connect them to each other as well as to the topics discussed in the Introduction and Conclusion. These blue and green-colored postscripts establish the context in which the articles can be considered part of the main body of research for this dissertation.

Article 1: Chattopadhyay, Budhaditya (2017). “The World Within the Home: Tracing the Sound in Satyajit Ray’s Films.” Music, Sound, and the Moving Image Autumn issue (Accepted). This article deals with the first historical phase of sound production in India, as explained in the Introduction, namely: analogue recording, synchronized sound, and monaural mixing (1931–1950s). Satyajit Ray emerged during this period and made full use of these techniques; hence, his work with sound is used as a benchmark here when studying this specific period of sound production. The article examines the use of ambient sound in the early years of film sound production, highlighting two differing attitudes, the first markedly vococentric and music-oriented, the second applying a more direct sound aesthetics to create a mode of realism.

Code Date Description Channel TV001 30-07-2017 & JARA HATKE STAR Pravah TV002 07-05-2015 10 ML LOVE STAR Gold HD TV003 05-02

| Code | Date | Description | Channel |
|---|---|---|---|
| TV001 | 30-07-2017 | & JARA HATKE | STAR Pravah |
| TV002 | 07-05-2015 | 10 ML LOVE | STAR Gold HD |
| TV003 | 05-02-2018 | 108 TEERTH YATRA | Sony Wah |
| TV004 | 07-05-2017 | 1234 | Zee Talkies HD |
| TV005 | 18-06-2017 | 13 NO TARACHAND LANE | Zee Bangla HD |
| TV006 | 27-09-2015 | 13 NUMBER TARACHAND LANE | Zee Bangla Cinema |
| TV007 | 25-08-2016 | 2012 RETURNS | Zee Action |
| TV008 | 02-07-2015 | 22 SE SHRAVAN | Jalsha Movies |
| TV009 | 04-04-2017 | 22 SE SRABON | Jalsha Movies HD |
| TV010 | 24-09-2016 | 27 DOWN | Zee Classic |
| TV011 | 26-12-2018 | 27 MAVALLI CIRCLE | Star Suvarna Plus |
| TV012 | 28-08-2016 | 3 AM THE HOUR OF THE DEAD | Zee Cinema HD |
| TV013 | 04-01-2016 | 3 BAYAKA FAJITI AIKA | Zee Talkies |
| TV014 | 22-06-2017 | 3 BAYAKA FAJITI AIYKA | Zee Talkies |
| TV015 | 21-02-2016 | 3 GUTTU ONDHU SULLU ONDHU | Star Suvarna |
| TV016 | 12-05-2017 | 3 GUTTU ONDU SULLU ONDU NIJA | Star Suvarna Plus |
| TV017 | 26-08-2017 | 31ST OCTOBER | STAR Gold Select HD |
| TV018 | 25-07-2015 | 3G | Sony MIX |
| TV019 | 01-04-2016 | 3NE CLASS MANJA B COM BHAGYA | Star Suvarna |
| TV020 | 03-12-2015 | 4 STUDENTS | STAR Vijay |
| TV021 | 04-08-2018 | 400 | Star Suvarna Plus |
| TV022 | 05-11-2015 | 5 IDIOTS | Star Suvarna Plus |
| TV023 | 27-02-2017 | 50 LAKH | Sony Wah |
| TV024 | 13-03-2017 | 6 CANDLES | Zee Tamil |
| TV025 | 02-01-2016 | 6 MELUGUVATHIGAL | Zee Tamil |
| TV026 | 05-12-2016 | 6 TA NAGAD | Jalsha Movies |
| TV027 | 10-01-2016 | 6-5=2 | Star Suvarna |
| TV028 | 27-08-2015 | 7 O CLOCK | Zee Kannada |
| TV029 | 02-03-2016 | 7 SAAL BAAD | Sony Pal |
| TV030 | 01-04-2017 | 73 SHAANTHI NIVAASA | Zee Kannada |
| TV031 | 04-01-2016 | 73 SHANTI NIVASA | Zee Kannada |
| TV032 | 09-06-2018 | 8 THOTAKKAL | STAR Gold HD |
| TV033 | 28-01-2016 | 9 MAHINE 9 DIWAS | Zee Talkies |
| TV034 | 10-02-2018 | A | Zee Kannada |
| TV035 | 20-08-2017 | | |

Pather Panchali Satyajit RAY - India - 1955

"Pour Krishna" cycle, 1/3. Pather Panchali, Satyajit RAY - India - 1955

Credits
Screenplay: Satyajit Ray, based on the novel Pather Panchali by Bibhutibhushan Bandopadhyay
Director of photography: Subrata Mitra
Art direction: Bansi Chandragupta
Music: Ravi Shankar
Editing: Dulal Dutta
Production: Satyajit Ray
Distribution: Parafrance films
Cast: Karuna Bandyopadhyay (Sarbojaya Ray, mother of Durga and Apu), Kanu Banerjee (Harihar Ray, the father), Uma Das Gupta (Durga Ray), Subir Bannerjee (Apurba "Apu" Ray), Chunibala Devi (Indir, the elderly relative)
Running time: 115 min
Release in India: 26 August 1955
Prix du document humain, Cannes 1956
Release in France: 16 March 1960

Review and commentary

[…] It is through the eyes of Apu, a boisterous and wild little boy of seven, that we discover the small events, by turns dramatic and comical, that make up the fabric of the days, the seasons, the years. Yet Pather Panchali is in no way a children's film. On the contrary, it is a powerfully crafted work whose purity, simplicity and calm lucidity belong to the realm of meditation. Through his feeling for nature, not at all romantic but mystical, and through that Virgilian poetry which so well ties beings and things to the ancestral land, Satyajit Ray shows himself in Pather Panchali to be worthy of the great Flaherty. But in his evocation of the Indian world of today, an evocation in which restraint of expression lends still greater value to whatever is moving in certain images, the director bears witness to his admiration (an admiration publicly affirmed on several occasions) for Vittorio de Sica. And from this double current, contemplative and realistic, arises a charm that seems to me difficult to resist.

DETECTIVE FICTION Feluda Is One of Satyajit Ray's Greatest Creations

DETECTIVE FICTION Feluda is one of Satyajit Ray’s greatest creations but is he too brilliant for the movies? Although Ray’s version of ‘Sonar Kella’ is a fan favourite, ‘Joi Baba Felunath’ points to the perils of adaptation. Nandini Ramnath Jun 07, 2021 · 10:30 am Soumitra Chatterjee in Joi Baba Felunath (1979) | RD Bansal Productions Satyajit Ray’s novel The Mystery of the Elephant God begins with a peek into its creator’s precise mind. The private detective Feluda berates the hyperbole-prone novelist Jatayu for describing the Durga puja celebrations in Varanasi as “spectacular”. The word is banal and doesn’t explain why the event is important, Feluda tells Jatayu. When Jatayu elaborates, “I still remember my eyes and ears being dazzled by what I saw,” Feluda finally approves. This description appeals to the senses, he says. Ray adapted the book into a movie in 1979. Joi Baba Felunath was Ray’s second Feluda adaptation after Sonar Kella. In both films, the same set of actors played the casually sexy sleuth, his loyal cousin and sidekick Topshe and their hilarious pulp fiction writer friend Jatayu. Giving Soumitra Chatterjee, Siddhartha Chatterjee and Santosh Dutta company in Joi Baba Felunath was Utpal Dutt as the sinister Maganlal Meghraj. The Bengali-language movie, which is being streamed on Mubi India, is entertaining enough. Yet, it underscores the conundrum of a brilliant director unable to keep up with his brilliant writing. Ray cut out the plot points that were possibly difficult to film and changed the ending, weakening the mystery in the process. -

Satyajit Ray 1921-1992 Léo Bonneville

Document generated on 09/27/2021 1:34 p.m.

Séquences: La revue de cinéma. Satyajit Ray 1921-1992. Léo Bonneville. Number 161, November 1992. URI: https://id.erudit.org/iderudit/50140ac. Publisher(s): La revue Séquences Inc. ISSN 0037-2412 (print), 1923-5100 (digital).

Cite this article: Bonneville, L. (1992). Satyajit Ray 1921-1992. Séquences, (161), 42–43.

All rights reserved © La revue Séquences Inc., 1992. This document is protected by copyright law. Use of the services of Érudit (including reproduction) is subject to its terms and conditions, which can be viewed online. https://apropos.erudit.org/en/users/policy-on-use/ This article is disseminated and preserved by Érudit. Érudit is a non-profit inter-university consortium of the Université de Montréal, Université Laval, and the Université du Québec à Montréal. Its mission is to promote and disseminate research. https://www.erudit.org/en/

TRIBUTE: Satyajit Ray 1921-1992

Until he made The Chess Players (Joueurs d'échecs, 1977), all of Satyajit Ray's films were in Bengali. As a result, the majority of critics in his own country (where eighteen languages are spoken) did not understand them. Why? His films were not subtitled at home. Ray concluded: "It is commonplace in India to be anti-Ray." Language was not the only problem. There was also the picture the filmmaker painted of his country.

[…] classical per month. Then he delights in reading scores, which he makes his bedside books. From 1961 on, he would compose the music for his films.

Satyajit Ray's the Adventures of Feluda the Golden Fortress Study

Satyajit Ray’s The Adventures of Feluda: The Golden Fortress. Study Material-I

About the writer: Satyajit Ray was not only a great filmmaker and musical composer, he was also a prolific author of popular fiction. He was particularly interested in detective fiction, and he published 17 novels and 18 additional stories featuring his prudent private investigator Feluda, who served for Ray as his Sherlock Holmes. Over his career Ray made only three detective movies: The Zoo (Chiriyakhana, 1967), The Golden Fortress (Sonar Kella, 1974), and Joi Baba Felunath: The Elephant God (Joi Baba Felunath, 1979), the latter two of which were based on his Feluda novels. Nevertheless, these three films were all hits with the public, particularly The Golden Fortress.

The story of The Golden Fortress concerns events surrounding a young boy’s memories, or dreams, of a past life when he supposedly lived in a golden fortress. In particular, the boy’s vivid memories of seeing many jewels there inspire some criminals to kidnap him in hopes that he will lead them to a lost treasure. Ray has fashioned this tale as something of a family-oriented adventure, using typecast characters, comic elements, and some exotic backgrounds to liven up the proceedings.

The Adventures of Feluda:

• The Adventures of Feluda stories were written with a younger audience in mind.
• Ray wrote the first Feluda story, Feludar Goendagiri (Danger in Darjeeling), in 1965 while reviving an old family magazine, Sandesh, and because of its popularity he went on to write thirty-four works in all.
• It features a private investigator, Prodosh/Pradosh Mitter (the anglicized version of Mitra), known as Feluda.

The Heroic Laughter of Modernity: The Life, Cinema and Afterlife of a Bengali Matinee Idol

Articles. The Heroic Laughter of Modernity: The life, cinema and afterlife of a Bengali matinee idol. By Sayandeb Chowdhury. Copyright Intellect 2012. Do not distribute.

Below: Nayak: The Hero (1966)

Keywords: Uttam Kumar, Bengali cinema, Calcutta, arthouse cinema, popular cinema, stardom, ‘posthumous value’, party politics

you see Uttam Kumar? In a two-hour film that centers around him, he is perfect from every angle, in every scene. The story, the script, the making is mine. But Uttam made it his own with the charisma and effortlessness that only an actor of his caliber could do. Discerning viewers of cinema can identify the difference between good performance extolled by the filmmaker and performance that is endowed with the gifts intrinsic to the performer. And I can vouch for the fact that Uttam excelled in the second. I find no fault with him as an actor […] There is none like him and there will be no one to ever replace him. He was and he is unparalleled in Bengali, even Indian cinema. Satyajit Ray (1980)

May 6th, July 1966. The previous evening Satyajit Ray, the world-feted arthouse director of Bengali cinema, called up Uttam Kumar, Bengali matinee idol and the protagonist of his new film Nayak: The Hero (Nayak, 1966). ‘Uttam, tomorrow is the premiere of Nayak at Indira Cinema. I hope you will be there,’ Ray said. ‘But Manikda, [Ray’s nickname that everyone close to him called him by] you know how it is. The press and public will be there.

Hollywood may have lost much of its enchantment, but the God and heroes it cre-

Byomkesh Bakshi Bengali Story Pdf

This article is about a fictional character. For other uses, see Byomkesh Bakshi (disambiguation).

Byomkesh Bakshi: fictional detective in Bengali literature
First appearance: Satyanweshi
Last appearance: Bishupal Bodh
Created by: Sharadindu Bandyopadhyay
Portrayed by: Uttam Kumar, Ajoy Ganguli, Shyamal Ghosal, Rajit Kapur, Sudip Mukherjee, Aneesh See Yay, Saptarshi Roy, Subhrajit Dutta, Gaurav Chakrabarty, Anirban Bhattacharya, Jisshu Sengupta, Abir Chatterjee, Parambrata Chatterjee, Dhritiman Chatterjee, Sushant Singh Rajput
In-universe information
Title: Satyanweshi
Occupation: Private investigator
Family: Satyabati (wife), Khoka (son), Ajit Kumar Banerjee (right hand and writer)
Nationality: Indian

Byomkesh Bakshi is an Indian-Bengali fictional detective created by Sharadindu Bandyopadhyay. Referring to himself as a truth-seeker in the stories, Bakshi is known for his powers of observation, his logical reasoning, and the forensic science he uses to solve complex cases, usually murders. The character has often been called the Indian version of Sherlock Holmes. After his first appearance in the 1932 story Satyanweshi, the character's popularity rose immensely in Bengal and other parts of India. He gained huge popularity across the Indian subcontinent with the film Chiriyakhana, directed by Satyajit Ray from a screenplay by Sharadindu himself, with Uttam Kumar portraying Byomkesh. The name Byomkesh has since entered the Bengali language to describe someone who is intelligent and attentive. It is also used sarcastically to mean someone who states the obvious.

Byomkesh Bakshi, Sharadindu Bandyopadhyay's most well-known fictional character, first appeared in the story Satyanweshi (The Inquisitor). The story is set in 1932 in the Chinabazar area of Kolkata, where the 'non-governmental detective' Byomkesh Bakshi, with the approval of the police commissioner, begins living in a boarding house (mess) in the area under the pseudonym Atul Chandra Niyogi to probe a series of murders.

6, RAM GOPAL GHOSH ROAD, COSSIPORE, KOLKATA-700 002 (A Self Financed Govt

2019-20. 6, RAM GOPAL GHOSH ROAD, COSSIPORE, KOLKATA-700 002 (A Self Financed Govt. approved Minority Institution run under the auspices of Shree S. S. Jain Sabha) (Accredited by NAAC with ‘B+’ Grade (1st Cycle)). Resonance Annual Magazine 2019-20

Contents

- Message from the President Governing Body
- Message from the Secretary’s Desk
- Message from the Principal’s Desk
- Message From the Editor’s Desk
- A Glorious Journey of 90 years
- “Illuminating the path of Academics: Dr. Kiran Sipani's Inspiring Journey”
- Cover Story: Sleuths of Bengal. Prof. Swara Thacker
- Unconventional Career Choices. Prof. Swara Thacker & Prof. Deepayan Datta
- Representation of Disability in Indian daily soaps and their Dimension. Prof Anindita Chattopadhyay
- The long awaited family trip to Balasore and Puri. Prof. Ankita Ganguly
- Last Dream. Souhardya Chatterjee
- WITCHCRAFT. Prof. Oindrila Sengupta
- Will Newspaper Survive In Future? Prof.
- Bengali-language pieces (titles not recoverable from the extraction), by: Trisha Sil; Anirban Bhattacharya; Satadeepa Das; Prof. Suvadip Das; Sandeepan Pal; Saptarshi Mandal; Trisha Sil; Mousami Banerjee; Prof. Sreeja Ghosh; Dr. Sudipta Mitra; Subhdeep Das; Saranya Trivedi; Sandeepan Pal
- Picture Gallery
- Cross Word
- BRAIN TEASERS
- Cross Word Solution
- BRAIN TEASERS: ANSWERS

The Home and the World Pdf, Epub, Ebook

THE HOME AND THE WORLD. Rabindranath Tagore, Surendranath Tagore, Anita Desai, William Radice | 240 pages | 26 Apr 2005 | Penguin Books Ltd | 9780140449860 | English | London, United Kingdom

Bimala is swept away by Sandip. To clutch hold of that which is untrue as though it were true, is only to throttle oneself. The real story of the movie takes place, meanwhile, within Bimala's heart and mind. But the sun rises and the darkness is dispelled; a moment is sufficient to overcome an infinite distance. Have I not been praying with all my strength, that if happiness may not be mine, let it go; if grief needs must be my lot, let it come; but let me not be kept in bondage. Nikhil truly wants what is good for the country. Chiriakhana or Chiriyakhana is a Bengali film by Satyajit Ray. Can there be any real happiness for a woman in merely feeling that she has power over a man? Nothing can be compared to it, nothing at all! Therefore the joy of the higher equality remains permanent; it never slides down to the vulgar level of triviality. His passion and his politics are a contrast to her quiet, passive husband. Roger Ebert was the film critic of the Chicago Sun-Times from until his death in It is the moon which has room for stains, not the stars. He is the one who refuses to be pressured into a premature embrace of Swadeshi, knowing that it is unrealistic and would do more economic harm than good.