Enhancing Automatic ICD-9-CM Code Assignment for Medical Texts with PubMed



Recommended publications

-

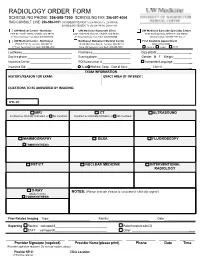

Radiology Order Form

RADIOLOGY ORDER FORM SCHEDULING PHONE: 206-598-7200 SCHEDULING FAX: 206-597-4004 RAD CONSULT LINE: 206-598-0101 UW RADIOLOGY RECORDS: Tel: 206-598-6206 Fax: 206-598-7690 NW RADIOLOGY RECORDS: Tel: 206-668-1748 Fax: 206-688-1398 UW Medical Center - Montlake UW Medicine Roosevelt Clinic UW Medicine Eastside Specialty Center 1959 NE Pacific Street, Seattle, WA 98195 4245 Roosevelt Way NE, Seattle, WA 98105 3100 Northup Way, Bellevue, WA 98004 2nd Floor Radiology Front Desk: 206-598-6200 2nd Floor Radiology Front Desk: 206-598-6868 ESC Front Desk: 425-646-7777 Opt. 2 UW Medical Center - Northwest Northwest Outpatient Medical Center First Available Appointment 1550 N 115th St, Seattle, WA 98133 10330 Meridian Ave N, Seattle, WA 98133 (ANY LOCATION) 2nd Floor Radiology Front Desk: 206-668-1302 Suite 130 Radiology Front Desk: 206-668-6050 Routine Urgent STAT Last Name: First Name: Date of Birth: _ Daytime phone: Evening phone: Gender: M F Weight:___________ Insurance Carrier: RQI/Authorization #: Interpreter/Language: __ Insurance ID#: Auto Workers’ Comp Date of Injury: ______ Claim # __ EXAM INFORMATION HISTORY/REASON FOR EXAM: EXACT AREA OF INTEREST: EXAM INFORMATION QUESTIONS TO BE ANSWERED BY IMAGING: ICD-10: MRI CT ULTRASOUND Contrast as clinically indicated, or No Contrast Contrast as clinically indicated, or No Contrast MAMMOGRAPHY DEXA FLUOROSCOPY TOMOSYNTHESIS PET/CT NUCLEAR MEDICINE INTERVENTIONAL RADIOLOGY X-RAY NOTES: (Please indicate if exam is considered “clinically urgent”) (Walk-In Only) TOMOSYNTHESIS Prior Related Imaging Type:_________________________ Facility:_________________________ Date:___________________ Reporting Routine call report # Patient to return with CD STAT call report # Other: __________ ________________________ ______________________ ____________ _______ ______ Provider Signature (required) Provider Name (please print) Phone Date Time (Provider signature required. -

RADIOGRAPHY to Prepare Individuals to Become Registered Radiologic Technologists

RADIOGRAPHY To prepare individuals to become Registered Radiologic Technologists. THE WORKFORCE CAPITAL This two-year, advanced medical program trains students in radiography. Radiography uses radiation to produce images of tissues, organs, bones and vessels of the body. The radiographer is an essential member of the health care team who works in a variety of settings. Responsibilities include accurately positioning the patient, producing quality diagnostic images, maintaining equipment and keeping computerized records. This certificate program of specialized training focuses on each of these responsibilities. Graduates are eligible to apply for the national credential examination to become a registered technologist in radiography, RT(R). Contact Student Services for current tuition rates and enrollment information. 580.242.2750 Mission, Goals, and Student Learning Outcomes Program Effectiveness Data Radiography Program Guidelines (Policies and Procedures) “The programs at Autry prepare you for the workforce with no extra training needed after graduation.” – Kenedy S. autrytech.edu ENDLESS POSSIBILITIES 1201 West Willow | Enid, Oklahoma, 73703 | 580.242.2750 | autrytech.edu COURSE LENGTH Twenty-four-month daytime program | August-July | Monday-Friday Academic hours: 8:15am-3:45pm Clinical hours: Eight-hour shifts between 7am-5pm with some evening assignments required ADMISSION PROCEDURES Applicants should contact Student Services at Autry Technology Center to request an information/application packet. Applicants who have a completed application on file and who have met entrance requirements will be considered for the program. Meeting the requirements does not guarantee admission to the program. Qualified applicants will be contacted for an interview, and class selection will be determined by the admissions committee. ADULT IN-DISTRICT COSTS Year One: $2732 (Additional cost of books and supplies approx: $1820) -

Acr–Nasci–Sir–Spr Practice Parameter for the Performance and Interpretation of Body Computed Tomography Angiography (Cta)

The American College of Radiology, with more than 30,000 members, is the principal organization of radiologists, radiation oncologists, and clinical medical physicists in the United States. The College is a nonprofit professional society whose primary purposes are to advance the science of radiology, improve radiologic services to the patient, study the socioeconomic aspects of the practice of radiology, and encourage continuing education for radiologists, radiation oncologists, medical physicists, and persons practicing in allied professional fields. The American College of Radiology will periodically define new practice parameters and technical standards for radiologic practice to help advance the science of radiology and to improve the quality of service to patients throughout the United States. Existing practice parameters and technical standards will be reviewed for revision or renewal, as appropriate, on their fifth anniversary or sooner, if indicated. Each practice parameter and technical standard, representing a policy statement by the College, has undergone a thorough consensus process in which it has been subjected to extensive review and approval. The practice parameters and technical standards recognize that the safe and effective use of diagnostic and therapeutic radiology requires specific training, skills, and techniques, as described in each document. Reproduction or modification of the published practice parameter and technical standard by those entities not providing these services is not authorized. Revised 2021 (Resolution 47)* ACR–NASCI–SIR–SPR PRACTICE PARAMETER FOR THE PERFORMANCE AND INTERPRETATION OF BODY COMPUTED TOMOGRAPHY ANGIOGRAPHY (CTA) PREAMBLE This document is an educational tool designed to assist practitioners in providing appropriate radiologic care for patients. 
Practice Parameters and Technical Standards are not inflexible rules or requirements of practice and are not intended, nor should they be used, to establish a legal standard of care. -

2019 Radiology Cpt Codes

2019 RADIOLOGY CPT CODES BONE DENSITOMETRY 1 Bone Density/DEXA 77080 CT 1 CT Abd & Pelvis W/ Contrast 74177 1 CT Enterography W/ Contrast 74177 1 CT Max/Facial W/O Contrast 70486 # CT Sinus Complete W/O Contrast 70486 1 CT Abd & Pelvis W W/O Contrast 74178 1 CT Extremity Lower W/ Contrast 73701 1 CT Neck W/ Contrast 70491 # CT Sinus Limited W/O Contrast 76380 1 CT Abd & Pelvis W/O Contrast 74176 1 CT Extremity Lower W/O Contrast 73700 1 CT Neck W/O Contrast 70490 # CT Spine Cervical W/ Contrast 72126 1 CT Abd W/ Contrast 74160 1 CT Extremity Upper W/ Contrast 73201 1 CT Orbit/ IAC W/ Contrast 70481 # CT Spine Cervical W/O Contrast 72125 1 CT Abd W/O Contrast 74150 1 CT Extremity Upper W/O Contrast 73200 1 CT Orbit/ IAC W/O Contrast 70480 # CT Spine Lumbar W/ Contrast 72132 1 CT Abd W W/O Contrast 74170 1 CT Head W/ Contrast 70460 1 CT Orbit/ IAC W W/O Contrast 70482 # CT Spine Lumbar W/O Contrast 72131 1 CT Chest W/ Contrast 71260 1 CT Head W/O Contrast 70450 1 CT Pelvis W/ Contrast 72193 # CT Spine Thoracic W/ Contrast 72129 1 CT Chest W/O Contrast 71250 1 CT Head W W/O Contrast 70470 1 CT Pelvis W/O Contrast 72192 # CT Spine Thoracic W/O Contrast 72128 1 CT Chest W W/O Contrast 71270 1 CT Max/Facial W/ Contrast 70487 1 CT Pelvis W W/O Contrast 72194 # CT Stone Protocol W/O Contrast 74176 CTA 1 Cardiac Calcium Score only 75571 1 CT Angiogram Abd & Pelvis W W/O Contrast 74174 1 CT Angiogram Head W W/O Contrast 70496 # CT / CTA Heart W Contrast 75574 1 CT Angiogram Abdomen W W/O Contrast 74175 1 CT Angiogram Chest W W/O Contrast 71275 -

Study Guide Medical Terminology by Thea Liza Batan About the Author

Study Guide Medical Terminology By Thea Liza Batan About the Author Thea Liza Batan earned a Master of Science in Nursing Administration in 2007 from Xavier University in Cincinnati, Ohio. She has worked as a staff nurse, nurse instructor, and level department head. She currently works as a simulation coordinator and a freelance writer specializing in nursing and healthcare. All terms mentioned in this text that are known to be trademarks or service marks have been appropriately capitalized. Use of a term in this text shouldn’t be regarded as affecting the validity of any trademark or service mark. Copyright © 2017 by Penn Foster, Inc. All rights reserved. No part of the material protected by this copyright may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage and retrieval system, without permission in writing from the copyright owner. Requests for permission to make copies of any part of the work should be mailed to Copyright Permissions, Penn Foster, 925 Oak Street, Scranton, Pennsylvania 18515. Printed in the United States of America CONTENTS INSTRUCTIONS 1 READING ASSIGNMENTS 3 LESSON 1: THE FUNDAMENTALS OF MEDICAL TERMINOLOGY 5 LESSON 2: DIAGNOSIS, INTERVENTION, AND HUMAN BODY TERMS 28 LESSON 3: MUSCULOSKELETAL, CIRCULATORY, AND RESPIRATORY SYSTEM TERMS 44 LESSON 4: DIGESTIVE, URINARY, AND REPRODUCTIVE SYSTEM TERMS 69 LESSON 5: INTEGUMENTARY, NERVOUS, AND ENDOCRINE SYSTEM TERMS 96 SELF-CHECK ANSWERS 134 INSTRUCTIONS INTRODUCTION Welcome to your course on medical terminology. You’re taking this course because you’re most likely interested in pursuing a health and science career, which entails proficiency in communicating with healthcare professionals such as physicians, nurses, or dentists. -

X-Ray (Radiography) - Bone Bone X-Ray Uses a Very Small Dose of Ionizing Radiation to Produce Pictures of Any Bone in the Body

X-ray (Radiography) - Bone Bone x-ray uses a very small dose of ionizing radiation to produce pictures of any bone in the body. It is commonly used to diagnose fractured bones or joint dislocation. Bone x-rays are the fastest and easiest way for your doctor to view and assess bone fractures, injuries and joint abnormalities. This exam requires little to no special preparation. Tell your doctor and the technologist if there is any possibility you are pregnant. Leave jewelry at home and wear loose, comfortable clothing. You may be asked to wear a gown. What is Bone X-ray (Radiography)? An x-ray exam helps doctors diagnose and treat medical conditions. It exposes you to a small dose of ionizing radiation to produce pictures of the inside of the body. X-rays are the oldest and most often used form of medical imaging. A bone x-ray makes images of any bone in the body, including the hand, wrist, arm, elbow, shoulder, spine, pelvis, hip, thigh, knee, leg (shin), ankle or foot. What are some common uses of the procedure? A bone x-ray is used to: diagnose fractured bones or joint dislocation. demonstrate proper alignment and stabilization of bony fragments following treatment of a fracture. guide orthopedic surgery, such as spine repair/fusion, joint replacement and fracture reductions. look for injury, infection, arthritis, abnormal bone growths and bony changes seen in metabolic conditions. assist in the detection and diagnosis of bone cancer. locate foreign objects in soft tissues around or in bones. How should I prepare? Most bone x-rays require no special preparation. -

ACR Manual on Contrast Media

ACR Manual on Contrast Media 2021 ACR Committee on Drugs and Contrast Media © Copyright 2021 American College of Radiology ISBN: 978-1-55903-012-0 TABLE OF CONTENTS Topic Page 1. Preface 1 2. Version History 2 3. Introduction 4 4. Patient Selection and Preparation Strategies Before Contrast Medium Administration 5 5. Fasting Prior to Intravascular Contrast Media Administration 14 6. Safe Injection of Contrast Media 15 7. Extravasation of Contrast Media 18 8. Allergic-Like And Physiologic Reactions to Intravascular Iodinated Contrast Media 22 9. Contrast Media Warming 29 10. Contrast-Associated Acute Kidney Injury and Contrast Induced Acute Kidney Injury in Adults 33 11. Metformin 45 12. Contrast Media in Children 48 13. Gastrointestinal (GI) Contrast Media in Adults: Indications and Guidelines 57 14. ACR–ASNR Position Statement On the Use of Gadolinium Contrast Agents 78 15. Adverse Reactions To Gadolinium-Based Contrast Media 79 16. Nephrogenic Systemic Fibrosis (NSF) 83 17. Ultrasound Contrast Media 92 18. Treatment of Contrast Reactions 95 19. Administration of Contrast Media to Pregnant or Potentially Pregnant Patients 97 20. Administration of Contrast Media to Women Who are Breast-Feeding 101 Table 1 – Categories Of Acute Reactions 103 Table 2 – Treatment Of Acute Reactions To Contrast Media In Children 105 Table 3 – Management Of Acute Reactions To Contrast Media In Adults 114 Table 4 – Equipment For Contrast Reaction Kits In Radiology 122 Appendix A – Contrast Media Specifications 124 PREFACE This edition of the ACR Manual on Contrast Media replaces all earlier editions. -

The Costs and Benefits of Moving to the ICD-10 Code Sets

This PDF document was made available from www.rand.org as a public service of the RAND Corporation. The RAND Corporation is a nonprofit research organization providing objective analysis and effective solutions that address the challenges facing the public and private sectors around the world. CHILDREN AND ADOLESCENTS | CIVIL JUSTICE | EDUCATION | ENERGY AND ENVIRONMENT | HEALTH AND HEALTH CARE | INTERNATIONAL AFFAIRS | POPULATION AND AGING | PUBLIC SAFETY | SCIENCE AND TECHNOLOGY | SUBSTANCE ABUSE | TERRORISM AND HOMELAND SECURITY | TRANSPORTATION AND INFRASTRUCTURE | U.S. NATIONAL SECURITY Support RAND Purchase this document Browse Books & Publications Make a charitable contribution For More Information Visit RAND at www.rand.org Explore RAND Science and Technology View document details Limited Electronic Distribution Rights This document and trademark(s) contained herein are protected by law as indicated in a notice appearing later in this work. This electronic representation of RAND intellectual property is provided for non-commercial use only. Permission is required from RAND to reproduce, or reuse in another form, any of our research documents for commercial use. This product is part of the RAND Corporation technical report series. Reports may include research findings on a specific topic that is limited in scope; present discussions of the methodology employed in research; provide literature reviews, survey instruments, modeling exercises, guidelines for practitioners and research professionals, and supporting documentation; -

Diagnostic Radiology Physics Diagnostic This Publication Provides a Comprehensive Review of Topics Relevant to Diagnostic Radiology Physics

Diagnostic Radiology Physics: A Handbook for Teachers and Students This publication provides a comprehensive review of topics relevant to diagnostic radiology physics. It is intended to provide the basis for the education of medical physicists in the field of diagnostic radiology. Bringing together the work of 41 authors and reviewers from 12 countries, the handbook covers a broad range of topics including radiation physics, dosimetry and instrumentation, image quality and image perception, imaging modality specific topics, recent advances in digital techniques, and radiation biology and protection. It is not designed to replace the large number of textbooks available on many aspects of diagnostic radiology physics, but is expected to fill a gap in the teaching material for medical radiation physics in imaging, providing in a single manageable volume the broadest coverage of topics currently available. The handbook has been endorsed by several international professional bodies and will be of value to those preparing for their certification as medical physicists, radiologists and diagnostic radiographers. D.R. Dance S. Christofides A.D.A. Maidment I.D. McLean K.H. Ng Technical Editors International Atomic Energy Agency Vienna ISBN 978–92–0–131010–1 DIAGNOSTIC RADIOLOGY PHYSICS: A HANDBOOK FOR TEACHERS AND STUDENTS The following States are Members of the International Atomic Energy Agency: AFGHANISTAN GHANA OMAN ALBANIA GREECE PAKISTAN ALGERIA GUATEMALA -

Department of Radiology

Department of Radiology Our renowned Columbia Department of Radiology at New York-Presbyterian provides cutting-edge technology with a full spectrum of radiological services including diagnostic radiology and interventional radiology services. With state-of-the-art equipment, skilled technologists, and board-certified radiologists, our healthcare team provides patients with the highest quality of care in a safe and comfortable environment. Our Facilities in Upper Manhattan NewYork-Presbyterian/Columbia NewYork-Presbyterian Morgan NewYork-Presbyterian Allen Hospital University Irving Medical Center Stanley Children’s Hospital 5141 Broadway (220th Street) Avon Breast Clinic 3959 Broadway New York, NY 10034 1130 St. Nicholas Avenue New York, NY 10032 New York, NY 10032 Transportation: Transportation: Trains 1 & A to 207th Street Herbert Irving Pavilion Trains 1, A, & C to 168th Street Buses BX7, BX20, & Liberty Lines Express 161 Fort Washington Avenue Parking is available Parking is available New York, NY 10032 Milstein Hospital Building 177 Fort Washington Avenue New York, NY 10032 Transportation: Trains 1, A, & C to 168th Street Parking is available To schedule an appointment, please call 212-305-9335 A top 5 hospital in the nation and the #1 hospital in New York according to U.S. News & World Report NewYork-Presbyterian/ NewYork-Presbyterian NewYork-Presbyterian Columbia University Irving Morgan Stanley Children’s Allen Hospital Medical Center Hospital Computerized Monday – Friday Monday – Friday Monday – Friday Tomography (CT) 7:30am -

Interventional Fluoroscopy Reducing Radiation Risks for Patients and Staff

NATIONAL CANCER INSTITUTE THE SOCIETY OF INTERVENTIONAL RADIOLOGY Interventional Fluoroscopy Reducing Radiation Risks for Patients and Staff CONTENTS Introduction 1 Increasing use and complexity of interventional fluoroscopy 1 Determinants of radiation dose from interventional fluoroscopy 2 Radiation risks from interventional fluoroscopy 3 Strategies to optimize radiation exposure from interventional fluoroscopy 4 Physician-patient communication before and after interventional fluoroscopy 4 Dosimetry records and follow up 4 Education and training 5 Conclusion 5 Reference list 5
Introduction Interventional fluoroscopy uses ionizing radiation to guide small instruments such as catheters through blood vessels or other pathways in the body. Interventional fluoroscopy represents a tremendous advantage over invasive surgical procedures, because it requires only a very small incision, substantially reduces the risk of infection and allows for shorter recovery time compared to surgical procedures. These interventions are used by a rapidly expanding number of health care providers in a wide range of medical specialties. However, many of these specialists have little training in radiation science or protection measures. The growing use and increasing complexity of these procedures have been accompanied by public health concerns resulting from the increasing radiation exposure to both patients and health care personnel. The rise in reported serious skin injuries and the expected increase in late effects such as lens injuries and cataracts, and possibly cancer, make clear the need for information on radiation risks and on strategies to control radiation exposures to patients and health care providers. This guide discusses the value of these interventions, the associated radiation risk and the importance of optimizing radiation dose. Increasing use and complexity of interventional fluoroscopy In 2002, an estimated 657,000 percutaneous transluminal coronary angioplasty (PTCA) procedures were performed in adults in the United States. -

Emory Radiology CT and MRI Patient Preparation Instructions

Emory Radiology CT and MRI Patient Preparation Instructions • These are preparation instructions for adult patients (16+). • For pediatric patients under the age of 16, please call Children’s Healthcare of Atlanta at 404-785-6078 or after hours at 404-785-6117. • For maps and directions to Emory Radiology sites, please call 404-778-7777 or visit us online at www.emoryhealthcare.org/radiology. Pre-Registration: Patients may need to pre-register for radiology exams or procedures. Please call the appropriate Fast-Track Admissions number below prior to your appointment date: Emory University Hospital (EUH): 404-686-5270 or 1-800-640-9293 Hours of Operation: Monday – Friday, 8 a.m. to 7 p.m. Closed weekends and holidays. Emory University Hospital Midtown (EUHM): 404-251-3800 Hours of Operation: Monday – Friday, 9 a.m. to 8:30 p.m. Closed weekends and holidays. Radiology Exams/Procedures: • Please arrive 30 minutes in advance of your scheduled appointment to complete any paperwork and prepare you for your exam. • For most radiology procedures, you will be asked to change into a hospital gown. • If you need to take medications, please take them with a small amount of water unless you have been instructed to withhold your medications. If you are uncertain, please call the appropriate number listed below. • If you are PREGNANT or there is a possibility that you are pregnant, please notify your physician. • Information for common procedures: – For general patient preparation information, please call 404-778-XRAY (9729). – For biopsies and special procedures information, please call 404-712-0566.