Numerous Proofs of ζ(2) = π²/6

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

The Lerch Zeta Function and Related Functions

The Lerch Zeta Function and Related Functions. Jeff Lagarias, University of Michigan, Ann Arbor, MI, USA (September 20, 2013). Conference on Stark’s Conjecture and Related Topics (UCSD, Sept. 20-22, 2013; UCSD Number Theory Group, organizers).
Credits (joint project with W.-C. Winnie Li):
• J. C. Lagarias and W.-C. Winnie Li, The Lerch Zeta Function I. Zeta Integrals, Forum Math, 24 (2012), 1–48.
• J. C. Lagarias and W.-C. Winnie Li, The Lerch Zeta Function II. Analytic Continuation, Forum Math, 24 (2012), 49–84.
• J. C. Lagarias and W.-C. Winnie Li, The Lerch Zeta Function III. Polylogarithms and Special Values, preprint.
• J. C. Lagarias and W.-C. Winnie Li, The Lerch Zeta Function IV. Two-variable Hecke operators, in preparation.
Work of J. C. Lagarias is partially supported by NSF grants DMS-0801029 and DMS-1101373.
Topics Covered: Part I. History: Lerch Zeta and Lerch Transcendent; Part II. Basic Properties; Part III. Multi-valued Analytic Continuation; Part IV. Consequences; Part V. Lerch Transcendent; Part VI. Two variable Hecke operators.
Part I. Lerch Zeta Function: History.
• The Lerch zeta function is: $\zeta(s, a, c) := \sum_{n=0}^{\infty} \frac{e^{2\pi i n a}}{(n + c)^s}$
• The Lerch transcendent is: $\Phi(s, z, c) = \sum_{n=0}^{\infty} \frac{z^n}{(n + c)^s}$
Thus $\zeta(s, a, c) = \Phi(s, e^{2\pi i a}, c)$.
Special Cases-1.
• Hurwitz zeta function (1882): $\zeta(s, 0, c) = \zeta(s, c) := \sum_{n=0}^{\infty} \frac{1}{(n + c)^s}$.
• Periodic zeta function (Apostol (1951)): $e^{2\pi i a}\,\zeta(s, a, 1) = F(a, s) := \sum_{n=1}^{\infty} \frac{e^{2\pi i n a}}{n^s}$.
Special Cases-2.
• Fractional polylogarithm: $z\,\Phi(s, z, 1) = \mathrm{Li}_s(z) = \sum_{n=1}^{\infty} \frac{z^n}{n^s}$
• Riemann zeta function: $\zeta(s, 0, 1) = \zeta(s) = \sum_{n=1}^{\infty} \frac{1}{n^s}$
History-1.
• Lipschitz (1857) studies general Euler integrals including the Lerch zeta function.
• Hurwitz (1882) studied Hurwitz zeta function. -

A Short and Simple Proof of the Riemann's Hypothesis

A Short and Simple Proof of the Riemann’s Hypothesis. Charaf Ech-Chatbi. To cite this version: Charaf Ech-Chatbi. A Short and Simple Proof of the Riemann’s Hypothesis. 2021. hal-03091429v10. HAL Id: hal-03091429, https://hal.archives-ouvertes.fr/hal-03091429v10. Preprint submitted on 5 Mar 2021. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.
Charaf ECH-CHATBI∗, Sunday 21 February 2021.
Abstract: We present a short and simple proof of the Riemann’s Hypothesis (RH) where only undergraduate mathematics is needed. Keywords: Riemann Hypothesis; Zeta function; Prime Numbers; Millennium Problems. MSC2020 Classification: 11Mxx, 11-XX, 26-XX, 30-xx.
1 The Riemann Hypothesis
1.1 The importance of the Riemann Hypothesis. The prime number theorem gives us the average distribution of the primes. The Riemann hypothesis tells us about the deviation from the average. Formulated in Riemann’s 1859 paper [1], it asserts that all the ’non-trivial’ zeros of the zeta function are complex numbers with real part 1/2.
1.2 Riemann Zeta Function. For a complex number $s$ where $\Re(s) > 1$, the Zeta function is defined as the sum of the following series: $\zeta(s) = \sum_{n=1}^{+\infty} \frac{1}{n^s}$ (1). In his 1859 paper [1], Riemann went further and extended the zeta function $\zeta(s)$, by analytical continuation, to an absolutely convergent function in the half plane $\Re(s) > 0$, minus a simple pole at $s = 1$: $\zeta(s) = \frac{s}{s - 1} - s \int_{1}^{+\infty} \frac{\{x\}}{x^{s+1}}\,dx$ (2).
∗One Raffles Quay, North Tower Level 35. -

Finding Pi Project

Name: ________________________
Finding Pi - Activity
Objective: You may already know that pi (π) is a number that is approximately equal to 3.14. But do you know where the number comes from? Let's measure some round objects and find out.
Materials:
• 6 circular objects. Some examples include a bicycle wheel, kiddie pool, trash can lid, DVD, steering wheel, or clock face. Be sure each object you choose is shaped like a perfect circle.
• metric tape measure. Be sure your tape measure has centimeters on it.
• calculator. It will save you some time because dividing with decimals can be tricky.
• “Finding Pi - Table” worksheet. It may be attached to this page, or on the back.
What to do:
Step 1: Choose one of your circular objects. Write the name of the object on the “Finding Pi” table.
Step 2: With the centimeter side of your tape measure, accurately measure the distance around the outside of the circle (the circumference). Record your measurement on the table.
Step 3: Next, measure the distance across the middle of the object (the diameter). Record your measurement on the table.
Step 4: Use your calculator to divide the circumference by the diameter. Write the answer on the table. If you measured carefully, the answer should be about 3.14, or π.
Repeat steps 1 through 4 for each object.
Super Teacher Worksheets - www.superteacherworksheets.com
“Finding Pi” Table
Measure circular objects and complete the table below. If your measurements are accurate, you should be able to calculate the number pi (3.14).
Table columns: name of circular object | circumference measurement (cm) | diameter measurement (cm) | circumference ÷ diameter | Is your answer approximately equal to π?
1. -
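The division in Step 4 can also be done in a few lines of code. The following is a minimal Python sketch; the object names, the measurements, and the 0.05 tolerance are made-up illustrative values, not data from the worksheet.

```python
import math

# Hypothetical measurements in centimeters (illustrative values only):
# (object name, circumference, diameter)
measurements = [
    ("DVD", 37.7, 12.0),
    ("trash can lid", 141.4, 45.0),
    ("clock face", 78.5, 25.0),
]


def estimate_pi(circumference_cm, diameter_cm):
    """Return circumference divided by diameter (Step 4 of the activity)."""
    return circumference_cm / diameter_cm


for name, circumference, diameter in measurements:
    ratio = estimate_pi(circumference, diameter)
    # "Approximately equal" is taken to mean within 0.05 of pi,
    # an arbitrary tolerance chosen for this sketch.
    close = abs(ratio - math.pi) < 0.05
    print(f"{name}: {ratio:.2f} (close to pi: {close})")
```

With careful measurements every ratio should land near 3.14, whatever the size of the object.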

Infinite Product of a Number

International Journal of Pure and Applied Mathematical Sciences. ISSN 0972-9828, Volume 10, Number 2 (2017), pp. 107-110. © Research India Publications, http://www.ripublication.com. Infinite Product of a Number. Rushal Sohal, Student, The Lexicon International School, Wagholi, Pune, India.
Abstract: It seems that multiplying a positive number infinite times would give something extremely huge (infinity), but is it so? In this paper, we will show that any positive integer (>1) raised to the power infinity (i.e. the infinite product $\prod_{i=1}^{\infty} n = n \times n \times n \cdots = n^{\infty}$, $n > 1$) is not infinity; we will use simple mathematical equations and also use some known sums to prove that the infinite product of a positive integer (>1) is not equal to infinity, i.e. $n^{\infty} \neq \infty$. Keywords: positive integer ($I^{+}$); infinity ($\infty$); Riemann zeta function $\zeta(s)$.
1. INTRODUCTION. So, what is the infinite product of a positive integer (>1), i.e. what is $n^{\infty}$? Is it $\infty$ or something else? Till now, not much research has been done on this topic, and by convention we assume $n^{\infty}$ to be equal to $\infty$. In this paper, we will make simple equations and also use some known sums and prove that $n^{\infty} = 0$ and that it is not equal to $\infty$. We are going to approach the problem by observing patterns, assigning and re-assigning variables, and finally solving the equations. But first, we need to prove that $n^{\infty} \neq \infty$.
2. METHODS. The methods for solving the problem are simple but a bit counter-intuitive. In this paper, we will make simple mathematical equations and firstly prove that $n^{\infty} \neq \infty$. -

Regularized Integral Representations of the Reciprocal Gamma Function

Preprints (www.preprints.org) | NOT PEER-REVIEWED | Posted: 25 December 2018 doi:10.20944/preprints201812.0310.v1. Peer-reviewed version available at Fractal Fract 2019, 3, 1; doi:10.3390/fractalfract3010001. Article. Regularized Integral Representations of the Reciprocal Γ Function. Dimiter Prodanov. Correspondence: Environment, Health and Safety, IMEC vzw, Kapeldreef 75, 3001 Leuven, Belgium; [email protected]; [email protected]. Version December 25, 2018 submitted to Preprints.
Abstract: This paper establishes a real integral representation of the reciprocal Γ function in terms of a regularized hypersingular integral. The equivalence with the usual complex representation is demonstrated. A regularized complex representation along the usual Hankel path is derived. Keywords: gamma function; reciprocal gamma function; integral equation. MSC: 33B15; 65D20, 30D10.
1. Introduction. Applications of the Gamma function are ubiquitous in fractional calculus and the special function theory. It has numerous interesting properties summarized in [1]. It is indispensable in the theory of Laplace transforms. The history of the Gamma function is surveyed in [2]. In a previous note I have investigated an approach to regularize derivatives at points where the ordinary limit diverges [5]. This paper exploits the same approach for the purposes of numerical computation of singular integrals, such as the Euler Γ integrals for negative arguments. The paper also builds on an observation in [4]. The present paper proves a real singular integral representation of the reciprocal Γ function. The algorithm is implemented in the Computer Algebra System Maxima for reference and demonstration purposes. -

The Riemann and Hurwitz Zeta Functions, Apery's Constant and New

The Riemann and Hurwitz zeta functions, Apery’s constant and new rational series representations involving ζ(2k). Cezar Lupu, Department of Mathematics, University of Pittsburgh, Pittsburgh, PA, USA. Algebra, Combinatorics and Geometry Graduate Student Research Seminar, February 2, 2017, Pittsburgh, PA.
A quick overview of the Riemann zeta function. The Riemann zeta function is defined by $\zeta(s) = \sum_{n=1}^{\infty} \frac{1}{n^s}$, $\operatorname{Re} s > 1$. Originally, the Riemann zeta function was defined for real arguments. Also, Euler found another formula which relates the Riemann zeta function to prime numbers, namely $\zeta(s) = \prod_{p} \frac{1}{1 - \frac{1}{p^s}}$, where $p$ runs through all primes $p = 2, 3, 5, \ldots$.
Moreover, Riemann proved that $\zeta(s)$ satisfies the following integral representation formula: $\zeta(s) = \frac{1}{\Gamma(s)} \int_{0}^{\infty} \frac{u^{s-1}}{e^{u} - 1}\,du$, $\operatorname{Re} s > 1$, where $\Gamma(s) = \int_{0}^{\infty} t^{s-1} e^{-t}\,dt$, $\operatorname{Re} s > 0$, is the Euler gamma function. Also, another important fact is that one can extend $\zeta(s)$ from $\operatorname{Re} s > 1$ to $\operatorname{Re} s > 0$. By an easy computation one has $(1 - 2^{1-s})\,\zeta(s) = \sum_{n=1}^{\infty} (-1)^{n-1} \frac{1}{n^s}$, and therefore we have $\zeta(s) = \frac{1}{1 - 2^{1-s}} \sum_{n=1}^{\infty} (-1)^{n-1} \frac{1}{n^s}$, $\operatorname{Re} s > 0$, $s \neq 1$.
It is well-known that ζ is analytic and it has an analytic continuation at s = 1. At s = 1 it has a simple pole with residue 1. -
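The alternating-series formula above lends itself to a quick numerical check. The sketch below is a Python illustration, not code from the slides: the function name and the number of terms are assumptions, and it compares the result at s = 2 with the value π²/6 named in this page's title.

```python
import math


def zeta_via_alternating_series(s, terms=100_000):
    """Approximate zeta(s) for real s > 0, s != 1, using
    zeta(s) = (1 / (1 - 2^(1-s))) * sum_{n>=1} (-1)^(n-1) / n^s."""
    alternating_sum = 0.0
    for n in range(1, terms + 1):
        alternating_sum += (-1) ** (n - 1) / n ** s
    return alternating_sum / (1.0 - 2.0 ** (1.0 - s))


approx = zeta_via_alternating_series(2.0)
exact = math.pi ** 2 / 6
print(approx, exact, abs(approx - exact))
```

Because the series alternates with decreasing terms, the truncation error is bounded by the first omitted term, so at s = 2 the two values agree to many decimal places. Convergence is much slower for s close to 0.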

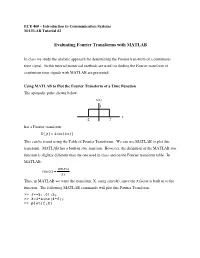

Evaluating Fourier Transforms with MATLAB

ECE 460 – Introduction to Communication Systems. MATLAB Tutorial #2: Evaluating Fourier Transforms with MATLAB. In class we study the analytic approach for determining the Fourier transform of a continuous time signal. In this tutorial, numerical methods for finding the Fourier transform of continuous time signals with MATLAB are presented.
Using MATLAB to Plot the Fourier Transform of a Time Function. The aperiodic pulse shown below (figure: rectangular pulse x(t) of height 1 between t = −2 and t = 2) has a Fourier transform: $X(jf) = 4\,\mathrm{sinc}(4\pi f)$. This can be found using the Table of Fourier Transforms. We can use MATLAB to plot this transform. MATLAB has a built-in sinc function. However, the definition of the MATLAB sinc function is slightly different from the one used in class and on the Fourier transform table. In MATLAB: $\mathrm{sinc}(x) = \frac{\sin(\pi x)}{\pi x}$. Thus, in MATLAB we write the transform, X, using sinc(4f), since the π factor is built in to the function. The following MATLAB commands will plot this Fourier Transform:
>> f=-5:.01:5;
>> X=4*sinc(4*f);
>> plot(f,X)
In this case, the Fourier transform is a purely real function. Thus, we can plot it as shown above. In general, Fourier transforms are complex functions and we need to plot the amplitude and phase spectrum separately. This can be done using the following commands:
>> plot(f,abs(X))
>> plot(f,angle(X))
Note that the angle is either zero or π. This reflects the positive and negative values of the transform function.
Performing the Fourier Integral Numerically. For the pulse presented above, the Fourier transform can be found easily using the table. -
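The closed form 4 sinc(4f), with the normalized sinc, can be cross-checked by evaluating the Fourier integral of the pulse directly. The sketch below uses Python rather than MATLAB so it runs with the standard library alone. It assumes the transform convention X(f) = ∫ x(t) e^(−j2πft) dt, which the excerpt does not state, and the function names and step count are illustrative choices.

```python
import cmath
import math


def sinc(x):
    """Normalized sinc, sin(pi x) / (pi x), the convention MATLAB's sinc uses."""
    if x == 0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)


def pulse_transform_numeric(f, half_width=2.0, steps=4000):
    """Midpoint-rule approximation of the Fourier integral of a unit-height
    pulse on [-half_width, half_width] (assumed convention: exp(-j 2 pi f t))."""
    dt = 2.0 * half_width / steps
    total = 0j
    for k in range(steps):
        t = -half_width + (k + 0.5) * dt
        total += cmath.exp(-2j * math.pi * f * t) * dt
    return total


for f in (0.0, 0.1, 0.3, 1.3):
    closed_form = 4.0 * sinc(4.0 * f)
    numeric = pulse_transform_numeric(f)
    # The real parts should agree closely; the imaginary part should be near zero
    # because the pulse is even in t.
    print(f, closed_form, numeric.real, abs(numeric.imag))
```

The near-zero imaginary part matches the tutorial's remark that this particular transform is purely real.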

Values of the Riemann Zeta Function at Integers

MATerials MATemàtics, Volume 2009, paper no. 6, 26 pp. ISSN: 1887-1097. Electronic outreach publication of the Departament de Matemàtiques de la Universitat Autònoma de Barcelona, www.mat.uab.cat/matmat. Values of the Riemann zeta function at integers. Roman J. Dwilewicz, Ján Mináč.
1 Introduction. The Riemann zeta function is one of the most important and fascinating functions in mathematics. It is very natural as it deals with the series of powers of natural numbers: $\sum_{n=1}^{\infty} \frac{1}{n^2}$, $\sum_{n=1}^{\infty} \frac{1}{n^3}$, $\sum_{n=1}^{\infty} \frac{1}{n^4}$, etc. (1). Originally the function was defined for real arguments as $\zeta(x) = \sum_{n=1}^{\infty} \frac{1}{n^x}$ for $x > 1$ (2). It connects by a continuous parameter all series from (1). In 1734 Leonhard Euler (1707 - 1783) found something amazing; namely he determined all values $\zeta(2), \zeta(4), \zeta(6), \ldots$, a truly remarkable discovery. He also found a beautiful relationship between prime numbers and $\zeta(x)$ whose significance for current mathematics cannot be overestimated. It was Bernhard Riemann (1826 - 1866), however, who recognized the importance of viewing $\zeta(s)$ as a function of a complex variable $s = x + iy$ rather than a real variable $x$. Moreover, in 1859 Riemann gave a formula for a unique (the so-called holomorphic) extension of the function onto the entire complex plane $\mathbb{C}$ except $s = 1$. However, the formula (2) cannot be applied anymore if the real part of $s$, $\operatorname{Re} s = x$, is $\leq 1$. -

MATLAB Examples Mathematics

MATLAB Examples: Mathematics. Hans-Petter Halvorsen, M.Sc.
Mathematics with MATLAB
• MATLAB is a powerful tool for mathematical calculations.
• Type “help elfun” (elementary math functions) in the Command window for more information about basic mathematical functions.
Mathematics Topics
• Basic Math Functions and Expressions: $z = 3x^2 + \sqrt{x^2 + y^2} + e^{\ln(x)}$
• Statistics – mean, median, standard deviation, minimum, maximum and variance
• Trigonometric Functions sin(), cos(), tan()
• Complex Numbers $z = a + jb$
• Polynomials $p(x) = p_1 x^n + p_2 x^{n-1} + \cdots + p_n x + p_{n+1}$
Basic Math Functions. Create a function that calculates the following mathematical expression: $z = 3x^2 + \sqrt{x^2 + y^2} + e^{\ln(x)}$. We will test with different values for $x$ and $y$. We create the function:
function z=calcexpression(x,y)
z=3*x^2 + sqrt(x^2+y^2)+exp(log(x));
Testing the function gives:
>> x=2;
>> y=2;
>> calcexpression(x,y)
ans = 16.8284
Statistics Functions
• MATLAB has lots of built-in functions for Statistics
• Create a vector with random numbers between 0 and 100. Find the following statistics: mean, median, standard deviation, minimum, maximum and the variance.
>> x=rand(100,1)*100;
>> mean(x)
>> median(x)
>> std(x)
>> min(x)
>> max(x)
>> var(x)
Trigonometric functions: sin(x), cos(x), tan(x). It is quite easy to convert from radians to degrees or from degrees to radians. We have that: $2\pi$ radians $= 360$ degrees. This gives: $d[\text{degrees}] = r[\text{radians}] \cdot \frac{180}{\pi}$ and $r[\text{radians}] = d[\text{degrees}] \cdot \frac{\pi}{180}$. Create two functions that convert from radians to degrees (r2d(x)) and from degrees to radians (d2r(x)) respectively. Test the functions to make sure that they work as expected.
The functions are as follows:
function d = r2d(r)
d=r*180/pi;
function r = d2r(d)
r=d*pi/180;
Testing the functions:
>> r2d(2*pi)
ans = 360
>> d2r(180)
ans = 3.1416
Given right triangle:
• Create a function that finds the angle A (in degrees) based on input arguments (a,c), (b,c) and (a,b) respectively. -
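For readers without MATLAB, the three functions from this excerpt can be mirrored in Python. This is a sketch of a port, not part of the original slides; it keeps the MATLAB function names and relies only on the standard `math` module.

```python
import math


def calcexpression(x, y):
    """z = 3x^2 + sqrt(x^2 + y^2) + exp(log(x)); requires x > 0 because of log."""
    return 3 * x ** 2 + math.sqrt(x ** 2 + y ** 2) + math.exp(math.log(x))


def r2d(r):
    """Convert radians to degrees."""
    return r * 180 / math.pi


def d2r(d):
    """Convert degrees to radians."""
    return d * math.pi / 180


print(round(calcexpression(2, 2), 4))  # same test values as the MATLAB session
print(r2d(2 * math.pi))
print(d2r(180))
```

Python's standard library also provides `math.degrees` and `math.radians`, which perform the same conversions as `r2d` and `d2r`.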

Functions of a Complex Variable II Math 562, Spring 2020

Functions of a Complex Variable II, Math 562, Spring 2020. Jens Lorenz, April 27, 2020. Department of Mathematics and Statistics, UNM, Albuquerque, NM 87131
Contents
1 Notations
2 The Schwarz Lemma and Aut(D)
2.1 Schwarz Lemma
2.2 Biholomorphic Maps and Automorphisms
2.3 The Automorphism Group of D
2.4 The Schwarz–Pick Lemma
2.5 Remarks
3 Linear Fractional Transformations and the Riemann Sphere
3.1 The Riemann Sphere
3.2 Linear Fractional Transformations
3.3 The Automorphisms of the Riemann Sphere
3.4 A Remarkable Geometric Property of Linear Fractional Transformations
3.4.1 Analytical Description of Circles
3.4.2 Circles under Reciprocation
3.4.3 Straight Lines under Reciprocation
3.5 Example: The Cayley Transform in the Complex Plane
3.6 The Cayley Transform of a Matrix
4 The Riemann Mapping Theorem
4.1 Statement and Overview of Proof
4.2 The Theorem of Arzelà–Ascoli
4.3 Montel's Theorem
4.4 Auxiliary Results on Logarithms and Square Roots
4.5 Construction of a Map in $\mathcal{F}$
4.6 A Bound of $|f'(P)|$ for all $f \in \mathcal{F}$
4.7 Application of the Theorems of Montel and Hurwitz
4.8 Proof That f is Onto
4.9 Examples of Biholomorphic Mappings
5 An Introduction to Schwarz–Christoffel Formulas
5.1 General Power Functions
5.2 An Example of a Schwarz–Christoffel Map . -

Special Values of Riemann's Zeta Function

Special values of Riemann's zeta function. Cameron Franc, UC Santa Cruz, March 6, 2013. Outline: the divergence of ζ(1); the identity ζ(2) = π²/6; the identity ζ(−1) = −1/12.
Riemann's zeta function. If $s > 1$ is a real number, then the series $\zeta(s) = \sum_{n \geq 1} \frac{1}{n^s}$ converges. Proof: Compare the partial sum to an integral, $\sum_{n=1}^{N} \frac{1}{n^s} \leq 1 + \int_{1}^{N} \frac{dx}{x^s} = 1 + \frac{1}{s - 1}\left(1 - \frac{1}{N^{s-1}}\right) \leq 1 + \frac{1}{s - 1}$.
The resulting function ζ(s) is called Riemann's zeta function. It was studied in depth by Euler and others before Riemann. ζ(s) is named after Riemann for two reasons:
1 He was the first to consider allowing the s in ζ(s) to be a complex number ≠ 1.
2 His deep 1859 paper “Ueber die Anzahl der Primzahlen unter einer gegebenen Grösse” (“On the number of primes less than a given quantity”) made remarkable connections between ζ(s) and prime numbers.
In this talk we will discuss certain special values of ζ(s) for integer values of s. -
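The integral comparison in the proof above can be checked numerically for a few sample exponents. The Python sketch below is only an illustration: the function names and the chosen values of s and N are assumptions, and a finite check of this kind is no substitute for the proof.

```python
def partial_sum(s, n_terms):
    """Partial sum of 1/n^s for n = 1..n_terms."""
    return sum(1.0 / n ** s for n in range(1, n_terms + 1))


def integral_bound(s, n_terms):
    """Middle expression of the comparison: 1 + (1/(s-1)) * (1 - 1/N^(s-1))."""
    return 1.0 + (1.0 - n_terms ** (1.0 - s)) / (s - 1.0)


for s in (1.5, 2.0, 3.0):
    for n_terms in (10, 1000):
        lhs = partial_sum(s, n_terms)
        mid = integral_bound(s, n_terms)
        rhs = 1.0 + 1.0 / (s - 1.0)
        # Each line should report True: partial sum <= integral bound <= 1 + 1/(s-1).
        print(s, n_terms, lhs <= mid <= rhs)
```

Since the upper bound 1 + 1/(s − 1) does not depend on N, the increasing partial sums are bounded, which is exactly why the series converges for s > 1.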

36 Surprising Facts About Pi

36 Surprising Facts About Pi (piday.org/pi-facts). Pi is the most studied number in mathematics. And that is for a good reason. The number pi is an integral part of many incredible creations including the Pyramids of Giza. Yes, that’s right. Here are 36 facts you will love about pi.
1. The symbol for Pi has been in use for over 250 years. The symbol was introduced by William Jones, a Welsh mathematician, in 1706 and made popular by the mathematician Leonhard Euler.
2. Since the exact value of pi can never be calculated, we can never find the accurate area or circumference of a circle.
3. March 14 or 3/14 is celebrated as pi day because 3.14 are the first digits of pi. Many math nerds around the world love celebrating this infinitely long, never-ending number.
4. The record for reciting the most decimal places of Pi was achieved by Rajveer Meena at VIT University, Vellore, India on 21 March 2015. He was able to recite 70,000 decimal places. To maintain the sanctity of the record, Rajveer wore a blindfold throughout the duration of his recall, which took an astonishing 10 hours! Can’t believe it? Well, here is the evidence: https://twitter.com/GWR/status/973859428880535552
5. If you aren’t a math geek, you would be surprised to know that we can’t find the true value of pi. This is because it is an irrational number. But this makes it an interesting number as mathematicians can express π as sequences and algorithms.