Computing Over the Reals Solomon Feferman

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Journal Abbreviations

Abbreviations of Names of Serials. This list gives the form of references used in Mathematical Reviews (MR). The abbreviation is followed by the complete title, the place of publication and other pertinent information. Legend: ∗ not previously listed; § journal indexed cover-to-cover; † monographic series. Update date: July 1, 2016. 4OR: 4OR. A Quarterly Journal of Operations Research. Springer, Berlin. ISSN 1619-4500; † 29o Colóq. Bras. Mat.: 29o Colóquio Brasileiro de Matemática. [29th Brazilian Mathematics Colloquium] Inst. Nac. Mat. Pura Apl. (IMPA), Rio de Janeiro; † 30o Colóq. Bras. Mat.: 30o Colóquio Brasileiro de Matemática. [30th Brazilian Mathematics Colloquium] Inst. Nac. Mat. Pura Apl. (IMPA), Rio de Janeiro; † Aastaraam. Eesti Mat. Selts: Aastaraamat. Eesti Matemaatika Selts. [Annual. Estonian Mathematical Society] Eesti Mat. Selts, Tartu. ISSN 1406-4316; † Abel Symp.: Abel Symposia. Springer, Heidelberg. ISSN 2193-2808; § Acta Math. Hungar.: Acta Mathematica Hungarica. Akad. Kiadó, Budapest. ISSN 0236-5294; § Acta Math. Sci. Ser. A Chin. Ed.: Acta Mathematica Scientia. Series A. Shuxue Wuli Xuebao. Chinese Edition. Kexue Chubanshe (Science Press), Beijing. ISSN 1003-3998; § Acta Math. Sci. Ser. B Engl. Ed.: Acta Mathematica Scientia. Series B. English Edition. Sci. Press Beijing, Beijing. ISSN 0252-9602; § Acta Math. Sin. (Engl. Ser.): Acta Mathematica Sinica (English Series). Springer, Berlin. ISSN 1439-8516; § Acta Math. Sinica (Chin. Ser.): Acta Mathematica Sinica. Chinese Series. Chinese Math. Soc., Acta Math. Sinica Ed. Comm., Beijing. ISSN 0583-1431; † Abh. Akad. Wiss. Göttingen Neue Folge: Abhandlungen der Akademie der Wissenschaften zu Göttingen. Neue Folge. [Papers of the Academy of Sciences

Matematické časopisy vedené v databázi SCOPUS (Mathematics Journals Indexed in the SCOPUS Database)

Mathematics journals indexed in the SCOPUS database (as of 21 January 2009): Abhandlungen aus dem Mathematischen Seminar der Universitat Hamburg; Abstract and Applied Analysis; ACM Computing Surveys; ACM Transactions on Algorithms; ACM Transactions on Computational Logic; ACM Transactions on Computer Systems; ACM Transactions on Speech and Language Processing; Acta Applicandae Mathematicae; Acta Arithmetica; Acta Mathematica; Acta Mathematica Academiae Paedagogicae Nyiregyhaziensis; Acta Mathematica Hungarica; Acta Mathematica Scientia; Acta Mathematica Sinica, English Series; Acta Mathematica Universitatis Comenianae; Acta Mathematicae Applicatae Sinica; Acta Mathematicae Applicatae Sinica, English Series; Acta Mechanica Sinica; Acta Numerica; Advanced Nonlinear Studies; Advanced Studies in Contemporary Mathematics (Kyungshang); Advanced Studies in Theoretical Physics; Advances in Applied Clifford Algebras; Advances in Applied Mathematics; Advances in Applied Probability; Advances in Computational Mathematics; Advances in Difference Equations; Advances in Geometry; Advances in Mathematics; Advances in modelling and analysis. A, general mathematical and computer tools; Advances in Nonlinear Variational Inequalities; Advances in Theoretical and Mathematical Physics; Aequationes Mathematicae; Algebra and Logic; Algebra Colloquium; Algebra Universalis; Algebras and Representation Theory; Algorithms for Molecular Biology; American Journal of Mathematical and Management Sciences; American Journal of Mathematics; American Mathematical Monthly; Analysis in Theory and Applications; Analysis Mathematica

L. Maligranda REVIEW of the BOOK by MARIUSZ URBANEK

Математичнi Студiї. Т.50, №1. Matematychni Studii. V.50, No.1. УДК 51. L. Maligranda, Review of the book by Mariusz Urbanek, “Genialni – Lwowska Szkoła Matematyczna” (Polish) [Geniuses – the Lvov school of mathematics], Wydawnictwo Iskry, Warsaw 2014, 283 pp. ISBN: 978-83-244-0381-3, Mat. Stud. 50 (2018), 105–112. This review is an extended version of my short review of Urbanek's book that was published in MathSciNet. Here I discuss the book in greater detail than was possible in the short review. I will present facts described in the book as well as some false information it contains. My short review of Urbanek’s book was published in MathSciNet [24]. Here I write about his book in greater detail. Mariusz Urbanek, writer and journalist, author of many books devoted to poets, politicians and other figures of public life, decided to delve into the world of mathematicians as well. He has written a book on the phenomenon in the history of Polish science called the Lvov School of Mathematics. Let us add that at the same time there were also the Warsaw School of Mathematics and the Krakow School of Mathematics, and the three together formed the Polish School of Mathematics. The Lvov School of Mathematics was a group of mathematicians in the Polish city of Lvov (Lwów, in Polish; now the city is in Ukraine) in the period 1920–1945 under the leadership of Stefan Banach and Hugo Steinhaus, who worked together and often came to the Scottish Café (Kawiarnia Szkocka) to discuss mathematical problems.

New Publications Offered by the AMS

New Publications Offered by the AMS. To subscribe to email notification of new AMS publications, please go to http://www.ams.org/bookstore-email.

Algebra and Algebraic Geometry

New Developments in Lie Theory and Its Applications. Carina Boyallian, Esther Galina, and Linda Saal, Universidad Nacional de Córdoba, Argentina, Editors. This volume contains the proceedings of the Seventh Workshop in Lie Theory and Its Applications, which was held November 27–December 1, 2009 at the Universidad Nacional de Córdoba, in Córdoba, Argentina. The workshop was preceded by a special event, “Encuentro de teoria de Lie”, held November 23–26, 2009, in honor of the sixtieth birthday of Jorge A. Vargas, who greatly contributed to the development of Lie theory in Córdoba. This volume focuses on representation theory, harmonic analysis in Lie groups, and mathematical physics related to Lie theory. Contemporary Mathematics, Volume 544. June 2011, 159 pages, Softcover, ISBN: 978-0-8218-5259-0, LC 2011007612, 2010 Mathematics Subject Classification: 17A70, 16T05, 05C12, 43A90, 43A35, 43A75, 22E27, AMS members US$47.20, List US$59, Order code CONM/544.

On Systems of Equations over Free Partially Commutative Groups. Montserrat Casals-Ruiz and Ilya Kazachkov, McGill University, Montreal, QC, Canada. Contents: Introduction; Preliminaries; Reducing systems of equations over G to constrained generalised equations over F; The process: Construction of the tree T; Minimal solutions; Periodic structures; The finite tree T0(Ω) and minimal solutions; From the coordinate group GR(Ω∗) to proper quotients: The decomposition tree Tdec and the extension tree Text; The solution tree Tsol(Ω) and the main theorem; Bibliography; Index; Glossary of notation.

L. Maligranda REVIEW of the BOOK by ROMAN

Математичнi Студiї. Т.46, №2. Matematychni Studii. V.46, No.2. УДК 51. L. Maligranda, Review of the book by Roman Duda, “Pearls from a lost city. The Lvov school of mathematics”, Mat. Stud. 46 (2016), 203–216. This review is an extended version of my two short reviews of Duda's book that were published in MathSciNet and Mathematical Intelligencer. Here I discuss the Lvov School of Mathematics in greater detail than I could in the short reviews. I cover facts described in the book as well as some information the book lacks, for instance, the planned publication in Mathematical Monographs of the second volume of Banach's book and of books by Mazur, Schauder and Tarski. My two short reviews of Duda’s book were published in MathSciNet [16] and Mathematical Intelligencer [17]. Here I write about the Lvov School of Mathematics in greater detail, which was not possible in the short reviews. I will present the facts described in the book as well as some information the book lacks, for instance, the planned publication in Mathematical Monographs of the second volume of Banach’s book and of books by Mazur, Schauder and Tarski. So let us start with a discussion of Duda’s book. In 1795 Poland was partitioned among Austria, Russia and Prussia (Germany was not yet unified), and at the end of 1918 Poland became an independent country.

Abbreviations of Names of Serials

Abbreviations of Names of Serials. This list gives the form of references used in Mathematical Reviews (MR). The abbreviation is followed by the complete title, the place of publication and other pertinent information. Legend: ∗ not previously listed; § journal reviewed cover-to-cover; † monographic series; E available electronically; V videocassette series; ¶ bibliographic journal. E 4OR: 4OR. Quarterly Journal of the Belgian, French and Italian Operations Research Societies. Springer, Berlin. ISSN 1619-4500; † 19o Colóq. Bras. Mat.: 19o Colóquio Brasileiro de Matemática. [19th Brazilian Mathematics Colloquium] Inst. Mat. Pura Apl. (IMPA), Rio de Janeiro; † 24o Colóq. Bras. Mat.: 24o Colóquio Brasileiro de Matemática. [24th Brazilian Mathematics Colloquium] Inst. Mat. Pura Apl. (IMPA), Rio de Janeiro; † 25o Colóq. Bras. Mat.: 25o Colóquio Brasileiro de Matemática. [25th Brazilian Mathematics Colloquium] Inst. Nac. Mat. Pura Apl. (IMPA), Rio de Janeiro; † Aastaraam. Eesti Mat. Selts: Aastaraamat. Eesti Matemaatika Selts. [Annual. Estonian Mathematical Society] Eesti Mat. Selts, Tartu. ISSN 1406-4316; Abh. Braunschw. Wiss. Ges.: Abhandlungen der Braunschweigischen Wissenschaftlichen; § Acta Math. Sci. Ser. A Chin. Ed.: Acta Mathematica Scientia. Series A. Shuxue Wuli Xuebao. Chinese Edition. Kexue Chubanshe (Science Press), Beijing. (See also Acta Math. Sci. Ser. B Engl. Ed.) ISSN 1003-3998; § Acta Math. Sci. Ser. B Engl. Ed.: Acta Mathematica Scientia. Series B. English Edition. Science Press, Beijing. (See also Acta Math. Sci. Ser. A Chin. Ed.) ISSN 0252-9602; § E Acta Math. Sin. (Engl. Ser.): Acta Mathematica Sinica (English Series). Springer, Heidelberg. ISSN 1439-8516; § E Acta Math. Sinica (Chin. Ser.): Acta Mathematica Sinica. Chinese Series. Chinese Math. Soc., Acta Math.

Polish Mathematicians and Mathematics in World War I. Part I: Galicia (Austro-Hungarian Empire)

Science in Poland. Stanisław Domoradzki, ORCID 0000-0002-6511-0812, Faculty of Mathematics and Natural Sciences, University of Rzeszów (Rzeszów, Poland). Małgorzata Stawiska, ORCID 0000-0001-5704-7270, Mathematical Reviews (Ann Arbor, USA). Polish mathematicians and mathematics in World War I. Part I: Galicia (Austro-Hungarian Empire). Abstract: In this article we present diverse experiences of Polish mathematicians (in a broad sense) who during World War I fought for freedom of their homeland or conducted their research and teaching in difficult wartime circumstances. We discuss not only individual fates, but also organizational efforts of many kinds (teaching at the academic level outside traditional institutions, Polish scientific societies, publishing activities) in order to illustrate the formation of the modern Polish mathematical community. Publication info: e-ISSN 2543-702X; ISSN 2451-3202; Diamond Open Access. Citation: Domoradzki, Stanisław; Stawiska, Małgorzata 2018: Polish mathematicians and mathematics in World War I. Part I: Galicia (Austro-Hungarian Empire). Studia Historiae Scientiarum 17, pp. 23–49. Available online: https://doi.org/10.4467/2543702XSHS.18.003.9323. Received: 2.02.2018; accepted: 22.10.2018; published online: 12.12.2018. Archive policy: Green SHERPA/RoMEO Colour. WWW: http://www.ejournals.eu/sj/index.php/SHS/; http://pau.krakow.pl/Studia-Historiae-Scientiarum/. In Part I we focus on mathematicians affiliated with the existing Polish institutions of higher education: the universities in Lwów and Kraków and the Polytechnical School in Lwów, within the Austro-Hungarian Empire.

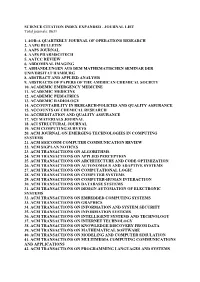

SCIENCE CITATION INDEX EXPANDED - JOURNAL LIST Total Journals: 8631

SCIENCE CITATION INDEX EXPANDED - JOURNAL LIST Total journals: 8631 1. 4OR-A QUARTERLY JOURNAL OF OPERATIONS RESEARCH 2. AAPG BULLETIN 3. AAPS JOURNAL 4. AAPS PHARMSCITECH 5. AATCC REVIEW 6. ABDOMINAL IMAGING 7. ABHANDLUNGEN AUS DEM MATHEMATISCHEN SEMINAR DER UNIVERSITAT HAMBURG 8. ABSTRACT AND APPLIED ANALYSIS 9. ABSTRACTS OF PAPERS OF THE AMERICAN CHEMICAL SOCIETY 10. ACADEMIC EMERGENCY MEDICINE 11. ACADEMIC MEDICINE 12. ACADEMIC PEDIATRICS 13. ACADEMIC RADIOLOGY 14. ACCOUNTABILITY IN RESEARCH-POLICIES AND QUALITY ASSURANCE 15. ACCOUNTS OF CHEMICAL RESEARCH 16. ACCREDITATION AND QUALITY ASSURANCE 17. ACI MATERIALS JOURNAL 18. ACI STRUCTURAL JOURNAL 19. ACM COMPUTING SURVEYS 20. ACM JOURNAL ON EMERGING TECHNOLOGIES IN COMPUTING SYSTEMS 21. ACM SIGCOMM COMPUTER COMMUNICATION REVIEW 22. ACM SIGPLAN NOTICES 23. ACM TRANSACTIONS ON ALGORITHMS 24. ACM TRANSACTIONS ON APPLIED PERCEPTION 25. ACM TRANSACTIONS ON ARCHITECTURE AND CODE OPTIMIZATION 26. ACM TRANSACTIONS ON AUTONOMOUS AND ADAPTIVE SYSTEMS 27. ACM TRANSACTIONS ON COMPUTATIONAL LOGIC 28. ACM TRANSACTIONS ON COMPUTER SYSTEMS 29. ACM TRANSACTIONS ON COMPUTER-HUMAN INTERACTION 30. ACM TRANSACTIONS ON DATABASE SYSTEMS 31. ACM TRANSACTIONS ON DESIGN AUTOMATION OF ELECTRONIC SYSTEMS 32. ACM TRANSACTIONS ON EMBEDDED COMPUTING SYSTEMS 33. ACM TRANSACTIONS ON GRAPHICS 34. ACM TRANSACTIONS ON INFORMATION AND SYSTEM SECURITY 35. ACM TRANSACTIONS ON INFORMATION SYSTEMS 36. ACM TRANSACTIONS ON INTELLIGENT SYSTEMS AND TECHNOLOGY 37. ACM TRANSACTIONS ON INTERNET TECHNOLOGY 38. ACM TRANSACTIONS ON KNOWLEDGE DISCOVERY FROM DATA 39. ACM TRANSACTIONS ON MATHEMATICAL SOFTWARE 40. ACM TRANSACTIONS ON MODELING AND COMPUTER SIMULATION 41. ACM TRANSACTIONS ON MULTIMEDIA COMPUTING COMMUNICATIONS AND APPLICATIONS 42. ACM TRANSACTIONS ON PROGRAMMING LANGUAGES AND SYSTEMS 43. ACM TRANSACTIONS ON RECONFIGURABLE TECHNOLOGY AND SYSTEMS 44. -

Stefan Banach

Stefan Banach. Jeremy Noe. March 17, 2005. 1 Introduction. The years just before and after World War I were perhaps the most exciting and influential years in the history of science. In 1901, Henri Lebesgue formulated the theory of measure in his famous paper Sur une généralisation de l’intégrale définie, defining a generalization of the Riemann integral that had important ramifications in modern mathematics. Until that time, mathematical analysis had been limited to continuous functions based on the Riemann integration method. Alfred Whitehead and Bertrand Russell published the Principia Mathematica in an attempt to place all of mathematics on a logical footing, and to illustrate to some degree how the various branches of mathematics are intertwined. New developments in physics during this time were beyond notable. Albert Einstein formulated his theory of Special Relativity in 1905, and in 1915 published the theory of General Relativity. Between the relativity theories, Einstein had laid the groundwork for the wave-particle duality of light, for which he was later awarded the Nobel Prize. Quantum mechanics was formulated. Advances in the physical sciences and mathematics seemed to be coming at lightning speed. The whole western world was buzzing with the achievements being made. Along with the rest of Europe, the Polish intellectual community flourished during this time. Prior to World War I, not many Polish scholastics pursued research-related careers, focusing instead on education if they went on to the university at all. (Footnote 1: Some of this paragraph is paraphrased from Saint Andrews [11].)

List of Mathematics Impact Factor Journals Indexed in ISI Web of Science (JCR SCI, 2016)

List of Mathematics Impact Factor Journals Indexed in ISI Web of Science (JCR SCI, 2016). Compiled by: Arslan Sheikh, In Charge, Reference & Research Section, Library Information Services, COMSATS Institute of Information Technology, Park Road, Islamabad, Pakistan. Cell No +92-0321-9423071.

| Rank | Journal Title | ISSN | Impact Factor |
|---|---|---|---|
| 1 | ACTA NUMERICA | 0962-4929 | 6.250 |
| 2 | SIAM REVIEW | 0036-1445 | 4.897 |
| 3 | JOURNAL OF THE AMERICAN MATHEMATICAL SOCIETY | 0894-0347 | 4.692 |
| 4 | Nonlinear Analysis-Hybrid Systems | 1751-570X | 3.963 |
| 5 | ANNALS OF MATHEMATICS | 0003-486X | 3.921 |
| 6 | COMMUNICATIONS ON PURE AND APPLIED MATHEMATICS | 0010-3640 | 3.793 |
| 7 | INTERNATIONAL JOURNAL OF ROBUST AND NONLINEAR CONTROL | 1049-8923 | 3.393 |
| 8 | ACM TRANSACTIONS ON MATHEMATICAL SOFTWARE | 0098-3500 | 3.275 |
| 9 | Publications Mathematiques de l IHES | 0073-8301 | 3.182 |
| 10 | INVENTIONES MATHEMATICAE | 0020-9910 | 2.946 |
| 11 | MATHEMATICAL MODELS & METHODS IN APPLIED SCIENCES | 0218-2025 | 2.860 |
| 12 | Advances in Nonlinear Analysis | 2191-9496 | 2.844 |
| 13 | FOUNDATIONS OF COMPUTATIONAL MATHEMATICS | 1615-3375 | 2.829 |
| 14 | Communications in Nonlinear Science and Numerical Simulation | 1007-5704 | 2.784 |
| 15 | FUZZY SETS AND SYSTEMS | 0165-0114 | 2.718 |
| 16 | APPLIED AND COMPUTATIONAL HARMONIC ANALYSIS | 1063-5203 | 2.634 |
| 17 | SIAM Journal on Imaging Sciences | 1936-4954 | 2.485 |
| 18 | ANNALES DE L INSTITUT HENRI POINCARE-ANALYSE NON LINEAIRE | 0294-1449 | 2.450 |
| 19 | ACTA MATHEMATICA | 0001-5962 | 2.448 |
| 20 | MATHEMATICAL PROGRAMMING | 0025-5610 | 2.446 |
| 21 | ARCHIVE FOR RATIONAL MECHANICS AND ANALYSIS | 0003-9527 | 2.392 |
| 22 | CHAOS | 1054-1500 | 2.283 |
| 23 | APPLIED MATHEMATICS LETTERS | 0893-9659 | |

On the Contributions of Helena Rasiowa to Mathematical Logic

UNIVERSITAT DE BARCELONA. ON THE CONTRIBUTIONS OF HELENA RASIOWA TO MATHEMATICAL LOGIC, by Josep Maria Font. AMS 1991 Subject Classification: 03-03, 01A60, 03G. Mathematics Preprint Series No. 216, October 1996. On the contributions of Helena Rasiowa to Mathematical Logic. Josep Maria Font, Faculty of Mathematics, University of Barcelona, Gran Via 585, E-08007 Barcelona, Spain, font@cerber.mat.ub.es. October 3, 1996. Introduction. Let me begin with some personal reminiscences. For me, writing a paper on the contributions of HELENA RASIOWA (1917-1994) to Mathematical Logic might be the result of several unexpected coincidences. My relationship with RASIOWA is academic rather than personal. Her book An algebraic approach to non-classical logics [41] is one of the main sources that might be found guilty of my professional dedication to research in Algebraic Logic, if some day I am taken to court for such an extravagant behaviour. Personally, I first met RASIOWA only in 1988, at the 18th ISMVL, held in Palma de Mallorca (Spain). There I contributed a paper [15] on an abstract characterization of BELNAP's four-valued logic which used a mathematical tool inspired by some work done by BIALINICKI-BIRULA and RASIOWA in the fifties [3]. More precisely, I used a mathematical tool abstracted from the works of MONTEIRO on DE MORGAN algebras [26, 27], and these works, in turn, were based on that early work of BIALINICKI-BIRULA and RASIOWA. Two years later, that is, in 1990, I attended the 20th ISMVL, held in Charlotte (North Carolina, U.S.A.).

Scopus Indexed Journals, Updated List 2018

Scopus indexed journals, updated list 2018. Poster, October 2017. DOI: 10.13140/RG.2.2.34648.60168. Author: Ahmad Rayan, Zarqa University. Available at: https://www.researchgate.net/publication/320410854 (uploaded by Ahmad Rayan on 15 October 2017). Columns: Source Title; Print-ISSN; E-ISSN; Open Access status, i.e., registered in DOAJ and/or ROAD (status March 2016). Entries: 2D Materials, 20531583; 3 Biotech, 2190572X, 21905738, DOAJ/ROAD Open Access; 3D Research, 20926731; 3L: Language, Linguistics, Literature, 01285157, DOAJ/ROAD Open Access; 4OR, 16194500, 16142411; A & A case reports, 23257237; A + U-Architecture and Urbanism, 03899160; A Contrario. Revue interdisciplinaire de sciences sociales, 16607880; a/b: Auto/Biography Studies, 21517290; A|Z ITU Journal of Faculty of Architecture, 13028324; AAA, Arbeiten aus Anglistik und Amerikanistik, 01715410; AAC: Augmentative and Alternative Communication, 07434618, 14773848; AACL Bioflux, 18448143, 18449166, DOAJ/ROAD Open Access; AACN Advanced Critical Care, 15597768; AANA Journal, 00946354