Open Leiyao-Dissertation.Pdf

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Knowledge, Imagination, and Stories in the Aesthetic Experience of Forests

KNOWLEDGE, IMAGINATION, AND STORIES IN THE AESTHETIC EXPERIENCE OF FORESTS JUKKA MIKKONEN A key dispute in environmental aesthetics concerns the role of scientific knowledge in our aesthetic appreciation of the natural environment. In this article, I will explore this debate by focusing on the aesthetic experience of forests. I intend to question reductive forms of the scientific approach and support the role of imagination and stories in nature appreciation. I. INTRODUCTION There has long been a debate in environmental aesthetics about the guiding principles of the aesthetic appreciation of nature. According to the ‘scientific approach’, supported by philosophers like Allen Carlson, Holmes Rolston III, Marcia Muelder Eaton, Robert S. Fudge, Patricia Matthews, and Glenn Parsons, the aesthetic appreciation of nature ought to be based on our scientific knowledge of nature, such as our knowledge of natural history. In turn, philosophers who have argued for ‘non-scientific approaches’, like Ronald Hepburn, Arnold Berleant, Stan Godlovitch, Noël Carroll, and Emily Brady, have maintained that the aesthetic interpretation and evaluation of nature is essentially an imaginative, emotional, or bodily activity. In this paper I shall explore the debate by focusing on the aesthetic experience of forests. The distinctive experience of a forest, together with the mythological and cultural meanings of woodlands and trees, provides an intriguing opportunity to examine the relationship between the essential and the accidental, or the global and the local, in philosophical aesthetics. It is an opportunity to explore what we look at when we look at nature. Indeed, trees, for instance, have a vast amount of symbolic value.

STEM Subjects Face the Haptic Generation: The iScholar

STEM Subjects Face the Haptic Generation: The iScholar. Doctoral thesis. Nuria Llobregat Gómez. Supervisor: Dr. D. Luis Manuel Sánchez Ruiz. Valencia, November 2019. To my Mother, to my Father (†), to my Grandparents (†), and to my Daughter, without whose existence this could not have happened. Contents: Abstract. English Version; Resumen. Spanish Version; Resum. Valencian Version; Acknowledgements; Introduction; Outsight; Insight; Statement of the Research Questions; Dissertation Structure; SECTION A. State of the Art. The Drivers; Chapter 1: Haptic Device Irruption; 1.1 Science or Fiction? Some Historical Facts; 1.2 The Irruptive Perspective; 1.2.1 i_Learn & i_Different; 1.2.2 Corporate Discourse and Education; 1.2.3 Size & Portability Impact; First Devices; Pro Models

Computational Media Aesthetics for Media

COMPUTATIONAL MEDIA AESTHETICS FOR MEDIA SYNTHESIS XIANG YANGYANG (B.Sci., Fudan Univ.) A THESIS SUBMITTED FOR THE DEGREE OF DOCTOR OF PHILOSOPHY NUS GRADUATE SCHOOL FOR INTEGRATIVE SCIENCES AND ENGINEERING NATIONAL UNIVERSITY OF SINGAPORE 2013 DECLARATION I hereby declare that this thesis is my original work and it has been written by me in its entirety. I have duly acknowledged all the sources of information which have been used in the thesis. This thesis has also not been submitted for any degree in any university previously. XIANG YANGYANG January 2014 ACKNOWLEDGMENTS First and foremost, I would like to thank my supervisor Professor Mohan Kankanhalli for his continuous support during my Ph.D study. His patience, enthusiasm, immense knowledge and guidance helped me throughout the research and writing of this thesis. I would like to thank my Thesis Advisory Committee members: Prof. Chua Tat-Seng, and Dr. Tan Ping for their insightful comments and questions. I also want to thank all the team members of the Multimedia Analysis and Synthesis Laboratory, without whom the thesis would not have been possible at all. Last but not the least, I would like to express my appreciation to my family. They have spiritually supported and encouraged me through the whole process. ABSTRACT Aesthetics is a branch of philosophy and is closely related to the nature of art. It is common to think of aesthetics as a systematic study of beauty, and one of its major concerns is the evaluation of beauty and ugliness. Applied media aesthetics deals with basic media elements, and aims to constitute formative evaluations as well as help create media products.

AMNH Digital Library

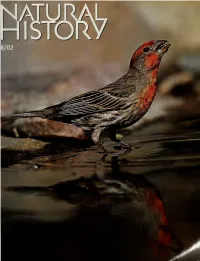

THERE ARE THOSE WHO DO. AND THOSE WHO WOULDACOULDASHOULDA. Which one are you? If you're the kind of person who's willing to put it all on the line to pursue your goal, there's AIG. The organization with more ways to manage risk and more financial solutions than anyone else. Everything from business insurance for growing companies to travel-accident coverage to retirement savings plans. All to help you act boldly in business and in life. So the next time you're facing an uphill challenge, contact AIG. THE GREATEST RISK IS NOT TAKING ONE: AIG INSURANCE, FINANCIAL SERVICES AND THE FREEDOM TO DARE. Insurance and services provided by members of American International Group, Inc., 70 Pine Street, Dept. A, New York, NY 10270. www.aig.com TODAY TOMORROW TOYOTA Each year Toyota builds more than one million vehicles in North America. This means that we use a lot of resources: steel, aluminum, and plastics, for instance. But at Toyota, large scale manufacturing doesn't mean large scale waste. In 1992 we introduced our Global Earth Charter to promote environmental responsibility throughout our operations. And in North America it is already reaping significant benefits. We recycle 376 million pounds of steel annually, and aggressive recycling programs keep 18 million pounds of other scrap materials from landfills. Of course, no one ever said that looking after the Earth's resources is easy. But as we continue to strive for greener ways to do business, there's one thing we're definitely not wasting. And that's time. www.toyota.com/tomorrow ©2001 JUNE 2002 VOLUME 111 NUMBER 5 FEATURES AVIAN QUICK-CHANGE ARTISTS How do house finches thrive in so many environments? By reshaping themselves.

The Literature of Kita Morio DISSERTATION Presented In

Insignificance Given Meaning: The Literature of Kita Morio DISSERTATION Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University By Masako Inamoto Graduate Program in East Asian Languages and Literatures The Ohio State University 2010 Dissertation Committee: Professor Richard Edgar Torrance Professor Naomi Fukumori Professor Shelley Fenno Quinn Copyright by Masako Inamoto 2010 Abstract Kita Morio (1927-), also known as his literary persona Dokutoru Manbô, is one of the most popular and prolific postwar writers in Japan. He is also one of the few Japanese writers who have simultaneously and successfully produced humorous, comical fiction and essays as well as serious literary works. He has worked in a variety of genres. For example, The House of Nire (Nireke no hitobito), his most prominent work, is a long family saga informed by history and Dr. Manbô at Sea (Dokutoru Manbô kôkaiki) is a humorous travelogue. He has also produced in other genres such as children's stories and science fiction. This study provides an introduction to Kita Morio's fiction and essays, in particular, his versatile writing styles. Also, through the examination of Kita's representative works in each genre, the study examines some overarching traits in his writing. For this reason, I have approached his large body of works by according a chapter to each genre. Chapter one provides a biographical overview of Kita Morio's life up to the present. The chapter also gives a brief biographical sketch of Kita's father, Saitô Mokichi (1882-1953), who is one of the most prominent tanka poets in modern times.

Vincent Van Gogh et le Japon

"Vincent Van Gogh et le Japon", conférence donnée au centenaire de la mort du peintre à Auvers-sur-Oise,le premier juin 1990, Jinbunronsô,『人文論叢』、三重大学人文学部 Faculty of Humanities and Social Sciences, Mié University, March 25, 1991 pp.77-93. linbun linbun Jinbun RonsoRonso,, Mie University No. 8 ,1991,1991 Vincent Van Gogh et le JJaponapon -au centenaire de la mort du peintre conferenceconférence donneedonnée aà Auvers-sur-OiseAuvers-sur-Oise.!, /1 1 juin 1990 け11ft4 Shigemi IN AG A MesdamesMesdames,, mesdemoiselles mesdemoiselles, , messieursmessieurs, , mes chers amis ,, Je Je suis tres très f1 flatté atte d'etre d'être invite invité par le Bateau DaphneDaphné aà participer aà une manifestaion qu'il a organisee organisee organisée sous les auspices de l' l'AmbassadeAmbassade du Japon. C' C'est est aussi pour moi un grand honneur de vous parler de nouveau de Van GoghGogh,, en commemorationcommémoration de son centenaire. En effet effet, , j'ai deja deja déjà eu l' l'occasion,occasion , en automne 1987 1987,, d'assurer une conference conférence au Centre culturel et d'informa- d'informa tion tion de l' l'AmbassadeAmbassade du ]aJaponpon a Paris . (I (l)Kenneth )Kenneth WhiteWhite,, poete poète voyageur et moimoi,, en tant qu' historien historien d'art d'art,, nous avons presente présenté un artiste japonais que nous admirons tous les deux: Hiroshige. Hiroshige. 11 Il s'agissait de feter fêter un peu officiellement la publication en francais français d'un superbe album de Hiroshige : Cent vues celebres célèbres d'Edoquid'Edo, qui reunit réunit les dernieres dernières images laissees laissées par ce dessinateur dessinateur de l' l'estampeestampe japonaise. -

The Social and Environmental Turn in Late 20th Century Art

THE SOCIAL AND ENVIRONMENTAL TURN IN LATE 20TH CENTURY ART: A CASE STUDY OF HELEN AND NEWTON HARRISON AFTER MODERNISM A DISSERTATION SUBMITTED TO THE PROGRAM IN MODERN THOUGHT AND LITERATURE AND THE COMMITTEE ON GRADUATE STUDIES OF STANFORD UNIVERSITY IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY LAURA CASSIDY ROGERS JUNE 2017 © 2017 by Laura Cassidy Rogers. All Rights Reserved. Re-distributed by Stanford University under license with the author. This work is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 United States License. http://creativecommons.org/licenses/by-nc-sa/3.0/us/ This dissertation is online at: http://purl.stanford.edu/gy939rt6115 Includes supplemental files: 1. (Rogers_Circular Dendrogram.pdf) 2. (Rogers_Table_1_Primary.pdf) 3. (Rogers_Table_2_Projects.pdf) 4. (Rogers_Table_3_Places.pdf) 5. (Rogers_Table_4_People.pdf) 6. (Rogers_Table_5_Institutions.pdf) 7. (Rogers_Table_6_Media.pdf) 8. (Rogers_Table_7_Topics.pdf) 9. (Rogers_Table_8_ExhibitionsPerformances.pdf) 10. (Rogers_Table_9_Acquisitions.pdf) I certify that I have read this dissertation and that, in my opinion, it is fully adequate in scope and quality as a dissertation for the degree of Doctor of Philosophy. Zephyr Frank, Primary Adviser I certify that I have read this dissertation and that, in my opinion, it is fully adequate in scope and quality as a dissertation for the degree of Doctor of Philosophy. Gail Wight I certify that I have read this dissertation and that, in my opinion, it is fully adequate in scope and quality as a dissertation for the degree of Doctor of Philosophy. Ursula Heise Approved for the Stanford University Committee on Graduate Studies. Patricia J.

Adaptive Sparse Coding for Painting Style Analysis

Adaptive Sparse Coding for Painting Style Analysis Zhi Gao1∗, Mo Shan2∗, Loong-Fah Cheong3, and Qingquan Li4,5 1 Interactive and Digital Media Institute, National University of Singapore, Singapore 2 Temasek Laboratories, National University of Singapore 3 Electrical and Computer Engineering Department, National University of Singapore 4 The State Key Laboratory of Information Engineering in Surveying, Mapping, and Remote Sensing, Wuhan University, Wuhan, China 5 Shenzhen Key Laboratory of Spatial Smart Sensing and Services, Shenzhen University, Shenzhen, China Abstract. Inspired by the outstanding performance of sparse coding in applications of image denoising, restoration, classification, etc., we propose an adaptive sparse coding method for painting style analysis that is traditionally carried out by art connoisseurs and experts. Significantly improved over previous sparse coding methods, which heavily rely on the comparison of query paintings, our method is able to determine the authenticity of a single query painting based on estimated decision boundary. Firstly, discriminative patches containing the most representative characteristics of the given authentic samples are extracted via exploiting the statistical information of their representation on the DCT basis. Subsequently, the strategy of adaptive sparsity constraint which assigns higher sparsity weight to the patch with higher discriminative level is enforced to make the dictionary trained on such patches more exclusively adaptive to the authentic samples than via previous sparse coding algorithms. Relying on the learnt dictionary, the query painting can be authenticated if both better denoising performance and higher sparse representation are obtained, otherwise it should be denied.

Japonisme in Britain - a Source of Inspiration: J

Japonisme in Britain - A Source of Inspiration: J. McN. Whistler, Mortimer Menpes, George Henry, E.A. Hornel and nineteenth century Japan. Thesis Submitted for the Degree of Doctor of Philosophy in the Department of History of Art, University of Glasgow. By Ayako Ono vol. 1. © Ayako Ono 2001 ProQuest Number: 13818783 All rights reserved INFORMATION TO ALL USERS The quality of this reproduction is dependent upon the quality of the copy submitted. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if material had to be removed, a note will indicate the deletion. ProQuest 13818783 Published by ProQuest LLC (2018). Copyright of the Dissertation is held by the Author. All rights reserved. This work is protected against unauthorized copying under Title 17, United States Code Microform Edition © ProQuest LLC. ProQuest LLC. 789 East Eisenhower Parkway P.O. Box 1346 Ann Arbor, MI 48106-1346 GLASGOW UNIVERSITY LIBRARY Abstract Japan held a profound fascination for Western artists in the latter half of the nineteenth century. The influence of Japanese art is a phenomenon that is now called Japonisme, and it spread widely throughout Western art. It is quite hard to make a clear definition of Japonisme because of the breadth of the phenomenon, but it could be generally agreed that it is an attempt to understand and adapt the essential qualities of Japanese art. This thesis explores Japanese influences on British Art and will focus on four artists working in Britain: the American James McNeill Whistler (1834-1903), the Australian Mortimer Menpes (1855-1938), and two artists from the group known as the Glasgow Boys, George Henry (1858-1934) and Edward Atkinson Hornel (1864-1933).

The Letters of Vincent Van Gogh

THE LETTERS OF VINCENT VAN GOGH ‘Van Gogh’s letters… are one of the greatest joys of modern literature, not only for the inherent beauty of the prose and the sharpness of the observations but also for their portrait of the artist as a man wholly and selflessly devoted to the work he had to set himself to’ - Washington Post ‘Fascinating… letter after letter sizzles with colorful, exacting descriptions … This absorbing collection elaborates yet another side of this beguiling and brilliant artist’ - The New York Times Book Review ‘Ronald de Leeuw’s magnificent achievement here is to make the letters accessible in English to general readers rather than art historians, in a new translation so excellent I found myself reading even the well-known letters as if for the first time… It will be surprising if a more impressive volume of letters appears this year’ - Observer ‘Any selection of Van Gogh’s letters is bound to be full of marvellous things, and this is no exception’ - Sunday Telegraph ‘With this new translation of Van Gogh’s letters, his literary brilliance and his statement of what amounts to prophetic art theories will remain as a force in literary and art history’ - Philadelphia Inquirer ‘De Leeuw’s collection is likely to remain the definitive volume for many years, both for the excellent selection and for the accurate translation’ - The Times Literary Supplement ‘Vincent’s letters are a journal, a meditative autobiography… You are able to take in Vincent’s extraordinary literary qualities … Unputdownable’ - Daily Telegraph ABOUT THE AUTHOR, EDITOR AND TRANSLATOR VINCENT WILLEM VAN GOGH was born in Holland in 1853.

The Coconut Crab

The Coconut Crab The Australian Centre for International Agricultural Research (ACIAR) was established in June 1982 by an Act of the Australian Parliament. Its mandate is to help identify agricultural problems in developing countries and to commission collaborative research between Australian and developing country researchers in fields where Australia has a special research competence. Where trade names are used this constitutes neither endorsement of nor discrimination against any product by the Centre. ACIAR Monograph Series This peer-reviewed series contains the results of original research supported by ACIAR, or material deemed relevant to ACIAR's research objectives. The series is distributed internationally, with an emphasis on developing countries. Reprinted 1992 © Australian Centre for International Agricultural Research G.P.O. Box 1571, Canberra, ACT, Australia 2601 Brown, I.W. and Fielder, D.R. 1991. The Coconut Crab: aspects of the biology and ecology of Birgus latro in the Republic of Vanuatu. ACIAR Monograph No. 8, 136 p. ISBN 1 86320 054 1 Technical editing: Apword Partners, Canberra Production management: Peter Lynch Design and production: BPD Graphic Associates, Canberra, ACT Printed by: Goanna Print, Fyshwick The Coconut Crab: aspects of the biology and ecology of Birgus latro in the Republic of Vanuatu Editors I.W. Brown and D.R. Fielder Australian Centre for International Agricultural Research, Canberra, Australia 1991 The Authors I.W. Brown. Queensland Department of Primary Industries, Southern Fisheries Centre, PO Box 76, Deception Bay, Queensland, Australia D.R. Fielder. Department of Zoology, University of Queensland, St Lucia, Queensland, Australia W.J. Fletcher. Western Australian Marine Research Laboratories, PO Box 20, North Beach, Western Australia, Australia S.

ED388602.Pdf

DOCUMENT RESUME ED 388 602 SO 025 569 AUTHOR Csikszentmihalyi, Mihaly; Robinson, Rick E. TITLE The Art of Seeing: An Interpretation of the Aesthetic Encounter. INSTITUTION Getty Center for Education in the Arts, Los Angeles, CA.; J. Paul Getty Museum, Malibu, CA. REPORT NO ISBN-0-89236-156-5 PUB DATE 90 NOTE 224p. AVAILABLE FROM Getty Center for Education in the Arts, 401 Wilshire Blvd., Suite 950, Santa Monica, CA 90401. PUB TYPE Books (010) Reports Research/Technical (143) Tests/Evaluation Instruments (160) EDRS PRICE MF01/PC09 Plus Postage. DESCRIPTORS Aesthetic Education; *Aesthetic Values; Art; *Art Appreciation; Art Criticism; Audience Response; *Critical Viewing; Higher Education; Museums; Perception; Professional Education; Sensory Experience; Skill Analysis; Visual Arts; *Visual Literacy; Visual Stimuli ABSTRACT This study attempts to gain information concerning the receptive, as opposed to the creative, aesthetic experience by talking to museum professionals who spend their working lives identifying, appraising, and explicating works of art. The study is based on an underlying assumption that rules and practices for looking at art exist and must be mastered if success is to ensue. The anthropological research approach uses semi-structured interviews and subjects the responses to systematic analysis. Major conclusions emphasize the unity and diversity of the aesthetic experience. The structure of the aesthetic experience is found to be an intense involvement of attention in response to a visual stimulus, for no other reason than to sustain the interaction. The experiential consequences of such a deep and autotelic involvement are an intense enjoyment characterized by feelings of personal wholeness, a sense of discovery, and a sense of human connectedness.