Design Document V3

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Understanding the Value of Arts & Culture | the AHRC Cultural Value

Understanding the value of arts & culture: The AHRC Cultural Value Project. Geoffrey Crossick & Patrycja Kaszynska

CONTENTS
Foreword
Executive summary
Part 1 Introduction
1. Rethinking the terms of the cultural value debate: The Cultural Value Project; Culture and art: a brief intellectual history; Cultural policy and the many lives of cultural value; Beyond dichotomies: the view from Cultural Value Project awards; Prioritising experience and methodological diversity
2. Cross-cutting themes: Modes of cultural engagement; Arts and culture in an unequal society; Digital transformations; Wellbeing and capabilities
Part 2 Components of Cultural Value
3. The reflective individual: Cultural engagement and the self; Case study: arts, culture and the criminal justice system; Cultural engagement and the other; Case study: professional and informal carers; Culture and international influence
4. The engaged citizen: civic agency & civic engagement: Preconditions for political engagement; Civic space and civic engagement: three case studies; Creative challenge: cultural industries, digging and climate change; Culture, conflict and post-conflict: a double-edged sword?
5. Communities, Regeneration and Space: Place, identity and public art; Urban regeneration; Creative places, creative quarters; Community arts; Coda: arts, culture and rural communities
6. Economy: impact, innovation and ecology: The economic benefits of what?; Ways of counting; Agglomeration and attractiveness; The innovation economy; Ecologies of culture
7. Health, ageing and wellbeing: Therapeutic, clinical and environmental interventions; Community-based arts and health; Longer-term health benefits and subjective wellbeing; Ageing and dementia; Two cultures?
8. -

Free Views Tiktok

Free Views Tiktok Free Views Tiktok CLICK HERE TO ACCESS TIKTOK GENERATOR tiktok auto liker hack In July 2021, "The Wall Street Journal" reported the company's annual revenue to be approximately $800 million with a loss of $70 million. By May 2021, it was reported that the video-sharing app generated $5.2 billion in revenue with more than 500 million users worldwide.", free vending machine code tiktok free pro tiktok likes and followers free tiktok fans without downloading any apps The primary difference between Tencent’s WeChat and ByteDance’s Toutiao is that the former has yet to capitalize on the addictive nature of short-form videos, whereas the latter has. TikTok — the latter’s new acquisition — is a comparatively more simple app than its parent company, but it does fit in well with Tencent’s previous acquisition of Meitu, which is perhaps better known for its beauty apps.", In an article published by The New York Times, it was claimed that "An app with more than 500 million users can’t seem to catch a break. From pornography to privacy concerns, there have been quite few controversies surrounding TikTok." It continued by saying that "A recent class-action lawsuit alleged that the app poses health and privacy risks to users because of its allegedly discriminatory algorithm, which restricts some content and promotes other content." This article was published on The New York Times.", The app has received criticism from users for not creating revenue and posting ads on videos which some see as annoying. The app has also been criticized for allowing children younger than age 13 to create videos. -

FEBRUARY 2021 Company Overview: Parallel / Ceres Acquisition Corp

FEBRUARY 2021 Company Overview: Parallel / Ceres Acquisition Corp. SPAC Transaction DISCLOSURES CAUTIONARY STATEMENT This presentation is provided for informational purposes only and has been prepared to assist interested parties in making their own evaluation with respect to an investment in securities in connection with a potential business combination between SH Parent, Inc., a Delaware corporation (“Parallel” or the “Corporation”) and Ceres Acquisition Corp. (“Ceres”) and related transactions (collectively, the “Transaction”) and for no other purpose. This presentation does not contain, nor does it purport to contain, a summary of all the material information concerning Parallel or the terms and conditions of any potential investment in connection with the Transaction. If and when you determine to proceed with discussions and investigations regarding a possible investment in connection with the Transaction, prospective investors are urged to carry out independent investigations in order to determine their interest in investing in connection with the Transaction. The information contained in this presentation has been prepared by Parallel and Ceres and contains confidential information pertaining to the business and operations of the Corporation following the Transaction (the “Resulting Company”). The information contained in this presentation: (a) is provided as at the date hereof, is subject to change without notice, and is based on publicly available information, internally developed data as well as third party information from other -

Google's Next Generation Music Recognition

Google’s Next Generation Music Recognition By: Yash Dadia www.attuneww.com All Rights Reserved

Table of Content: Google’s “Now Playing” Introduction; How to set Now Playing on your Device; Now Playing versus Sound Search; The Core Matching Process of Now Playing; Increasing Up Now Playing for the Sound Search Server; Updated Overview of Now Playing; About Attune

Google’s “Now Playing” Introduction
● In 2017 Google launched Now Playing on the Pixel 2, using deep neural networks to bring low-power, always-on music recognition to mobile devices. In developing Now Playing, Google’s goal was to create a small, efficient music recognizer that requires only a very small fingerprint for each track in the database, allowing music recognition to run fully on-device without an internet connection.
● As it turns out, Now Playing was not only useful as an on-device music recognizer, but also greatly exceeded the accuracy and efficiency of Google’s then-current server-side system, Sound Search, which was built before the widespread use of deep neural networks. Naturally, Google wondered if they could bring the same technology that powers Now Playing to the server-side Sound Search, with the goal of making Google’s music recognition capabilities the best in the world.
● Recently, Google introduced a new version of Sound Search that is powered by some of the same technology used by Now Playing. You can use it through the Google Search app or the Google Assistant on any Android device. Just start a voice query, and if there’s music playing near you, a “What’s this song?” suggestion will pop up for you to press. You can also ask, “Hey Google, what’s this song?” With the latest version of Sound Search, you’ll get faster, more accurate results than ever before!

How to set Now Playing on your Device
● If you have used Google to identify a song with your device, you’ve probably seen how to find all those past discoveries. -

Google Buys Songza Streaming Music Service 1 July 2014

Google buys Songza streaming music service 1 July 2014

[Image caption: The Google logo seen at Google headquarters in Mountain View, California on September 2, 2011]

Google on Tuesday said that it has bought Songza, a free online streaming music service that recommends tunes based on what people might be in the mood to hear.

Financial terms of the deal were not disclosed, but unconfirmed online reports valued the deal at around $15 million.

"We're thrilled to announce that we're becoming part of Google," Songza said on its website.

It has applications tailored for mobile devices powered by Apple or Google-backed Android software. Songza features that have resonated with users will be woven into Google Play Music and YouTube where possible, the California-based technology titan said in a post at its Google+ social network. "They've built a great service which uses contextual expert-curated playlists to give you the right music at the right time," Google said.

New York-based Songza has been likened to Pandora, which has a leading ad-supported streaming music model. Google, Amazon and Apple have music services that compete in a market where Pandora and Spotify have found success.

Apple in May bought Beats Music and Beats Electronics in a deal worth $3 billion to bolster its position in the hotly contested online music sector. The move is expected to help the US tech giant, a pioneer in digital music with its wildly popular iTunes platform, ramp up its efforts to counter the successful models of streaming services like Pandora, Spotify and others. -

2012 Yearbook 1 Contents

THIS IS NUTH. PoP’s first student 2012 YEARBOOK 1 CONTENTS. 10 OUR IMPACT Faculty + Superlatives How We Build Board of Directors Hello, Ghana The Staff Local Staff Beyond the Build 32 OUR FINANCIALS Monitoring & Evaluation With Gratitude WHERE YOU START 18 OUR COMMUNITY 40 THE NEXT CHAPTER The Impossible Ones Schools4All SHOULD NOT DEFINE WHERE YOU FINISH. Our Second Gala Leadership Councils Digital Engagement PoP in the Press 26 WHO WE ARE Our New Homeroom Company Culture Partnerships 2 3 2012 TIMELINE. JANUARY 2013 We complete our Guatemala breaks 100th school ground on a school school every day for one week AUGUST 2012 Teacher training and JUNE 2012 NOVEMBER 2012 PoP opens its student scholarships 17 year-old launch in Laos Schools4All Kennedy starts Ghana office launches and FEBRUARY 2012 her cross-country OCTOBER 2012 raises $300,000+ PoP moves into a bike ride for PoP Our second gala JANUARY 2012 JULY 2012 SEPTMEMBER 2012 raises $1.5 million grown-up office PoP breaks ground PoP completes its space Sophia Bush in one night 50th school donates her 30th in Ghana birthday to PoP JAN JUN JUL AUG OCT NOV JAN 4 5 ACCOMPLISHMENTS. PENCILS OF PROMISE BELIEVES PHOTO NICK BY ONKEN EVERY CHILD SHOULD HAVE ACCESS TO QUALITY EDUCATION. WE CREATE STUDENTS SERVED 111 VOLUNTEER HOURS 7, 233 + 150,000 SCHOOLS, PROGRAMS AND GLOBAL COM SCHOOLS MUNITIES AROUND THE COMMON GOAL LIVES IMPACTED INSTRUCTIONAL HOURS 99,259 4,833,000 OF EDUCATION FOR ALL. AS OF JANUARY 2013, WE HAVE BROKEN GROUND ON OVER 100 SCHOOLS. MISSION STATEMENT. -

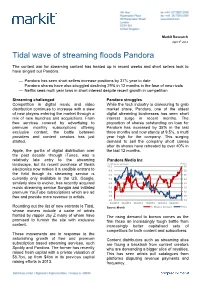

Tidal Wave of Streaming Floods Pandora

Markit Research April 9th 2014 Tidal wave of streaming floods Pandora

The content war for streaming content has heated up in recent weeks and short sellers look to have singled out Pandora.

Pandora has seen short sellers increase positions by 37% year to date
Pandora shares have also struggled, declining 29% in 12 months in the face of new rivals
Netflix sees multi year lows in short interest despite recent growth in competition

Streaming challenged
Competition in digital music and video distribution continues to increase with a slew of new players entering the market through a mix of new launches and acquisitions. From free services covered by advertising to premium monthly subscriptions offering exclusive content, the battle between providers and content creators has just started.

Apple, the gorilla of digital distribution over the past decade through iTunes, was a relatively late entry to the streaming landscape, but its recent purchase of Beats electronics now makes it a credible entrant to the field though its streaming service is currently only available in the US.

Pandora struggles
While the tech industry is clamouring to grab market share, Pandora, one of the oldest digital streaming businesses, has seen short interest surge in recent months. The proportion of shares outstanding on loan for Pandora has increased by 35% in the last three months and now stands at 9.5%, a multi year high for the company. This surging demand to sell the company short comes after its shares have retreated by over 40% in the last 12 months.

[Chart: Pandora Media Inc, % shares on loan and price] -

The Role of Emotion and Context in Musical Preference

The Role of Emotion and Context in Musical Preference Yading Song PhD thesis Thesis submitted in partial fulfilment of the requirements for the degree of Doctor of Philosophy of the University of London School of Electronic Engineering and Computer Science Queen Mary, University of London United Kingdom January 2016 Abstract The powerful emotional effects of music increasingly attract the attention of music information retrieval researchers and music psychologists. In the past decades, a gap exists between these two disciplines, and researchers have focused on different aspects of emotion in music. Music information retrieval researchers are concerned with computational tasks such as the classification of music by its emotional content, whereas music psychologists are more interested in the understanding of emotion in music. Many of the existing studies have investigated the above issues in the context of classical music, but the results may not be applicable to other genres. This thesis focusses on musical emotion in Western popular music combining knowledge from both disciplines. I compile a Western popular music emotion dataset based on online social tags, and present a music emotion classification system using audio features corresponding to four different musical dimensions. Listeners' perceived and induced emotional responses to the emotion dataset are compared, and I evaluate the reliability of emotion tags with listeners' ratings of emotion using two dominant models of emotion, namely the categorical and the dimensional emotion models. In the next experiment, I build a dataset of musical excerpts identified in a questionnaire, and I train my music emotion classification system with these audio recordings. -

100+ Alternative Search Engines You Should Know by Kay Tan

100+ Alternative Search Engines You Should Know By Kay Tan. Filed in Tools.

Google is the most powerful search engine available, but when it comes to searching for something specific, Google may churn out general results for some. For instance, a search for a song in Google may return the singer, the lyrics and even fan sites – depending on the keywords you entered. This is when niche-specific search engines come into the picture. These search engines allow you to search for the things you’re looking for, and because they are more focused, their results tend to be more accurate. E-books & PDF files, audio & music, videos, etc. are probably the most commonly searched items every day, and with this list you’ll be able to locate them easily from now on. Enjoy!

E-Book & PDF Search Engine
PDF Searcher: Pdf Searcher is a pdf and ebook search engine. You can search and download every ebook or pdf for free!
PDFGeni: PDFGeni is a dedicated pdf search engine for PDF ebooks, sheets, forms and documents.
Data-Sheet: Data-Sheet is a pdf ebook datasheet manual search engine where you can search any ebook datasheet pdf files and download.
PDFDatabase: PDF Database is a new search engine which uses a unique algorithm to search for pdf and doc files from all over the web quickly and efficiently.
PDF Search Engine: PDF Search Engine is a book search engine that searches sites, forums and message boards for pdf files. -


Merger Policy in Digital Markets: An Ex Post Assessment

Journal of Competition Law & Economics, 00(00), 1–46 doi: 10.1093/joclec/nhaa020

MERGER POLICY IN DIGITAL MARKETS: AN EX POST ASSESSMENT†

Elena Argentesi,∗ Paolo Buccirossi,† Emilio Calvano,‡ Tomaso Duso,§,∗ & Alessia Marrazzo,¶ & Salvatore Nava†

ABSTRACT This paper presents a broad retrospective evaluation of mergers and merger decisions in markets dominated by multisided digital platforms. First, we document almost 300 acquisitions carried out by three major tech companies—Amazon, Facebook, and Google—between 2008 and 2018. We cluster target companies on their area of economic activity providing suggestive evidence on the strategies behind these mergers. Second, we discuss the features of digital markets that create new challenges for competition policy. By using relevant case studies as illustrative examples, we discuss theories of harm that have been used or, alternatively, could have been formulated by authorities in these cases. Finally, we retrospectively examine two important merger cases, Facebook/Instagram and Google/Waze, providing a systematic assessment of the theories of harm considered by the UK competition authorities as well as evidence on the evolution of the market after the transactions were approved. We discuss whether the competition authority performed complete and careful analyses to foresee the competitive consequences of the investigated mergers and whether a more effective merger control regime can be achieved within the current legal framework.

JEL codes: L4; K21

∗ Department of Economics, University of Bologna
† Lear, Rome
‡ Department of Economics, University of Bologna, Toulouse School of Economics and CEPR, London
§ Deutsches Institut fuer Wirtschaftsforschung (DIW Berlin), Department of Economics, Technical University (TU) Berlin, CEPR, London and CESifo, Munich
¶ Lear, Rome and Department of Economics, University of Bologna
∗ Corresponding author. -

255 Responses

255 responses. Not accepting responses. Message for respondents: The form "Effects of Demographic Differences in the World of Music Streaming" is no longer accepting responses.

How old are you? (255 responses) 18-24 25-34 35-44 45-54 55+ 95.7%

What is your gender? (255 responses) Male 57.6% Female Prefer not to answer 41.6%

Which media platforms do you prefer to use? (254 responses) Spotify 163 (64.2%) Pandora 35 (13.8%) Soundcloud 96 (37.8%) YouTube 142 (55.9%) Other 45 (17.7%)

Why do you prefer that media platform? (255 responses) I love spotify because everything is there and I can use it on my phone easily Soundcloud is cool for discovering music Youtube has everything that isn't on either Convenient Easy 320kbps good quality music, has almost everykind of music. All above are easy to use. Don't like the adds though. Spotify allows music exploration on specific genres. Soundcloud has unique sounds from artists. Both are convenient for on the go play. Spotify: Can choose songs you want to listen to, unlimited plays, discover new music, make playlists, makes playlists for you Youtube: Lots of music you can't find anywhere else (e.g. covers, underground artists, non-English), lots of good recommendations, free, unlimited plays, makes playlists for you, can make playlists Spotify - easy to store playlists of songs; Soundcloud - coolest remixes, best for discovering music; Youtube - autoplay of related songs (Soundcloud has it too but Youtube's variety is somehow better imo) Spotify-because I get to chose what I'm listening to Pandora- because it's a good place to find new music YouTube-because I get to chose what I'm listening to Its between that and Spotify, but I like 8 tracks because its in the browser.