Procedural Generation of Cave-Like Maps for 2D Top-Down Games

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

-

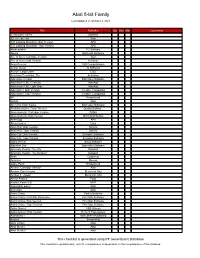

Atari 8-Bit Family

Atari 8-bit Family Last Updated on October 2, 2021 Title Publisher Qty Box Man Comments 221B Baker Street Datasoft 3D Tic-Tac-Toe Atari 747 Landing Simulator: Disk Version APX 747 Landing Simulator: Tape Version APX Abracadabra TG Software Abuse Softsmith Software Ace of Aces: Cartridge Version Atari Ace of Aces: Disk Version Accolade Acey-Deucey L&S Computerware Action Quest JV Software Action!: Large Label OSS Activision Decathlon, The Activision Adventure Creator Spinnaker Software Adventure II XE: Charcoal AtariAge Adventure II XE: Light Gray AtariAge Adventure!: Disk Version Creative Computing Adventure!: Tape Version Creative Computing AE Broderbund Airball Atari Alf in the Color Caves Spinnaker Software Ali Baba and the Forty Thieves Quality Software Alien Ambush: Cartridge Version DANA Alien Ambush: Disk Version Micro Distributors Alien Egg APX Alien Garden Epyx Alien Hell: Disk Version Syncro Alien Hell: Tape Version Syncro Alley Cat: Disk Version Synapse Software Alley Cat: Tape Version Synapse Software Alpha Shield Sirius Software Alphabet Zoo Spinnaker Software Alternate Reality: The City Datasoft Alternate Reality: The Dungeon Datasoft Ankh Datamost Anteater Romox Apple Panic Broderbund Archon: Cartridge Version Atari Archon: Disk Version Electronic Arts Archon II - Adept Electronic Arts Armor Assault Epyx Assault Force 3-D MPP Assembler Editor Atari Asteroids Atari Astro Chase Parker Brothers Astro Chase: First Star Rerelease First Star Software Astro Chase: Disk Version First Star Software Astro Chase: Tape Version First Star Software Astro-Grover CBS Games Astro-Grover: Disk Version Hi-Tech Expressions Astronomy I Main Street Publishing Asylum ScreenPlay Atari LOGO Atari Atari Music I Atari Atari Music II Atari This checklist is generated using RF Generation's Database This checklist is updated daily, and it's completeness is dependent on the completeness of the database. -

Download Jumpman Mp3 Free Download Jumpman Junior

download jumpman mp3 free Download Jumpman Junior. Jumpman Junior is a video game published in 1983 on Commodore 64 by Epyx, Inc.. It's an action game, set in a platform theme, and was also released on Atari 8-bit and ColecoVision. Captures and Snapshots. Comments and reviews. Sonic25 2020-12-22 0 point. One of my faves for the C64! Only 12 levels compared to the 30 of the original but well designed and the difficulty ramps up quicker. mymoon 2020-03-29 0 point Commodore 64 version. This is one of the most enjoyable games of its era. Still could not complete it. Should try again. Mr Vampire 2019-02-09 1 point Commodore 64 version. As good as the original Jumpman. Played this almost as much as the first one back in the 80's when dayglow socks were trendy not camp. Enric 2018-08-08 1 point ColecoVision version. I had this on collecovision and is an mazing game for those who wish the improve on everything. Love it. unknXwn 2017-07-25 -1 point Commodore 64 version. I had this on the ol C64 in the 90's when I was a kid. I got caught up on games before I hit the Playstation. Write a comment. Share your gamer memories, help others to run the game or comment anything you'd like. If you have trouble to run Jumpman Junior (Commodore 64), read the abandonware guide first! Download Jumpman Junior. We may have multiple downloads for few games when different versions are available. Also, we try to upload manuals and extra documentations when possible. -

Press Release Retro Gaming Platform Antstream Announces First

Press Release Retro Gaming Platform Antstream Announces First Wave Partnerships with Legendary Game Brands Ahead of Global Launch SNK, Data East, Epyx, Gremlin Interactive And Technōs Among The Iconic Game Brands Players Will Find On The Service At Launch Q1 2019 London, United Kingdom - 13 December, 2018 - Antstream, the upcoming gaming platform dedicated to retro games, is proud to announce it has secured a partnership with five legendary gaming brands and will be able to offer a variety of their most famous titles at launch. Antstream will then feature multiple games from: ● SNK (Ikari Warriors) ● Data East (Joe & Mac) ● Epyx (California Games) ● Gremlin Interactive (Zool) ● Technōs (Double Dragon) Antstream will offer its subscribers an easy, legal, and fun way to play thousands of classic games that have previously been beyond the reach of most gamers. Antstream is set to launch on PC, Mac, Xbox One, Tablets and Smartphones in Q1 2019. “Giving instant access to great games is at the heart of what we are building, tracking down and securing the rights of those titles that are the foundation of videogaming is no easy task, and the partners we are able to announce today are just the tip of the iceberg of what we have planned.” said Steve Cottam CEO at Antstream. “The video games industry had been suffering from the absence of a way for many arcade games to be played years after their original platform disappeared,” said Koichi Toyama, Company President & CEO at SNK. “We are working with Antstream to right this wrong, and allow everyone to play and challenge their friends at some of our most iconic retro titles.” In the new year, Antstream will reveal more about its plan to bring back retro games to the world, players can register today on the website to be the first informed: www.antstream.com. -

Atari Games Corp. V. Nintendo of America, Inc. ATARI GAMES CORP

Atari Games Corp. v. Nintendo of America, Inc. ATARI GAMES CORP. and TENGEN, INC., Plaintiffs-Appellants, vs. NINTENDO OF AMERICA INC. AND NINTENDO CO., LTD., Defendants-Appellees. 91-1293 United States Court Of Appeals For The Federal Circuit 975 F.2d 832, 24 U.S.P.Q.2D (BNA) 1015, Copy. L. Rep. (CCH) P26,978, 1992-2 Trade Cas. (CCH) P69,969, 92 Cal. Daily Op. Service 7858, 92 Daily Journal DAR 12936, 1992 U.S. App. Decision September 10, 1992, Decided Appealed from: U.S. District Court for the Northern District of California. Judge Smith M. Laurence Popofsky, Heller, Ehrman, White & McAuliffe, of San Francisco, California, argued for plaintiffs-appellants. With him on the brief were Robert S. Venning, Peter A. Wald, Kirk G. Werner, Robert B. Hawk, Michael K. Plimack and Dale A. Rice. Also on the brief were James B. Bear, Knobbe, Martens, Olson & Bear, of Newport Beach, California and G. Gervaise Davis, II, Schroeder, Davis & Orliss, Inc., of Monterey, California. Thomas G. Gallatin, Jr., Mudge, Rose, Guthrie, Alexander & Ferdon, of New York, New York, argued for defendants-appellees. With him on the brief was John J. Kirby, Jr.. Also on the brief was Larry S. Nixon, Nixon & Vanderhye, P.C., of Arlington, Virginia. Before CLEVENGER, Circuit Judge, SMITH, Senior Circuit Judge, and RADER, Circuit Judge. [F.2d 835] RADER, Circuit Judge. Nintendo of America Inc., and Nintendo Co., Ltd. sell the Nintendo Entertainment System (NES). Two of Nintendo's competitors, Atari Games Corporation and its wholly-owned subsidiary, Tengen, Inc., sued Nintendo for, among other things, unfair competition, Sherman Act violations, and patent infringement. -

History of Video Games-Wikipedia

History of video games From Wikipedia, the free encyclopedia The Atari VCS was a popular home video game console in the late 1970s and early 1980s. Pictured is the four-switch model from 1980–1982. An Atari CX40 joystick controller, with a single button The history of video games goes as far back as the early 1950s, when academic computer scientists began designing simple games and simulations as part of their research or just for fun. At M.I.T. in the 1960s, professors and students played games such as 3D tic-tac-toe and Moon Landing. These games were played on computer such as the IBM 1560, and moves were made by means of punch cards. Video gaming did not reach mainstream popularity until the 1970s and 1980s, when video arcade games and gaming consoles using joysticks, buttons, and other controllers, along with graphics on computer screens and home computer games were introduced to the general public. Since the 1980s, video gaming has become a popular form of entertainment and a part of modern popular culture in most parts of the world. One of the early games was Spacewar!, which was developed by computer scientists. Early arcade video games developed from 1972 to 1978. During the 1970s, the first generation of home consoles emerged, including the popular game Pong and various "clones". The 1970s was also the era of mainframe computer games. The golden age of arcade video games was from 1978 to 1982. Video arcades with large, graphics- decorated coin-operated machines were common at malls and popular, affordable home consoles such as the Atari 2600 and Intellivision enabled people to play games on their home TVs. -

FULLTEXT01.Pdf

Abstract Procedural Content Generation means the algorithmic creation of game content with limited or indirect user input. This technique is currently widespread in the game industry. However, its effects when applied to elements that do not engage directly with the player, also known as Game Bits, require more research. This paper focuses on how players experience a game when these Game Bits are procedurally generated, and how this alters their will to continue playing the game. By developing and using a 2D Roguelike game to perform a qualitative study with eight participants, this dissertation shows an indication that procedurally generating Game Bits does not alter how the players experience a game or their desire to replay it. Keywords: Procedural content generation, PCG, Game Bits, Game User Experience, Roguelike. Table of Contents 1. Introduction 1 2. Background 2 2.1 Procedural Content Generation 2 2.2 PCG Methods 3 2.3 Layers of Game Content 4 2.4 PCG in games 5 2.5 Roguelikes 6 2.6 User Experience (UX) and Game User Experience 7 2.7 Color and emotion 10 2.8 Related studies 10 3. Problem 12 3.1 Method 13 3.2 Ethical considerations 15 4. The game prototype 16 4.1 Core mechanics and User Interface 16 4.2 Environment 18 4.3 Enemies 19 4.4 Environmental objects 22 5. Results and analysis 23 5.1 Participants and their background 23 5.2 General impression of the game 24 5.3 Enjoyment 25 5.4 Frustration 27 5.5 Replayability 28 5.6 Environment and aesthetic value 30 5.7 Analysis 32 6. -

Epyx-82Catalog

What is the hardest thing in the world? Tearing yourself away from an EPYX game. EPyx Catalog New! Brand New ... Brand Alien Garden You are a cosmic critter in an Alien world brimming with curious Spanking New from EPYX! crystals. Some grow, some explode-killing your critter. Others shrink-right before your eyes. Some are edible, others poisonous. Armor Assault: NATO forces clash with the Soviets in a tactical-level World War III Your challenge is to find the edible ones. And eat all you can. scenario for one or two players. (See Page 11) Dart your cosmic critter in and out between crystals. Sting them, PlatterMania: Test your skill as you perform amazing plate-balancing feats to rack up bump them or eat them to find out what each of the 20 unique pOints! (See Page 1) varieties does. Make the right move to gain the most points! You'll hear the shattering and tinkling of crystals as they grow, New World: Lead an expedition to the new world in a game of conquest and shrink and explode-all in color. colonization . (See Page 10) Rack up as many points as possible as you eat, bump and sting Monster Maze: A wild chase through a 3-D maze-earn points as you grab gold bars, your way through the garden. It's up to you to remember which gobble up vitamins and mow down monsters. (See Page 1) crystals do what. Oil Barons: Gamble for the highest stakes as you try to become an Oil Baron. A game And the garden is different every time you play. -

Definitions of “Role-Playing Games”

This is an Accepted Manuscript of a book chapter published by Routledge in Role- Playing Game Studies: Transmedia Foundations on April 4, 2018, available online: https://www.routledge.com/Role-Playing-Game-Studies-Transmedia-Foundations/Deterding-Zagal/p/book/9781138638907 Please cite as: Zagal, José P. and Deterding, S. (2018). Definitions of “Role-Playing Games”. In Zagal, José P. and Deterding, S. (eds.), Role-Playing Game Studies: Transmedia Foundations. New York: Routledge, 19-51. 2 Definitions of “Role-Playing Games” José P. Zagal; Sebastian Deterding For some, defining “game” is a hopeless task (Parlett 1999). For others, the very idea that one could capture the meaning of a word in a list of defining features is flawed, because language and meaning-making do not work that way (Wittgenstein 1963). Still, we use the word “game” every day and, generally, understand each other when we do so. Among game scholars and professionals, we debate “game” definitions with fervor and sophistication. And yet, while we usually agree with some on some aspects, we never seem to agree with everyone on all. At most, we agree on what we disagree about – that is, what disagreements we consider important for understanding and defining “games” (Stenros 2014). What is true for “games” holds doubly for “role-playing games”. In fact, role- playing games (RPGs) are maybe the most contentious game phenomenon: the exception, the outlier, the not-quite-a-game game. In their foundational game studies text Rules of Play, Salen and Zimmerman (2004, 80) acknowledge that their definition of a game (“a system in which players engage in an artificial conflict, defined by rules, that results in a quantifiable outcome”) considers RPGs a borderline case. -

Paradigm Shift: How the Evolution of Two Generations of Home

PARADIGM SHIFT: HOW THE EVOLUTION OF TWO GENERATIONS OF HOME CONSOLES, ARCADES, AND COMPUTERS INFLUENCED AMERICAN CULTURE, 1985-1995 By Jason Terence Wiley A thesis submitted to the Graduate Faculty in partial fulfillment of the requirements for the degree of MASTER OF ARTS IN HISTORY University of Central Oklahoma Spring, 2016 iii Abstract Author: Jason Terence Wiley Thesis Chair: Dr. Patricia Loughlin Title of Thesis: Paradigm Shift: How the Evolution of Two Generations of Home Consoles, Arcades, and Computers Influenced American Culture, 1985-1995 Abstract: As of 2016, unlike many popular media forms found here in the United States, video games possess a unique influence, one that gained its own a large widespread appeal, but also its own distinct cultural identity created by millions of fans both here stateside and across the planet. Yet, despite its significant contributions, outside of the gaming’s arcade golden age of the early 1980s, the history of gaming post Atari shock goes rather unrepresented as many historians simply refuse to discuss the topic for trivial reasons thus leaving a rather noticeable gap within the overall history. One such important aspect not covered by the majority of the scholarship and the primary focus of thesis argues that the history of early modern video games in the North American market did not originate during the age of Atari in the 1970s and early 1980s. Instead, the real genesis of today’s market and popular gaming culture began with the creation and establishment of the third and fourth generation of video games, which firmly solidified gaming as both a multi-billion dollar industry and as an accepted form of entertainment in the United States. -

A History of Gaming Platforms: the Commodore 64

A History of Gaming Platforms: The Commodore 64 gamasutra.com/view/feature/130406/a_history_of_gaming_platforms_the_.php By Matt Barton,Bill Loguidice Summary The Commodore 64 (C64) is perhaps the best known 8-bit computing platform ever designed, rivaled only by the Apple II in terms of popularity and longevity. Within a few short years after its introduction in 1982, the Commodore 64 dominated the low-end computer market, receiving a steady stream of software and peripheral support that lasted through the decade. In 1985, Commodore followed up with the lesser known Commodore 128 (C128), a technically superior machine that failed to win over the massive base of C64 fans and developers. History The Commodore 64 (C64) wasn't Commodore's first foray into the home computer industry. In 1977, Commodore had earned some recognition with its ground-breaking PET, which went through several iterations over the years and was quite popular in schools. The PET was followed by the VIC 20 in 1981, the direct ancestor of the C64. The VIC 20 was a smashing success, eventually selling millions of units and establishing Commodore's reputation for making highly capable computers at prices that rivaled the era's videogame consoles. “Why buy a videogame when you can have a computer?,” asked Star Trek’s William Shatner in a famous series of print and television advertisements. 1/14 The legendary Commodore 64 Still, although the VIC 20 was a great value for the budget-conscious, its limitations were onerous for many enthusiasts. They wanted a more powerful machine and were willing to pay extra to get it. -

Applying Copyright Law to Videogames: Litigation Strategies for Lawyers

\\jciprod01\productn\H\HLS\10-2\HLS204.txt unknown Seq: 1 26-APR-19 15:23 Applying Copyright Law to Videogames: Litigation Strategies for Lawyers John Quagliariello I. Introduction Over the last 50 years, videogames have become a significant aspect of American history and culture. While primarily serving as a source of en- tertainment, videogames have also stemmed academic debates,1 technologi- cal developments,2 pop-culture references,3 and multi-million-dollar career paths.4 Videogames are arguably now just as American as baseball, hotdogs, apple pie, and Chevrolet.5 Because of this place in American culture, vide- ogames have historically intersected with another American “staple:” the American legal system. This intersection is especially present in the realm of 1 See, e.g., Lauren Goldbeck and Alex Pew, Violent Video Games and Aggression, National Center for Health Research, http://www.center4research.org/vio lent-video-games-can-increase-aggression/, [https://perma.cc/7NCQ-MZCZ ] (last visited Apr. 15, 2018) (on file with the Harvard Law School Library). 2 See, e.g., David M. Ewalt, Nintendo’s Wii Is a Revolution, Forbes, (Nov. 13, 2006), https://www.forbes.com/2006/11/13/wii-review-ps3-tech-media-cx_de_11 13wii.html#6cb7a5b275bb, [https://perma.cc/QJ5N-D6P8] (on file with the Harv- ard Law School Library). 3 See, e.g., Meet the Voice Behind ‘It’s-a Me, Mario!’, Great Big Story, https:// www.greatbigstory.com/stories/it-s-me-mario-meet-the-voice-behind-a-nintendo- legend, [https://perma.cc/MUA3-SK3A] (last visited Apr. 13, 2018). 4 See, e.g., Abayomi Jegede, Top 11 Richest Gamers in the World, Trendrr, Jan. -

You've Seen the Movie, Now Play The

“YOU’VE SEEN THE MOVIE, NOW PLAY THE VIDEO GAME”: RECODING THE CINEMATIC IN DIGITAL MEDIA AND VIRTUAL CULTURE Stefan Hall A Dissertation Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY May 2011 Committee: Ronald Shields, Advisor Margaret M. Yacobucci Graduate Faculty Representative Donald Callen Lisa Alexander © 2011 Stefan Hall All Rights Reserved iii ABSTRACT Ronald Shields, Advisor Although seen as an emergent area of study, the history of video games shows that the medium has had a longevity that speaks to its status as a major cultural force, not only within American society but also globally. Much of video game production has been influenced by cinema, and perhaps nowhere is this seen more directly than in the topic of games based on movies. Functioning as franchise expansion, spaces for play, and story development, film-to-game translations have been a significant component of video game titles since the early days of the medium. As the technological possibilities of hardware development continued in both the film and video game industries, issues of media convergence and divergence between film and video games have grown in importance. This dissertation looks at the ways that this connection was established and has changed by looking at the relationship between film and video games in terms of economics, aesthetics, and narrative. Beginning in the 1970s, or roughly at the time of the second generation of home gaming consoles, and continuing to the release of the most recent consoles in 2005, it traces major areas of intersection between films and video games by identifying key titles and companies to consider both how and why the prevalence of video games has happened and continues to grow in power.