Mixed Model OCR Training on Historical Latin Script for Out-Of-The-Box Recognition and Finetuning

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

ISO Basic Latin Alphabet

The ISO basic Latin alphabet is a Latin-script alphabet and consists of two sets of 26 letters, codified in[1] various national and international standards and used widely in international communication. The two sets contain the following 26 letters each:[1][2]

Uppercase Latin alphabet: A B C D E F G H I J K L M N O P Q R S T U V W X Y Z
Lowercase Latin alphabet: a b c d e f g h i j k l m n o p q r s t u v w x y z

History: By the 1960s it became apparent to the computer and telecommunications industries in the First World that a non-proprietary method of encoding characters was needed. The International Organization for Standardization (ISO) encapsulated the Latin script in their (ISO/IEC 646) 7-bit character-encoding standard. To achieve widespread acceptance, this encapsulation was based on popular usage. The standard was based on the already published American Standard Code for Information Interchange, better known as ASCII, which included in the character set the 26 × 2 letters of the English alphabet. Later standards issued by the ISO, for example ISO/IEC 8859 (8-bit character encoding) and ISO/IEC 10646 (Unicode Latin), have continued to define the 26 × 2 letters of the English alphabet as the basic Latin script with extensions to handle other letters in other languages.[1] Terminology: Name for Unicode block that contains all letters: The Unicode block that contains the alphabet is called "C0 Controls and Basic Latin". -
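The two sets of 26 letters described above can be checked with a short Python sketch. It is only an illustration: the helper names `in_basic_latin` and `basic_latin_letters` are hypothetical, and the range U+0000 to U+007F is assumed here as the extent of the Unicode block that the excerpt calls "C0 Controls and Basic Latin".

```python
import string
import unicodedata


def in_basic_latin(ch: str) -> bool:
    """Return True if ch lies in the assumed block range U+0000..U+007F."""
    return 0x0000 <= ord(ch) <= 0x007F


def basic_latin_letters() -> tuple[str, str]:
    """Return the uppercase and lowercase sets of the basic Latin alphabet."""
    return string.ascii_uppercase, string.ascii_lowercase


upper, lower = basic_latin_letters()

# Collect the Unicode character names of the 26 x 2 letters for inspection.
letter_names = {ch: unicodedata.name(ch) for ch in upper + lower}
```

Letters outside the two sets, such as 'æ', fall outside this range, which is why later standards treat them as extensions.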

Unicode Alphabets for LaTeX

Unicode Alphabets for LaTeX: Specimen. Mikkel Eide Eriksen, March 11, 2020. Contents: MUFI, SIL, TITUS, UNZ.

MUFI: Using the font PalemonasMUFI(0) from http://mufi.info/.

| MUFI Code Point | Glyph | Entity Name | Unicode Name |
|---|---|---|---|
| E262 | | OEligogon | LATIN CAPITAL LIGATURE OE WITH OGONEK |
| E268 | | Pdblac | LATIN CAPITAL LETTER P WITH DOUBLE ACUTE |
| E34E | | Vvertline | LATIN CAPITAL LETTER V WITH VERTICAL LINE ABOVE |
| E662 | | oeligogon | LATIN SMALL LIGATURE OE WITH OGONEK |
| E668 | | pdblac | LATIN SMALL LETTER P WITH DOUBLE ACUTE |
| E74F | | vvertline | LATIN SMALL LETTER V WITH VERTICAL LINE ABOVE |
| E8A1 | | idblstrok | LATIN SMALL LETTER I WITH TWO STROKES |
| E8A2 | | jdblstrok | LATIN SMALL LETTER J WITH TWO STROKES |
| E8A3 | | autem | LATIN ABBREVIATION SIGN AUTEM |
| E8BB | | vslashura | LATIN SMALL LETTER V WITH SHORT SLASH ABOVE RIGHT |
| E8BC | | vslashuradbl | LATIN SMALL LETTER V WITH TWO SHORT SLASHES ABOVE RIGHT |
| E8C1 | | thornrarmlig | LATIN SMALL LETTER THORN LIGATED WITH ARM OF LATIN SMALL LETTER R |
| E8C2 | | Hrarmlig | LATIN CAPITAL LETTER H LIGATED WITH ARM OF LATIN SMALL LETTER R |
| E8C3 | | hrarmlig | LATIN SMALL LETTER H LIGATED WITH ARM OF LATIN SMALL LETTER R |
| E8C5 | | krarmlig | LATIN SMALL LETTER K LIGATED WITH ARM OF LATIN SMALL LETTER R |
| E8C6 | UU | UUlig | LATIN CAPITAL LIGATURE UU |
| E8C7 | uu | uulig | LATIN SMALL LIGATURE UU |
| E8C8 | UE | UElig | LATIN CAPITAL LIGATURE UE |
| E8C9 | ue | uelig | LATIN SMALL LIGATURE UE |
| E8CE | | xslashlradbl | LATIN SMALL LETTER X WITH TWO SHORT SLASHES BELOW RIGHT |
| E8D1 | æ̊ | aeligring | LATIN SMALL LETTER AE WITH RING ABOVE |
| E8D3 | ǽ̨ | aeligogonacute | LATIN SMALL LETTER AE WITH OGONEK AND ACUTE |

Proposal for Generation Panel for Latin Script Label Generation Ruleset for the Root Zone

Proposal for Generation Panel for Latin Script Label Generation Ruleset for the Root Zone. Table of Contents: 1. General Information; 1.1 Use of Latin Script characters in domain names; 1.2 Target Script for the Proposed Generation Panel; 1.2.1 Diacritics; 1.3 Countries with significant user communities using Latin script; 2. Proposed Initial Composition of the Panel and Relationship with Past Work or Working Groups; 3. Work Plan; 3.1 Suggested Timeline with Significant Milestones; 3.2 Sources for funding travel and logistics; 3.3 Need for ICANN provided advisors; 4. References. 1. General Information: The Latin script[1] or Roman script is a major writing system of the world today, and the most widely used in terms of number of languages and number of speakers, with circa 70% of the world’s readers and writers making use of this script[2] (Wikipedia). Historically, it is derived from the Greek alphabet, as is the Cyrillic script. The Greek alphabet is in turn derived from the Phoenician alphabet which dates to the mid-11th century BC and is itself based on older scripts. This explains why Latin, Cyrillic and Greek share some letters, which may become relevant to the ruleset in the form of cross-script variants. The Latin alphabet itself originated in Italy in the 7th century BC. The original alphabet contained 21 upper case only letters: A, B, C, D, E, F, Z, H, I, K, L, M, N, O, P, Q, R, S, T, V and X. -

Processing South Asian Languages Written in the Latin Script: the Dakshina Dataset

Processing South Asian Languages Written in the Latin Script: the Dakshina Dataset. Brian Roark†, Lawrence Wolf-Sonkin†, Christo Kirov†, Sabrina J. Mielke‡, Cibu Johny†, Işın Demirşahin† and Keith Hall†. †Google Research, ‡Johns Hopkins University. {roark,wolfsonkin,ckirov,cibu,isin,kbhall}@google.com [email protected]

Abstract: This paper describes the Dakshina dataset, a new resource consisting of text in both the Latin and native scripts for 12 South Asian languages. The dataset includes, for each language: 1) native script Wikipedia text; 2) a romanization lexicon; and 3) full sentence parallel data in both a native script of the language and the basic Latin alphabet. We document the methods used for preparation and selection of the Wikipedia text in each language; collection of attested romanizations for sampled lexicons; and manual romanization of held-out sentences from the native script collections. We additionally provide baseline results on several tasks made possible by the dataset, including single word transliteration, full sentence transliteration, and language modeling of native script and romanized text.

Keywords: romanization, transliteration, South Asian languages

1. Introduction: Languages in South Asia – a region covering India, Pakistan, Bangladesh and neighboring countries – are generally written with Brahmic or Perso-Arabic scripts, but are also often written in the Latin script, most notably for informal communication such as within SMS messages, WhatsApp, … lingua franca, the prevalence of loanwords and code switching (largely but not exclusively with English) is high. Unlike translations, human generated parallel versions of text in the native scripts of these languages and romanized versions do not spontaneously occur in any meaningful volume. -

Chapter 5. Characters: Typology and Encoding

Chapter 5. Characters: typology and encoding. Version 2.0 (16 May 2008). 5.1 Introduction: The basic characters a-z / A-Z in the Latin alphabet can be encoded in virtually any electronic system and transferred from one system to another without loss of information. Any other characters may cause problems, even well established ones such as Modern Scandinavian ‘æ’, ‘ø’ and ‘å’. In v. 1 of The Menota handbook we therefore recommended that all characters outside a-z / A-Z should be encoded as entities, i.e. given an appropriate description and placed between the delimiters ‘&’ and ‘;’. In recent years, however, all major operating systems have implemented full Unicode support and a growing number of applications, including most web browsers, also support Unicode. We therefore believe that encoders should take full advantage of the Unicode Standard, as recommended in ch. 2.2.2 above. As of version 2.0, the character encoding recommended in The Menota handbook has been synchronised with the recommendations by the Medieval Unicode Font Initiative. The character recommendations by MUFI contain more than 1,300 characters in the Latin alphabet of potential use for the encoding of Medieval Nordic texts. As a consequence of the synchronisation, the list of entities which is part of the Menota scheme is identical to the one by MUFI. In other words, if a character is encoded with a code point or an entity in the MUFI character recommendation, it will be a valid character encoding also in a Menota text. -
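The entity convention described above (a description placed between '&' and ';') can be sketched in Python. This is a minimal illustration under stated assumptions, not the Menota or MUFI entity list: it borrows the HTML entity names from Python's standard `html.entities` table as stand-ins, falls back to a numeric reference when no name is known, and leaves all ASCII characters (not only a-z / A-Z) untouched. The function name `encode_entities` is hypothetical.

```python
from html.entities import codepoint2name


def encode_entities(text: str) -> str:
    """Replace every non-ASCII character with an '&name;' style entity.

    Assumption: HTML entity names stand in for the handbook's own names.
    Characters without a known name become hexadecimal numeric references.
    """
    pieces = []
    for ch in text:
        code = ord(ch)
        if code < 128:
            # ASCII passes through unchanged in this sketch.
            pieces.append(ch)
        else:
            name = codepoint2name.get(code)
            pieces.append(f"&{name};" if name else f"&#x{code:04X};")
    return "".join(pieces)
```

For example, `encode_entities("blår sjø")` yields `bl&aring;r sj&oslash;`, so the text survives transfer through a system that only handles the basic characters.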

Characters for Classical Latin

Characters for Classical Latin. David J. Perry, version 13, 2 July 2020. Introduction: The purpose of this document is to identify all characters of interest to those who work with Classical Latin, no matter how rare. Epigraphers will want many of these, but I want to collect any character that is needed in any context. Those that are already available in Unicode will be so identified; those that may be available can be debated; and those that are clearly absent and should be proposed can be proposed; and those that are so rare as to be unencodable will be known. If you have any suggestions for additional characters or reactions to the suggestions made here, please email me at [email protected] . No matter how rare, let’s get all possible characters on this list. Version 6 of this document has been updated to reflect the many characters of interest to Latinists encoded as of Unicode version 13.0. Characters are indicated by their Unicode value, a hexadecimal number, and their name printed IN SMALL CAPITALS. Unicode values may be preceded by U+ to set them off from surrounding text. Combining diacritics are printed over a dotted circle ◌ to show that they are intended to be used over a base character. For more basic information about Unicode, see the website of The Unicode Consortium, http://www.unicode.org/ or my book cited below. Please note that abbreviations constructed with lines above or through existing letters are not considered separate characters except in unusual circumstances, nor are the space-saving ligatures found in Latin inscriptions unless they have a unique grammatical or phonemic function (which they normally don’t). -

MUFI Character Recommendation V. 3.0: Code Chart Order

MUFI character recommendation: Characters in the official Unicode Standard and in the Private Use Area for Medieval texts written in the Latin alphabet. Part 2: Code chart order. Version 3.0 (5 July 2009). Compliant with the Unicode Standard version 5.1. Medieval Unicode Font Initiative (MUFI), www.mufi.info. ISBN 978-82-8088-403-9. Editor: Odd Einar Haugen, University of Bergen, Norway. Background: Version 1.0 of the MUFI recommendation was published electronically and in hard copy on 8 December 2003. It was the result of an almost two-year-long electronic discussion within the Medieval Unicode Font Initiative (http://www.mufi.info), which was established in July 2001 at the International Medieval Congress in Leeds. Version 1.0 contained a total of 828 characters, of which 473 characters were selected from various charts in the official part of the Unicode Standard and 355 were located in the Private Use Area. Version 1.0 of the recommendation is compliant with the Unicode Standard version 4.0. Version 2.0 is a major update, published electronically on 22 December 2006. It contains a few corrections of misprints in version 1.0 and 516 additional characters (of which 123 are from charts in the official part of the Unicode Standard and 393 are additions to the Private Use Area). There are also 18 characters which have been decommissioned from the Private Use Area due to the fact that they have been included in later versions of the Unicode Standard (and, in one case, because a character has been withdrawn). -

Greek Type Design Introduction

A primer on Greek type design, by Gerry Leonidas. At the 1997 ATypI Conference at Reading I gave a talk with the title ‘Typography & the Greek language: designing typefaces in a cultural context.’ The inspiration for that talk was a discussion with Christopher Burke on designing typefaces for a script one is not linguistically familiar with. My position was that knowledge and use of a language is not a prerequisite for understanding the script to a very high, if not conclusive, degree. In other words, although a ‘typographically attuned’ native user should test a design in real circumstances, any designer could, with the right preparation and monitoring, produce competent typefaces. This position was based on my understanding of the decisions a designer must make in designing a Greek typeface. I should add that this argument had two weak points: one, it was based on a small amount of personal experience in type design and a lot of intuition, rather than research; and, two, it was quite possible that, as a Greek, I was making the ‘right’ choices by default. Since 1997, my own work proved me right on the first point, and that of other designers – both Greeks and non-Greeks – on the second. The last few years saw multilingual typography literally explode. An obvious arena was the broader European region: the Amsterdam Treaty of 1997 which, at the same time as bringing the European Union closer to integration on a number of fields, marked a heightening of awareness in cultural characteristics, down to an explicit statement of support for dialects and local script variations. -

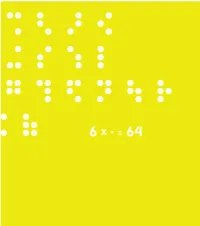

Nemeth Code Uses Some Parts of Textbook Format but Has Some Idiosyncrasies of Its Own

This book is a compilation of research in “Understanding and Tracing the Problem faced by the Visually Impaired while doing Mathematics”, a Diploma project by Aarti Vashisht at the Srishti School of Art, Design and Technology, Bangalore. 6 DOTS, 64 COMBINATIONS: A Braille character is formed out of a combination of six dots. Including the blank space, sixty-four combinations are possible using one or more of these six dots. CONTENTS: Introduction; About Braille; Mathematics for the Visually Impaired; Learning Mathematics; Conclusion; People and Places; Acknowledgements. INTRODUCTION: This project tries to understand the nature of the problems that are faced by the visually impaired within the realm of mathematics. It is a summary of my understanding of the problems in this field that may be taken forward to guide those individuals who are concerned about this subject. My education in design has encouraged interest in this field. As a designer I have learnt to be aware of my community and its needs, to detect areas where design can reach out and assist, if not resolve, a problem. Thus began my search, where I sought to grasp a fuller understanding of the situation by looking at the various mediums that would help better communication. During the project I realized that more often than not work happened in individual pockets which in turn would lead to regionalization of many ideas and opportunities. Data collection got repetitive, which would delay or sometimes even hinder the process. -
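The figure of sixty-four combinations quoted above follows from each of the six dots being either raised or flat, giving 2^6 = 64 cells including the blank one. A small Python sketch can enumerate them; the function name `braille_cells` is hypothetical, and the mapping onto the Unicode Braille Patterns block (U+2800 upward, one bit per dot) is an addition for illustration that the excerpt itself does not mention.

```python
from itertools import product


def braille_cells() -> list[str]:
    """Enumerate every six-dot Braille cell as a Unicode character.

    Each dot is either flat (0) or raised (1). Dot i (counting from 0)
    contributes bit i to an offset from U+2800, the blank Braille pattern.
    """
    cells = []
    for dots in product((0, 1), repeat=6):
        mask = sum(bit << i for i, bit in enumerate(dots))
        cells.append(chr(0x2800 + mask))
    return cells
```

Calling `len(braille_cells())` gives 64, and the list contains both the blank cell and the cell with all six dots raised.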

Encoding Diversity for All the World's Languages

Encoding Diversity for All the World’s Languages. The Script Encoding Initiative (Universal Scripts Project). Michael Everson, Evertype, Westport, Co. Mayo, Ireland. Bamako, Mali • 6 May 2005

1. Current State of the Unicode Standard
• Unicode 4.1 defines over 97,000 characters
• Unicode covers over 50 scripts (often used for languages with over 5 million speakers)
• Unicode enables millions of users worldwide to view web pages, send e-mails, converse in chat-rooms, and share text documents in their native script
• Unicode is widely supported by current fonts and operating systems, but… Over 80 scripts are missing!

New Script Additions
Unicode 4.1 (31 March 2005): Buginese, Coptic, Glagolitic, New Tai Lue, Nuskhuri (extends Georgian), Syloti Nagri, Tifinagh, Kharoshthi, Old Persian Cuneiform
For Unicode 5.0 (2006): N’Ko, Balinese, Phags-pa, Phoenician, Cuneiform

Missing Modern Minority Scripts: India, Nepal, Southeast Asia China: -

Ancient and Other Scripts

The Unicode® Standard Version 13.0 – Core Specification. To learn about the latest version of the Unicode Standard, see http://www.unicode.org/versions/latest/. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals. Unicode and the Unicode Logo are registered trademarks of Unicode, Inc., in the United States and other countries. The authors and publisher have taken care in the preparation of this specification, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. The Unicode Character Database and other files are provided as-is by Unicode, Inc. No claims are made as to fitness for any particular purpose. No warranties of any kind are expressed or implied. The recipient agrees to determine applicability of information provided. © 2020 Unicode, Inc. All rights reserved. This publication is protected by copyright, and permission must be obtained from the publisher prior to any prohibited reproduction. For information regarding permissions, inquire at http://www.unicode.org/reporting.html. For information about the Unicode terms of use, please see http://www.unicode.org/copyright.html. The Unicode Standard / the Unicode Consortium; edited by the Unicode Consortium. Version 13.0. Includes index. ISBN 978-1-936213-26-9 (http://www.unicode.org/versions/Unicode13.0.0/) 1. -

Ronald D. Rotunda, the Notice of Withdrawal and the New Model

RONALD D. ROTUNDA* The Notice of Withdrawal and the New Model Rules of Professional Conduct: Blowing the Whistle and Waving the Red Flag

I

A problem that has been a leitmotif in the literature and case law of legal ethics concerns the ethical responsibility of the lawyer who learns that a client has committed or plans to commit perjury, or through a lie or misrepresentation commits some other fraud on a tribunal or on another party. Although this problem has been much debated by courts[1] and commentators,[2] and is the sub-

* Professor of Law, University of Illinois. The author gratefully acknowledges the support provided to him by the 1984 David C. Baum Memorial Research Award of the University of Illinois College of Law and a grant from the National Institute of Dispute Resolution in Washington, D.C. The author also wishes to thank Professors Bryant Garth, Sanford Levinson, Patricia Marschall, Deborah Rhode, Eugene Smith, and John Sutton, who read the manuscript and offered helpful suggestions, and Ms. Marie T. Reilly, for her helpful research and suggestions. I am pleased to contribute this article to the issue dedicated to my good friend and colleague, Eugene Scoles.

[1] See People v. Schultheis, 638 P.2d 8 (Colo. 1981) (witness perjury); In re Friedman, 76 Ill. 2d 392, 392 N.E.2d 1333 (1979) (use of false testimony to trap bribers); Comm. on Prof. Ethics and Conduct of the Iowa State Bar Ass’n v. Crary, 245 N.W.2d 298 (Iowa 1976) (client perjury during deposition); Louisiana State Bar Ass’n v.