Processing South Asian Languages Written in the Latin Script: the Dakshina Dataset

Recommended publications

Cross-Language Framework for Word Recognition and Spotting of Indic Scripts

Ayan Kumar Bhunia (a), Partha Pratim Roy (b)*, Akash Mohta (a), Umapada Pal (c). (a) Dept. of ECE, Institute of Engineering & Management, Kolkata, India; (b) Dept. of CSE, Indian Institute of Technology Roorkee, India; (c) CVPR Unit, Indian Statistical Institute, Kolkata, India. (b) email: [email protected], TEL: +91-1332-284816

Abstract: Handwritten word recognition and spotting for low-resource scripts are difficult because sufficient training data is not available and collecting data for such scripts is often expensive. This paper presents a novel cross-language platform for handwritten word recognition and spotting for such low-resource scripts, where training is performed with a sufficiently large dataset of an available script (the source script) and testing is done on other scripts (the target scripts). Training on one source script and testing on another with reasonable results is not easy in the handwriting domain because of the complex variability of handwriting among scripts. It is also difficult to map between source and target characters when they appear in cursive word images. The proposed Indic cross-language framework exploits a large dataset for training and uses it for recognizing and spotting text in other target scripts for which a sufficient amount of training data is not available. Since Indic scripts are mostly written in three zones (upper, middle and lower), we employ zone-wise character (or component) mapping for efficient learning. The performance of our cross-language framework depends on the extent of similarity between the source and target scripts.

SC22/WG20 N896 L2/01-476 Universal Multiple-Octet Coded Character Set International Organization for Standardization Organisation Internationale De Normalisation

SC22/WG20 N896, L2/01-476. Universal multiple-octet coded character set. International Organization for Standardization / Organisation internationale de normalisation.

Title: Ordering rules for Khmer
Source: Kent Karlsson
Date: 2001-12-19
Status: Expert contribution
Document type: Working group document
Action: For consideration by the UTC and JTC 1/SC 22/WG 20

1 Introduction

The Khmer script in Unicode/10646 uses conjoining characters, just like the Indic scripts and Hangul. An alternative that is suitable (and much more elegant) for Khmer, but not for Indic scripts, would have been to have combining-below (and sometimes a bit to the side) consonants and combining-below independent vowels, similar to the combining-above Latin letters recently encoded, as well as how Tibetan is handled. However, that is not the chosen solution, which instead uses a combining character (COENG) that makes characters conjoin (glue) as is done for Indic (Brahmic) scripts and for Hangul Jamo, though the latter does not have a separate gluer character. In the Khmer script, using the COENG-based approach, words are formed from orthographic syllables, where an orthographic syllable has the following structure [add ligation control?]:

Khmer-syllable ::= (K H)* K M*

where K is a Khmer consonant (most with an inherent vowel that is pronounced only if there is no consonant, independent vowel, or dependent vowel following it in the orthographic syllable) or a Khmer independent vowel, H is the invisible Khmer conjoint former COENG, M is a combining character (including COENG, though that would be a misspelling), in particular a combining Khmer vowel (noted A below) or modifier sign.
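The syllable structure `(K H)* K M*` quoted above can be sketched as a regular expression. This is only an illustration and not part of the cited working-group document: the code point ranges chosen for K and M are assumptions based on the layout of the Unicode Khmer block, and `split_syllables` is a hypothetical helper name.

```python
import re

# Assumed character classes (not taken from the cited document):
K = "[\u1780-\u17B3]"        # Khmer consonants and independent vowels
H = "\u17D2"                 # KHMER SIGN COENG, the conjoint former
M = "[\u17B6-\u17D3\u17DD]"  # dependent vowels and signs (includes COENG)

# Khmer-syllable ::= (K H)* K M*
SYLLABLE = re.compile(f"(?:{K}{H})*{K}{M}*")

def split_syllables(text):
    """Return the orthographic syllables found in text, in order."""
    return SYLLABLE.findall(text)

# The word "Khmer": KHA, COENG, MO, SIGN AE, RO
word = "\u1781\u17D2\u1798\u17C2\u179A"
syllables = split_syllables(word)
```

Because the `(K H)*` prefix is tried greedily first, a COENG that follows a consonant is read as a conjoint former whenever another K follows it, which matches the intent of the grammar.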

ISO Basic Latin Alphabet

The ISO basic Latin alphabet is a Latin-script alphabet that consists of two sets of 26 letters, codified in various national and international standards[1] and used widely in international communication. The two sets contain the following 26 letters each:[1][2]

Uppercase Latin alphabet: A B C D E F G H I J K L M N O P Q R S T U V W X Y Z
Lowercase Latin alphabet: a b c d e f g h i j k l m n o p q r s t u v w x y z

History

By the 1960s it became apparent to the computer and telecommunications industries in the First World that a non-proprietary method of encoding characters was needed. The International Organization for Standardization (ISO) encapsulated the Latin script in their (ISO/IEC 646) 7-bit character-encoding standard. To achieve widespread acceptance, this encapsulation was based on popular usage. The standard was based on the already published American Standard Code for Information Interchange, better known as ASCII, which included in the character set the 26 × 2 letters of the English alphabet. Later standards issued by the ISO, for example ISO/IEC 8859 (8-bit character encoding) and ISO/IEC 10646 (Unicode Latin), have continued to define the 26 × 2 letters of the English alphabet as the basic Latin script with extensions to handle other letters in other languages.[1]

Terminology

Name for Unicode block that contains all letters: The Unicode block that contains the alphabet is called "C0 Controls and Basic Latin".

Finite-State Script Normalization and Processing Utilities: the Nisaba Brahmic Library

Cibu Johny†, Lawrence Wolf-Sonkin‡, Alexander Gutkin†, Brian Roark‡. Google Research, †United Kingdom and ‡United States. {cibu,wolfsonkin,agutkin,roark}@google.com

Abstract: This paper presents an open-source library for efficient low-level processing of ten major South Asian Brahmic scripts. The library provides a flexible and extensible framework for supporting crucial operations on Brahmic scripts, such as NFC, visual normalization, reversible transliteration, and validity checks, implemented in Python within a finite-state transducer formalism. We survey some common Brahmic script issues that may adversely affect the performance of downstream NLP tasks, and provide the rationale for finite-state design and system implementation details.

1 Introduction: The Unicode Standard separates the representation of text from its specific graphical rendering: text is encoded as a sequence of characters, which, at presentation time are then collectively rendered into the appropriate sequence of glyphs for display.

In addition to such normalization issues, some scripts also have well-formedness constraints, i.e., not all strings of Unicode characters from a single script correspond to a valid (i.e., legible) grapheme sequence in the script. Such constraints do not apply in the basic Latin alphabet, where any permutation of letters can be rendered as a valid string (e.g., for use as an acronym). The Brahmic family of scripts, however, including the Devanagari script used to write Hindi, Marathi and many other South Asian languages, do have such constraints. These scripts are alphasyllabaries, meaning that they are structured around orthographic syllables (akṣara) as the basic unit. One or more Unicode characters combine when rendering one of thousands of legible akṣara, but many combinations do not correspond to any akṣara. Given a token in these scripts, one may want to (a) normalize it to a canonical form; and (b) check whether it is a well-formed
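The NFC normalization named in the abstract can be illustrated with Python's standard `unicodedata` module. This is a sketch of the general Unicode operation only; it does not use or reproduce the Nisaba library's API, and `to_nfc` is a hypothetical helper name. The two Devanagari cases show why normalization matters: one sequence composes into a single code point, while a precomposed letter on Unicode's composition-exclusion list is split apart.

```python
import unicodedata

def to_nfc(text: str) -> str:
    """Return text in Unicode Normalization Form C."""
    return unicodedata.normalize("NFC", text)

# NA (U+0928) + NUKTA (U+093C) composes to the single letter NNNA (U+0929).
composed = to_nfc("\u0928\u093C")

# QA (U+0958) is composition-excluded, so NFC yields KA (U+0915) + NUKTA (U+093C).
decomposed = to_nfc("\u0958")

# Two visually identical strings compare equal only after normalization.
same = to_nfc("\u0929") == to_nfc("\u0928\u093C")
```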

Proposal for Generation Panel for Latin Script Label Generation Ruleset for the Root Zone

Proposal for Generation Panel for Latin Script Label Generation Ruleset for the Root Zone

Table of Contents
1. General Information
1.1 Use of Latin Script characters in domain names
1.2 Target Script for the Proposed Generation Panel
1.2.1 Diacritics
1.3 Countries with significant user communities using Latin script
2. Proposed Initial Composition of the Panel and Relationship with Past Work or Working Groups
3. Work Plan
3.1 Suggested Timeline with Significant Milestones
3.2 Sources for funding travel and logistics
3.3 Need for ICANN provided advisors
4. References

1. General Information

The Latin script or Roman script is a major writing system of the world today, and the most widely used in terms of number of languages and number of speakers, with circa 70% of the world’s readers and writers making use of this script (Wikipedia). Historically, it is derived from the Greek alphabet, as is the Cyrillic script. The Greek alphabet is in turn derived from the Phoenician alphabet which dates to the mid-11th century BC and is itself based on older scripts. This explains why Latin, Cyrillic and Greek share some letters, which may become relevant to the ruleset in the form of cross-script variants. The Latin alphabet itself originated in Italy in the 7th century BC. The original alphabet contained 21 letters, upper case only: A, B, C, D, E, F, Z, H, I, K, L, M, N, O, P, Q, R, S, T, V and X.

Characters for Classical Latin

David J. Perry, version 13, 2 July 2020

Introduction

The purpose of this document is to identify all characters of interest to those who work with Classical Latin, no matter how rare. Epigraphers will want many of these, but I want to collect any character that is needed in any context. Those that are already available in Unicode will be so identified; those that may be available can be debated; and those that are clearly absent and should be proposed can be proposed; and those that are so rare as to be unencodable will be known. If you have any suggestions for additional characters or reactions to the suggestions made here, please email me at [email protected] . No matter how rare, let’s get all possible characters on this list. Version 6 of this document has been updated to reflect the many characters of interest to Latinists encoded as of Unicode version 13.0.

Characters are indicated by their Unicode value, a hexadecimal number, and their name printed IN SMALL CAPITALS. Unicode values may be preceded by U+ to set them off from surrounding text. Combining diacritics are printed over a dotted circle ◌ to show that they are intended to be used over a base character. For more basic information about Unicode, see the website of The Unicode Consortium, http://www.unicode.org/ or my book cited below. Please note that abbreviations constructed with lines above or through existing letters are not considered separate characters except in unusual circumstances, nor are the space-saving ligatures found in Latin inscriptions unless they have a unique grammatical or phonemic function (which they normally don’t).

Designing Soft Keyboards for Brahmic Scripts

Lauren Hinkle (Colorado College, Colorado Springs, CO, USA), Miguel Lezcano (University of Colorado, Colorado Springs, CO, USA), Jugal Kalita (University of Colorado, Colorado Springs, CO, USA). lauren.hinkle @coloradocollege.edu, [email protected], [email protected]

Abstract: Despite being spoken by a large percentage of the world, many Indic languages have escaped research and development in the realm of text-input. There exist languages with poor or no support for typing. Soft keyboards, because of their ease of installation and lack of reliance on specific hardware, are a promising solution as an input device for many languages. Developing an acceptable soft keyboard requires frequency analysis of characters in order to design a layout that minimizes text-input time. This paper proposes using various development techniques, layout variations, and evaluation methods

Soft keyboards allow a user to input text with and without the use of a physical keyboard. They are versatile because they allow data to be input through mouse clicks on an on-screen keyboard, through a touch screen on a computer, cell phone, or PDA, or by mapping a virtual keyboard to a standard physical keyboard. With the recent surge in popularity of touchscreen media such as cell phones and computers, well-designed soft keyboards are becoming more important everywhere. For languages that don’t conform well to standard QWERTY based keyboards that accompany most computers, soft keyboards are especially important. Brahmic scripts (Coulmas 1990) have more characters and ligatures than fit usably on a standard keyboard.

Greek Type Design Introduction

A primer on Greek type design by Gerry Leonidas

At the 1997 ATypI Conference at Reading I gave a talk with the title ‘Typography & the Greek language: designing typefaces in a cultural context.’ The inspiration for that talk was a discussion with Christopher Burke on designing typefaces for a script one is not linguistically familiar with. My position was that knowledge and use of a language is not a prerequisite for understanding the script to a very high, if not conclusive, degree. In other words, although a ‘typographically attuned’ native user should test a design in real circumstances, any designer could, with the right preparation and monitoring, produce competent typefaces. This position was based on my understanding of the decisions a designer must make in designing a Greek typeface. I should add that this argument had two weak points: one, it was based on a small amount of personal experience in type design and a lot of intuition, rather than research; and, two, it was quite possible that, as a Greek, I was making the ‘right’ choices by default. Since 1997, my own work proved me right on the first point, and that of other designers – both Greeks and non-Greeks – on the second.

The last few years saw multilingual typography literally explode. An obvious arena was the broader European region: the Amsterdam Treaty of 1997 which, at the same time as bringing the European Union closer to integration on a number of fields, marked a heightening of awareness in cultural characteristics, down to an explicit statement of support for dialects and local script variations.

Nemeth Code Uses Some Parts of Textbook Format but Has Some Idiosyncrasies of Its Own

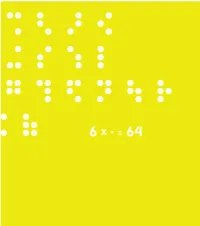

This book is a compilation of research in “Understanding and Tracing the Problem faced by the Visually Impaired while doing Mathematics”, a Diploma project by Aarti Vashisht at the Srishti School of Art, Design and Technology, Bangalore.

6 DOTS 64 COMBINATIONS

A Braille character is formed out of a combination of six dots. Including the blank space, sixty-four combinations are possible using one or more of these six dots.

CONTENTS
Introduction
About Braille
Mathematics for the Visually Impaired
Learning Mathematics
Conclusion
People and Places
Acknowledgements

INTRODUCTION

This project tries to understand the nature of the problems that are faced by the visually impaired within the realm of mathematics. It is a summary of my understanding of the problems in this field that may be taken forward to guide those individuals who are concerned about this subject. My education in design has encouraged interest in this field. As a designer I have learnt to be aware of my community and its needs, to detect areas where design can reach out and assist, if not resolve, a problem. Thus began my search, where I sought to grasp a fuller understanding of the situation by looking at the various mediums that would help better communication. During the project I realized that, more often than not, work happened in individual pockets, which in turn would lead to regionalization of many ideas and opportunities. Data collection got repetitive, which would delay or sometimes even hinder the process.
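The count of sixty-four follows from each of the six dots being either raised or flat, giving 2^6 = 64 cells including the blank one. A minimal sketch that enumerates them is shown below. It relies on the Unicode Braille Patterns block, where the six-dot cells occupy U+2800 to U+283F and dot n corresponds to bit n-1; the function names `cell` and `all_cells` are hypothetical and not taken from the book.

```python
from itertools import combinations

BRAILLE_BASE = 0x2800  # BRAILLE PATTERN BLANK

def cell(dots):
    """Return the Braille character with the given raised dots (numbered 1-6)."""
    mask = 0
    for d in dots:
        if not 1 <= d <= 6:
            raise ValueError(f"dot number out of range: {d}")
        mask |= 1 << (d - 1)  # dot n sets bit n-1
    return chr(BRAILLE_BASE + mask)

def all_cells():
    """Enumerate every subset of the six dots, including the empty (blank) cell."""
    return [cell(c) for r in range(7) for c in combinations(range(1, 7), r)]

cells = all_cells()
total = len(cells)  # 2**6 combinations
```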

Encoding Diversity for All the World's Languages

The Script Encoding Initiative (Universal Scripts Project). Michael Everson, Evertype, Westport, Co. Mayo, Ireland. Bamako, Mali • 6 May 2005

1. Current State of the Unicode Standard: New Script Additions
Unicode 4.1 (31 March 2005): Buginese, Coptic, Glagolitic, New Tai Lue, Nuskhuri (extends Georgian), Syloti Nagri, Tifinagh, Kharoshthi, Old Persian Cuneiform
For Unicode 5.0 (2006): N’Ko, Balinese, Phags-pa, Phoenician, Cuneiform

1. Current State of the Unicode Standard
• Unicode 4.1 defines over 97,000 characters
• Unicode covers over 50 scripts (often used for languages with over 5 million speakers)
• Unicode enables millions of users worldwide to view web pages, send e-mails, converse in chat-rooms, and share text documents in their native script
• Unicode is widely supported by current fonts and operating systems, but…

Over 80 scripts are missing!

Missing Modern Minority Scripts: India, Nepal, Southeast Asia; China:

Simplified Abugidas

Chenchen Ding, Masao Utiyama, and Eiichiro Sumita. Advanced Translation Technology Laboratory, Advanced Speech Translation Research and Development Promotion Center, National Institute of Information and Communications Technology, 3-5 Hikaridai, Seika-cho, Soraku-gun, Kyoto, 619-0289, Japan. fchenchen.ding, mutiyama, [email protected]

Abstract: An abugida is a writing system where the consonant letters represent syllables with a default vowel and other vowels are denoted by diacritics. We investigate the feasibility of recovering the original text written in an abugida after omitting subordinate diacritics and merging consonant letters with similar phonetic values. This is crucial for developing more efficient input methods by reducing the complexity in abugidas. Four abugidas in the southern Brahmic family, i.e., Thai, Burmese, Khmer, and Lao, were studied using a newswire 20,000-sentence dataset. We compared the recovery performance of a support vector machine and an LSTM-based recurrent neural network, finding that the abugida graphemes could be re-

[Figure 1: Hierarchy of writing systems.]

[Figure 2: Overview of the approach in this study. Panels: (a) abugida simplification (vowel diacritic omission, consonant character merging); (b) recovery (machine learning methods).]

ters equally. In contrast, abjads (e.g., the Arabic and Hebrew scripts) do not write most vowels explicitly. The third type, abugidas, also called alphasyllabary, includes features from both segmental and syllabic systems. In abugidas, consonant letters represent syllables with a default vowel, and other vowels are denoted by diacritics.

Ancient and Other Scripts

The Unicode® Standard Version 13.0 – Core Specification

To learn about the latest version of the Unicode Standard, see http://www.unicode.org/versions/latest/.

Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals. Unicode and the Unicode Logo are registered trademarks of Unicode, Inc., in the United States and other countries.

The authors and publisher have taken care in the preparation of this specification, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. The Unicode Character Database and other files are provided as-is by Unicode, Inc. No claims are made as to fitness for any particular purpose. No warranties of any kind are expressed or implied. The recipient agrees to determine applicability of information provided.

© 2020 Unicode, Inc. All rights reserved. This publication is protected by copyright, and permission must be obtained from the publisher prior to any prohibited reproduction. For information regarding permissions, inquire at http://www.unicode.org/reporting.html. For information about the Unicode terms of use, please see http://www.unicode.org/copyright.html.

The Unicode Standard / the Unicode Consortium; edited by the Unicode Consortium. Version 13.0. Includes index. ISBN 978-1-936213-26-9 (http://www.unicode.org/versions/Unicode13.0.0/) 1.