Raspberry Pi Assembler

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


AMD Athlon™ Processor x86 Code Optimization Guide

AMD Athlon™ Processor x86 Code Optimization Guide © 2000 Advanced Micro Devices, Inc. All rights reserved. The contents of this document are provided in connection with Advanced Micro Devices, Inc. (“AMD”) products. AMD makes no representations or warranties with respect to the accuracy or completeness of the contents of this publication and reserves the right to make changes to specifications and product descriptions at any time without notice. No license, whether express, implied, arising by estoppel or otherwise, to any intellectual property rights is granted by this publication. Except as set forth in AMD’s Standard Terms and Conditions of Sale, AMD assumes no liability whatsoever, and disclaims any express or implied warranty, relating to its products including, but not limited to, the implied warranty of merchantability, fitness for a particular purpose, or infringement of any intellectual property right. AMD’s products are not designed, intended, authorized or warranted for use as components in systems intended for surgical implant into the body, or in other applications intended to support or sustain life, or in any other application in which the failure of AMD’s product could create a situation where personal injury, death, or severe property or environmental damage may occur. AMD reserves the right to discontinue or make changes to its products at any time without notice.

Trademarks: AMD, the AMD logo, AMD Athlon, K6, 3DNow!, and combinations thereof, AMD-751, K86, and Super7 are trademarks, and AMD-K6 is a registered trademark of Advanced Micro Devices, Inc. Microsoft, Windows, and Windows NT are registered trademarks of Microsoft Corporation.

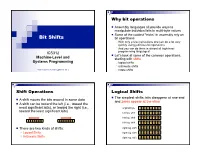

Bit Shifts Bit Operations, Logical Shifts, Arithmetic Shifts, Rotate Shifts

Bit Shifts. ICS312 Machine-Level and Systems Programming. Henri Casanova ([email protected])

Why bit operations
• Assembly languages all provide ways to manipulate individual bits in multi-byte values
• Some of the coolest “tricks” in assembly rely on bit operations
• With only a few instructions one can do a lot very quickly using judicious bit operations
• And you can do them in almost all high-level programming languages!
• Let’s look at some of the common operations, starting with shifts: logical shifts, arithmetic shifts, rotate shifts

Shift Operations
• A shift moves the bits around in some data
• A shift can be toward the left (i.e., toward the most significant bits), or toward the right (i.e., toward the least significant bits)
• There are two kinds of shifts: Logical Shifts and Arithmetic Shifts

Logical Shifts
• The simplest shifts: bits disappear at one end and zeros appear at the other
• original byte: 1 0 1 1 0 1 0 1
• left log. shift: 0 1 1 0 1 0 1 0
• left log. shift: 1 1 0 1 0 1 0 0
• left log. shift: 1 0 1 0 1 0 0 0
• right log. shift: 0 1 0 1 0 1 0 0
• right log. shift: 0 0 1 0 1 0 1 0
• right log. shift: 0 0 0 1 0 1 0 1

Logical Shift Instructions
• Two instructions: shl and shr
• One specifies by how many bits the data is shifted
• Either by just passing a constant to the instruction
• Or by using whatever …

Shifts and Numbers
• The common use for shifts: quickly multiply and divide by powers of 2
• In decimal, for instance: multiplying 0013 by 10 amounts to doing one left shift to obtain 0130
• Multiplying by $100 = 10^2$ amounts to doing two left shifts to obtain 1300
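The logical shift table in this excerpt can be reproduced with a short sketch. Python is used here purely for illustration; the helper names `shl8` and `shr8` are hypothetical and only mimic what the excerpt's `shl` and `shr` instructions would do to a single byte, under the assumption that the value is 8 bits wide.

```python
def shl8(value: int, count: int = 1) -> int:
    """Logical left shift of an 8-bit value: high bits fall off, zeros enter on the right."""
    return (value << count) & 0xFF


def shr8(value: int, count: int = 1) -> int:
    """Logical right shift of an 8-bit value: low bits fall off, zeros enter on the left."""
    return (value & 0xFF) >> count


byte = 0b10110101  # the "original byte" from the excerpt
steps = []
for _ in range(3):  # three left logical shifts
    byte = shl8(byte)
    steps.append(format(byte, "08b"))
for _ in range(3):  # followed by three right logical shifts
    byte = shr8(byte)
    steps.append(format(byte, "08b"))
print(steps)

# Binary analogue of the decimal example: shifting left by k multiplies by 2**k
# (while the result still fits), and shifting right by k divides by 2**k.
print(13 << 2, 52 >> 2)
```

The mask with `0xFF` is what makes bits "disappear" at the left end, since Python integers are otherwise unbounded.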

Lecture Notes in Assembly Language

Lecture Notes in Assembly Language. Short introduction to low-level programming. Piotr Fulmański. Łódź, June 12, 2015.

Contents
1 Before we begin
1.1 Simple assembler
1.1.1 Exercise 1
1.1.2 Exercise 2
1.1.3 Exercise 3
1.1.4 Exercise 4
1.1.5 Exercise 5
1.2 Improvements, part I: addressing
1.2.1 Exercise 6
1.3 Improvements, part II: indirect addressing
1.4 Improvements, part III: labels
1.4.1 Exercise 7: find substring in a string
1.4.2 Exercise 8: improved polynomial
1.5 Improvements, part IV: flag register
1.6 Improvements, part V: the stack
1.6.1 Exercise 12
1.7 Improvements, part VI: function stack frame
1.8 Final exercises
1.8.1 Exercise 13
1.8.2 Exercise 14
1.8.3 Exercise 15
1.8.4 Exercise 16
1.8.5 Exercise 17
2 First program
2.1 Compiling,

Targeting Embedded PowerPC

Freescale Semiconductor, Inc. EPPC.book Page 1 Monday, March 28, 2005 9:22 AM CodeWarrior™ Development Studio PowerPC™ ISA Communications Processors Edition Targeting Manual. Revised: 28 March 2005. For More Information: www.freescale.com

Metrowerks, the Metrowerks logo, and CodeWarrior are trademarks or registered trademarks of Metrowerks Corporation in the United States and/or other countries. All other trade names and trademarks are the property of their respective owners. Copyright © 2005 by Metrowerks, a Freescale Semiconductor company. All rights reserved. No portion of this document may be reproduced or transmitted in any form or by any means, electronic or mechanical, without prior written permission from Metrowerks. Use of this document and related materials are governed by the license agreement that accompanied the product to which this manual pertains. This document may be printed for non-commercial personal use only in accordance with the aforementioned license agreement. If you do not have a copy of the license agreement, contact your Metrowerks representative or call 1-800-377-5416 (if outside the U.S., call +1-512-996-5300). Metrowerks reserves the right to make changes to any product described or referred to in this document without further notice. Metrowerks makes no warranty, representation or guarantee regarding the merchantability or fitness of its products for any particular purpose, nor does Metrowerks assume any liability arising

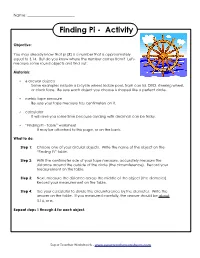

Finding Pi Project

Name: ________________________

Finding Pi - Activity

Objective: You may already know that pi (π) is a number that is approximately equal to 3.14. But do you know where the number comes from? Let's measure some round objects and find out.

Materials:
• 6 circular objects. Some examples include a bicycle wheel, kiddie pool, trash can lid, DVD, steering wheel, or clock face. Be sure each object you choose is shaped like a perfect circle.
• metric tape measure. Be sure your tape measure has centimeters on it.
• calculator. It will save you some time because dividing with decimals can be tricky.
• “Finding Pi - Table” worksheet. It may be attached to this page, or on the back.

What to do:
Step 1: Choose one of your circular objects. Write the name of the object on the “Finding Pi” table.
Step 2: With the centimeter side of your tape measure, accurately measure the distance around the outside of the circle (the circumference). Record your measurement on the table.
Step 3: Next, measure the distance across the middle of the object (the diameter). Record your measurement on the table.
Step 4: Use your calculator to divide the circumference by the diameter. Write the answer on the table. If you measured carefully, the answer should be about 3.14, or π.
Repeat steps 1 through 4 for each object.

Super Teacher Worksheets - www.superteacherworksheets.com

“Finding Pi” Table

Measure circular objects and complete the table below. If your measurements are accurate, you should be able to calculate the number pi (3.14).

| name of circular object | circumference measurement (cm) | diameter measurement (cm) | circumference ÷ diameter | Is your answer approximately equal to π? |
|---|---|---|---|---|
| 1. | | | | |

Linux Boot Loaders Compared

Linux Boot Loaders Compared. L.C. Benschop, May 29, 2003. Copyright © 2002, 2003, L.C. Benschop, Eindhoven, The Netherlands. Permission is granted to make verbatim copies of this document. This is version 1.1 which has some minor corrections.

Contents
1 introduction
2 How Boot Loaders Work
2.1 What BIOS does for us
2.2 Parts of a boot loader
2.2.1 boot sector program
2.2.2 second stage of boot loader
2.2.3 Boot loader installer
2.3 Loading the operating system
2.3.1 Loading the Linux kernel
2.3.2 Chain loading
2.4 Configuring the boot loader
3 Example Installations
3.1 Example root file system and kernel
3.2 Linux Boot Sector
3.3 LILO
3.4 GNU GRUB
3.5 SYSLINUX
3.6 LOADLIN
3.7 Where Can Boot Loaders Live
4 RAM Disks
4.1 Living without a RAM disk
4.2 RAM disk devices
4.3 Loading a RAM disk at boot time
4.4 The initial RAM disk
5 Making Diskette Images without Diskettes
6 Hard Disk Installation
7 CD-ROM Installation
8 Conclusions

1 introduction

If you use Linux on a production system, you will only see it a few times a year. If you are a hobbyist who compiles many kernels or who uses many operating systems, you may see it several times per day.

CodeWarrior® Targeting Embedded PowerPC

CodeWarrior® Targeting Embedded PowerPC. Because of last-minute changes to CodeWarrior, some of the information in this manual may be inaccurate. Please read the Release Notes on the CodeWarrior CD for the most recent information. Revised: 991129-CIB

Metrowerks CodeWarrior copyright ©1993–1999 by Metrowerks Inc. and its licensors. All rights reserved. Documentation stored on the compact disk(s) may be printed by licensee for personal use. Except for the foregoing, no part of this documentation may be reproduced or transmitted in any form by any means, electronic or mechanical, including photocopying, recording, or any information storage and retrieval system, without permission in writing from Metrowerks Inc. Metrowerks, the Metrowerks logo, CodeWarrior, and Software at Work are registered trademarks of Metrowerks Inc. PowerPlant and PowerPlant Constructor are trademarks of Metrowerks Inc. All other trademarks and registered trademarks are the property of their respective owners. ALL SOFTWARE AND DOCUMENTATION ON THE COMPACT DISK(S) ARE SUBJECT TO THE LICENSE AGREEMENT IN THE CD BOOKLET.

How to Contact Metrowerks:
• U.S.A. and international: Metrowerks Corporation, 9801 Metric Blvd., Suite 100, Austin, TX 78758, U.S.A.
• Canada: Metrowerks Inc., 1500 du College, Suite 300, Ville St-Laurent, QC, Canada H4L 5G6
• Ordering: Voice: (800) 377–5416, Fax: (512) 873–4901
• World Wide Web: http://www.metrowerks.com
• Registration information: [email protected]
• Technical support: [email protected]
• Sales, marketing, & licensing: [email protected]
• CompuServe: Goto: Metrowerks

Table of Contents
1 Introduction
Read the Release Notes!
Solaris: Host-Specific Information
About This Book
Where to Go from Here

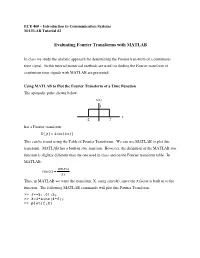

Evaluating Fourier Transforms with MATLAB

ECE 460 – Introduction to Communication Systems. MATLAB Tutorial #2: Evaluating Fourier Transforms with MATLAB

In class we study the analytic approach for determining the Fourier transform of a continuous-time signal. In this tutorial, numerical methods for finding the Fourier transform of continuous-time signals with MATLAB are presented.

Using MATLAB to Plot the Fourier Transform of a Time Function

The aperiodic pulse $x(t)$, which has amplitude 1 between $t = -2$ and $t = 2$, has the Fourier transform

$$X(jf) = 4\,\mathrm{sinc}(4\pi f)$$

This can be found using the Table of Fourier Transforms. We can use MATLAB to plot this transform. MATLAB has a built-in sinc function. However, the definition of the MATLAB sinc function is slightly different from the one used in class and on the Fourier transform table. In MATLAB:

$$\mathrm{sinc}(x) = \frac{\sin(\pi x)}{\pi x}$$

Thus, in MATLAB we write the transform, X, using sinc(4f), since the π factor is built into the function. The following MATLAB commands will plot this Fourier transform:

    >> f=-5:.01:5;
    >> X=4*sinc(4*f);
    >> plot(f,X)

In this case, the Fourier transform is a purely real function. Thus, we can plot it as shown above. In general, Fourier transforms are complex functions and we need to plot the amplitude and phase spectrum separately. This can be done using the following commands:

    >> plot(f,abs(X))
    >> plot(f,angle(X))

Note that the angle is either zero or π. This reflects the positive and negative values of the transform function.

Performing the Fourier Integral Numerically

For the pulse presented above, the Fourier transform can be found easily using the table.
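The difference between the two sinc conventions in this excerpt can be checked numerically. The following is a minimal sketch in Python rather than MATLAB, using only the standard library; the function names `sinc` and `pulse_transform` are illustrative assumptions and are not taken from the tutorial.

```python
import math


def sinc(x: float) -> float:
    """Normalized sinc, sin(pi*x)/(pi*x), with sinc(0) defined as 1."""
    if x == 0.0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)


def pulse_transform(f: float) -> float:
    """Transform of the unit-height pulse on [-2, 2], written as 4*sinc(4*f)."""
    return 4.0 * sinc(4.0 * f)


# The value at f = 0 equals the pulse area (width 4 times height 1).
print(pulse_transform(0.0))

# Zeros fall at multiples of 1/4, where 4*f is a nonzero integer.
print(abs(pulse_transform(0.25)) < 1e-12)

# The same value written with the unnormalized convention, sin(4*pi*f)/(pi*f).
f = 0.125
print(math.isclose(pulse_transform(f), math.sin(4 * math.pi * f) / (math.pi * f)))
```

Because the normalized form already multiplies its argument by π, passing `4*f` gives the same curve that the table's unnormalized form gives for `4πf`.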

GNU MP: The GNU Multiple Precision Arithmetic Library, Edition 6.2.1, 14 November 2020

GNU MP: The GNU Multiple Precision Arithmetic Library, Edition 6.2.1, 14 November 2020, by Torbjörn Granlund and the GMP development team. This manual describes how to install and use the GNU multiple precision arithmetic library, version 6.2.1. Copyright 1991, 1993-2016, 2018-2020 Free Software Foundation, Inc. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with no Invariant Sections, with the Front-Cover Texts being “A GNU Manual”, and with the Back-Cover Texts being “You have freedom to copy and modify this GNU Manual, like GNU software”. A copy of the license is included in Appendix C [GNU Free Documentation License], page 132.

Table of Contents
GNU MP Copying Conditions
1 Introduction to GNU MP
1.1 How to use this Manual
2 Installing GMP
2.1 Build Options
2.2 ABI and ISA
2.3 Notes for Package Builds
2.4 Notes for Particular Systems
2.5 Known Build Problems
2.6 Performance

Introduction to x86 Assembly

CS342 Computer Security, Handout #5. Prof. Lyn Turbak, Wellesley College. Monday, Oct. 04, 2010

Lab 4: Introduction to x86 Assembly

Reading: Hacking, 0x250, 0x270

Overview

Today, we continue to cover low-level programming details that are essential for understanding software vulnerabilities like buffer overflow attacks and format string exploits. You will get exposure to the following:
• Understanding conventions used by compiler to translate high-level programs to low-level assembly code (in our case, using Gnu C Compiler (gcc) to compile C programs).
• The ability to read low-level assembly code (in our case, Intel x86).
• Understanding how assembly code instructions are represented as machine code.
• Being able to use gdb (the Gnu Debugger) to read the low-level code produced by gcc and understand its execution.

In tutorials based on this handout, we will learn about all of the above in the context of some simple examples.

Intel x86 Assembly Language

Since Intel x86 processors are ubiquitous, it is helpful to know how to read assembly code for these processors. We will use the following terms: byte refers to 8-bit quantities; short word refers to 16-bit quantities; word refers to 32-bit quantities; and long word refers to 64-bit quantities. There are many registers, but we mostly care about the following:
• EAX, EBX, ECX, EDX are 32-bit registers used for general storage.
• ESI and EDI are 32-bit indexing registers that are sometimes used for general storage.
• ESP is the 32-bit register for the stack pointer, which holds the address of the element currently at the top of the stack.

ECE 598 – Advanced Operating Systems Lecture 2

ECE 598 – Advanced Operating Systems, Lecture 2. Vince Weaver, http://www.eece.maine.edu/~vweaver [email protected] 15 January 2015

Announcements
• Update on room situation (shouldn't be locked anymore, but not much chance of getting a better room)

Building Linux by Hand
• Check out with git or download tarball: git clone git://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git or http://www.kernel.org/pub/linux/kernel/v3.x/
• Configure: make config or make menuconfig. Also can copy existing .config and run make oldconfig
• Compile: make. What does make -j 8 do differently?
• Make the modules: make modules
• sudo make modules_install
• Copy bzImage to boot, update boot loader

Building Linux Automated
• If in a distro there are other commands for building a package.
• For example on Debian: make-kpkg --initrd --rootcmd fakeroot kernel_image
• Then dpkg -i to install; easier to track

Overhead (i.e. why not to do it natively on a Pi)
• Size – clean git source tree (x86) 1.8GB, compiled with kernel, 2.5GB, compiled kernel with debug features (x86), 12GB!!! Tarball version with compiled kernel (ARM) 1.5GB
• Time to compile – minutes (on fast multicore x86 machine) to hours (18 hours or so on Pi)

Linux on the Pi
• Mainline kernel, bcm2835 tree. Missing some features
• Raspberry-pi foundation bcm2708 tree. More complete, not upstream

Compiling – how does it work?
• Take C-code
• Turn into assembler
• Run assembler to make object (machine language) files. Final addresses not necessarily put into place
• Run linker (which uses linker script)
• Makes some sort of executable.

MATLAB Examples Mathematics

MATLAB Examples: Mathematics. Hans-Petter Halvorsen, M.Sc.

Mathematics with MATLAB
• MATLAB is a powerful tool for mathematical calculations.
• Type “help elfun” (elementary math functions) in the Command window for more information about basic mathematical functions.

Mathematics Topics
• Basic Math Functions and Expressions: $z = 3x^2 + \sqrt{x^2 + y^2} + e^{\ln(x)}$
• Statistics – mean, median, standard deviation, minimum, maximum and variance
• Trigonometric Functions: sin(), cos(), tan()
• Complex Numbers: $z = a + jb$
• Polynomials: $p(x) = p_1 x^n + p_2 x^{n-1} + \cdots + p_n x + p_{n+1}$

Basic Math Functions

Create a function that calculates the following mathematical expression:

$$z = 3x^2 + \sqrt{x^2 + y^2} + e^{\ln(x)}$$

We will test with different values for $x$ and $y$. We create the function:

    function z=calcexpression(x,y)
    z=3*x^2 + sqrt(x^2+y^2)+exp(log(x));

Testing the function gives:

    >> x=2;
    >> y=2;
    >> calcexpression(x,y)
    ans = 16.8284

Statistics Functions
• MATLAB has lots of built-in functions for Statistics
• Create a vector with random numbers between 0 and 100. Find the following statistics: mean, median, standard deviation, minimum, maximum and the variance.

    >> x=rand(100,1)*100;
    >> mean(x)
    >> median(x)
    >> std(x)
    >> mean(x)
    >> min(x)
    >> max(x)
    >> var(x)

Trigonometric functions: sin(x), cos(x), tan(x)

It is quite easy to convert from radians to degrees or from degrees to radians. We have that:

$$2\pi \ \text{radians} = 360 \ \text{degrees}$$

This gives:

$$d\,[\text{degrees}] = r\,[\text{radians}] \cdot \frac{180}{\pi}, \qquad r\,[\text{radians}] = d\,[\text{degrees}] \cdot \frac{\pi}{180}$$

→ Create two functions that convert from radians to degrees (r2d(x)) and from degrees to radians (d2r(x)) respectively. Test the functions to make sure that they work as expected.

The functions are as follows:

    function d = r2d(r)
    d=r*180/pi;

    function r = d2r(d)
    r=d*pi/180;

Testing the functions:

    >> r2d(2*pi)
    ans = 360
    >> d2r(180)
    ans = 3.1416

Given right triangle:
• Create a function that finds the angle A (in degrees) based on input arguments (a,c), (b,c) and (a,b) respectively.
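As a cross-check of the MATLAB results quoted in this excerpt, the expression function and the two conversion functions can be mirrored in Python. This is only a sketch under the assumption that plain floating-point arithmetic is acceptable; the Python names simply copy the MATLAB ones (with `calc_expression` spelled in Python style) and are not part of the original slides.

```python
import math


def calc_expression(x: float, y: float) -> float:
    """z = 3*x^2 + sqrt(x^2 + y^2) + exp(log(x)), as in calcexpression(x, y)."""
    return 3 * x**2 + math.sqrt(x**2 + y**2) + math.exp(math.log(x))


def r2d(r: float) -> float:
    """Convert radians to degrees."""
    return r * 180.0 / math.pi


def d2r(d: float) -> float:
    """Convert degrees to radians."""
    return d * math.pi / 180.0


# Same test inputs as in the excerpt.
print(round(calc_expression(2, 2), 4))
print(r2d(2 * math.pi))
print(round(d2r(180), 4))
```

Python's standard library also provides `math.degrees` and `math.radians`, which perform the same conversions.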