Declaration for an Increased Public Awareness of Climate Change

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


PDF Version for Printing

Fermilab Today, Thursday, March 27, 2008. Furlough Information: Reminder: An IDES representative will conduct the final on-site group meetings at 11 a.m. and noon in the Wilson Hall One West conference room on Friday, March 28. New furlough information, including an up-to-date Q&A section, appears on the furlough Web pages daily. Layoff Information: New information on Fermilab layoffs, including an up-to-date Q&A section, appears on the layoff Web pages daily. Calendar: Thursday, March 27, 10 a.m., Presentations to the Physics Advisory Committee - Curia II; 1 p.m. [...] Feature: symmetry expands online presence, features new blog. symmetry magazine now provides more online content while it saves money by publishing fewer print issues. From the symmetry editor: This week's distribution of the new issue of symmetry launches the next phase of the magazine's development. Our readers now use the magazine in different ways, and we are reaching a much larger audience. While readers are outspoken in wanting to keep the print magazine, many of them are now more comfortable reading online. Starting from this issue, we will publish six [...] Fermilab Result of the Week: Shedding new light on an old problem. Figure caption: This figure shows the transverse photon momentum in large extra dimension candidate events. The additional rate of signal events is shown in the open histogram along with the expected backgrounds. When it comes to particle interactions, the force of gravity is an oddity. It is also entirely absent from the Standard Model theory. Although gravity is an undeniable force in our everyday life and dominates interactions on the cosmological scale, it is by far the weakest force at the particle level and has never been -

2006 - Biodiversity and Cultural Diversity in the Andes and Amazon 1: Biodiversity

Lyonia 9(1) 2006 - Biodiversity and Cultural Diversity in the Andes and Amazon 1: Biodiversity. Volume 9 (1), February 2006. ISSN: 0888-9619. Introduction: In 2001, the 1st Congress of Conservation of Biological and Cultural Diversity in the Andes and the Amazon Basin in Cusco, Peru, attempted to provide a platform to bridge the existing gap between Scientists, Non Governmental Organizations, Indigenous Populations and Governmental Agencies. This was followed by a 2nd Congress in 2003, held in Loja, Ecuador, together with the IV Ecuadorian Botanical Congress. The most important results of these conferences were published in Lyonia 6 (1/2) and 7 (1/2) 2004. Since then, the "Andes and Amazon" Biodiversity Congress has become a respected institution, and is being held every two years in Loja, Ecuador, where it has found a permanent home at the Universidad Tecnica Particular. In 2005, the 3rd Congress on Biological and Cultural Diversity of the Andes and Amazon Basin joined efforts with the 2nd Dry Forest Congress and the 5th Ecuadorian Botanical Congress, to provide an even broader venue. The Tropical Dry Forests of Latin America as well as the Andes and the Amazon Basin represent one of the most important Biodiversity-Hotspots on Earth. At the same time, both systems face imminent dangers due to unsustainable use. Attempts at sustainable management and conservation must integrate local communities and their traditional knowledge. Management decisions need to include the high importance of natural resources in providing building materials, food and medicines for rural as well as urbanized communities. The traditional use of forest resources, particularly of non-timber products like medicinal plants, has deep roots not only in indigenous communities, but is practiced in a wide section of society. -

Downloadable and Ready Crucial to Our Understanding of What It Is to Be Physiology and Biophysics, and Director of the for Re-Use in Ways the Original Human

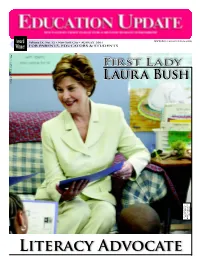

www.EDUCATIONUPDATE.com. Award Winner. Volume IX, No. 12 • New York City • AUGUST 2004. FOR PARENTS, EDUCATORS & STUDENTS. First Lady Laura Bush, LITERACY ADVOCATE (White House photo by Joyce Naltchayan). SPOTLIGHT ON SCHOOLS ■ EDUCATION UPDATE ■ AUGUST 2004. Corporate Contributions to Education - Part I. This Is The First In A Series On Corporate Contributions To Education, Interviewing Leaders Who Have Changed The Face Of Education In Our Nation. DANIEL ROSE, CEO, ROSE ASSOCIATES FOCUSES ON HARLEM EDUCATIONAL ACTIVITIES FUND. By JOAN BAUM, Ph.D. So what does a super-dynamic, impassioned, articulate humanitarian from a well known philanthropic family do when he becomes Chairman Emeritus, after having founded and funded a significant venture for educational reform? If he’s Daniel Rose, of Rose Associates, Inc., he’s “bursting with pride” at having a distinguished new team to whom he has passed the torch— [...] living in tough neighborhoods and finding themselves in overcrowded classrooms. Of course, Rose is a realist: He knows that the areas HEAF serves—Central Harlem, Washington Heights, the South Bronx—are rife with conditions that all too easily breed negative peer pressure, poor self-esteem, and low aspirations and [...] wound up concentrating on “being effective at the margin.” First HEAF took under its wing the lowest-ranking public school in the city and five years later moved it from having only 9 percent of its students at grade level to 2/3rds. Then HEAF turned its attention to a minority school with 100 percent at or above grade level but whose students were not successful in getting into the city’s premier public high schools. -

Diurnal and Nocturnal Lepidoptera of Buenaventura (Piñas-Ecuador) Lepidópteros Diurnos Y Nocturnos De La Reserva Buenaventura (Piñas –Ecuador)

Volume 9 (1). Diurnal and nocturnal lepidoptera of Buenaventura (Piñas-Ecuador). Lepidópteros diurnos y nocturnos de la Reserva Buenaventura (Piñas –Ecuador). Sebastián Padrón, Universidad del Azuay, Escuela de Biología del Medio Ambiente, [email protected]. February 2006. Download at: http://www.lyonia.org/downloadPDF.php?pdfID=2.408.1 Abstract (translated from the Spanish "Resumen"): In a tropical moist forest in southern Ecuador, within an altitudinal gradient of 600-1000 m above sea level, an inventory of diurnal and nocturnal Lepidoptera was carried out, producing a preliminary species list. The study area (the Buenaventura Reserve) is located in the upper part of El Oro province near the city of Piñas; the reserve has a great diversity of birds, plant species and insects. The diurnal and nocturnal Lepidoptera communities were sampled during August and September 2004, using aerial traps, bait traps, net interception and a 250-watt mercury vapor light trap. Aerial, bait and interception trapping was carried out along defined transects within the reserve, which were monitored every two days during the 24 days of the study. The light trap was placed at the scientific station and operated for three hours, from 18:30 to 21:30, on 15 nights. A total of 550 specimens were captured, preserved, mounted and identified. 255 species of Lepidoptera were classified, of which 60 are diurnal and 195 nocturnal. -

World on Fire

Biographical Notes World on Fire Peter Bowker Writer, World on Fire MASTERPIECE fans remember Peter Bowker’s impassioned adaptation of Wuthering Heights from 2009, which captured Emily Brontë’s masterpiece in all its complexity. With World on Fire he has created a plot with even more twists, turns, and memorable characters, centered on the chaotic events at the outset of World War II. Bowker has been an established screenwriter in the U.K. since penning scripts for Casualty in the early 1990s. Since then, he has written for many long-running series such as Where the Heart Is and Clocking Off. His original work has included Undercover Heart; Flesh and Blood, for which he won Best Writer at the RTS Awards; Blackpool, which was awarded BANFF Film Festival Best Mini-Series and Global Television Grand Prize; and Occupation, which was awarded Best Drama Serial at the BAFTA Awards, in addition to Best Short-Form Drama at the WGGB Awards and another RTS Best Writer Award. He has since written Eric and Ernie and Marvellous. Both productions have won prestigious awards, including a Best Drama BAFTA for Marvellous, which became the most popular BBC2 single drama of the last 20 years. Most recently he has written an adaptation of John Lanchester’s novel Capital and two series of the acclaimed The A Word. Julia Brown Lois Bennett, World on Fire Driven to escape her cheating lover and dysfunctional family, Julia Brown’s character in World on Fire finds a wartime role that exploits her remarkable singing talent. Luckily, Brown herself is a gifted vocalist and sang much of the soundtrack that accompanies the episodes. -

Vol 30 Svsn.Pdf

c/o Museo di Storia Naturale, Fontego dei Turchi, S. Croce 1730, 30135 Venezia (Italy). Tel. 041 2750206 - Fax 041 721000. Tax code: 80014010278. Website: www.svsn.it, e-mail: [email protected]. Lavori, Vol. 30, Venice, 31 January 2005. The Società Veneziana di Scienze Naturali was founded in Venice in December 1975. Board of Directors: President of the Society: Giampietro Braga. Vice President: Fabrizio Bizzarini. Councillors (*): Botany: Linda Bonello, Maria Teresa Sammartino. Education, Ecology, Environmental Protection: Giuseppe Gurnari, Maria Chiara Lazzari. Earth and Human Sciences: Fabrizio Bizzarini, Simone Citon. Zoology: Raffaella Trabucco. Secretary-Treasurer: Anna Maria Confente. Auditors: Luigi Bruni, Giulio Scarpa. Scientific editorial committee: Giovanni Caniglia (Director), Fabrizio Bizzarini, Giampietro Braga, Paolo Canestrelli, Corrado Lazzari, Francesco Mezzavilla, Alessandro Minelli, Enrico Negrisolo, Michele Pellizzato. Editor-in-chief of the journal: Alberto Vitucci. Initiative carried out with the support of the Veneto Region. On 15 October 1975 the court of Venice authorized the publication of the scientific journal “Lavori”, and in January 1976 the Società Veneziana di Scienze Naturali presented to its members the first issue of the journal, which contained 13 scientific contributions. Their authors, in alphabetical order, are: Lorenzo Bonometto, Silvano Canzoneri, Paolo Cesari, Antonio Dal Corso, Federico De Angeli, Giorgio Ferro, Lorenzo Munari, Helio Pierotti, Leone Rampini, Giampaolo Rallo, Enrico Ratti, Marino Sinibaldi and Roberto Vannucci. Thus began the editorial commitment that has characterized our society ever since, not only in punctually meeting the printing schedule, within the first quarter of each year, for the volume of scientific proceedings, “Lavori”, but also in its other publications. -

Continuous Project #8 Table of Contents

Continuous Project #8 TABLE OF CONTENTS Continuous Project, Introduction 7 Continuous Project, CNEAI Exhibition, May 2006 8 Allen Ruppersberg, Metamorphosis: Patriote Palloy and Harry Houdini 11 Jacques Rancière, The Emancipated Spectator 19 Seth Price, Law Poem 31 Claire Fontaine, The Ready-Made Artist and Human Strike: A Few Clarifications 33 Dan Graham, Two-Way Mirror Cylinder inside Two-Way Mirror Cube; manuscript, contributed by Karen Kelly 45 Bettina Funcke, Urgency 53 Matthew Brannon, The last thing you remember was staring at the little white tile (Hers) and The last thing you remember was staring at the little white tile (His) 59 Alexander Kluge and Oskar Negt, The Public Sphere of Children 61 Mai-Thu Perret, Letter Home 67 Left Behind. A Continuous Project Symposium with Joshua Dubler 71 Tim Griffin, Rosters 79 August Bebel, Charles Fourier: His Life and His Theories 81 Maria Muhle, Equality and Public Realm according to Hannah Arendt 83 Pablo Lafuente, Image of the People, Voices of the People 91 Melanie Gilligan, The Emancipated – or Letters Not about Art 99 Simon Baier, Remarks on Installation 109 Donald Judd, ART AND INTERNATIONALISM. Prolegomena, contributed by Ei Arakawa 117 Mai-Thu Perret, Bake Sale 127 Nico Baumbach, Impure Ideas: On the Use of Badiou and Deleuze for Contemporary Film Theory 129 Serge Daney, In Stubborn Praise of Information 135 Johanna Burton, ‘Of Things Near at Hand,’ or Plumbing Cezanne’s Navel 139 Warren Niesluchowski, The Ars of Imperium 147 Summaries 158. INTRODUCTION: The Centre national de l’estampe et de l’art imprimé (CNEAI) invited us, as Continuous Project, to spend a month in Paris in the Spring of 2006 in order to realize a publication and an exhibition. -

January 1-4, 2020 January 5-8, 2020

TENTH ANNUAL. January 1-4, 2020: Waimea, Mauna Kea Resort + Fairmont Orchid, Hawai‘i. January 5-8, 2020: Four Seasons Resort Hualālai. Sponsor headings: INNER CIRCLE SPONSORS; MEDIA AND LOCAL SPONSORS; BIG ISLAND TRAVELER; FOOD AND BEVERAGE SPONSORS. Contents: 4 About the Festival; 5 Letter from the Director; 8 Host Venues and Map; 10 Films; 38 Waimea Schedule; 42 Waimea Breakfast Talks; 46 Four Seasons Schedule; 48 Four Seasons Breakfast Talks; 50 Guest Speakers and Presentations; 84 Artwork and Exhibits; 90 Thank You to Our Contributors. Artwork by Christian Enns. About Our Area: The Island of Hawai‘i, known as The Big Island to avoid confusion with the state, was formed by five volcanoes to become one land mass. The still active Kīlauea sits at the heart of Hawai‘i Volcanoes National Park, while Mauna Kea, Mauna Loa and Hualālai rise above the Kohala and Kona coastline, where stark lava fields meet turquoise waters and multihued sand beaches. The gentle slopes of the Kohala Mountains, now volcanically extinct, provide the backdrop to the town of Waimea and to northern Hawi and Kapa‛au. [...] the world, inhabit these reefs, along with Hawaiian Hawksbill turtles, octopus, eel and smaller reef sharks. Spinner dolphins come to rest in shallow bays during the day, before returning to deeper water to hunt at night. Humpback whales can be seen along the coast during winter, when the ocean fills with the sound of their beautiful song. The town of Waimea, also known as Kamuela, sits in the saddle between the dry and green sides of the island, -

Hollins 2015

WILDLIFE DIARY AND NEWS FOR DEC 28 - JAN 3 (WEEK 53 OF 2015) Fri 1st January 2016: 54 Birds and 45 Flowers to start the year. In my garden just before sunrise the moon was close to Jupiter high in the southern sky, and Robins and a Song Thrush were serenading it from local gardens as a Carrion Crow flew down to collect the scraps of bread I threw out on the lawn before setting out on my bike in search of birds. Also on my lawn I was surprised to see that a Meadow Waxcap fungus had sprung up a couple of weeks after I thought my garden fungus season was over. Later I added another fungus to my day list with a smart fresh Yellow Fieldcap (Bolbitius vitellinus) growing from a cowpat on the South Moors. (Internet photos of Yellow Fieldcap and Meadow Waxcap.) My proposed route was along the shore from Langstone to Farlington Marshes lake. As the wind was forecast to become increasingly strong from the south-east, and the tide to be rising from low at 9.0am, I thought it best to follow the shore with the wind behind me on the way out and to stick to the cycle track and roads on the way home, so I rode down Wade Court Road listening out for garden birds, among which I heard bursts of song from more than one Dunnock (not a bird I associate with winter song) and saw two smart cock Pheasants in the horse feeding area immediately south of Wade Court. -

It Reveals Who I Really Am”: New Metaphors, Symbols, and Motifs in Representations of Autism Spectrum Disorders in Popular Culture

“IT REVEALS WHO I REALLY AM”: NEW METAPHORS, SYMBOLS, AND MOTIFS IN REPRESENTATIONS OF AUTISM SPECTRUM DISORDERS IN POPULAR CULTURE By Summer Joy O’Neal A Dissertation Submitted in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy in English Middle Tennessee State University 2013 Dissertation Committee: Dr. Angela Hague, Chair Dr. David Lavery Dr. Robert Petersen Copyright © 2013 Summer Joy O’Neal ii ACKNOWLEDGEMENTS There simply is not enough thanks to thank my family, my faithful parents, T. Brian and Pamela O’Neal, and my understanding sisters, Auburn and Taffeta, for their lifelong support; without their love, belief in my strengths, patience with my struggles, and encouragement, I would not be in this position today. I am forever grateful to my wonderful director, Dr. Angela Hague, whose commitment to this project went above and beyond what I deserved to expect. To the rest of my committee, Dr. David Lavery and Dr. Robert Petersen, for their seasoned advice and willingness to participate, I am also indebted. Beyond these, I would like to recognize some “unofficial” members of my committee, including Dr. Elyce Helford, Dr. Alicia Broderick, Ari Ne’eman, Chris Foss, and Melanie Yergau, who graciously offered me necessary guidance and insightful advice for this project, particularly in the field of Disability Studies. Yet most of all, Ephesians 3.20-21. iii ABSTRACT Autism has been sensationalized by the media because of the disorder’s purported prevalence: Diagnoses of this condition that was traditionally considered to be quite rare have radically increased in recent years, and an analogous fascination with autism has emerged in the field of popular culture. -

Final Frontier: Newly Discovered Species of New Guinea

REPORT 2011. Conservation, Climate Change, Sustainability. Final Frontier: Newly discovered species of New Guinea (1998 - 2008). WWF Western Melanesia Programme Office. Author: Christian Thompson (the green room) www.greenroomenvironmental.com, with contributions from Neil Stronach, Eric Verheij, Ted Mamu (WWF Western Melanesia), Susanne Schmitt and Mark Wright (WWF-UK). Design: Torva Thompson (the green room). Front cover photo: Varanus macraei © Lutz Obelgonner. This page: The low water in a river exposes the dry basin, at the end of the dry season in East Sepik province, Papua New Guinea. © Text 2011 WWF. WWF is one of the world’s largest and most experienced independent conservation organisations, with over 5 million supporters and a global Network active in more than 100 countries. WWF’s mission is to stop the degradation of the planet’s natural environment and to build a future in which humans live in harmony with nature, by conserving the world’s biological diversity, ensuring that the use of renewable natural resources is sustainable, and promoting the reduction of pollution and wasteful consumption. Photo: Closed-canopy rainforest in New Guinea. © Brent Stirton / Getty Images / WWF-UK. FOREWORD: A VITAL YEAR FOR FORESTS. New Guinea is home to one of the world’s last unspoilt rainforests. This report shows it’s a place where remarkable new species are still being discovered today. As well as wildlife, New Guinea’s forests support the livelihoods of several hundred indigenous cultures, and are vital to the country’s development. But they’re under threat. This year has been designated the International Year of Forests, and WWF is redoubling its efforts to protect forests for generations to come – in New Guinea, and all over the world. -

Ritsumeikan University

RITSUMEIKAN UNIVERSITY NEWSLETTER, Vol.1 Issue 4, FALL. REPORT KYOTO. Ninnaji Historical Highlights: a cultural treasure of Kyoto. Ninnaji, located approximately a 20-minute walk from Ritsumeikan University’s Kinugasa Campus, is one of the oldest temples in Kyoto. The temple was founded in 888 and was designated as a World Heritage Site by UNESCO in 1994. The oldest surviving buildings date back to the 17th century and are found in the northern half of the temple grounds, which is comprised of a large courtyard with buildings that include the main hall, five-story pagoda, and a scripture house. The Edo period garden and the gates that rise above the rest of the temple are also popular attractions. Ninnaji is an important pilgrimage site for followers of the Shingon sect of Buddhism. In mountains north of the temple, pilgrims follow a scaled-down version of the [...] In 1340, poet and Zen monk Yoshida Kenko (1283-1350) wrote Essays in Idleness, a collection of 243 essays that advocate the ideals of humility and simplicity within daily life. One of his more famous essays is a story called “Drunkenness,“ set in Ninnaji. In the story, Ninnaji priests are celebrating a young acolyte who is about to enter the priesthood. The acolyte becomes drunk and puts an iron pot over his head, tightly covering his ears and nose. At first, the priests enjoy this amusement and dance happily, but when the acolyte tries to remove the pot they find out it is stuck.