Curation of Digital Museum Content: Teachers Discover, Create, and Share in the Smithsonian Learning Lab

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Envisioning the Future of Computational Media: The Final Report of the Media Systems Project

Envisioning the Future of Computational Media: The Final Report of the Media Systems Project. Convening Partners. Convening Hosts. For videos of talks and more, visit mediasystems.soe.ucsc.edu. Report Authors: Noah Wardrip-Fruin, Associate Professor, Department of Computer Science; Co-Director, Expressive Intelligence Studio; Director, Digital Art and New Media MFA Program, University of California, Santa Cruz. Michael Mateas, Professor, Department of Computer Science; Co-Director, Expressive Intelligence Studio; Director, Center for Games and Playable Media, University of California, Santa Cruz. © 2014 Noah Wardrip-Fruin and Michael Mateas. Published by the Center for Games and Playable Media at the University of California, Santa Cruz. Text is licensed under a Creative Commons Attribution 4.0 International License. For more information on this license, see http://creativecommons.org/licenses/by/4.0/. Images are copyright their respective creators and not covered by Creative Commons license. Design by Jacob Garbe in InDesign using Nexa Bold and Arial. This material is based upon a workshop supported by the National Science Foundation (under Grant Number 1152217), the National Endowment for the Humanities’ Office of Digital Humanities (under Grant Number HC-50011-12), the National Endowment for the Arts’ Office of Program Innovation, Microsoft Studios, and Microsoft Research. Any opinions, findings, and conclusions or recommendations expressed in this material

Conference Proceedings: The 2nd FoITIC 2020

Faculty of Industrial Technology International Congress (FoITIC). Conference Proceedings: The 2nd FoITIC 2020, “Toward Industry 4.0: Challenges and Opportunities for Industrial Technology and Other Sector”. ISBN 978-623-7525-37-0. Campus of Institut Teknologi Nasional Bandung, West Java – Indonesia, January 28 – 30, 2020. Faculty of Industrial Technology, Institut Teknologi Nasional Bandung. Proceedings of FoITIC 2020 – International Conference. Editorial Board: Prof. Dr. Istvan Farkas (Szent Istvan University – Hungary); Prof. Dr. Rizalman Mamat (Universiti Malaysia Pahang – Malaysia); Prof. RNDr. Zuzana Hlavacova, CSc. (Slovak University of Agriculture in Nitra – Slovak Republic); Dr. Martino Luis (The University of Exeter – United Kingdom); Dr. Dani Rusirawan (Institut Teknologi Nasional Bandung – Indonesia); Dr. Dyah Setyo Pertiwi (Institut Teknologi Nasional Bandung – Indonesia); Dr. Fahmi Arif (Institut Teknologi Nasional Bandung – Indonesia); Dr. Marisa Widyastuti P (Telkom University – Indonesia); Dr. Achmad Ghazali (Institut Teknologi Bandung – Indonesia); Dr. Winarno Sugeng (Institut Teknologi Nasional Bandung – Indonesia). Cover Design and Layout of Proceedings: Mr. Aldrian Agusta (Cover Design), Mr. Liman Hartawan, Ms. Dina Budhi Utami, Mr. Muktiadi Akhmad Januar. Copyright © 2021 by the FTI – Itenas and the authors. Conference Organizer: Faculty of Industrial Technology, Institut Teknologi Nasional Bandung - Indonesia, Jl. PKHH. Mustapa No. 23 Bandung 40124, West Java - INDONESIA. Email: [email protected], Website: http://foitic.itenas.ac.id, www.itenas.ac.id. All rights reserved. No part of the publication may be produced, transmitted, in any form or by means of electronic, mechanical, photocopying, recording or otherwise, without the permission of the publisher, except the case in critical articles and review or where prior rights are preserved.

The Work of Microsoft Research Connections in the Region

To tell you more about Microsoft Research Connections: Global; EMEA; PhD Programme; Other engagements. Microsoft Research Connections: Work broadly with the academic and research community to speed research, improve education, foster innovation and improve lives around the world. Accelerate university research through collaborative partnerships. Support university research and education through technology investments. Inspire the next generation of researchers and scientists. Drive awareness of Microsoft contributions to research. Engagement and Collaboration Focus: Core Computer Science; Natural User Interface; Earth, Energy, Environment; Education and Scholarly Communication; Health and Wellbeing. Research Accelerators; Global Partnerships; People. Investment Focus: Computer Science; Education & Scholarly Communication; Earth, Energy, and Environment; Health & Wellbeing. Programming, Tools, Mobile; Natural User Interfaces; WW Telescope, Climate Change, Earth Sciences; Academic Search, Digital Humanities, Publishing; MS Biology Foundation & Tools. Judith Bishop, Kris Tolle, Dan Fay, Lee Dirks, Simon Mercer. Regional Outreach/Engagements: EMEA: Fabrizio Gagliardi; LATAM: Jaime Puente; India: Vidya Natampally; Asia: Lolan Song; America/Aus/NZ: Harold Javid. Engineering: High-quality and high-impact software release and community adoption: Derick Campbell. CMIC; EMIC; ILDC. New member of MSR family. Telecoms, Security, Online services and Entertainment. Microsoft Confidential. Regional Collaborations at Joint Institutes: INRIA, FRANCE

Teaching & Researching Big History: Exploring a New Scholarly Field

Teaching & Researching Big History: Exploring a New Scholarly Field. Leonid Grinin, David Baker, Esther Quaedackers, Andrey Korotayev. To cite this version: Leonid Grinin, David Baker, Esther Quaedackers, Andrey Korotayev. Teaching & Researching Big History: Exploring a New Scholarly Field. France. Uchitel, pp.368, 2014, 978-5-7057-4027-7. hprints-01861224. HAL Id: hprints-01861224, https://hal-hprints.archives-ouvertes.fr/hprints-01861224. Submitted on 24 Aug 2018. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Public Domain. International Big History Association; Russian Academy of Sciences, Institute of Oriental Studies; The Eurasian Center for Big History and System Forecasting. Edited by Leonid Grinin, David Baker, Esther Quaedackers, and Andrey Korotayev. ‘Uchitel’ Publishing House, Volgograd. ББК 28.02 87.21. Editorial Council: Cynthia Stokes Brown, Ji-Hyung Cho, David Christian, Barry Rodrigue. Teaching & Researching Big History: Exploring a New Scholarly Field / Edited by Leonid E. Grinin, David Baker, Esther Quaedackers, and Andrey V. Korotayev. – Volgograd: ‘Uchitel’ Publishing House, 2014. – 368 pp. According to the working definition of the International Big History Association, ‘Big History seeks to understand the integrated history of the Cosmos, Earth, Life and Humanity, using the best available empirical evidence and scholarly methods’.

UC Santa Barbara Electronic Theses and Dissertations

CORE: Metadata, citation and similar papers at core.ac.uk. Provided by eScholarship - University of California. UC Santa Barbara Electronic Theses and Dissertations. Title: Advanced Automated Web Application Vulnerability Analysis. Permalink: https://escholarship.org/uc/item/63d660tp. Author: Doupé, Adam. Publication Date: 2014. Peer reviewed|Thesis/dissertation. eScholarship.org, powered by the California Digital Library, University of California. UNIVERSITY OF CALIFORNIA, Santa Barbara. Advanced Automated Web Application Vulnerability Analysis. A Dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science by Adam Loe Doupé. Committee in Charge: Professor Giovanni Vigna, Chair; Professor Christopher Kruegel; Professor Ben Hardekopf. September 2014. The Dissertation of Adam Loe Doupé is approved: Professor Christopher Kruegel; Professor Ben Hardekopf; Professor Giovanni Vigna, Committee Chairperson. April 2014. Advanced Automated Web Application Vulnerability Analysis. Copyright © 2014 by Adam Loe Doupé. Acknowledgements: I would like to thank the following people who, with their love and support, encouraged and motivated me to finish this dissertation. Giovanni is the reason that I became addicted to computer security: From the moment that I took his undergrad security class I was hooked. I am forever indebted to him because he has constantly invested his time in me. First, by inviting me to join his hacking club. Then, he took a chance on mentoring a Master’s student, and, upon graduation for my Master’s degree, told me that I could “come back for the real thing.” One year later I did, and I don’t regret it for a second.

ChronoZoom Overview

[Timeline graphic: millions of years ago, from 125 Ma to today.] An infinite canvas in time. Become a time traveler at ChronoZoomProject.org. “With ChronoZoom, you can browse history, rather than digging it out, piece by piece.” Walter Alvarez, Professor of Geology, University of California, Berkeley. EXPLORE: Travel through time, from the Big Bang, to the era of the dinosaurs, to the present day. Explore all of the past across the major regimes that unify all historical knowledge, collectively known as Big History: cosmos, Earth, life, human prehistory, and written human history. LEARN: Bring history to life by exploring high-resolution gigapixel images, watching lecture videos, or browsing through vast image collections. Take a guided tour with captions and narration right in your web browser. DISCOVER: Compare timelines and events from different episodes of history to uncover trends, patterns, and cycles. The primary goal of ChronoZoom is to make time relationships between different studies of history clear and vivid. To do this, it unifies a wide variety of data, media, and historical perspectives. ChronoZoom is built using HTML5, so that it works on a wide range of browsers and devices. It is powered by Windows Azure for flexibility and scalability. The project is hosted by the Outercurve Foundation, which promotes and accelerates the development of open-source community projects. ChronoZoom can smoothly zoom from a single day all the way back to the Big Bang 13.7 billion years ago, a total zoom factor of 5 trillion. CHRONOZOOM FEATURES: • Zoom factor of 5 trillion, covering a single day to 13.7 billion years

Developing a Zoomable Timeline for Big History

19. From Concept to Reality: Developing a Zoomable Timeline for Big History. Roland Saekow. Abstract: Big History is proving to be an excellent framework for designing undergraduate synthesis courses. A serious problem in teaching such courses is how to convey the vast stretches of time from the Big Bang, 13.7 billion years ago, to the present, and how to clarify the wildly different time scales of cosmic history, Earth and life history, human prehistory and human history. Inspired by a series of printable timelines created by Professor Walter Alvarez at the University of California, Berkeley, a time visualization tool called ‘ChronoZoom’ was developed through a collaborative effort of the Department of Earth and Planetary Science at UC Berkeley and Microsoft Research. With the help of the Office of Intellectual Property and Industry Research Alliances at UC Berkeley, a relationship was established that resulted in the creation of a prototype of ChronoZoom, leveraging Microsoft Seadragon zoom technology. Work on a second version of ChronoZoom is presently underway with the hope that it will be among the first in a new generation of tools to enhance the study of Big History. In Spring of 2009, I had the good fortune of taking Walter Alvarez's Big History course at the University of California, Berkeley. As a senior about to complete an interdisciplinary degree in Design, I was always attracted to big picture courses rather than those that focused on specifics. So when a housemate told me about Walter's Big History course, I immediately enrolled.

Free Tools from Microsoft: This Is a List of More Free Tools from Microsoft That Many Teachers Have Found Useful

Free Software and Tools from Microsoft. Have the following free software installed on your laptop: Before you come to the Institute in November, install these six pieces of software and play around with each of them. You should have a basic understanding of each of these. You will want to use at least one of these for your Learning Excursion project.
1. AutoCollage (from Partners in Learning) http://us.partnersinlearningnetwork.com/Resources/Pages/AutoCollage.aspx Photo collages celebrate important events and themes in our lives. Pick a folder, press a button, and in a few minutes AutoCollage presents you with a unique memento to print or email to your family and friends.
2. Photosynth http://photosynth.net/create.aspx Photosynth takes your photos, mashes them together and recreates a 3D scene out of them that anyone can view and move around in. Different than static photos and video, Photosynth allows you to explore details of places, objects, and events unlike any other media. You can’t stop video, move around and zoom in to check out the smallest details, but with Photosynth you can. And you can’t look at a photo gallery and immediately see the spatial relation between the photos, but with Photosynth you can!
3. Image Composite Editor http://research.microsoft.com/en-us/um/redmond/groups/ivm/ice/ Microsoft Image Composite Editor is an advanced panoramic image stitcher. Given a set of overlapping photographs of a scene shot from a single camera location, the application creates a high-resolution panorama that seamlessly combines the original images.

2014 Compilation

BNL-107876-2014. A Compilation of Internship Reports 2014. Prepared for The Office of Educational Programs, Brookhaven National Laboratory. DISCLAIMER: This work was prepared as an account of work sponsored by an agency of the United States Government. Neither the United States Government nor any agency thereof, nor any of their employees, nor any of their contractors, subcontractors or their employees, makes any warranty, express or implied, or assumes any legal liability or responsibility for the accuracy, completeness, or any third party’s use or the results of such use of any information, apparatus, product, or process disclosed, or represents that its use would not infringe privately owned rights. Reference herein to any specific commercial product, process, or service by trade name, trademark, manufacturer, or otherwise, does not necessarily constitute or imply its endorsement, recommendation, or favoring by the United States Government or any agency thereof or its contractors or subcontractors. The views and opinions of authors expressed herein do not necessarily state or reflect those of the United States Government or any agency thereof. Table of Contents: Examining the Water Gas Shift Reaction using a Au-CeO2 nanorod Catalyst (Stefani Ahsanov); Harmonic flow in the quark-gluon plasma: insights from particle correlation spectra in heavy ion collisions (Mamoudou Ba, Paul Glenn); An intercomparison of Vaisala RS92 and RS41 radiosondes (Shannon Baxter); Testing of a prototype high energy efficient CdTe pixel array x-ray detector (ImXPAD)

American Folk Art Museum

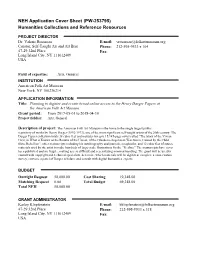

NEH Application Cover Sheet (PW-253795), Humanities Collections and Reference Resources. PROJECT DIRECTOR: Dr. Valerie Rousseau, Curator, Self-Taught Art and Art Brut, 47-29 32nd Place, Long Island City, NY 11101-2409, USA. E-mail: [email protected]. Phone: 212-595-9533 x 104. Field of expertise: Arts, General. INSTITUTION: American Folk Art Museum, New York, NY 10023-6214. APPLICATION INFORMATION: Title: Planning to digitize and create broad online access to the Henry Darger Papers at the American Folk Art Museum. Grant period: From 2017-05-01 to 2018-04-30. Project field(s): Arts, General. Description of project: The American Folk Art Museum is the home to the single largest public repository of works by Henry Darger (1892-1973), one of the most significant self-taught artists of the 20th century. The Darger Papers collection totals 38 cubic feet and includes his epic 15,145-page novel called “The Story of the Vivian Girls, in What is Known as the Realms of the Unreal, of the Glandeco-Angelinian War Storm, Caused by the Child Slave Rebellion”, other manuscripts including his autobiography and journals, scrapbooks, and 12 cubic feet of source materials used by the artist to make hundreds of large-scale illustrations for the “Realms.” The manuscripts have never been published and are fragile, making access difficult and necessitating minimal handling. The grant will be used to consult with copyright and technical specialists, determine which materials will be digitized, complete a conservation survey, convene a panel of Darger scholars, and consult with digital humanities experts. BUDGET: Outright Request 50,000.00; Cost Sharing 19,348.00; Matching Request 0.00; Total Budget 69,348.00; Total NEH 50,000.00. GRANT ADMINISTRATOR: Karley Klopfenstein, 47-29 32nd Place, Long Island City, NY 11101-2409, USA. E-mail: [email protected]. Phone: 212-595-9533 x 318. American Folk Art Museum: Digitizing the Henry Darger Papers. 1.

Content Selection for Timeline Generation from Single History Articles

Content Selection for Timeline Generation from Single History Articles. Sandro Mario Bauer, University of Cambridge, Computer Laboratory, St John's College. Supervisors: Dr Simone Teufel, Dr Stephen Clark. August 2017. This dissertation is submitted for the degree of Doctor of Philosophy. Declaration: This dissertation is the result of my own work and includes nothing which is the outcome of work done in collaboration except as declared in the Preface and specified in the text. It is not substantially the same as any that I have submitted, or, is being concurrently submitted for a degree or diploma or other qualification at the University of Cambridge or any other University or similar institution except as declared in the Preface and specified in the text. I further state that no substantial part of my dissertation has already been submitted, or, is being concurrently submitted for any such degree, diploma or other qualification at the University of Cambridge or any other University or similar institution except as declared in the Preface and specified in the text. It does not exceed 63,000 words in length. Summary: This thesis investigates the problem of content selection for timeline generation from single history articles. While the task of timeline generation has been addressed before, most previous approaches assume the existence of a large corpus of history articles from the same era. They exploit the fact that salient information is likely to be mentioned multiple times in such corpora. However, large resources of this kind are only available for historical events that happened in the most recent decades.

The-Evolving-Universe-Resource.Pdf

Educational resource for THE EVOLVING UNIVERSE, 10 June to 27 August 2017.

INTRODUCTION: Journey from Earth to beyond our galaxy, travelling back through time and into space in The Evolving Universe. Through breath-taking photographs taken by the largest telescopes in the world, you will enter a world of supernovas, stellar nurseries and nebulae in this exclusive to Upper Hutt exhibition from the Smithsonian Institution, USA. This exhibition is an awesome opportunity for your students to view images of the universe and the changes that have happened over time. This exhibition is timed beautifully with Matariki celebrations. Matariki hunga, Matariki ahunga nui. Matariki has many admirers, Matariki brings us together.

ABOUT THE EXHIBITION: The Evolving Universe is an exhibition that is touring the world and comes all the way from Washington in conjunction with NASA, the Smithsonian. It will take you on a mind-bending journey from Earth to the far reaches of space, back to the beginning of the universe about 13.6 billion years ago. The further we peer into space with powerful telescopes, the further back into the history of the universe we see. The light from our Sun, a mere 150 million kilometres away, takes only a few minutes to reach Earth. But when we look at stars and galaxies in the night sky, we are seeing light that has travelled for millions, even billions, of years to reach us. The Evolving Universe exhibition explores how stars, galaxies and the universe change and mature throughout their lifespans. The exhibition contains rare, beautiful photographs of the cosmos: supernovas, stellar nurseries, nebulae, and galaxy clusters.