Benelearn 2011

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Uila Supported Apps

Uila Supported Applications and Protocols, updated Oct 2020

| Application/Protocol Name | Full Description |
| --- | --- |
| 01net.com | 01net website, a French high-tech news site. |
| 050 plus | 050 plus is a Japanese embedded smartphone application dedicated to audio-conferencing. |
| 0zz0.com | 0zz0 is an online solution to store, send and share files |
| 10050.net | China Railcom group web portal. |
| 10086.cn | This protocol plug-in classifies the http traffic to the host 10086.cn. It also classifies the ssl traffic to the Common Name 10086.cn. |
| 104.com | Web site dedicated to job research. |
| 1111.com.tw | Website dedicated to job research in Taiwan. |
| 114la.com | Chinese web portal operated by YLMF Computer Technology Co. |
| 115.com | Chinese cloud storing system of the 115 website. It is operated by YLMF Computer Technology Co. |
| 118114.cn | Chinese booking and reservation portal. |
| 11st.co.kr | Korean shopping website 11st. It is operated by SK Planet Co. |
| 1337x.org | Bittorrent tracker search engine |
| 139mail | 139mail is a chinese webmail powered by China Mobile. |
| 15min.lt | Lithuanian news portal |
| 163.com | Chinese web portal 163. It is operated by NetEase, a company which pioneered the development of Internet in China. |
| 17173.com | Website distributing Chinese games. |
| 17u.com | Chinese online travel booking website. |
| 20minutes | 20 minutes is a free, daily newspaper available in France, Spain and Switzerland. This plugin classifies websites. |
| 24h.com.vn | Vietnamese news portal |
| 24ora.com | Aruban news portal |
| 24sata.hr | Croatian news portal |
| 24SevenOffice | 24SevenOffice is a web-based Enterprise resource planning (ERP) systems. |
| 24ur.com | Slovenian news portal |
| 2ch.net | Japanese adult videos web site |
| 2Shared | 2shared is an online space for sharing and storage. |

Marginal but Significant the Impact of Social Media on Preferential Voting

Working paper POL12-01, Institute for Management Research (Creating knowledge for society). Marginal but significant: The impact of social media on preferential voting. Niels Spierings, Radboud University Nijmegen; Kristof Jacobs, Radboud University Nijmegen. Getting personal? The impact of social media on preferential voting. Abstract: Accounts of the state of contemporary democracies often focus on parties and partisan representation. It has been noted by several authors that parties are in a dire state. Parties are said to withdraw themselves from society and citizens in turn are withdrawing themselves from parties. However, two trends are rarely taken into account, namely (1) an increasing personalization of electoral systems and (2) the spread of cheap and easy-to-use social media which allow politicians to build personal ties with citizens. When considering these two trends, the process of ‘mutual withdrawal’ may be less problematic. Our research seeks to examine whether or not candidates make use of social media during election campaigns to reach out to citizens and whether citizens in turn connect to politicians. Afterwards it examines whether social media make a difference and yield a preference vote bonus. Four types of effects are outlined, namely a direct effect of the number of followers a candidate has; an interaction effect whereby a higher number of followers only yields more votes when the candidate actively uses the social media; an indirect effect whereby social media first lead to more coverage in traditional media and lastly the absence of any effect.

Social Media Weller, Katrin; Meckel, Martin Sebastian; Stahl, Matthias

www.ssoar.info. Social Media. Weller, Katrin; Meckel, Martin Sebastian; Stahl, Matthias. Published Version, bibliography. Provided in cooperation with: GESIS - Leibniz-Institut für Sozialwissenschaften. Suggested Citation: Weller, K., Meckel, M. S., & Stahl, M. (2013). Social Media. (Recherche Spezial, 1/2013). Köln: GESIS - Leibniz-Institut für Sozialwissenschaften. https://nbn-resolving.org/urn:nbn:de:0168-ssoar-371652 Terms of use: This document is made available under Deposit Licence (No Redistribution - no modifications). We grant a non-exclusive, non-transferable, individual and limited right to using this document. This document is solely intended for your personal, non-commercial use. All of the copies of this document must retain all copyright information and other information regarding legal protection. You are not allowed to alter this document in any way, to copy it for public or commercial purposes, to exhibit the document in public, to perform, distribute or otherwise use the document in public. By using this particular document, you accept the above-stated conditions of use.

Modality Switching in Online Dating: Identifying the Communicative Factors That Make the Transition from an Online to an Offline Relationship More Or Less Successful

Modality Switching in Online Dating: Identifying the Communicative Factors That Make the Transition from an Online to an Offline Relationship More or Less Successful. By Liesel L. Sharabi. Dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Communication in the Graduate College of the University of Illinois at Urbana-Champaign, 2015. Urbana, Illinois. Doctoral Committee: Professor John Caughlin, Chair; Professor Leanne Knobloch; Associate Professor Karrie Karahalios; Associate Professor Artemio Ramirez, University of South Florida. Abstract: Perhaps one of the most significant turning points in online dating occurs when partners decide to meet face-to-face (FtF) for the first time. Existing theory proposes that the affordances of the Internet can lead people to develop overly positive impressions of those they meet online, which could prove advantageous for relationships initiated on online dating sites. However, empirical evidence suggests that while such hyperpersonal impressions can intensify the development of mediated relationships, they can also result in disillusionment if the first date fails to meet both partners’ expectations. Accordingly, this dissertation set out to uncover the communicative factors responsible for more or less successful transitions offline. Drawing from the computer-mediated communication (CMC) and personal relationships literatures, the present study introduced a conceptual model of relationship success in online dating and tested it using a longitudinal survey design. Participants (N = 186) were surveyed before and after their first date with someone they met on an online dating site or mobile dating app. As part of the survey, they also supplied the emails they had sent to their partner so their communication could be observed.

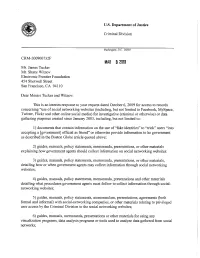

Obtaining and Using Evidence from Social Networking Sites

U.S. Department of Justice, Criminal Division, Washington, D.C. 20530. CRM-200900732F. MAR 3 2010. Mr. James Tucker, Mr. Shane Witnov, Electronic Frontier Foundation, 454 Shotwell Street, San Francisco, CA 94110. Dear Messrs Tucker and Witnov: This is an interim response to your request dated October 6, 2009 for access to records concerning "use of social networking websites (including, but not limited to Facebook, MySpace, Twitter, Flickr and other online social media) for investigative (criminal or otherwise) or data gathering purposes created since January 2003, including, but not limited to: 1) documents that contain information on the use of "fake identities" to "trick" users "into accepting a [government] official as friend" or otherwise provide information to the government as described in the Boston Globe article quoted above; 2) guides, manuals, policy statements, memoranda, presentations, or other materials explaining how government agents should collect information on social networking websites; 3) guides, manuals, policy statements, memoranda, presentations, or other materials, detailing how or when government agents may collect information through social networking websites; 4) guides, manuals, policy statements, memoranda, presentations and other materials detailing what procedures government agents must follow to collect information through social-networking websites; 5) guides, manuals, policy statements, memoranda, presentations, agreements (both formal and informal) with social-networking companies, or other materials relating to privileged user access by the Criminal Division to the social networking websites; 6) guides, manuals, memoranda, presentations or other materials for using any visualization programs, data analysis programs or tools used to analyze data gathered from social networks; 7) contracts, requests for proposals, or purchase orders for any visualization programs, data analysis programs or tools used to analyze data gathered from social networks.

Comments of the Center for Democracy & Technology

Comments of the Center for Democracy & Technology Regarding Agency Information Collection Activities: Arrival and Departure Record (Forms I-94 and I-94W) and Electronic System for Travel Authorization 19 August 2016 The Center for Democracy & Technology appreciates the opportunity to provide comments to the Department of Homeland Security on its proposal to begin requesting disclosure of social media identifiers and other online account information from Visa Waiver Program applicants. DHS proposes to ask foreign visitors applying for a waiver of visa requirements to provide “information associated with [their] online presence,” including the “provider/platform” and “social media identifier” used by the applicant. While the details of this proposed information collection are unclear, DHS’s Notice of Collection Activities states that the solicited online identity information “will enhance the existing investigative process” and “provide DHS greater clarity and visibility to possible nefarious activity and connections” of visitors to the United States.1 CDT is deeply concerned that this proposal would invade the privacy and chill the freedom of expression of visitors to the United States and United States citizens. Under the proposed changes, visitors to the U.S. who seek admittance through the Electronic System of Travel Authorization (ESTA), or complete Form I-94W, will be subject to unspecified review and monitoring of their public online activity by U.S. Customs and Border Protection (CBP) officials. This program will also increase the surveillance of U.S. citizens, both as a result of their online connections to visitors to the U.S. and because other countries may seek similar information from U.S. -

Systematic Scoping Review on Social Media Monitoring Methods and Interventions Relating to Vaccine Hesitancy

TECHNICAL REPORT Systematic scoping review on social media monitoring methods and interventions relating to vaccine hesitancy www.ecdc.europa.eu ECDC TECHNICAL REPORT Systematic scoping review on social media monitoring methods and interventions relating to vaccine hesitancy This report was commissioned by the European Centre for Disease Prevention and Control (ECDC) and coordinated by Kate Olsson with the support of Judit Takács. The scoping review was performed by researchers from the Vaccine Confidence Project, at the London School of Hygiene & Tropical Medicine (contract number ECD8894). Authors: Emilie Karafillakis, Clarissa Simas, Sam Martin, Sara Dada, Heidi Larson. Acknowledgements ECDC would like to acknowledge contributions to the project from the expert reviewers: Dan Arthus, University College London; Maged N Kamel Boulos, University of the Highlands and Islands, Sandra Alexiu, GP Association Bucharest and Franklin Apfel and Sabrina Cecconi, World Health Communication Associates. ECDC would also like to acknowledge ECDC colleagues who reviewed and contributed to the document: John Kinsman, Andrea Würz and Marybelle Stryk. Suggested citation: European Centre for Disease Prevention and Control. Systematic scoping review on social media monitoring methods and interventions relating to vaccine hesitancy. Stockholm: ECDC; 2020. Stockholm, February 2020 ISBN 978-92-9498-452-4 doi: 10.2900/260624 Catalogue number TQ-04-20-076-EN-N © European Centre for Disease Prevention and Control, 2020 Reproduction is authorised, provided the -

Regulation OTT Regulation

OTT Regulation OTT Regulation MINISTRY OF SCIENCE, TECHNOLOGY, INNOVATIONS AND COMMUNICATIONS EUROPEAN UNION DELEGATION TO BRAZIL (MCTIC) Head of the European Union Delegation Minister João Gomes Cravinho Gilberto Kassab Minister Counsellor - Head of Development and Cooperation Section Secretary of Computing Policies Thierry Dudermel Maximiliano Salvadori Martinhão Cooperation Attaché – EU-Brazil Sector Dialogues Support Facility Coordinator Director of Policies and Sectorial Programs for Information and Communication Asier Santillan Luzuriaga Technologies Miriam Wimmer Implementing consortium CESO Development Consultants/FIIAPP/INA/CEPS Secretary of Telecommunications André Borges CONTACTS Director of Telecommunications Services and Universalization MINISTRY OF SCIENCE, TECHNOLOGY, INNOVATIONS AND COMMUNICATIONS Laerte Davi Cleto (MCTIC) Author Secretariat of Computing Policies Senior External Expert + 55 61 2033.7951 / 8403 Vincent Bonneau [email protected] Secretariat of Telecommunications MINISTRY OF PLANNING, DEVELOPMENT AND MANAGEMENT + 55 61 2027.6582 / 6642 [email protected] Ministry Dyogo Oliveira PROJECT COORDINATION UNIT EU-BRAZIL SECTOR DIALOGUES SUPPORT FACILITY Secretary of Management Gleisson Cardoso Rubin Secretariat of Public Management Ministry of Planning, Development and Management Project National Director Telephone: + 55 61 2020.4645/4168/4785 Marcelo Mendes Barbosa [email protected] www.sectordialogues.org 2 3 OTT Regulation OTT © European Union, 2016 Regulation Responsibility -

Comment Submitted by David Crawley Comment View Document

Comment Submitted by David Crawley Comment View document: The notion that my social media identifier should have anything to do with entering the country is a shockingly Nazi like rule. The only thing that this could facilitate anyway is trial by association - something that is known to ensnare innocent people, and not work. What is more this type of thing is repugnant to a free society. The fact that it is optional doesn't help - as we know that this "optional" thing will soon become not-optional. If your intent was to keep it optional - why even bother? Most importantly the rules that we adopt will tend to get adopted by other countries and frankly I don't trust China, India, Turkey or even France all countries that I regularly travel to from the US with this information. We instead should be a beacon for freedom - and we fail in our duty when we try this sort of thing. Asking for social media identifier should not be allowed here or anywhere. Comment Submitted by David Cain Comment View document: I am firmly OPPOSED to the inclusion of a request - even a voluntary one - for social media profiles on the I-94 form. The government should NOT be allowed to dip into users' social media activity, absent probable cause to suspect a crime has been committed. Bad actors are not likely to honor the request. Honest citizens are likely to fill it in out of inertia. This means that the pool of data that is built and retained will serve as a fishing pond for a government looking to control and exploit regular citizens. -

Social Network Site Recruitment 2011

H.R.A. Klerks. Social Network Site Recruitment 2011. Social network site recruitment. Two adaptations of TAM; SNS usage by ‘Gen Y’ job seekers and organizational attractiveness on SNS. A master thesis from the University of Twente, faculty of Management and Governance, Business Administration, March 2011. Student: H.R.A. Klerks, S1001183. Supervisors: dr. M. van Velzen & dr. T. Bondarouk.

Contents

INTRODUCTION
THEORETICAL FRAMEWORK
    THE TECHNOLOGY ACCEPTANCE MODEL
METHODOLOGY
    SAMPLING
    RESEARCH MODEL 1: THE QUESTIONNAIRE
    RESEARCH MODEL 2: THE EXPERIMENT
THEORETICAL BACKGROUND

Economy-Crone-Rozzell-2014.Pdf

Computers in Human Behavior 38 (2014) 272–280. Contents lists available at ScienceDirect. Journal homepage: www.elsevier.com/locate/comphumbeh. Notification pending: Online social support from close and nonclose relational ties via Facebook. Bobby Rozzell a, Cameron W. Piercy a, Caleb T. Carr b, Shawn King a, Brianna L. Lane a, Michael Tornes a, Amy Janan Johnson a, Kevin B. Wright c. a University of Oklahoma, Department of Communication, United States; b Illinois State University, School of Communication, United States; c George Mason University, Department of Communication, United States. Article history: Available online 3 July 2014. Keywords: Network ties; Social support; Computer-mediated communication; Social media. Abstract: Previous research has often assumed social support as a unique affordance of close relationships. Computer-mediated communication alters the availability of relationally nonclose others, and may enable additional sources of social support through venues like social networking sites. Eighty-eight college students completed a questionnaire based on their most recent Facebook status updates and the comments those updates generated. Items queried participants’ perception of each response as well as the participants’ relationship closeness with the responder. Individuals perceived as relationally close provide significant social support via Facebook; however, individuals perceived to be relationally nonclose provided equal social support online. While SNSs have not eroded the importance of close relationships, results demonstrate the social media tools may allow for social support to be obtained from nonclose as well as close relationships, with access to a significant proportion of nonclose relationships. © 2014 Elsevier Ltd. All rights reserved.

Informe De Contenidos Digitales 2011

Preparation and editing: AMETIC. Coordination: FUNCOAS. Expert Forum on Digital Content that collaborated in preparing the report:
• Mr. Martín Pérez Sánchez, Vice President of AMETIC
• Mr. José Pérez García, Director General of AMETIC
• Mr. Antonio Guisasola, President of Promusicae
• Mr. Pedro Pérez, President of FAPAE
• Mr. Ángel García Castillejo, Board Member, Comisión del Mercado de Telecomunicaciones
• Mr. Ignacio Pérez Dolset, President of the Digital Content Sector Area of AMETIC
• Mr. Fernando Davara, Director of FUNCOAS
• Mr. Antonio Fernández Carrasco, Director of the Digital Content Sector Area of AMETIC
• Mr. Juan Zafra, Adviser to the Communications Office of the Presidency of the Government
• Mr. José Ángel Alonso Jiménez, Head of the digital content area of the Subdirectorate General for the Promotion of the Information Society at MITYC
• Ms. Patricia Fernández-Mazarambroz, Deputy Assistant Director General for Intellectual Property at the Ministry of Culture
• Mr. Eladio Gutiérrez Montes, Expert in Audiovisual Media
• Mr. Rafael Sánchez Jiménez, Managing Director of EGEDA
• Mr. Carlos Iglesias Redondo, Secretary General of ADeSe
• Mr. Pedro Martín Jurado, Director of ONTSI at Red.es
• Mr. Alberto Urueña, Head of the studies and foresight area of ONTSI at Red.es
• Ms. Maite Arcos, Director General of REDTEL
• Mr. Ignacio M. Benito García, Director General of AEDE
• Mr. Antonio María Ávila, Executive Director of FGEE
• Ms. Magdalena Vinent, Director General of CEDRO
• Forum Moderator
• Mr. Jorge Pérez