Gumbel-Matrix Routing for Flexible Multi-Task Learning

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

-

History of Writing

History of Writing On present archaeological evidence, full writing appeared in Mesopotamia and Egypt around the same time, in the century or so before 3000 BC. It is probable that it started slightly earlier in Mesopotamia, given the date of the earliest proto-writing on clay tablets from Uruk, circa 3300 BC, and the much longer history of urban development in Mesopotamia compared to the Nile Valley of Egypt. However we cannot be sure about the date of the earliest known Egyptian historical inscription, a monumental slate palette of King Narmer, on which his name is written in two hieroglyphs showing a fish and a chisel. Narmer’s date is insecure, but probably falls in the period 3150 to 3050 BC. In China, full writing first appears on the so-called ‘oracle bones’ of the Shang civilization, found about a century ago at Anyang in north China, dated to 1200 BC. Many of their signs bear an undoubted resemblance to modern Chinese characters, and it is a fairly straightforward task for scholars to read them. However, there are much older signs on the pottery of the Yangshao culture, dating from 5000 to 4000 BC, which may conceivably be precursors of an older form of full Chinese writing, still to be discovered; many areas of China have yet to be archaeologically excavated. In Europe, the oldest full writing is the Linear A script found in Crete in 1900. Linear A dates from about 1750 BC. Although it is undeciphered, its signs closely resemble the somewhat younger, deciphered Linear B script, which is known to be full writing; Linear B was used to write an archaic form of the Greek language. -

Communicator Summer 2015 47

Learn more about Microsoft Word Start to delve into VBA and create your own macros CommunicatorThe Institute of Scientific and Technical Communicators Summer 2015 What’s a technical metaphor? Find out more. Create and implement personalised learning Re-think your accessibility requirements with SVG Discover how a security technology team engaged with customers 46 Global brand success Success with desktop publishing Kavita Kovvali from translate plus takes a look into the role that DTP plays to help organisations maximise their international reach. them, with negative implications for a brand if it’s not. Common Sense Advisory’s survey across three continents about online buying behaviour states that 52% of participants only make online purchases if a website presents information in their language, with many considering this more important than the price of the product.2 Supporting this is an analytical report carried out by the European Commission across 27 EU member states regarding purchasing behaviour. Their survey shows that of their respondents, 9 out of 10 internet users3 said that when given a choice of languages, they always visited a website in their own language, with only a small majority (53%) stating that they would accept using an English language site if their own language wasn’t available. This shows the overwhelming need to communicate to audiences in their local languages. As you can see in this chart4 compiled by Statista, although English has the highest number of total speakers due to its widespread learning across the word, other languages such as Chinese and Hindi have a higher number of native speakers. -

Neo-Aramaic Garshuni: Observations Based on Manuscripts

Hugoye: Journal of Syriac Studies, Vol. 17.1, 17-31 © 2014 by Beth Mardutho: The Syriac Institute and Gorgias Press NEO-ARAMAIC GARSHUNI: OBSERVATIONS BASED ON MANUSCRIPTS EMANUELA BRAIDA UNIVERSITY OF TORONTO* ABSTRACT The present paper is a preliminary study of key spelling features detected in the rendering of foreign words in some Neo-Aramaic Christian texts belonging to the late literary production from the region of Alqosh in Northern Iraq. This Neo-Syriac literature laid the groundwork for its written form as early as the end of the 16th century, when scribes of the so-called ‘school of Alqosh’ wrote down texts for the local population. From a linguistic point of view, scribes and authors developed a Neo-Syriac literary koine based on the vernacular languages of the region of Alqosh. Despite the sporadic presence of certain orthographical conventions, largely influenced by Classical Syriac, these texts lacked a standard orthography and strict conventions in spelling. Since a large number of vernacular terms have strayed over time from the classical language or, in many cases, they have been borrowed from other languages, the rendering of the terms was usually characterized by a mostly phonetic rendition, especially in the case of foreign words that cannot be written according to an original or a standard form. In these cases, scribes and authors had to adapt the Syriac alphabet to * A Post Doctoral Research Fellowship granted by Foreign Affairs and International Trade Canada. I would like to thank my supervisor, Prof. Amir Harrak, for the guidance, encouragement and advice he has provided throughout my time as Post-doctoral fellow. -

A Note on the Phonetic and Graphic Representation of Greek Vowels and of the Spiritus Asper in the Aramaic Transcription of Greek Loanwords Abraham Wasserstein

A Note on the Phonetic and Graphic Representation of Greek Vowels and of the Spiritus Asper in the Aramaic Transcription of Greek Loanwords Abraham Wasserstein Raanana Meridor has probably taught Greek to more men and women in Israel than any other person. Her learning, constantly enriched by intellectual curiosity, enthusiasm and conscientiousness, has for many years been a source of encour- agement to her students and a model to be emulated by her colleagues. It is an honour to be allowed to participate in the tribute offered to her on her retirement by those who are linked to her by friendship, affection and admiration. Ancient transcriptions of Greek (and other) names and loanwords into Semitic alphabets are a notorious source of mistakes and ambiguities. Since these alpha- bets normally use only consonantal signs the vowels of the words in the source language are often liable to be corrupted. This happens routinely in Greek loan- words in Syriac and in rabbinic Hebrew and Aramaic. Thus, though no educated in rabbinic Hebrew being סנהדרין modern Hebrew-speaker has any doubt about derived from the Greek συνέδριον, there cannot have been many occasions since late antiquity on which any non-hellenized Jew has pronounced the first vowel of that word in any way other than if it had been an alpha; indeed I know of no modern western language in which that word, when applied to the Pales- tinian institution of that name is not, normally, = Sanhedrin.1 Though, in principle, it can be stated that practically all vowel sounds can remain unrepresented in Syriac and Jewish Aramaic transcription2 it is true that it is possible to indicate Greek vowels or diphthongs by approximate representa- See below for the internal aspiration in this word. -

Jan Just Witkam & A.G.P. Janson, Multi-Lingual

Multi-Lingual Scholar'^ and Font Scholàr'^, a word-processorand a tool for font design for the student of Orientallanguages A testfu JanJast Vitkam dy A.G.P. Janson* l. Murrr-LTNGUALScHOLAR''. rne Wono-PnocessoR up to l5 printer fonts (5 Roman, 4 Hebrew, 2 Greek.2 Cyrillic and 2 Arabic fonts); thus with these five When comparedto other word-processors,what extras alphabetsone can write, edit and print in dozens of doesthis program offer its usersand what is neededto different languages.The program works in a purely operateit? The most conspicuousextra feature of this graphicalway. so that it offersnumerous other features program is that it enabiesthe userto work on the same which are seldomavailable in other word-processing documentconcurrently with up to five different alpha- software.One manifestexample of suchan extra featu- bets of one'sown choice- provided.of course.that re is the option ol uorking eitheruith largeon-screen they areinstalled. These alphabets can be mixedfreely'. characters(in ,10columns) or nith smallercharacters irrespectiveof the direction of u'riting. These Ín'e (in the usual80 columns).Workin-s *'ith largecharac- alphabetsinclude all sortsof accentsand vow'elsigns. tershas the adranta-qethat all the rouels. accentsand for both Europeanand non-Europeanlanguages. and the iike can be typed and seen on the screen.Using eachhas multiple font sizesand characterstyles. theo- eitherof thesescreen fonts has no consequencesfor the retically up to 30 fonts per document and in practice, way the document will be printed. since the program with the fonts that are suppliedas standardprocedure, recalculatesevery page for printing according to the user'sspecifications. * Multi-Lingual Scholar'' reviewedby Jan Just Witkam; To operateMLS, one needsto have at leastan reN,ÍPC Font Scholar''reviewed by' A.G.P. -

Ms Cairo Syriac 11: a Tri-Lingual Garshuni

Ester Petrosyan MS CAIRO SYRIAC 11: A TRI-LINGUAL GARSHUNI MANUSCRIPT MA Thesis in Comparative History, with a specialization in Interdisciplinary Medieval Studies. CEU eTD Collection Central European University Budapest May 2016 MS CAIRO SYRIAC 11: A TRI-LINGUAL GARSHUNI MANUSCRIPT by Ester Petrosyan (Armenia) Thesis submitted to the Department of Medieval Studies, Central European University, Budapest, in partial fulfillment of the requirements of the Master of Arts degree in Comparative History, with a specialization in Interdisciplinary Medieval Studies. Accepted in conformance with the standards of the CEU. ____________________________________________ Chair, Examination Committee ____________________________________________ Thesis Supervisor CEU eTD Collection ____________________________________________ Examiner ____________________________________________ Examiner MS CAIRO SYRIAC 11: A TRI-LINGUAL GARSHUNI MANUSCRIPT by Ester Petrosyan (Armenia) Thesis submitted to the Department of Medieval Studies, Central European University, Budapest, in partial fulfillment of the requirements of the Master of Arts degree in Comparative History, with a specialization in Interdisciplinary Medieval Studies. Accepted in conformance with the standards of the CEU. ____________________________________________ External Reader CEU eTD Collection MS CAIRO SYRIAC 11: A TRI-LINGUAL GARSHUNI MANUSCRIPT by Ester Petrosyan (Armenia) Thesis submitted to the Department of Medieval Studies, Central European University, Budapest, in partial fulfillment of the -

Accordance Fonts.Pdf

Accordance Fonts (November 2015) Contents Unicode Export . 4 Helena Greek Font . 3 Additional Character Positions for Helena . 3 Helena Greek Font . 4 Sylvanus Uncial/Coptic Font . 5 Sylvanus Uncial Font . 6 Yehudit Hebrew Font . 7 Table of Hebrew Vowels and Other Characters . 7 Yehudit Hebrew Font . 8 Notes on Yehudit Keyboard . 9 Table of Diacritical Characters(not used in the Hebrew Bible) . 9 Table of Accents (Cantillation Marks, Te‘amim) — see notes at end . 9 Notes on the Accent Table . 12 Lakhish PaleoHebrew Font . 13 Lakhish PaleoHebrew Font . 14 Peshitta Syriac Font . 15 Characters in non-standard positions . 15 Peshitta Syriac Font . 16 Syriac vowels, other diacriticals, and punctuation: . 17 Salaam Arabic Font . 18 Characters in non-standard positions . 18 Salaam Arabic Font . .19 Arabic vowels and other diacriticals: . 20 Rosetta Transliteration Font . 21 Character Positions for Rosetta: . 21 MSS Font for Manuscript Citation . 23 MSS Manuscript Font . 26 Page 1 Accordance Fonts Nine fonts are supplied for use with Accordance 11 .1 and up: Helena for Greek, Yehudit for Hebrew, Lakhish for PaleoHebrew, Peshitta for Syriac, Rosetta for transliteration characters, Sylvanus for uncial manuscripts, Salaam for Arabic, and MSS font for manuscript symbols . These fonts are included in the Accordance application bundle, and automatically available to Accordance on Windows and Mac . The fonts can be used in other Mac programs if they are installed into the Fonts folder, but we recommend converting the text to Unicode on export . This is now the default setting, and the only option on Windows . These fonts each include special accents and other characters which occur in various overstrike positions for different characters . -

ALPHABETUM Unicode Font for Ancient Scripts

(VERSION 14.00, March 2020) A UNICODE FONT FOR LINGUISTICS AND ANCIENT LANGUAGES: OLD ITALIC (Etruscan, Oscan, Umbrian, Picene, Messapic), OLD TURKIC, CLASSICAL & MEDIEVAL LATIN, ANCIENT GREEK, COPTIC, GOTHIC, LINEAR B, PHOENICIAN, ARAMAIC, HEBREW, SANSKRIT, RUNIC, OGHAM, MEROITIC, ANATOLIAN SCRIPTS (Lydian, Lycian, Carian, Phrygian and Sidetic), IBERIC, CELTIBERIC, OLD & MIDDLE ENGLISH, CYPRIOT, PHAISTOS DISC, ELYMAIC, CUNEIFORM SCRIPTS (Ugaritic and Old Persian), AVESTAN, PAHLAVI, PARTIAN, BRAHMI, KHAROSTHI, GLAGOLITIC, OLD CHURCH SLAVONIC, OLD PERMIC (ANBUR), HUNGARIAN RUNES and MEDIEVAL NORDIC (Old Norse and Old Icelandic). (It also includes characters for LATIN-based European languages, CYRILLIC-based languages, DEVANAGARI, BENGALI, HIRAGANA, KATAKANA, BOPOMOFO and I.P.A.) USER'S MANUAL ALPHABETUM homepage: http://guindo.pntic.mec.es/jmag0042/alphabet.html Juan-José Marcos Professor of Classics. Plasencia. Spain [email protected] 1 March 2020 TABLE OF CONTENTS Chapter Page 1. Intr oduc tion 3 2. Font installati on 3 3. Encod ing syst em 4 4. So ft ware req uiremen ts 5 5. Unicode co verage in ALP HAB ETUM 5 6. Prec ompo sed cha racters and co mbining diacriticals 6 7. Pri vate Use Ar ea 7 8. Classical Latin 8 9. Anc ient (po lytonic) Greek 12 10. Old & Midd le En glis h 16 11. I.P.A. Internati onal Phon etic Alph abet 17 12. Pub lishing cha racters 17 13. Mi sce llaneous ch aracters 17 14. Espe ran to 18 15. La tin-ba sed Eu ropean lan gua ges 19 16. Cyril lic-ba sed lan gua ges 21 17. Heb rew 22 18. -

Classical Syriac Estrangela Script

Introduction and Alphabet 1 Mark Steven Francois (Ph.D., The University of St. Michael’s College) Classical Syriac Estrangela Script Chapter 1 (Last updated November 20, 2019) 1.1. What is Classical Syriac? 1 1.2. Why Study Classical Syriac 3 1.3. Purpose and Aims of this Textbook 4 1.4. Introduction to the Syriac Alphabet 4 1.5. Writing Letters in the Estrangela Script 7 1.6. Homework 7 1.1. What is Classical Syriac? What is Classical Syriac? The answer to that question will often depend on the interests of the person asking the question as well as the interests of the person answering the question. For the person interested in ancient Semitic languages, Classical Syriac is a late dialect of Aramaic that was widely used in what is now southeastern Turkey, Syria, Iraq, and Iran from the beginning of the common era all of the way into the medieval period and beyond. For the person interested in textual criticism of either the Old Testament or the New Testament, Classical Syriac is the language that was used in a number of important witnesses that we have to the text of both the Old and New Testament. For the person interested in church history, especially the patristic period, Classical Syriac is a welcome and, in some cases, surprising addition to the better known languages that were used to produce important material for the life and faith of the Christian church. For the person interested in the rise and spread of Islam or in the history of the Crusades, Classical Syriac is a language that opens up lesser-known sources that are of incredible value for understanding the people, beliefs, and events associated with these time periods. -

Internationalization and Math

Internationalization and Math Test collection Made by ckepper • English • 2 articles • 156 pages Contents Internationalization 1. Arabic alphabet . 3 2. Bengali alphabet . 27 3. Chinese script styles . 47 4. Hebrew language . 54 5. Iotation . 76 6. Malayalam . 80 Math Formulas 7. Maxwell's equations . 102 8. Schrödinger equation . 122 Appendix 9. Article ourS ces and Contributors . 152 10. Image Sources, Licenses and Contributors . 154 Internationalization Arabic alphabet Arabic Alphabet Type Abjad Languages Arabic Time peri- 356 AD to the present od Egyptian • Proto-Sinaitic ◦ Phoenician Parent ▪ Aramaic systems ▪ Syriac ▪ Nabataean ▪ Arabic Al- phabet Arabic alphabet | Article 1 fo 2 3 َْ Direction Right-to-left األ ْب َج ِد َّية :The Arabic alphabet (Arabic ا ْل ُح ُروف al-ʾabjadīyah al-ʿarabīyah, or ا ْل َع َربِ َّية ISO ْ al-ḥurūf al-ʿarabīyah) or Arabic Arab, 160 ال َع َربِ َّية 15924 abjad is the Arabic script as it is codi- Unicode fied for writing Arabic. It is written Arabic alias from right to left in a cursive style and includes 28 letters. Most letters have • U+0600–U+06FF contextual letterforms. Arabic • U+0750–U+077F Originally, the alphabet was an abjad, Arabic Supplement with only consonants, but it is now con- • U+08A0–U+08FF sidered an "impure abjad". As with other Arabic Extended-A abjads, such as the Hebrew alphabet, • U+FB50–U+FDFF scribes later devised means of indicating Unicode Arabic Presentation vowel sounds by separate vowel diacrit- range Forms-A ics. • U+FE70–U+FEFF Arabic Presentation Consonants Forms-B • U+1EE00–U+1EEFF The basic Arabic alphabet contains 28 Arabic Mathematical letters. -

The Syntax of Chaldean Neo-Aramaic

Florida Atlantic University Undergraduate Research Journal THE SYNTAX OF CHALDEAN NEO-ARAMAIC Catrin Seepo & Michael Hamilton Dorothy F. Schmidt College of Arts and Letters 1. Introduction and the Canaanite branches of Northwest Semitic was relatively recent” (p.6). When the big empires, such as Chaldean Neo-Aramaic is a Semitic language Babylonian, Assyrian, and Persian, used Aramaic as spoken by the Chaldean Christians in Iraq and around their official language, which marked the next period of the world. There are many dialects of Chaldean Neo- Aramaic, and that is the Official, Imperial, or Standard Aramaic, and in this research, I will be examining ACNA, Literary Aramaic. After the falling of the Persian Empire, 1 which is spoken in the town of Alqosh in the Plains regional variations of Aramaic had emerged due to the of Nineveh in the northern part of Iraq. Currently, the lack of language standardization. The third period of language is in decline and is understudied. Therefore, Aramaic, Middle Aramaic, was spoken during the time of this paper attempts to preserve the ACNA by generally Jesus Christ. The time between the second and the ninth describing its syntax, and specifically determining its centuries C.E. marked ‘Late’ Aramaic. Finally, Modern word order, which typically refers to the order of subject Aramaic is currently spoken by most Christians in Iraq (S), verb (V) and object (O) in a sentence. and Syria, southeastern Turkey (near Tur Abdin), and Mandeans in southern Iran (p.6-7). 1.1 Historical Overview Modern Aramaic has various variations, one Chaldean Neo-Aramaic is a northeastern of which is Chaldean Neo-Aramaic. -

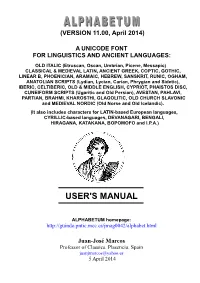

ALPHABETUM Unicode Font for Ancient Scripts

(VERSION 11.00, April 2014) A UNICODE FONT FOR LINGUISTICS AND ANCIENT LANGUAGES: OLD ITALIC (Etruscan, Oscan, Umbrian, Picene, Messapic) CLASSICAL & MEDIEVAL LATIN, ANCIENT GREEK, COPTIC, GOTHIC, LINEAR B, PHOENICIAN, ARAMAIC, HEBREW, SANSKRIT, RUNIC, OGHAM, ANATOLIAN SCRIPTS (Lydian, Lycian, Carian, Phrygian and Sidetic), IBERIC, CELTIBERIC, OLD & MIDDLE ENGLISH, CYPRIOT, PHAISTOS DISC, CUNEIFORM SCRIPTS (Ugaritic and Old Persian), AVESTAN, PAHLAVI, PARTIAN, BRAHMI, KHAROSTHI, GLAGOLITIC, OLD CHURCH SLAVONIC and MEDIEVAL NORDIC (Old Norse and Old Icelandic). (It also includes characters for LATIN-based European languages, CYRILLIC-based languages, DEVANAGARI, BENGALI, HIRAGANA, KATAKANA, BOPOMOFO and I.P.A.) USER'S MANUAL ALPHABETUM homepage: http://guindo.pntic.mec.es/jmag0042/alphabet.html Juan-José Marcos Professor of Classics. Plasencia. Spain [email protected] 5 April 2014 TABLE OF CONTENTS Chapter Page 1. Introduction 3 2. Font installation 3 3. Encoding system 4 4. Software requirements 5 5. Unicode coverage in ALPHABETUM 5 6. Precomposed characters and combining diacriticals 6 7. Private Use Area 7 8. Classical Latin 8 9. Ancient (polytonic) Greek 12 10. Old & Middle English 16 11. I.P.A. International Phonetic Alphabet 17 12. Publishing characters 17 13. Miscellaneous characters 17 14. Esperanto 18 15. Latin-based European languages 19 16. Cyrillic-based languages 21 17. Hebrew 22 18. Devanagari (Sanskrit) 23 19. Bengali 24 20. Hiragana and Katakana 25 21. Bopomofo 26 22. Gothic 27 23. Ogham 28 24. Runic 29 25. Old Nordic 30 26. Old Italic (Etruscan, Oscan, Umbrian, Picene etc) 32 27. Iberic and Celtiberic 37 28. Ugaritic 40 29. Old Persian 40 30. Phoenician 41 31.