Spectral Density Estimation for Random Fields Via Periodic Embeddings

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Moving Average Filters

CHAPTER 15 Moving Average Filters The moving average is the most common filter in DSP, mainly because it is the easiest digital filter to understand and use. In spite of its simplicity, the moving average filter is optimal for a common task: reducing random noise while retaining a sharp step response. This makes it the premier filter for time domain encoded signals. However, the moving average is the worst filter for frequency domain encoded signals, with little ability to separate one band of frequencies from another. Relatives of the moving average filter include the Gaussian, Blackman, and multiple- pass moving average. These have slightly better performance in the frequency domain, at the expense of increased computation time. Implementation by Convolution As the name implies, the moving average filter operates by averaging a number of points from the input signal to produce each point in the output signal. In equation form, this is written: EQUATION 15-1 Equation of the moving average filter. In M &1 this equation, x[ ] is the input signal, y[ ] is ' 1 % y[i] j x [i j ] the output signal, and M is the number of M j'0 points used in the moving average. This equation only uses points on one side of the output sample being calculated. Where x[ ] is the input signal, y[ ] is the output signal, and M is the number of points in the average. For example, in a 5 point moving average filter, point 80 in the output signal is given by: x [80] % x [81] % x [82] % x [83] % x [84] y [80] ' 5 277 278 The Scientist and Engineer's Guide to Digital Signal Processing As an alternative, the group of points from the input signal can be chosen symmetrically around the output point: x[78] % x[79] % x[80] % x[81] % x[82] y[80] ' 5 This corresponds to changing the summation in Eq. -

Measurement Techniques of Ultra-Wideband Transmissions

Rec. ITU-R SM.1754-0 1 RECOMMENDATION ITU-R SM.1754-0* Measurement techniques of ultra-wideband transmissions (2006) Scope Taking into account that there are two general measurement approaches (time domain and frequency domain) this Recommendation gives the appropriate techniques to be applied when measuring UWB transmissions. Keywords Ultra-wideband( UWB), international transmissions, short-duration pulse The ITU Radiocommunication Assembly, considering a) that intentional transmissions from devices using ultra-wideband (UWB) technology may extend over a very large frequency range; b) that devices using UWB technology are being developed with transmissions that span numerous radiocommunication service allocations; c) that UWB technology may be integrated into many wireless applications such as short- range indoor and outdoor communications, radar imaging, medical imaging, asset tracking, surveillance, vehicular radar and intelligent transportation; d) that a UWB transmission may be a sequence of short-duration pulses; e) that UWB transmissions may appear as noise-like, which may add to the difficulty of their measurement; f) that the measurements of UWB transmissions are different from those of conventional radiocommunication systems; g) that proper measurements and assessments of power spectral density are key issues to be addressed for any radiation, noting a) that terms and definitions for UWB technology and devices are given in Recommendation ITU-R SM.1755; b) that there are two general measurement approaches, time domain and frequency domain, with each having a particular set of advantages and disadvantages, recommends 1 that techniques described in Annex 1 to this Recommendation should be considered when measuring UWB transmissions. * Radiocommunication Study Group 1 made editorial amendments to this Recommendation in the years 2018 and 2019 in accordance with Resolution ITU-R 1. -

Spectrum Estimation Using Periodogram, Bartlett and Welch

Spectrum estimation using Periodogram, Bartlett and Welch Guido Schuster Slides follow closely chapter 8 in the book „Statistical Digital Signal Processing and Modeling“ by Monson H. Hayes and most of the figures and formulas are taken from there 1 Introduction • We want to estimate the power spectral density of a wide- sense stationary random process • Recall that the power spectrum is the Fourier transform of the autocorrelation sequence • For an ergodic process the following holds 2 Introduction • The main problem of power spectrum estimation is – The data x(n) is always finite! • Two basic approaches – Nonparametric (Periodogram, Bartlett and Welch) • These are the most common ones and will be presented in the next pages – Parametric approaches • not discussed here since they are less common 3 Nonparametric methods • These are the most commonly used ones • x(n) is only measured between n=0,..,N-1 • Ensures that the values of x(n) that fall outside the interval [0,N-1] are excluded, where for negative values of k we use conjugate symmetry 4 Periodogram • Taking the Fourier transform of this autocorrelation estimate results in an estimate of the power spectrum, known as the Periodogram • This can also be directly expressed in terms of the data x(n) using the rectangular windowed function xN(n) 5 Periodogram of white noise 32 samples 6 Performance of the Periodogram • If N goes to infinity, does the Periodogram converge towards the power spectrum in the mean squared sense? • Necessary conditions – asymptotically unbiased: – variance -

Spatial Domain Low-Pass Filters

Low Pass Filtering Why use Low Pass filtering? • Remove random noise • Remove periodic noise • Reveal a background pattern 1 Effects on images • Remove banding effects on images • Smooth out Img-Img mis-registration • Blurring of image Types of Low Pass Filters • Moving average filter • Median filter • Adaptive filter 2 Moving Ave Filter Example • A single (very short) scan line of an image • {1,8,3,7,8} • Moving Ave using interval of 3 (must be odd) • First number (1+8+3)/3 =4 • Second number (8+3+7)/3=6 • Third number (3+7+8)/3=6 • First and last value set to 0 Two Dimensional Moving Ave 3 Moving Average of Scan Line 2D Moving Average Filter • Spatial domain filter • Places average in center • Edges are set to 0 usually to maintain size 4 Spatial Domain Filter Moving Average Filter Effects • Reduces overall variability of image • Lowers contrast • Noise components reduced • Blurs the overall appearance of image 5 Moving Average images Median Filter The median utilizes the median instead of the mean. The median is the middle positional value. 6 Median Example • Another very short scan line • Data set {2,8,4,6,27} interval of 5 • Ranked {2,4,6,8,27} • Median is 6, central value 4 -> 6 Median Filter • Usually better for filtering • - Less sensitive to errors or extremes • - Median is always a value of the set • - Preserves edges • - But requires more computation 7 Moving Ave vs. Median Filtering Adaptive Filters • Based on mean and variance • Good at Speckle suppression • Sigma filter best known • - Computes mean and std dev for window • - Values outside of +-2 std dev excluded • - If too few values, (<k) uses value to left • - Later versions use weighting 8 Adaptive Filters • Improvements to Sigma filtering - Chi-square testing - Weighting - Local order histogram statistics - Edge preserving smoothing Adaptive Filters 9 Final PowerPoint Numerical Slide Value (The End) 10. -

An Information Theoretic Algorithm for Finding Periodicities in Stellar Light Curves Pablo Huijse, Student Member, IEEE, Pablo A

IEEE TRANSACTIONS ON SIGNAL PROCESSING, VOL. 1, NO. 1, JANUARY 2012 1 An Information Theoretic Algorithm for Finding Periodicities in Stellar Light Curves Pablo Huijse, Student Member, IEEE, Pablo A. Estevez*,´ Senior Member, IEEE, Pavlos Protopapas, Pablo Zegers, Senior Member, IEEE, and Jose´ C. Pr´ıncipe, Fellow Member, IEEE Abstract—We propose a new information theoretic metric aligned to Earth. Periodic drops in brightness are observed for finding periodicities in stellar light curves. Light curves due to the mutual eclipses between the components of the are astronomical time series of brightness over time, and are system. Although most stars have at least some variation in characterized as being noisy and unevenly sampled. The proposed metric combines correntropy (generalized correlation) with a luminosity, current ground based survey estimations indicate periodic kernel to measure similarity among samples separated that 3% of the stars varying more than the sensitivity of the by a given period. The new metric provides a periodogram, called instruments and ∼1% are periodic [2]. Correntropy Kernelized Periodogram (CKP), whose peaks are associated with the fundamental frequencies present in the data. Detecting periodicity and estimating the period of stars is The CKP does not require any resampling, slotting or folding of high importance in astronomy. The period is a key feature scheme as it is computed directly from the available samples. for classifying variable stars [3], [4]; and estimating other CKP is the main part of a fully-automated pipeline for periodic light curve discrimination to be used in astronomical survey parameters such as mass and distance to Earth [5]. -

Windowing Techniques, the Welch Method for Improvement of Power Spectrum Estimation

Computers, Materials & Continua Tech Science Press DOI:10.32604/cmc.2021.014752 Article Windowing Techniques, the Welch Method for Improvement of Power Spectrum Estimation Dah-Jing Jwo1, *, Wei-Yeh Chang1 and I-Hua Wu2 1Department of Communications, Navigation and Control Engineering, National Taiwan Ocean University, Keelung, 202-24, Taiwan 2Innovative Navigation Technology Ltd., Kaohsiung, 801, Taiwan *Corresponding Author: Dah-Jing Jwo. Email: [email protected] Received: 01 October 2020; Accepted: 08 November 2020 Abstract: This paper revisits the characteristics of windowing techniques with various window functions involved, and successively investigates spectral leak- age mitigation utilizing the Welch method. The discrete Fourier transform (DFT) is ubiquitous in digital signal processing (DSP) for the spectrum anal- ysis and can be efciently realized by the fast Fourier transform (FFT). The sampling signal will result in distortion and thus may cause unpredictable spectral leakage in discrete spectrum when the DFT is employed. Windowing is implemented by multiplying the input signal with a window function and windowing amplitude modulates the input signal so that the spectral leakage is evened out. Therefore, windowing processing reduces the amplitude of the samples at the beginning and end of the window. In addition to selecting appropriate window functions, a pretreatment method, such as the Welch method, is effective to mitigate the spectral leakage. Due to the noise caused by imperfect, nite data, the noise reduction from Welch’s method is a desired treatment. The nonparametric Welch method is an improvement on the peri- odogram spectrum estimation method where the signal-to-noise ratio (SNR) is high and mitigates noise in the estimated power spectra in exchange for frequency resolution reduction. -

Windowing Design and Performance Assessment for Mitigation of Spectrum Leakage

E3S Web of Conferences 94, 03001 (2019) https://doi.org/10.1051/e3sconf/20199403001 ISGNSS 2018 Windowing Design and Performance Assessment for Mitigation of Spectrum Leakage Dah-Jing Jwo 1,*, I-Hua Wu 2 and Yi Chang1 1 Department of Communications, Navigation and Control Engineering, National Taiwan Ocean University 2 Pei-Ning Rd., Keelung 202, Taiwan 2 Bison Electronics, Inc., Neihu District, Taipei 114, Taiwan Abstract. This paper investigates the windowing design and performance assessment for mitigation of spectral leakage. A pretreatment method to reduce the spectral leakage is developed. In addition to selecting appropriate window functions, the Welch method is introduced. Windowing is implemented by multiplying the input signal with a windowing function. The periodogram technique based on Welch method is capable of providing good resolution if data length samples are selected optimally. Windowing amplitude modulates the input signal so that the spectral leakage is evened out. Thus, windowing reduces the amplitude of the samples at the beginning and end of the window, altering leakage. The influence of various window functions on the Fourier transform spectrum of the signals was discussed, and the characteristics and functions of various window functions were explained. In addition, we compared the differences in the influence of different data lengths on spectral resolution and noise levels caused by the traditional power spectrum estimation and various window-function-based Welch power spectrum estimations. 1 Introduction knowledge. Due to computer capacity and limitations on processing time, only limited time samples can actually The spectrum analysis [1-4] is a critical concern in many be processed during spectrum analysis and data military and civilian applications, such as performance processing of signals, i.e., the original signals need to be test of electric power systems and communication cut off, which induces leakage errors. -

Understanding the Lomb-Scargle Periodogram

Understanding the Lomb-Scargle Periodogram Jacob T. VanderPlas1 ABSTRACT The Lomb-Scargle periodogram is a well-known algorithm for detecting and char- acterizing periodic signals in unevenly-sampled data. This paper presents a conceptual introduction to the Lomb-Scargle periodogram and important practical considerations for its use. Rather than a rigorous mathematical treatment, the goal of this paper is to build intuition about what assumptions are implicit in the use of the Lomb-Scargle periodogram and related estimators of periodicity, so as to motivate important practical considerations required in its proper application and interpretation. Subject headings: methods: data analysis | methods: statistical 1. Introduction The Lomb-Scargle periodogram (Lomb 1976; Scargle 1982) is a well-known algorithm for de- tecting and characterizing periodicity in unevenly-sampled time-series, and has seen particularly wide use within the astronomy community. As an example of a typical application of this method, consider the data shown in Figure 1: this is an irregularly-sampled timeseries showing a single ob- ject from the LINEAR survey (Sesar et al. 2011; Palaversa et al. 2013), with un-filtered magnitude measured 280 times over the course of five and a half years. By eye, it is clear that the brightness of the object varies in time with a range spanning approximately 0.8 magnitudes, but what is not immediately clear is that this variation is periodic in time. The Lomb-Scargle periodogram is a method that allows efficient computation of a Fourier-like power spectrum estimator from such unevenly-sampled data, resulting in an intuitive means of determining the period of oscillation. -

Quantum Noise and Quantum Measurement

Quantum noise and quantum measurement Aashish A. Clerk Department of Physics, McGill University, Montreal, Quebec, Canada H3A 2T8 1 Contents 1 Introduction 1 2 Quantum noise spectral densities: some essential features 2 2.1 Classical noise basics 2 2.2 Quantum noise spectral densities 3 2.3 Brief example: current noise of a quantum point contact 9 2.4 Heisenberg inequality on detector quantum noise 10 3 Quantum limit on QND qubit detection 16 3.1 Measurement rate and dephasing rate 16 3.2 Efficiency ratio 18 3.3 Example: QPC detector 20 3.4 Significance of the quantum limit on QND qubit detection 23 3.5 QND quantum limit beyond linear response 23 4 Quantum limit on linear amplification: the op-amp mode 24 4.1 Weak continuous position detection 24 4.2 A possible correlation-based loophole? 26 4.3 Power gain 27 4.4 Simplifications for a detector with ideal quantum noise and large power gain 30 4.5 Derivation of the quantum limit 30 4.6 Noise temperature 33 4.7 Quantum limit on an \op-amp" style voltage amplifier 33 5 Quantum limit on a linear-amplifier: scattering mode 38 5.1 Caves-Haus formulation of the scattering-mode quantum limit 38 5.2 Bosonic Scattering Description of a Two-Port Amplifier 41 References 50 1 Introduction The fact that quantum mechanics can place restrictions on our ability to make measurements is something we all encounter in our first quantum mechanics class. One is typically presented with the example of the Heisenberg microscope (Heisenberg, 1930), where the position of a particle is measured by scattering light off it. -

Optimal and Adaptive Subband Beamforming

Optimal and Adaptive Subband Beamforming Principles and Applications Nedelko Grbi´c Ronneby, June 2001 Department of Telecommunications and Signal Processing Blekinge Institute of Technology, S-372 25 Ronneby, Sweden c Nedelko Grbi´c ISBN 91-7295-002-1 ISSN 1650-2159 Published 2001 Printed by Kaserntryckeriet AB Karlskrona 2001 Sweden v Preface This doctoral thesis summarizes my work in the field of array signal processing. Frequency domain processing comprise a significant part of the material. The work is mainly aimed at speech enhancement in communication systems such as confer- ence telephony and handsfree mobile telephony. The work has been carried out at Department of Telecommunications and Signal Processing at Blekinge Institute of Technology. Some of the work has been conducted in collaboration with Ericsson Mobile Communications and Australian Telecommunications Research Institute. The thesis consists of seven stand alone parts: Part I Optimal and Adaptive Beamforming for Speech Signals Part II Structures and Performance Limits in Subband Beamforming Part III Blind Signal Separation using Overcomplete Subband Representation Part IV Neural Network based Adaptive Microphone Array System for Speech Enhancement Part V Design of Oversampled Uniform DFT Filter Banks with Delay Specifica- tion using Quadratic Optimization Part VI Design of Oversampled Uniform DFT Filter Banks with Reduced Inband Aliasing and Delay Constraints Part VII A New Pilot-Signal based Space-Time Adaptive Algorithm vii Acknowledgments I owe sincere gratitude to Professor Sven Nordholm with whom most of my work has been conducted. There have been enormous amount of inspiration and valuable discussions with him. Many thanks also goes to Professor Ingvar Claesson who has made every possible effort to make my study complete and rewarding. -

Review of Smoothing Methods for Enhancement of Noisy Data from Heavy-Duty LHD Mining Machines

E3S Web of Conferences 29, 00011 (2018) https://doi.org/10.1051/e3sconf/20182900011 XVIIth Conference of PhD Students and Young Scientists Review of smoothing methods for enhancement of noisy data from heavy-duty LHD mining machines Jacek Wodecki1, Anna Michalak2, and Paweł Stefaniak2 1Machinery Systems Division, Wroclaw University of Science and Technology, Wroclaw, Poland 2KGHM Cuprum R&D Ltd., Wroclaw, Poland Abstract. Appropriate analysis of data measured on heavy-duty mining machines is essential for processes monitoring, management and optimization. Some particular classes of machines, for example LHD (load-haul-dump) machines, hauling trucks, drilling/bolting machines etc. are characterized with cyclicity of operations. In those cases, identification of cycles and their segments or in other words – simply data segmen- tation is a key to evaluate their performance, which may be very useful from the man- agement point of view, for example leading to introducing optimization to the process. However, in many cases such raw signals are contaminated with various artifacts, and in general are expected to be very noisy, which makes the segmentation task very difficult or even impossible. To deal with that problem, there is a need for efficient smoothing meth- ods that will allow to retain informative trends in the signals while disregarding noises and other undesired non-deterministic components. In this paper authors present a review of various approaches to diagnostic data smoothing. Described methods can be used in a fast and efficient way, effectively cleaning the signals while preserving informative de- terministic behaviour, that is a crucial to precise segmentation and other approaches to industrial data analysis. -

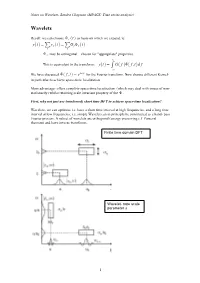

Wavelets T( )= T( ) G F( ) F ,T ( )D F

Notes on Wavelets- Sandra Chapman (MPAGS: Time series analysis) Wavelets Recall: we can choose ! f (t ) as basis on which we expand, ie: y(t ) = " y f (t ) = "G f ! f (t ) f f ! f may be orthogonal – chosen for "appropriate" properties. # This is equivalent to the transform: y(t ) = $ G( f )!( f ,t )d f "# We have discussed !( f ,t ) = e2!ift for the Fourier transform. Now choose different Kernel- in particular to achieve space-time localization. Main advantage- offers complete space-time localization (which may deal with issues of non- stationarity) whilst retaining scale invariant property of the ! . First, why not just use (windowed) short time DFT to achieve space-time localization? Wavelets- we can optimize i.e. have a short time interval at high frequencies, and a long time interval at low frequencies; i.e. simple Wavelet can in principle be constructed as a band- pass Fourier process. A subset of wavelets are orthogonal (energy preserving c.f Parseval theorem) and have inverse transforms. Finite time domain DFT Wavelet- note scale parameter s 1 Notes on Wavelets- Sandra Chapman (MPAGS: Time series analysis) So at its simplest, a wavelet transform is simply a collection of windowed band pass filters applied to the Fourier transform- and this is how wavelet transforms are often computed (as in Matlab). However we will want to impose some desirable properties, invertability (orthogonality) and completeness. Continuous Fourier transform: " m 1 T / 2 2!ifmt !2!ifmt x(t ) = Sme , fm = Sm = x(t )e dt # "!T / 2 m=!" T T ! i( n!m)x with orthogonality: e dx = 2!"mn "!! " x(t ) = # S( f )e2!ift df continuous Fourier transform pair: !" " S( f ) = # x(t )e!2!ift dt !" Continuous Wavelet transform: $ W !,a = x t " * t dt ( ) % ( ) ! ,a ( ) #$ 1 $ & $ ) da x(t) = ( W (!,a)"! ! ,a d! + 2 C % % a " 0 '#$ * 1 $ t # " ' Where the mother wavelet is ! " ,a (t) = ! & ) where ! is the shift parameter and a a % a ( is the scale (dilation) parameter (we can generalize to have a scaling function a(t)).