Moving Average Filters

Recommended publications

Chapter 2 Time Series and Forecasting

2.1 Introduction

Data are frequently recorded at regular time intervals, for instance, daily stock market indices, the monthly rate of inflation or annual profit figures. In this Chapter we think about how to display and model such data. We will consider how to detect trends and seasonal effects and then use these to make forecasts. As well as reviewing the methods covered in MAS1403, we will also consider a class of time series models known as autoregressive moving average models.

Why is this topic useful? Well, making forecasts allows organisations to make better decisions and to plan more efficiently. For instance, reliable forecasts enable a retail outlet to anticipate demand, hospitals to plan staffing levels and manufacturers to keep appropriate levels of inventory.

2.2 Displaying and describing time series

A time series is a collection of observations made sequentially in time. When observations are made continuously, the time series is said to be continuous; when observations are taken only at specific time points, the time series is said to be discrete. In this course we consider only discrete time series, where the observations are taken at equal intervals.

The first step in the analysis of time series is usually to plot the data against time, in a time series plot. Suppose we have the following four-monthly sales figures for Turner's Hangover Cure as described in Practical 2 (in thousands of pounds):

| Year | Jan–Apr | May–Aug | Sep–Dec |
|------|---------|---------|---------|
| 2006 | 8       | 10      | 13      |
| 2007 | 10      | 11      | 14      |
| 2008 | 10      | 11      | 15      |
| 2009 | 11      | 13      | 16      |

We could enter these data into a single column (say column C1) in Minitab, and then click on Graph–Time Series Plot–Simple–OK; entering C1 in Series and then clicking OK gives the graph shown in figure 2.1.
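As a minimal sketch of how a trend might be drawn out of the sales figures above, the following Python snippet computes a centred three-period moving average. The window length of 3 (one full year of four-monthly periods) and the function name `centred_moving_average` are assumptions made for illustration; the excerpt itself only describes plotting the data in Minitab.

```python
def centred_moving_average(values, window=3):
    """Return the centred moving average of `values` for an odd `window`."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    # Each output averages the point itself plus `half` neighbours on each side.
    return [
        sum(values[i - half:i + half + 1]) / window
        for i in range(half, len(values) - half)
    ]

# Four-monthly sales (thousands of pounds), 2006 to 2009, in time order.
sales = [8, 10, 13, 10, 11, 14, 10, 11, 15, 11, 13, 16]

trend = centred_moving_average(sales, window=3)
for period, value in enumerate(trend, start=2):
    print(f"period {period:2d}: {value:.2f}")
```

Because the window spans exactly one year, the within-year pattern is averaged out and the remaining values rise slowly, which is one common way to separate trend from seasonal effects.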

Demand Forecasting

BIZ2121 Production & Operations Management
Sung Joo Bae, Associate Professor, Yonsei University School of Business

Unilever Customer Demand Planning (CDP) System
◦ Statistical information: shipment history, current order information
◦ Demand-planning system with promotional demand increase, and other detailed information (external market research, internal sales projection)
◦ Forecast information is relayed to different distribution channels and other units
◦ Connecting to POS (point-of-sales) data and comparing it to forecast data is a very valuable way to update the system
◦ Results: reduced inventory, better customer service

Forecasting
Forecasts are critical inputs to business plans, annual plans, and budgets. Finance, human resources, marketing, operations, and supply chain managers need forecasts to plan:
◦ output levels
◦ purchases of services and materials
◦ workforce and output schedules
◦ inventories
◦ long-term capacities

Forecasting
Forecasts are made on many different variables
◦ Uncertain variables: competitor strategies, regulatory changes, technological changes, processing times, supplier lead times, quality losses
◦ Different methods are used: judgment, opinions of knowledgeable people, average of experience, regression, and time-series techniques
◦ No forecast is perfect: constant updating of plans is important
Forecasts are important to managing both processes and supply chains
◦ Demand forecast information can be used for coordinating the supply chain inputs, and design of the internal processes (especially

Power Grid Topology Classification Based on Time Domain Analysis

Jia He, Maggie X. Cheng and Mariesa L. Crow, Fellow, IEEE

Abstract: Power system monitoring is significantly improved with the use of Phasor Measurement Units (PMUs). The availability of PMU data and recent advances in machine learning together enable advanced data analysis that is critical for real-time fault detection and diagnosis. This paper focuses on the problem of power line outage. We use a machine learning framework and consider the problem of line outage identification as a classification problem in machine learning. The method is data-driven and does not rely on the results of other analysis such as power flow analysis and solving power system equations. Since it does not involve using power system parameters to solve equations, it is applicable even when such parameters are unavailable. The proposed method uses only voltage phasor angles obtained from PMUs.

... all depend on the correct network topology information. Topology change, no matter what caused it, must be immediately updated. An unattended topology error may lead to cascading power outages and cause large scale blackout ([2], [3]). In this paper, we demonstrate that using a machine learning approach and real-time measurement data we can timely and accurately identify the outage location. We leverage PMU data and consider the task of identifying the location of line outage as a classification problem. The methods can be applied in real-time. Although training a classifier can be time-consuming, the prediction of power line status can be done in real-time.

Logistic-Weighted Regression Improves Decoding of Finger Flexion from Electrocorticographic Signals

Weixuan Chen* (IEEE Student Member), Xilin Liu (IEEE Student Member), and Brian Litt (IEEE Senior Member)

Abstract: One of the most interesting applications of brain computer interfaces (BCIs) is movement prediction. With the development of invasive recording techniques and decoding algorithms in the past ten years, many single neuron-based and electrocorticography (ECoG)-based studies have been able to decode trajectories of limb movements. As the output variables are continuous in these studies, a regression model is commonly used. However, the decoding of limb movements is not a pure regression problem, because the trajectories can be apparently classified into a motion state and a resting state, which result in a binary property overlooked by previous studies. In this paper, we propose an algorithm called logistic-weighted regression to make use of the property, and apply the algorithm to a BCI system decoding flexion of human fingers from ECoG signals.

... linear Wiener filter [7–10]. To take into account the physiological, physical, and mechanical constraints that affect the flexion of limbs, some studies applied switching models [11] or Bayesian models [12,13] to the results of linear regressions above. Other studies have explored the utility of non-linear methods, including neural networks [14–17], multilinear perceptrons [18], and support vector machines [18], but they tend to have difficulty with high dimensional features and limited training data [13].

Nevertheless, the studies of limb movement translation are in fact not pure regression problems, because the limbs are not always under the motion state. Whether it is during an experiment or in the daily life, the resting state of the limbs is

Time Series and Forecasting

Time Series
• A time series is a sequence of measurements over time, usually obtained at equally spaced intervals
– Daily
– Monthly
– Quarterly
– Yearly

Time Series Example
[Figure: Dow Jones Industrial Average, closing value against date, 1/3/00 to 9/3/03]

Components of a Time Series
• Secular Trend
– Linear
– Nonlinear
• Cyclical Variation
– Rises and falls over periods longer than one year
• Seasonal Variation
– Patterns of change within a year, typically repeating themselves
• Residual Variation

Components of a Time Series
$Y_t = T_t + C_t + S_t + R_t$

Time Series with Linear Trend
$Y_t = a + b\,t + e_t$

[Figure: AOL Subscribers, number of subscribers (millions) by quarter, 1995 to 2000]
[Figure: Average Daily Visits in August to Emergency Room at Richmond Memorial Hospital, average daily visits by year]

Time Series with Nonlinear Trend
[Figure: Imports (MM) by year, 1986 to 1998]
• Data that increase by a constant amount at each successive time period show a linear trend.
• Data that increase by increasing amounts at each successive time period show a curvilinear trend.
• Data that increase by an equal percentage at each successive time period can be made linear by applying a logarithmic transformation.
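The last point above can be checked numerically. The sketch below uses a made-up series that grows by 10% per period (the starting value of 100 and the growth rate are assumptions chosen only for illustration) and shows that its raw increments keep growing while the increments of its logarithm are constant, which is what a linear trend looks like.

```python
import math

def log_differences(series):
    """Differences between successive natural logs of a positive series."""
    logs = [math.log(y) for y in series]
    return [b - a for a, b in zip(logs, logs[1:])]

# Hypothetical series: starts at 100 and grows by 10% each period.
growth = [100 * 1.10 ** t for t in range(8)]

raw_steps = [b - a for a, b in zip(growth, growth[1:])]
log_steps = log_differences(growth)

# The raw increments get larger every period (curvilinear trend) ...
print("raw increments:", [round(s, 2) for s in raw_steps])
# ... while the log increments all equal ln(1.10) (linear trend in log Y).
print("log increments:", [round(s, 4) for s in log_steps])
```

After the transformation, a straight line of the form $\log Y_t = a + b\,t$ can be fitted, with the slope $b$ equal to the log of one plus the growth rate.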

Discrete-Time Fourier Transform 7

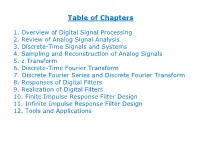

Table of Chapters
1. Overview of Digital Signal Processing
2. Review of Analog Signal Analysis
3. Discrete-Time Signals and Systems
4. Sampling and Reconstruction of Analog Signals
5. z Transform
6. Discrete-Time Fourier Transform
7. Discrete Fourier Series and Discrete Fourier Transform
8. Responses of Digital Filters
9. Realization of Digital Filters
10. Finite Impulse Response Filter Design
11. Infinite Impulse Response Filter Design
12. Tools and Applications

Chapter 1: Overview of Digital Signal Processing

Chapter Intended Learning Outcomes:
(i) Understand basic terminology in digital signal processing
(ii) Differentiate digital signal processing and analog signal processing
(iii) Describe basic digital signal processing application areas

H. C. So

Signal:
. Anything that conveys information, e.g.,
. Speech
. Electrocardiogram (ECG)
. Radar pulse
. DNA sequence
. Stock price
. Code division multiple access (CDMA) signal
. Image
. Video

[Fig. 1.1: Speech, vowel of "a", against time (s)]
[Fig. 1.2: ECG against time (s)]
[Fig. 1.3: Transmitted & received radar waveforms, transmitted pulse and received pulse against time]

Radar transceiver sends a 1-D sinusoidal pulse at time 0
It then receives echo reflected by an object at a range of
Reflected signal is noisy and has a time delay of which corresponds to round trip propagation time of radar pulse
Given the signal propagation speed, denoted by , is simply related to as: (1.1)
As a result, the radar pulse contains the object range information

Comparative Analysis of Gaussian Filter with Wavelet Denoising for Various Noises Present in Images

Indian Journal of Science and Technology, Vol 9(47), DOI: 10.17485/ijst/2016/v9i47/106843, December 2016
ISSN (Print): 0974-6846, ISSN (Online): 0974-5645

Amanjot Singh1,2* and Jagroop Singh3
1 I.K.G. P.T.U., Jalandhar – 144603, Punjab, India
2 School of Electronics and Electrical Engineering, Lovely Professional University, Phagwara – 144411, Punjab, India; [email protected]
3 Department of Electronics and Communication Engineering, DAVIET, Jalandhar – 144008, Punjab, India; [email protected]

Abstract

Objectives: This paper provides a comparative performance analysis of wavelet denoising and the Gaussian filter applied to images contaminated with various noises. The Gaussian filter is a basic filter used in image processing. Its response varies with its kernel size, which is also shown in the analysis. Wavelet based de-noising is also one of the ways of removing the various noises usually present in images.

Methods/Analysis: The wavelet transform is used to convert the images to the wavelet domain. Based on thresholding operations in the wavelet domain, noise can be removed from images. In this paper, image quality metrics like PSNR and MSE have been compared for the various types of noise in images for different denoising methods. Moreover, the behavior of the different methods for image denoising has been shown graphically with MATLAB based simulations.

Findings: In the end, wavelet based de-noising methods have been compared with the Gaussian based filter. The paper provides a review of filters and their denoising analysis under different noise conditions.

Keywords: Denoising, Gaussian Filter, MSE, PSNR, SNR, Thresholding, Wavelet Transform

1. Introduction

... is of bell shaped.

MPA15-16 a Baseband Pulse Shaping Filter for Gaussian Minimum Shift Keying

N. Krishnapura (1), S. Pavan (2), C. Mathiazhagan (3), B. Ramamurthi (3)
(1) Department of Electrical Engineering, Columbia University, New York, NY 10027, USA
(2) Texas Instruments, Edison, NJ 08837, USA
(3) Department of Electrical Engineering, Indian Institute of Technology, Chennai, 600036, India
Email: [email protected]

ABSTRACT
Gaussian pulse shaping is used in digital communication systems like DECT, GSM, WLAN to minimize the out of band spectral energy. The baseband rectangular pulse stream is passed through a filter with a Gaussian impulse response before frequency modulating the carrier. Traditionally this is done by storing the values of the pulse shape in a ROM and converting it to an analog waveform with a DAC followed by a smoothing filter. This paper explores a fully analog implementation of an integrated Gaussian pulse shaper, which can result in a reduced power consumption and chip area.

... measurement results. A quadrature modulation scheme to realize the same function as Fig. 1 can be derived. In this paper, we consider only the scheme shown in Fig. 1.

2. GAUSSIAN FREQUENCY SHIFT KEYING (GFSK)
The output of the system shown in Fig. 1 can be described by

$$y(t) = \cos\left(2\pi f_c t + 2\pi k_f \int_{-\infty}^{t} g(\tau)\, d\tau\right) \qquad (1)$$

where $f_c$ is the unmodulated carrier frequency, $k_f$ is the modulating index ($k_f = 0.25$ for Gaussian Minimum Shift Keying, GMSK [1]) and $g$ denotes the convolution of the rectangular bit stream $b(t)$ with values in $\{-1, 1\}$

Random Signals

Chapter 8 RANDOM SIGNALS

Signals can be divided into two main categories: deterministic and random. The term random signal is used primarily to denote signals whose source is random in nature. An example is thermal noise, which is created by the random movement of electrons in an electric conductor. Apart from this, the term random signal is also used for signals falling into other categories, such as periodic signals, which have one or several parameters that behave randomly. An example is a periodic sinusoidal signal with a random phase or amplitude.

Signals can be treated either as deterministic or random, depending on the application. Speech, for example, can be considered a deterministic signal if one specific speech waveform is considered. It can also be viewed as a random process if one considers the ensemble of all possible speech waveforms in order to design a system that will optimally process speech signals in general.

The behavior of stochastic signals can be described only in the mean. The description of such signals is as a rule based on terms and concepts borrowed from probability theory. Signals are, however, functions of time, and such a description quickly becomes difficult to manage and impractical. Only a fraction of the signals, known as ergodic, can be handled in a relatively simple way. Among the signals excluded is the class of non-stationary signals, which nevertheless play an essential part in practice.

Working in the frequency domain is a powerful technique in signal processing. While the spectrum is directly related to deterministic signals, the spectrum of a random signal is defined through its correlation function.

Designing Filters Using the Digital Filter Design Toolkit Rahman Jamal, Mike Cerna, John Hanks

NATIONAL INSTRUMENTS®, The Software is the Instrument®
Application Note 097

Introduction

The importance of digital filters is well established. Digital filters, and more generally digital signal processing algorithms, are classified as discrete-time systems. They are commonly implemented on a general purpose computer or on a dedicated digital signal processing (DSP) chip. Due to their well-known advantages, digital filters are often replacing classical analog filters. In this application note, we introduce a new digital filter design and analysis tool implemented in LabVIEW with which developers can graphically design classical IIR and FIR filters, interactively review filter responses, and save filter coefficients. In addition, real-world filter testing can be performed within the digital filter design application using a plug-in data acquisition board.

Digital Filter Design Process

Digital filters are used in a wide variety of signal processing applications, such as spectrum analysis, digital image processing, and pattern recognition. Digital filters eliminate a number of problems associated with their classical analog counterparts and thus are preferably used in place of analog filters. Digital filters belong to the class of discrete-time LTI (linear time invariant) systems, which are characterized by the properties of causality, recursibility, and stability. They can be characterized in the time domain by their unit-impulse response, and in the transform domain by their transfer function. Obviously, the unit-impulse response sequence of a causal LTI system could be of either finite or infinite duration and this property determines their classification into either finite impulse response (FIR) or infinite impulse response (IIR) system.

Finite Impulse Response (FIR) Digital Filters (II) Ideal Impulse Response Design Examples Yogananda Isukapalli

• FIR Filter Design Problem

Given $H(z)$ or $H(e^{j\omega})$, find filter coefficients $\{b_0, b_1, b_2, \ldots, b_{N-1}\}$, which are equal to $\{h_0, h_1, h_2, \ldots, h_{N-1}\}$ in the case of FIR filters.

[Figure: tapped delay line with input x[n], unit delays z^{-1}, tap weights h_0 to h_{N-1}, and output y[n]]

Consider a general (infinite impulse response) definition:

$$H(z) = \sum_{n=-\infty}^{\infty} h[n]\, z^{-n}$$

From complex variable theory, the inverse transform is:

$$h[n] = \frac{1}{2\pi j} \oint_C H(z)\, z^{n-1}\, dz$$

where $C$ is a counterclockwise closed contour in the region of convergence of $H(z)$ and encircling the origin of the z-plane.

• Evaluating $H(z)$ on the unit circle ($z = e^{j\omega}$):

$$H(e^{j\omega}) = \sum_{n=-\infty}^{\infty} h[n]\, e^{-jn\omega}$$

$$h[n] = \frac{1}{2\pi} \int_{-\pi}^{\pi} H(e^{j\omega})\, e^{jn\omega}\, d\omega, \qquad \text{where } dz = j e^{j\omega}\, d\omega$$

• Design of an ideal low pass FIR digital filter

[Figure: ideal low-pass response H(e^{jω}) of height K between -ω_c and ω_c, plotted for ω from -2π to 2π]

Find the ideal low pass impulse response $\{h[n]\}$:

$$h_{LP}[n] = \frac{1}{2\pi} \int_{-\pi}^{\pi} H(e^{j\omega})\, e^{jn\omega}\, d\omega = \frac{1}{2\pi} \int_{-\omega_c}^{\omega_c} K e^{jn\omega}\, d\omega$$

Hence

$$h_{LP}[n] = \frac{K}{n\pi} \sin(n\omega_c), \qquad n = 0, \pm 1, \pm 2, \ldots, \pm\infty$$

Let $K = 1$, $\omega_c = \pi/4$, $n = 0, \pm 1, \ldots, \pm 10$. The impulse response coefficients are

| n   | h[n]   |
|-----|--------|
| 0   | 0.25   |
| ±1  | 0.225  |
| ±2  | 0.159  |
| ±3  | 0.075  |
| ±4  | 0      |
| ±5  | -0.045 |
| ±6  | -0.053 |
| ±7  | -0.032 |
| ±8  | 0      |
| ±9  | 0.025  |
| ±10 | 0.032  |

Non Causal FIR Impulse Response

We can make it causal if we shift $h_{LP}[n]$ by 10 units to the right:

$$h_{LP}[n] = \frac{K}{(n-10)\pi} \sin\big((n-10)\,\omega_c\big), \qquad n = 0, 1, 2, \ldots$$
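The coefficient calculation above can be reproduced with a few lines of Python. This is only a sketch under the slide's stated values (K = 1, cutoff π/4, truncation to |n| ≤ 10); the function names `ideal_lowpass` and `causal_lowpass_taps` are made up for illustration, and the n = 0 case is handled by the limit K·ω_c/π, since the closed form divides by n.

```python
import math

def ideal_lowpass(n, cutoff, gain=1.0):
    """h_LP[n] = K * sin(n * wc) / (n * pi), using the limit K * wc / pi at n = 0."""
    if n == 0:
        return gain * cutoff / math.pi
    return gain * math.sin(n * cutoff) / (n * math.pi)

def causal_lowpass_taps(half_length, cutoff, gain=1.0):
    """Keep |n| <= half_length, then shift right by half_length samples."""
    return [
        ideal_lowpass(n - half_length, cutoff, gain)
        for n in range(2 * half_length + 1)
    ]

# Values from the example: K = 1, wc = pi/4, shift of 10 samples (21 taps).
taps = causal_lowpass_taps(half_length=10, cutoff=math.pi / 4)
for n, h in enumerate(taps):
    print(f"h[{n:2d}] = {h:+.3f}")
```

The shifted taps are symmetric about n = 10, so the causal filter keeps the magnitude response of the truncated non-causal one and adds a pure delay of 10 samples.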

Signals and Systems Lecture 8: Finite Impulse Response Filters

Dr. Guillaume Ducard, Fall 2018
based on materials from: Prof. Dr. Raffaello D'Andrea
Institute for Dynamic Systems and Control, ETH Zurich, Switzerland

Outline
1 Finite Impulse Response Filters
   Definition
   General Properties
2 Moving Average (MA) Filter
   MA filter as a simple low-pass filter
   Fast MA filter implementation
   Weighted Moving Average Filter
3 Non-Causal Moving Average Filter
   Non-Causal Moving Average Filter
   Non-Causal Weighted Moving Average Filter
4 Important Considerations
   Phase is Important
   Differentiation using FIR Filters
   Frequency-domain observations
   Higher derivatives

FIR filters: definition

The class of causal, LTI finite impulse response (FIR) filters can be captured by the difference equation

$$y[n] = \sum_{k=0}^{M-1} b_k\, u[n-k],$$

where
1 $M$ is the number of filter coefficients (also known as filter length),
2 $M - 1$ is often referred to as the filter order,
3 and $b_k \in \mathbb{R}$ are the filter coefficients that describe the dependence on current and previous inputs.
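A direct, unoptimized reading of this difference equation can be sketched in Python as follows. The function names are hypothetical, inputs before n = 0 are assumed to be zero, and the equal-weight choice b_k = 1/M is the usual moving average filter rather than something spelled out in the excerpt.

```python
def fir_filter(b, u):
    """Apply y[n] = sum_{k=0}^{M-1} b[k] * u[n-k], taking u[n] = 0 for n < 0."""
    y = []
    for n in range(len(u)):
        acc = 0.0
        for k, bk in enumerate(b):
            if n - k >= 0:  # skip samples before the start of the input
                acc += bk * u[n - k]
        y.append(acc)
    return y

def moving_average_coefficients(M):
    """Equal weights 1/M: filter length M, filter order M - 1."""
    return [1.0 / M] * M

# A short pulse of height 3 followed by zeros, filtered with M = 3.
u = [3.0, 3.0, 3.0, 3.0, 0.0, 0.0]
y = fir_filter(moving_average_coefficients(3), u)
print(y)
```

The output ramps up over the first M − 1 samples and ramps down after the pulse ends, which illustrates both the smoothing and the delay introduced by a causal moving average.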