Spectrum Estimation Using Periodogram, Bartlett and Welch



Recommended publications


An Information Theoretic Algorithm for Finding Periodicities in Stellar Light Curves

IEEE TRANSACTIONS ON SIGNAL PROCESSING, VOL. 1, NO. 1, JANUARY 2012

An Information Theoretic Algorithm for Finding Periodicities in Stellar Light Curves

Pablo Huijse, Student Member, IEEE, Pablo A. Estévez*, Senior Member, IEEE, Pavlos Protopapas, Pablo Zegers, Senior Member, IEEE, and José C. Príncipe, Fellow Member, IEEE

Abstract: We propose a new information theoretic metric for finding periodicities in stellar light curves. Light curves are astronomical time series of brightness over time, and are characterized as being noisy and unevenly sampled. The proposed metric combines correntropy (generalized correlation) with a periodic kernel to measure similarity among samples separated by a given period. The new metric provides a periodogram, called Correntropy Kernelized Periodogram (CKP), whose peaks are associated with the fundamental frequencies present in the data. The CKP does not require any resampling, slotting or folding scheme as it is computed directly from the available samples. CKP is the main part of a fully-automated pipeline for periodic light curve discrimination to be used in astronomical survey ...

... aligned to Earth. Periodic drops in brightness are observed due to the mutual eclipses between the components of the system. Although most stars have at least some variation in luminosity, current ground based survey estimations indicate that 3% of the stars varying more than the sensitivity of the instruments and ∼1% are periodic [2].

Detecting periodicity and estimating the period of stars is of high importance in astronomy. The period is a key feature for classifying variable stars [3], [4]; and estimating other parameters such as mass and distance to Earth [5].

Windowing Techniques, the Welch Method for Improvement of Power Spectrum Estimation

Computers, Materials & Continua, Tech Science Press. DOI:10.32604/cmc.2021.014752. Article.

Windowing Techniques, the Welch Method for Improvement of Power Spectrum Estimation

Dah-Jing Jwo1,*, Wei-Yeh Chang1 and I-Hua Wu2
1 Department of Communications, Navigation and Control Engineering, National Taiwan Ocean University, Keelung, 202-24, Taiwan
2 Innovative Navigation Technology Ltd., Kaohsiung, 801, Taiwan
* Corresponding Author: Dah-Jing Jwo. Email: [email protected]
Received: 01 October 2020; Accepted: 08 November 2020

Abstract: This paper revisits the characteristics of windowing techniques with various window functions involved, and successively investigates spectral leakage mitigation utilizing the Welch method. The discrete Fourier transform (DFT) is ubiquitous in digital signal processing (DSP) for the spectrum analysis and can be efficiently realized by the fast Fourier transform (FFT). The sampling signal will result in distortion and thus may cause unpredictable spectral leakage in discrete spectrum when the DFT is employed. Windowing is implemented by multiplying the input signal with a window function and windowing amplitude modulates the input signal so that the spectral leakage is evened out. Therefore, windowing processing reduces the amplitude of the samples at the beginning and end of the window. In addition to selecting appropriate window functions, a pretreatment method, such as the Welch method, is effective to mitigate the spectral leakage. Due to the noise caused by imperfect, finite data, the noise reduction from Welch's method is a desired treatment. The nonparametric Welch method is an improvement on the periodogram spectrum estimation method where the signal-to-noise ratio (SNR) is high and mitigates noise in the estimated power spectra in exchange for frequency resolution reduction.
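The Welch procedure summarized in this abstract (split the record into overlapping segments, window each one, average the resulting periodograms) can be sketched in a few lines. This is a minimal illustration using only the Python standard library, not the authors' implementation; the function names, the Hann window, the 50% overlap, the segment length and the test signal are all assumptions chosen for the example.

```python
import cmath
import math
import random


def hann(m):
    """Periodic Hann window of length m."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / m) for i in range(m)]


def modified_periodogram(segment, window):
    """Windowed periodogram of one segment at the DFT frequencies k/m."""
    m = len(segment)
    power = sum(w * w for w in window)  # window energy, used for normalization
    tapered = [s * w for s, w in zip(segment, window)]
    out = []
    for k in range(m // 2 + 1):
        acc = sum(v * cmath.exp(-2j * math.pi * k * t / m)
                  for t, v in enumerate(tapered))
        out.append(abs(acc) ** 2 / power)
    return out


def welch(x, seg_len, overlap):
    """Average the modified periodograms of overlapping segments."""
    step = seg_len - overlap
    window = hann(seg_len)
    starts = range(0, len(x) - seg_len + 1, step)
    spectra = [modified_periodogram(x[s:s + seg_len], window) for s in starts]
    return [sum(col) / len(spectra) for col in zip(*spectra)]


random.seed(0)
n = 512
# Hypothetical test signal: a tone at 0.125 cycles/sample in unit-variance white noise.
x = [math.sin(2 * math.pi * 0.125 * t) + random.gauss(0.0, 1.0) for t in range(n)]
psd = welch(x, seg_len=64, overlap=32)
peak_bin = max(range(len(psd)), key=psd.__getitem__)
print("peak at", peak_bin / 64, "cycles/sample")
```

Shorter segments give more periodograms to average, which lowers the variance of the estimate, at the cost of a coarser frequency grid; that is the resolution trade-off the abstract mentions.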

Windowing Design and Performance Assessment for Mitigation of Spectrum Leakage

E3S Web of Conferences 94, 03001 (2019), https://doi.org/10.1051/e3sconf/20199403001, ISGNSS 2018

Windowing Design and Performance Assessment for Mitigation of Spectrum Leakage

Dah-Jing Jwo 1,*, I-Hua Wu 2 and Yi Chang 1
1 Department of Communications, Navigation and Control Engineering, National Taiwan Ocean University, 2 Pei-Ning Rd., Keelung 202, Taiwan
2 Bison Electronics, Inc., Neihu District, Taipei 114, Taiwan

Abstract. This paper investigates the windowing design and performance assessment for mitigation of spectral leakage. A pretreatment method to reduce the spectral leakage is developed. In addition to selecting appropriate window functions, the Welch method is introduced. Windowing is implemented by multiplying the input signal with a windowing function. The periodogram technique based on Welch method is capable of providing good resolution if data length samples are selected optimally. Windowing amplitude modulates the input signal so that the spectral leakage is evened out. Thus, windowing reduces the amplitude of the samples at the beginning and end of the window, altering leakage. The influence of various window functions on the Fourier transform spectrum of the signals was discussed, and the characteristics and functions of various window functions were explained. In addition, we compared the differences in the influence of different data lengths on spectral resolution and noise levels caused by the traditional power spectrum estimation and various window-function-based Welch power spectrum estimations.

1 Introduction

The spectrum analysis [1-4] is a critical concern in many military and civilian applications, such as performance test of electric power systems and communication ...

... knowledge. Due to computer capacity and limitations on processing time, only limited time samples can actually be processed during spectrum analysis and data processing of signals, i.e., the original signals need to be cut off, which induces leakage errors.
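The effect of a window on leakage can be seen with a single tone whose frequency falls between two DFT bins. The sketch below is illustrative only: it compares a rectangular window (plain truncation) with a Hann window, and the record length, tone frequency, guard width and helper names are assumptions made for this example rather than values from the paper.

```python
import cmath
import math


def dft_magnitudes(x):
    """Magnitudes of the DFT of x for bins 0..n/2."""
    n = len(x)
    return [abs(sum(v * cmath.exp(-2j * math.pi * k * t / n)
                    for t, v in enumerate(x)))
            for k in range(n // 2 + 1)]


def far_leakage_db(mags, peak_bin, guard):
    """Largest magnitude more than `guard` bins from the peak, in dB relative to the peak."""
    peak = mags[peak_bin]
    far = max(m for k, m in enumerate(mags) if abs(k - peak_bin) > guard)
    return 20 * math.log10(far / peak)


n = 64
f0 = 10.5 / n  # hypothetical tone halfway between DFT bins 10 and 11
x = [math.cos(2 * math.pi * f0 * t) for t in range(n)]
hann = [0.5 - 0.5 * math.cos(2 * math.pi * t / n) for t in range(n)]

results = {}
for name, w in (("rectangular", [1.0] * n), ("hann", hann)):
    mags = dft_magnitudes([a * b for a, b in zip(x, w)])
    peak_bin = max(range(len(mags)), key=mags.__getitem__)
    results[name] = far_leakage_db(mags, peak_bin, guard=8)

for name, level in results.items():
    print(f"{name}: far leakage {level:.1f} dB relative to peak")
```

The Hann window tapers the ends of the record toward zero, so the truncation discontinuity is smaller and far less energy spreads into distant bins; the price is a wider main lobe.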

Understanding the Lomb-Scargle Periodogram

Understanding the Lomb-Scargle Periodogram

Jacob T. VanderPlas1

ABSTRACT

The Lomb-Scargle periodogram is a well-known algorithm for detecting and characterizing periodic signals in unevenly-sampled data. This paper presents a conceptual introduction to the Lomb-Scargle periodogram and important practical considerations for its use. Rather than a rigorous mathematical treatment, the goal of this paper is to build intuition about what assumptions are implicit in the use of the Lomb-Scargle periodogram and related estimators of periodicity, so as to motivate important practical considerations required in its proper application and interpretation.

Subject headings: methods: data analysis - methods: statistical

1. Introduction

The Lomb-Scargle periodogram (Lomb 1976; Scargle 1982) is a well-known algorithm for detecting and characterizing periodicity in unevenly-sampled time-series, and has seen particularly wide use within the astronomy community. As an example of a typical application of this method, consider the data shown in Figure 1: this is an irregularly-sampled timeseries showing a single object from the LINEAR survey (Sesar et al. 2011; Palaversa et al. 2013), with un-filtered magnitude measured 280 times over the course of five and a half years. By eye, it is clear that the brightness of the object varies in time with a range spanning approximately 0.8 magnitudes, but what is not immediately clear is that this variation is periodic in time. The Lomb-Scargle periodogram is a method that allows efficient computation of a Fourier-like power spectrum estimator from such unevenly-sampled data, resulting in an intuitive means of determining the period of oscillation.
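To make the idea concrete, the following sketch evaluates the classical (unnormalized) Lomb-Scargle power on a frequency grid for irregularly sampled data. It is a direct, slow evaluation of the textbook formula and not the fast algorithm discussed in the paper; the function name, the synthetic data and the frequency grid are assumptions made for this illustration.

```python
import math
import random


def lomb_scargle(t, y, freqs):
    """Classical Lomb-Scargle power at each frequency (cycles per unit time)."""
    mean = sum(y) / len(y)
    yc = [v - mean for v in y]
    power = []
    for f in freqs:
        w = 2 * math.pi * f
        # The time offset tau makes the sine and cosine terms orthogonal on these samples.
        tau = math.atan2(sum(math.sin(2 * w * ti) for ti in t),
                         sum(math.cos(2 * w * ti) for ti in t)) / (2 * w)
        c = [math.cos(w * (ti - tau)) for ti in t]
        s = [math.sin(w * (ti - tau)) for ti in t]
        yc_c = sum(a * b for a, b in zip(yc, c))
        yc_s = sum(a * b for a, b in zip(yc, s))
        power.append(0.5 * (yc_c ** 2 / sum(a * a for a in c)
                            + yc_s ** 2 / sum(a * a for a in s)))
    return power


random.seed(1)
# Hypothetical observations: 100 random times in [0, 20], true frequency 0.7.
t = sorted(random.uniform(0.0, 20.0) for _ in range(100))
y = [math.sin(2 * math.pi * 0.7 * ti) + random.gauss(0.0, 0.1) for ti in t]

freqs = [0.05 + 0.01 * i for i in range(200)]
power = lomb_scargle(t, y, freqs)
best = freqs[max(range(len(freqs)), key=power.__getitem__)]
print("estimated frequency:", round(best, 2))
```

No resampling onto a regular grid is needed: every sum runs over the actual observation times.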

Optimal and Adaptive Subband Beamforming

Optimal and Adaptive Subband Beamforming: Principles and Applications

Nedelko Grbić
Ronneby, June 2001
Department of Telecommunications and Signal Processing, Blekinge Institute of Technology, S-372 25 Ronneby, Sweden

© Nedelko Grbić. ISBN 91-7295-002-1. ISSN 1650-2159. Published 2001. Printed by Kaserntryckeriet AB, Karlskrona 2001, Sweden.

Preface

This doctoral thesis summarizes my work in the field of array signal processing. Frequency domain processing comprise a significant part of the material. The work is mainly aimed at speech enhancement in communication systems such as conference telephony and handsfree mobile telephony. The work has been carried out at Department of Telecommunications and Signal Processing at Blekinge Institute of Technology. Some of the work has been conducted in collaboration with Ericsson Mobile Communications and Australian Telecommunications Research Institute.

The thesis consists of seven stand alone parts:
Part I: Optimal and Adaptive Beamforming for Speech Signals
Part II: Structures and Performance Limits in Subband Beamforming
Part III: Blind Signal Separation using Overcomplete Subband Representation
Part IV: Neural Network based Adaptive Microphone Array System for Speech Enhancement
Part V: Design of Oversampled Uniform DFT Filter Banks with Delay Specification using Quadratic Optimization
Part VI: Design of Oversampled Uniform DFT Filter Banks with Reduced Inband Aliasing and Delay Constraints
Part VII: A New Pilot-Signal based Space-Time Adaptive Algorithm

Acknowledgments

I owe sincere gratitude to Professor Sven Nordholm with whom most of my work has been conducted. There have been enormous amount of inspiration and valuable discussions with him. Many thanks also goes to Professor Ingvar Claesson who has made every possible effort to make my study complete and rewarding.

Introduction to Time Series Analysis. Lecture 19

Introduction to Time Series Analysis. Lecture 19.

1. Review: Spectral density estimation, sample autocovariance.
2. The periodogram and sample autocovariance.
3. Asymptotics of the periodogram.

Estimating the Spectrum: Outline

• We have seen that the spectral density gives an alternative view of stationary time series.
• Given a realization $x_1, \ldots, x_n$ of a time series, how can we estimate the spectral density?
• One approach: replace $\gamma(\cdot)$ in the definition $f(\nu) = \sum_{h=-\infty}^{\infty} \gamma(h) e^{-2\pi i \nu h}$ with the sample autocovariance $\hat\gamma(\cdot)$.
• Another approach, called the periodogram: compute $I(\nu)$, the squared modulus of the discrete Fourier transform (at frequencies $\nu = k/n$).

Estimating the spectrum: Outline

• These two approaches are identical at the Fourier frequencies $\nu = k/n$.
• The asymptotic expectation of the periodogram $I(\nu)$ is $f(\nu)$. We can derive some asymptotic properties, and hence do hypothesis testing.
• Unfortunately, the asymptotic variance of $I(\nu)$ is constant. It is not a consistent estimator of $f(\nu)$.

Review: Spectral density estimation

If a time series $\{X_t\}$ has autocovariance $\gamma$ satisfying $\sum_{h=-\infty}^{\infty} |\gamma(h)| < \infty$, then we define its spectral density as

$$f(\nu) = \sum_{h=-\infty}^{\infty} \gamma(h) e^{-2\pi i \nu h}$$

for $-\infty < \nu < \infty$.

Review: Sample autocovariance

Idea: use the sample autocovariance $\hat\gamma(\cdot)$, defined by

$$\hat\gamma(h) = \frac{1}{n} \sum_{t=1}^{n-|h|} (x_{t+|h|} - \bar{x})(x_t - \bar{x}), \quad \text{for } -n < h < n,$$

as an estimate of the autocovariance $\gamma(\cdot)$, and then use

$$\hat f(\nu) = \sum_{h=-n+1}^{n-1} \hat\gamma(h) e^{-2\pi i \nu h}$$

for $-1/2 \le \nu \le 1/2$.

Discrete Fourier transform

For a sequence $(x_1, \ldots, x_n)$, define the discrete Fourier transform (DFT) as $(X(\nu_0), X(\nu_1), \ldots, X(\nu_{n-1}))$, where

$$X(\nu_k) = \frac{1}{\sqrt{n}} \sum_{t=1}^{n} x_t e^{-2\pi i \nu_k t},$$

and $\nu_k = k/n$ (for $k = 0, 1, \ldots, n-1$) are called the Fourier frequencies.
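The claim that the two estimators coincide at the Fourier frequencies can be checked numerically. The sketch below follows the definitions in the excerpt; the data values and function names are made up for illustration. One caveat worth stating: the agreement holds at the nonzero Fourier frequencies, because at $\nu = 0$ the periodogram equals $n\bar{x}^2$ while the mean-corrected autocovariance sum is zero.

```python
import cmath
import math


def periodogram(x, k):
    """I(nu_k) = |X(nu_k)|^2 with the 1/sqrt(n) DFT normalization, nu_k = k/n."""
    n = len(x)
    dft = sum(v * cmath.exp(-2j * math.pi * k * t / n)
              for t, v in enumerate(x, start=1)) / math.sqrt(n)
    return abs(dft) ** 2


def sample_autocov(x, h):
    """Sample autocovariance at lag h, with divisor n."""
    n = len(x)
    mean = sum(x) / n
    h = abs(h)
    return sum((x[t + h] - mean) * (x[t] - mean) for t in range(n - h)) / n


def autocov_spectrum(x, k):
    """Sum over lags -n+1..n-1 of gamma_hat(h) exp(-2 pi i nu_k h)."""
    n = len(x)
    total = sum(sample_autocov(x, h) * cmath.exp(-2j * math.pi * k * h / n)
                for h in range(-n + 1, n))
    return total.real


x = [2.0, -1.0, 0.5, 3.0, 1.5, -0.5, 0.0, 2.5]  # hypothetical data
diffs = [abs(periodogram(x, k) - autocov_spectrum(x, k))
         for k in range(1, len(x))]
print("largest difference over k = 1..n-1:", max(diffs))
```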

The Periodogram

The Periodogram

• Recall: the discrete Fourier transform

$$d(\omega_j) = n^{-1/2} \sum_{t=1}^{n} x_t e^{-2\pi i \omega_j t}, \quad j = 0, 1, \ldots, n-1,$$

• and the periodogram

$$I(\omega_j) = |d(\omega_j)|^2, \quad j = 0, 1, \ldots, n-1,$$

• where $\omega_j$ is one of the Fourier frequencies $\omega_j = j/n$.

Sine and Cosine Transforms

• For $j = 0, 1, \ldots, n-1$,

$$d(\omega_j) = n^{-1/2} \sum_{t=1}^{n} x_t e^{-2\pi i \omega_j t} = n^{-1/2} \sum_{t=1}^{n} x_t \cos(2\pi \omega_j t) - i \times n^{-1/2} \sum_{t=1}^{n} x_t \sin(2\pi \omega_j t) = d_c(\omega_j) - i \times d_s(\omega_j).$$

• $d_c(\omega_j)$ and $d_s(\omega_j)$ are the cosine transform and sine transform, respectively, of $x_1, x_2, \ldots, x_n$.
• The periodogram is $I(\omega_j) = d_c(\omega_j)^2 + d_s(\omega_j)^2$.

Sampling Distributions

• For convenience, suppose that $n$ is odd: $n = 2m + 1$.
• White noise: orthogonality properties of sines and cosines mean that $d_c(\omega_1), d_s(\omega_1), d_c(\omega_2), d_s(\omega_2), \ldots, d_c(\omega_m), d_s(\omega_m)$ have zero mean, variance $\frac{1}{2}\sigma_w^2$, and are uncorrelated.
• Gaussian white noise: $d_c(\omega_1), d_s(\omega_1), d_c(\omega_2), d_s(\omega_2), \ldots, d_c(\omega_m), d_s(\omega_m)$ are i.i.d. $N(0, \frac{1}{2}\sigma_w^2)$.
• So for Gaussian white noise, $I(\omega_j) \sim \frac{1}{2}\sigma_w^2 \times \chi^2_2$.
• General case: $d_c(\omega_1), d_s(\omega_1), d_c(\omega_2), d_s(\omega_2), \ldots, d_c(\omega_m), d_s(\omega_m)$ have zero mean and are approximately uncorrelated, and

$$\operatorname{var}[d_c(\omega_j)] \approx \operatorname{var}[d_s(\omega_j)] \approx \tfrac{1}{2} f_x(\omega_j),$$

where $f_x(\omega_j)$ is the spectral density function.
• If $x_t$ is Gaussian,

$$\frac{I_x(\omega_j)}{\frac{1}{2} f_x(\omega_j)} = \frac{d_c(\omega_j)^2 + d_s(\omega_j)^2}{\frac{1}{2} f_x(\omega_j)} \sim \text{approximately } \chi^2_2,$$

and $I_x(\omega_1), I_x(\omega_2), \ldots, I_x(\omega_m)$ are approximately independent.
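The white-noise results above are easy to probe by simulation. In this sketch the sample size, noise level, number of replications and the chosen frequency index are arbitrary assumptions. Since $\frac{1}{2}\sigma_w^2 \chi^2_2$ has mean $\sigma_w^2$, the average periodogram ordinate should sit near $\sigma_w^2$ and the variance of the cosine transform near $\frac{1}{2}\sigma_w^2$.

```python
import math
import random


def cos_sin_transforms(x, j):
    """Cosine and sine transforms d_c, d_s at the Fourier frequency j/n."""
    n = len(x)
    dc = sum(v * math.cos(2 * math.pi * j * t / n)
             for t, v in enumerate(x, start=1))
    ds = sum(v * math.sin(2 * math.pi * j * t / n)
             for t, v in enumerate(x, start=1))
    return dc / math.sqrt(n), ds / math.sqrt(n)


random.seed(0)
n, sigma_w, runs, j = 15, 2.0, 3000, 3  # n = 2m + 1 with m = 7; all values illustrative
dc_vals, i_vals = [], []
for _ in range(runs):
    x = [random.gauss(0.0, sigma_w) for _ in range(n)]
    dc, ds = cos_sin_transforms(x, j)
    dc_vals.append(dc)
    i_vals.append(dc * dc + ds * ds)  # periodogram ordinate I(omega_j)

# d_c has zero mean, so its mean square estimates its variance.
var_dc = sum(v * v for v in dc_vals) / runs
mean_i = sum(i_vals) / runs
print("var d_c ~", round(var_dc, 2), "(theory", sigma_w ** 2 / 2, ")")
print("mean I  ~", round(mean_i, 2), "(theory", sigma_w ** 2, ")")
```

Repeating the run with a larger `n` leaves the spread of `i_vals` essentially unchanged, which is the practical meaning of the periodogram not being a consistent estimator.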

Basic Definitions and the Spectral Estimation Problem

Basic Definitions and The Spectral Estimation Problem

Lecture 1

Lecture notes to accompany Introduction to Spectral Analysis by P. Stoica and R. Moses, Prentice Hall, 1997

Slide L1-1: Informal Definition of Spectral Estimation

Given: A finite record of a signal.
Determine: The distribution of signal power over frequency.

[Figure: a signal plotted against $t$ and its spectral density plotted against $\omega$ up to $\pi$, with the band from $\omega$ to $\omega + \Delta\omega$ marked.]

$t = 1, 2, \ldots$
$\omega$ = (angular) frequency in radians/(sampling interval)
$f = \omega / 2\pi$ = frequency in cycles/(sampling interval)

Slide L1-2: Applications

Temporal Spectral Analysis
• Vibration monitoring and fault detection
• Hidden periodicity finding
• Speech processing and audio devices
• Medical diagnosis
• Seismology and ground movement study
• Control systems design
• Radar, Sonar

Spatial Spectral Analysis
• Source location using sensor arrays

Slide L1-3: Deterministic Signals

$\{y(t)\}_{t=-\infty}^{\infty}$ = discrete-time deterministic data sequence

If: $\sum_{t=-\infty}^{\infty} |y(t)|^2 < \infty$

Then: $Y(\omega) = \sum_{t=-\infty}^{\infty} y(t) e^{-i\omega t}$ exists and is called the Discrete-Time Fourier Transform (DTFT)

Slide L1-4: Energy Spectral Density

Parseval's Equality:

$$\sum_{t=-\infty}^{\infty} |y(t)|^2 = \frac{1}{2\pi} \int_{-\pi}^{\pi} S(\omega)\, d\omega$$

where $S(\omega) \triangleq |Y(\omega)|^2$ = Energy Spectral Density.

We can write

$$S(\omega) = \sum_{k=-\infty}^{\infty} \rho(k) e^{-i\omega k}$$

where

$$\rho(k) = \sum_{t=-\infty}^{\infty} y(t)\, y^*(t-k)$$
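Parseval's equality and the autocorrelation form of the energy spectral density can both be verified numerically for a short sequence. The sketch below assumes a sequence that is zero outside six samples; the sample values, grid size and test frequency are arbitrary choices for illustration. For such a sequence $S(\omega)$ is a trigonometric polynomial, so averaging it over a uniform frequency grid reproduces the integral up to rounding error.

```python
import cmath
import math

# Hypothetical finite-energy sequence y(1..6), taken to be zero elsewhere.
y = [1.0, -2.0, 0.5, 3.0, 0.0, -1.5]


def dtft(y, w):
    """Y(w) = sum_t y(t) exp(-i w t)."""
    return sum(v * cmath.exp(-1j * w * t) for t, v in enumerate(y, start=1))


def esd(y, w):
    """Energy spectral density S(w) = |Y(w)|^2."""
    return abs(dtft(y, w)) ** 2


def rho(y, k):
    """rho(k) = sum_t y(t) y*(t - k), using only indices where both terms exist."""
    n = len(y)
    return sum(y[t] * y[t - k].conjugate() for t in range(n) if 0 <= t - k < n)


energy = sum(abs(v) ** 2 for v in y)

# (1 / 2 pi) * integral of S over [-pi, pi], as the mean of S on a uniform grid.
m = 64
integral = sum(esd(y, -math.pi + 2 * math.pi * i / m) for i in range(m)) / m

# S(w) rebuilt from the autocorrelation sequence at one test frequency.
w0 = 0.9
n = len(y)
esd_from_rho = sum(rho(y, k) * cmath.exp(-1j * w0 * k)
                   for k in range(-n + 1, n)).real

print(energy, integral)
print(esd(y, w0), esd_from_rho)
```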

State-Space Multitaper Spectrogram Algorithms: Theory and Applications

State-Space Multitaper Spectrogram Algorithms: Theory and Applications

by Michael K. Behr
S.B., Massachusetts Institute of Technology (2015)

Submitted to the Department of Electrical Engineering and Computer Science in partial fulfillment of the requirements for the degree of Master of Engineering in Electrical Engineering and Computer Science at the MASSACHUSETTS INSTITUTE OF TECHNOLOGY, June 2016

© Massachusetts Institute of Technology 2016. All rights reserved.

Author: Department of Electrical Engineering and Computer Science, May 20, 2016
Certified by: Dr. Emery N. Brown, Professor, Thesis Supervisor
Accepted by: Dr. Christopher J. Terman, Chairman, Masters of Engineering Thesis Committee

Abstract

I present the state-space multitaper approach for analyzing non-stationary time series. Non-stationary time series are commonly divided into small time windows for analysis, but existing methods lose predictive power by analyzing each window independently, even though nearby windows have similar spectral properties. The state-space multitaper algorithm combines two approaches for spectral analysis: the state-space approach models the relations between nearby windows, and the multitaper approach balances a bias-variance tradeoff inherent in Fourier analysis of finite interval data. I illustrate an application of the algorithm to real-time anesthesia monitoring, which could prevent traumatic cases of intraoperative awareness. I discuss issues including a real-time implementation and modeling the system's noise parameters.

Broadband Sparse Array Focusing Via Spatial Periodogram Averaging

Broadband Sparse Array Focusing Via Spatial Periodogram Averaging and Correlation Resampling

Yang Liu, John R Buck, Senior Member, IEEE

Abstract: This paper proposes two coherent broadband focusing algorithms for spatial correlation estimation using sparse linear arrays. Both algorithms decompose the time-domain array data into disjoint frequency bands through discrete Fourier transform or filter banks to obtain broadband frequency-domain snapshots. The periodogram averaging (AP) algorithm starts in the frequency domain by estimating the broadband spatial periodograms for all bands and then averaging them to reinforce the sources' spatial spectral information. Taking inverse spatial Fourier transform of the combined spatial periodogram estimates the focused spatial correlations. Alternatively, the spatial correlation resampling (SCR) algorithm directly computes the spatial correlations for each band and then rescales the spatial sampling rate to align at a focused frequency. The resampled spatial correlations from all frequency bands are then averaged to estimate the focused spatial correlations.

... propagating electromagnetic or acoustic field [4] [10]. This paper considers the problem of enumerating and estimating the DOAs of more sources than sensors using sparse arrays for temporally broadband signals.

When the incoming sources are broadband in temporal frequency, it is possible to combine the spectral information from multiple frequency bands to improve the precision of the spatial correlation estimates. Properly combining data across frequency bands reduces the large number of snapshots required in sparse array processing. This approach will be especially useful in acoustical scenarios, which are often limited in available snapshots due to the relatively slow propagation speed for sound, large array apertures and non-stationary ...

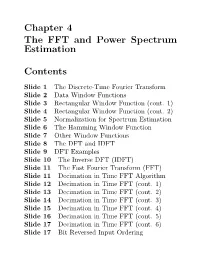

Chapter 4 The FFT and Power Spectrum Estimation

Chapter 4 The FFT and Power Spectrum Estimation

Contents

Slide 1 The Discrete-Time Fourier Transform
Slide 2 Data Window Functions
Slide 3 Rectangular Window Function (cont. 1)
Slide 4 Rectangular Window Function (cont. 2)
Slide 5 Normalization for Spectrum Estimation
Slide 6 The Hamming Window Function
Slide 7 Other Window Functions
Slide 8 The DFT and IDFT
Slide 9 DFT Examples
Slide 10 The Inverse DFT (IDFT)
Slide 11 The Fast Fourier Transform (FFT)
Slide 11 Decimation in Time FFT Algorithm
Slide 12 Decimation in Time FFT (cont. 1)
Slide 13 Decimation in Time FFT (cont. 2)
Slide 14 Decimation in Time FFT (cont. 3)
Slide 15 Decimation in Time FFT (cont. 4)
Slide 16 Decimation in Time FFT (cont. 5)
Slide 17 Decimation in Time FFT (cont. 6)
Slide 17 Bit Reversed Input Ordering
Slide 18 C Decimation in Time FFT Program
Slide 19 C FFT Program (cont. 1)
Slide 20 C FFT Program (cont. 2)
Slide 21 C FFT Program (cont. 3)
Slide 22 C FFT Program (cont. 4)
Slide 23 C FFT Program (cont. 5)
Slide 24 C FFT Program (cont. 6)
Slide 25 C FFT Program (cont. 7)
Slide 26 Estimating Power Spectra by FFT's
Slide 26 The Periodogram and Sample Autocorrelation Function
Slide 27 Justification for Using the Periodogram
Slide 28 Averaging Periodograms
Slide 29 Efficient Method for Computing the Sum of the Periodograms of Two Real Sequences
Slide 30 Experiment 4.1 The FFT
Slide 31 FFT Experiments (cont. 1)
Slide 32 FFT Experiments (cont. 2)
Slide 33 FFT Experiments (cont. 3)
Slide 34 Experiment 4.2 Power Spectrum Estimation
Slide 35 Making a Spectrum Analyzer
Slide 35 Ping-Pong Buffers
Slide 36 Ping-Pong Buffers (cont.)
Slide 37 Spectrum Estimation (cont.

Discrete-Time Signal Processing

Massachusetts Institute of Technology
Department of Electrical Engineering and Computer Science
6.341: Discrete-Time Signal Processing
OpenCourseWare 2006

Lecture 18: Periodogram

Reading: Sections 10.6 and 10.7 in Oppenheim, Schafer & Buck (OSB).

We begin this lecture by introducing three common illusions in spectral analysis:

THREE ILLUSIONS

• If you can't see it, it's not there. (the picket fence effect)
• The more zero padding, the better the spectral resolution. (resolution vs. sampling, spectral smearing)
• For a random process, as the data record length → ∞, the magnitude-squared of the DTFT converges to the power spectral density. (the periodogram)

In the last lecture, we discussed the first and the second illusions. The picket fence effect results from the spectral sampling imposed by the DFT and can be avoided using zero padding. However, zero padding does not improve the spectral resolution, which depends on the shape and length of the window.

In this lecture, we will see the third illusion, which relates to spectral analysis of stochastic signals. For a deterministic signal, more data (i.e. longer window) results in better frequency resolution, but this does not hold for a stochastic signal.

The power spectral density (PSD) of a random process is useful for the following purposes:

1. System Identification

x(t) → h(t) → y(t)

$$P_{yy}(\Omega) = P_{xx}(\Omega)\, |H(j\Omega)|^2$$
$$P_{yx}(\Omega) = P_{xx}(\Omega)\, H(j\Omega)$$

From the PSDs of input and output, we can estimate the frequency response of the system.

2. Noise Removal

Consider a signal: x(t) = s(t) + η(t), where s(t) is a deterministic signal, and η(t) is a stochastic noise process.
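The picket fence effect described in these notes can be reproduced with one tone placed between two DFT bins. In this illustrative sketch the record length, tone frequency, padded length and function name are assumptions. The unpadded DFT samples the spectrum only at the bin centres and misses the true peak, while the zero-padded DFT samples the same underlying DTFT on a finer grid.

```python
import cmath
import math


def dft_mag(x, nfft):
    """Magnitude of the nfft-point DFT of x zero-padded to nfft samples.

    The padded zeros contribute nothing to the sum, so only the original
    samples are visited; padding simply evaluates the DTFT on a finer grid.
    """
    return [abs(sum(v * cmath.exp(-2j * math.pi * k * t / nfft)
                    for t, v in enumerate(x)))
            for k in range(nfft // 2 + 1)]


n = 32
f0 = 5.5 / n  # hypothetical tone halfway between bins 5 and 6
x = [math.cos(2 * math.pi * f0 * t) for t in range(n)]

plain = dft_mag(x, n)      # no zero padding
padded = dft_mag(x, 256)   # zero padded by a factor of 8

peak_plain = max(plain)
peak_padded = max(padded)
peak_freq_padded = max(range(len(padded)), key=padded.__getitem__) / 256
print("unpadded peak:", round(peak_plain, 2))
print("padded peak:  ", round(peak_padded, 2),
      "at", round(peak_freq_padded, 4), "cycles/sample")
```

The padded grid finds the peak close to its true height and location, but the width of the main lobe is still set by the 32-sample record, so two tones closer together than about 1/32 cycles/sample would remain merged however much padding is added.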