An Invitation to Mathematical Physics and Its History

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Brief History of the Early Development of Theoretical and Experimental Fluid Dynamics

Brief History of the Early Development of Theoretical and Experimental Fluid Dynamics John D. Anderson Jr. Aeronautics Division, National Air and Space Museum, Smithsonian Institution, Washington, DC, USA 1 INTRODUCTION 1 Introduction 1 2 Early Greek Science: Aristotle and Archimedes 2 As you read these words, there are millions of modern engi- neering devices in operation that depend in part, or in total, 3 DA Vinci’s Fluid Dynamics 2 on the understanding of fluid dynamics – airplanes in flight, 4 The Velocity-Squared Law 3 ships at sea, automobiles on the road, mechanical biomedi- 5 Newton and the Sine-Squared Law 5 cal devices, and so on. In the modern world, we sometimes take these devices for granted. However, it is important to 6 Daniel Bernoulli and the Pressure-Velocity pause for a moment and realize that each of these machines Concept 7 is a miracle in modern engineering fluid dynamics wherein 7 Henri Pitot and the Invention of the Pitot Tube 9 many diverse fundamental laws of nature are harnessed and 8 The High Noon of Eighteenth Century Fluid combined in a useful fashion so as to produce a safe, efficient, Dynamics – Leonhard Euler and the Governing and effective machine. Indeed, the sight of an airplane flying Equations of Inviscid Fluid Motion 10 overhead typifies the laws of aerodynamics in action, and it 9 Inclusion of Friction in Theoretical Fluid is easy to forget that just two centuries ago, these laws were Dynamics: the Works of Navier and Stokes 11 so mysterious, unknown or misunderstood as to preclude a flying machine from even lifting off the ground; let alone 10 Osborne Reynolds: Understanding Turbulent successfully flying through the air. -

The Bernoulli Edition the Collected Scientific Papers of the Mathematicians and Physicists of the Bernoulli Family

Bernoulli2005.qxd 24.01.2006 16:34 Seite 1 The Bernoulli Edition The Collected Scientific Papers of the Mathematicians and Physicists of the Bernoulli Family Edited on behalf of the Naturforschende Gesellschaft in Basel and the Otto Spiess-Stiftung, with support of the Schweizerischer Nationalfonds and the Verein zur Förderung der Bernoulli-Edition Bernoulli2005.qxd 24.01.2006 16:34 Seite 2 The Scientific Legacy Èthe Bernoullis' contributions to the theory of oscillations, especially Daniel's discovery of of the Bernoullis the main theorems on stationary modes. Johann II considered, but rejected, a theory of Modern science is predominantly based on the transversal wave optics; Jacob II came discoveries in the fields of mathematics and the tantalizingly close to formulating the natural sciences in the 17th and 18th centuries. equations for the vibrating plate – an Eight members of the Bernoulli family as well as important topic of the time the Bernoulli disciple Jacob Hermann made Èthe important steps Daniel Bernoulli took significant contributions to this development in toward a theory of errors. His efforts to the areas of mathematics, physics, engineering improve the apparatus for measuring the and medicine. Some of their most influential inclination of the Earth's magnetic field led achievements may be listed as follows: him to the first systematic evaluation of ÈJacob Bernoulli's pioneering work in proba- experimental errors bility theory, which included the discovery of ÈDaniel's achievements in medicine, including the Law of Large Numbers, the basic theorem the first computation of the work done by the underlying all statistical analysis human heart. -

Leonhard Euler: His Life, the Man, and His Works∗

SIAM REVIEW c 2008 Walter Gautschi Vol. 50, No. 1, pp. 3–33 Leonhard Euler: His Life, the Man, and His Works∗ Walter Gautschi† Abstract. On the occasion of the 300th anniversary (on April 15, 2007) of Euler’s birth, an attempt is made to bring Euler’s genius to the attention of a broad segment of the educated public. The three stations of his life—Basel, St. Petersburg, andBerlin—are sketchedandthe principal works identified in more or less chronological order. To convey a flavor of his work andits impact on modernscience, a few of Euler’s memorable contributions are selected anddiscussedinmore detail. Remarks on Euler’s personality, intellect, andcraftsmanship roundout the presentation. Key words. LeonhardEuler, sketch of Euler’s life, works, andpersonality AMS subject classification. 01A50 DOI. 10.1137/070702710 Seh ich die Werke der Meister an, So sehe ich, was sie getan; Betracht ich meine Siebensachen, Seh ich, was ich h¨att sollen machen. –Goethe, Weimar 1814/1815 1. Introduction. It is a virtually impossible task to do justice, in a short span of time and space, to the great genius of Leonhard Euler. All we can do, in this lecture, is to bring across some glimpses of Euler’s incredibly voluminous and diverse work, which today fills 74 massive volumes of the Opera omnia (with two more to come). Nine additional volumes of correspondence are planned and have already appeared in part, and about seven volumes of notebooks and diaries still await editing! We begin in section 2 with a brief outline of Euler’s life, going through the three stations of his life: Basel, St. -

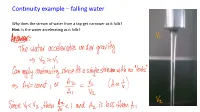

Continuity Example – Falling Water

Continuity example – falling water Why does the stream of water from a tap get narrower as it falls? Hint: Is the water accelerating as it falls? Answer: The fluid accelerates under gravity so the velocity is higher down lower. Since Av = const (i.e. A is inversely proportional to v), the cross sectional area, and the radius, is smaller down lower Bernoulli’s principle Bernoulli conducted experiments like this: https://youtu.be/9DYyGYSUhIc (from 2:06) A fluid accelerates when entering a narrow section of tube, increasing its kinetic energy (=½mv2) Something must be doing work on the parcel. But what? Bernoulli’s principle resolves this dilemma. Daniel Bernoulli “An increase in the speed of an ideal fluid is accompanied 1700-1782 by a drop in its pressure.” The fluid is pushed from behind Examples of Bernoulli’s principle Aeroplane wings high v, low P Compressible fluids? Bernoulli’s equation OK if not too much compression. Venturi meters Measure velocity from pressure difference between two points of known cross section. Bernoulli’s equation New Concept: A fluid parcel of volume V at pressure P has a pressure potential energy Epot-p = PV. It also has gravitational potential energy: Epot-g = mgh Ignoring friction, the energy of the parcel as it flows must be constant: Ek + Epot = const 1 mv 2 + PV + mgh = const ) 2 For incompressible fluids, we can divide by volume, showing conservation of energy 1 density: ⇢v 2 + P + ⇢gh = const 2 This is Bernoulli’s equation. Bernoulli equation example – leak in water tank A full water tank 2m tall has a hole 5mm diameter near the base. -

Leonhard Euler - Wikipedia, the Free Encyclopedia Page 1 of 14

Leonhard Euler - Wikipedia, the free encyclopedia Page 1 of 14 Leonhard Euler From Wikipedia, the free encyclopedia Leonhard Euler ( German pronunciation: [l]; English Leonhard Euler approximation, "Oiler" [1] 15 April 1707 – 18 September 1783) was a pioneering Swiss mathematician and physicist. He made important discoveries in fields as diverse as infinitesimal calculus and graph theory. He also introduced much of the modern mathematical terminology and notation, particularly for mathematical analysis, such as the notion of a mathematical function.[2] He is also renowned for his work in mechanics, fluid dynamics, optics, and astronomy. Euler spent most of his adult life in St. Petersburg, Russia, and in Berlin, Prussia. He is considered to be the preeminent mathematician of the 18th century, and one of the greatest of all time. He is also one of the most prolific mathematicians ever; his collected works fill 60–80 quarto volumes. [3] A statement attributed to Pierre-Simon Laplace expresses Euler's influence on mathematics: "Read Euler, read Euler, he is our teacher in all things," which has also been translated as "Read Portrait by Emanuel Handmann 1756(?) Euler, read Euler, he is the master of us all." [4] Born 15 April 1707 Euler was featured on the sixth series of the Swiss 10- Basel, Switzerland franc banknote and on numerous Swiss, German, and Died Russian postage stamps. The asteroid 2002 Euler was 18 September 1783 (aged 76) named in his honor. He is also commemorated by the [OS: 7 September 1783] Lutheran Church on their Calendar of Saints on 24 St. Petersburg, Russia May – he was a devout Christian (and believer in Residence Prussia, Russia biblical inerrancy) who wrote apologetics and argued Switzerland [5] forcefully against the prominent atheists of his time. -

Bernoulli? Perhaps, but What About Viscosity?

Volume 6, Issue 1 - 2007 TTTHHHEEE SSSCCCIIIEEENNNCCCEEE EEEDDDUUUCCCAAATTTIIIOOONNN RRREEEVVVIIIEEEWWW Ideas for enhancing primary and high school science education Did you Know? Fish Likely Feel Pain Studies on Rainbow Trout in the United Kingdom lead us to conclude that these fish very likely feel pain. They have pain receptors that look virtually identical to the corresponding receptors in humans, have very similar mechanical and thermal thresholds to humans, suffer post-traumatic stress disorders (some of which are almost identical to human stress reactions), and respond to morphine--a pain killer--by ceasing their abnormal behaviour. How, then, might a freshly-caught fish be treated without cruelty? Perhaps it should be plunged immediately into icy water (which slows the metabolism, allowing the fish to sink into hibernation and then anaesthesia) and then removed from the icy water and placed gently on ice, allowing it to suffocate. Bernoulli? Perhaps, but What About Viscosity? Peter Eastwell Science Time Education, Queensland, Australia [email protected] Abstract Bernoulli’s principle is being misunderstood and consequently misused. This paper clarifies the issues involved, hypothesises as to how this unfortunate situation has arisen, provides sound explanations for many everyday phenomena involving moving air, and makes associated recommendations for teaching the effects of moving fluids. "In all affairs, it’s a healthy thing now and then to hang a question mark on the things you have long taken for granted.” Bertrand Russell I was recently asked to teach Bernoulli’s principle to a class of upper primary students because, as the Principal told me, she didn’t feel she had a sufficient understanding of the concept. -

The Collected Scientific Papers of the Mathematicians and Physicists of the Bernoulli Family

The Collected Scientific Papers of the Mathematicians and Physicists of the Bernoulli Family Edited on behalf of the Naturforschende Gesellschaft in Basel and the Otto Spiess-Stiftung, with support of the Schweizerischer Nationalfonds and the Verein zur Förderung der Bernoulli-Edition The Scientific Legacy of the Bernoullis Modern science is predominantly based on the discoveries in the fields of mathematics and the natural sciences in the 17th and 18th centuries. Eight members of the Bernoulli family as well as the Bernoulli disciple Jacob Hermann made significant contributions to this development in the areas of mathematics, physics, engineering and medicine. Some of their most influential achievements may be listed as follows: • Jacob Bernoulli's pioneering work in probability theory, which included the discovery of the Law of Large Numbers, the basic theorem underlying all statistical analysis • Jacob's determination of the form taken by a loaded beam, the first attempt at a systematic formulation of elasticity theory • the calculus of variations, invented by Jacob and by his brother Johann. Variational principles are the basis of much of modern physics • the contributions of both Jacob and Johann to analysis, differential geometry and mechanics, which developed and disseminated Leibniz's calculus • the formulation of Newtonian mechanics in the differential form by which we know it today, pioneered by Jacob Hermann and by Johann I Bernoulli • Johann's work on hydrodynamics. Not well known until recently, it is now highly regarded by historians of physics • Daniel Bernoulli's energy theorem for stationary flow, universally used in hydrodynamics and aerodynamics, and his derivation of Boyle's law, which for the first time explains macroscopic properties of gases by molecular motion, thus marking the beginning of kinetic gas theory • the Bernoullis' contributions to the theory of oscillations, especially Daniel's discovery of the main theorems on stationary modes. -

Daniel Bernoulli (1700 – 1782)

Daniel Bernoulli (1700 – 1782) From Wikipedia, the free encyclopedia, http://en.wikipedia.org/wiki/Daniel_Bernoulli Daniel Bernoulli (Groningen, 29 January 1700 – Basel, 17 March 1782) was a Swiss mathematician and was one of the many prominent mathematicians in the Bernoulli family. He is particularly remembered for his applications of mathematics to mechanics, especially fluid mechanics, and for his pioneering work in probability and statistics. Bernoulli's work is still studied at length by many schools of science throughout the world. Early life: Daniel Bernoulli was born in Groningen, in the Netherlands into a family of distinguished mathematicians. The son of Johann Bernoulli (one of the "early developers" of calculus), nephew of Jakob Bernoulli (who "was the first to discover the theory of probability"), and older brother of Johann II, Daniel Bernoulli has been described as "by far the ablest of the younger Harpers". He is said to have had a bad relationship with his father, Johann. Upon both of them entering and tying for first place in a scientific contest at the University of Paris, Johann, unable to bear the "shame" of being compared as Daniel's equal, banned Daniel from his house. Johann Bernoulli also plagiarized some key ideas from Daniel's book Hydrodynamica in his own book Hydraulica which he backdated to before Hydrodynamica. Despite Daniel's attempts at reconciliation, his father carried the grudge until his death. When Daniel was seven, his younger brother Johann II Bernoulli was born. Around schooling age, his father, Johann Bernoulli, encouraged him to study business, there being poor rewards awaiting a mathematician. -

Lecture 29. Bernouilli Brothers

Lecture 29. Bernouilli Brothers Figure 29.1 Jacob Bernoulli and Johann Bernouli The Bernoulli brothers joined Leibniz after 1687. Before 1700 these men had found most of our undergraduate calculus, including the calculus of variation. Bernoulli brothers The ancestors of the Bernoulli family originally lived in Holland. In 1583, they migrated to Switzerland and settled at Basel. Bernoulli family is an extraordi- nary Swiss family from Basel that produced eight outstanding mathematicians within three generations. Jacob(1654-1705) and Johann (1667-1748) are considered the most important founders of calculus (with the exception of Newton and Leibniz). Following his father's wish, Jacob studied theology and entered the ministry. But he developed a passion for mathematics in 1676. Jacob Bernoulli basically taught himself in these subjects and went on to lecture in experimental physics at the University of Basel. Jacob Bernoulli became a professor of mathematics in the same university in 1687. Around the time, his younger brother Johann, meeting the wishes of his father to go into commerce, enrolled at the university to study medicine. However, Johann secretly studied 193 mathematics with his brother Jacob. Just over two years, Johann's mathematical level was close to his brother's. In 1684, Leibniz's most important work on calculus published. Jacob and Johann quickly realized the importance and significance of this work, and began to become familiar with calculus through a correspondence with Gottfried Leibniz. At the time, Leibniz's publica- tions on the calculus were very obscure to mathematicians and the Bernoulli brothers were the first to try to understand and apply Leibniz's theories. -

The Struggle for the Range of Telephone Communication Before the Invention of the Audion Lee De Forest

ITM Web of Conferences 30, 16002 (2019) https://doi.org/10.1051/itmconf /201930160 02 CriMiCo'2019 The struggle for the range of telephone communication before the invention of the audion Lee de Forest Victor M. Pestrikov1, and Pavel P. Yermolov2 1Saint-Petersburg State University of Film and Television 13, Pravda Str., Saint-Petersburg, 191119, Russian Federation 2Sevastopol State University, 33, Universitetskaya Str., Sevastopol, 299053, Russian Federation Abstract. The increase in the range of laying telephone lines at the beginning of the 20th century raised the issue of high-quality telephone signal transmission for scientists and engineers. The innovative technology for solving the problem was based on the “Heaviside condition”. AT&T engineer George Campbell, who received a patent for the design of load coils, was engaged in this task. He demonstrated that a telephone line loaded with coils can transmit clear voice signals twice as far as unloaded telephone lines. At about the same time, Columbia University professor Michael Pupin dealt with this problem, who also received a patent for a similar concept in priority over Campbell's application. As a result, load coils became known as “Pupin coils”. Ironically, Campbell lost the patent priority battle. The proposed innovation eliminated time delays and distortions in the signal, thereby significantly increasing the transmission speed. 1 Introduction The operation of long-distance telephone lines, at the beginning of the 20th century, revealed a number of their shortcomings, among which the main thing was noted - the low quality of human speech transmission [1]. The arising problem required its quick solution. New cable designs were needed that were fundamentally different from the existing ones, especially telegraph cables. -

Daniel Bernoulli Biography

Daniel Bernoulli Biography IN Mathematicians, Physicists ALSO KNOWN AS Daniel Bernovllivs FAMOUS AS Mathematician NATIONALITY Swiss RELIGION Calvinism BORN ON 08 February 1700 AD Famous 8th February Birthdays ZODIAC SIGN Aquarius Aquarius Men BORN IN Groningen DIED ON 17 March 1782 AD PLACE OF DEATH Basel FATHER Johann Bernoulli SIBLINGS Nicolaus II Bernoulli EDUCATION University of Basel Daniel Bernoulli was a Swiss mathematician and physicist who did pioneering work in the field of fluid dynamics and kinetic theory of gases. He investigated not only mathematics and physics but also achieved considerable success in exploring other fields such as medicine, physiology, mechanics, astronomy, and oceanography. Born in a distinguished family of mathematicians, he was encouraged by his father to pursue a business career. After obtaining his Master of Arts degree, he studied medicine and was also privately tutored in mathematics by his father. Subsequently, he made a name for himself and was called to St. Petersburg, where he spent several fruitful years teaching mathematics. During this time, he wrote important texts on the theory of mechanics, including a first version of his famous treatise on hydrodynamics. Later, he served as a professor of anatomy and botany in Basel before being appointed to the chair of physics. There he taught physics for the next 26 years and also produced several other excellent scientific works during his term. In one of his most remarkable works ‘Hydrodynamica’ which was a milestone in the theory of the flowing behavior of liquids, he developed the theory of watermills, windmills, water pumps and water propellers. But, undoubtedly, his most significant contribution to sciences would be the ‘Bernoulli Theorem’ which still remains the general principle of hydrodynamics and aerodynamics, and forms the basis of modern aviation Career In 1723-24, he published one of his earliest mathematical works titled ‘Exercitationes quaedam Mathematicae’ (Mathematical Exercises). -

A History of Engineering & Science in the Bell System

A History of Engineering and Science in the Bell System The Early Years (1875-1925) A History of Engineering and Science in the deal with developments in specific areas of Bell System: The Early Years (1875-1925) offers technology that led towards its realization. a detailed view of the first fifty years of tele The evolution of station apparatus, wire and phone technology. The narrative goes well wireless (radio) transmission, switching, and beyond a simple statement of events to deal various non-voice services is described in de with the "how" and "why" of technological tail. Other chapters cover the development innovation. It examines the underlying of materials and components for these new motivations and evaluates the long-term im communications systems, the origins of portance of the engineering, scientific, and quality control techniques, and the begin organizational achievements that were the nings of scientific research in the Bell System. substance of the first five decades of re An early chapter is devoted to the corporate markable progress in telecommunications. structures of the period since these organiza While this technical history is primarily an tional arrangements provided a continuing account of Bell System achievements, the synergy of business planning and advancing treatment has not been constrained by cor technological skills. porate boundaries and thus recognizes fundamental contributions originating out side of the System. A History of Engineering and Science in the During the half-century covered by The Bell System: The Early Years (1875-1925) is Early Years, a whole new field of technology illustrated with over 500 photographs and evolved.