Evaluation and Interpretation of Convolutional-Recurrent Networks For

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


A Preliminary Assessment of the Native Fish Stocks of Jasper National Park

A Preliminary Assessment of the Native Fish Stocks of Jasper National Park. David W. Mayhood, FWR Freshwater Research Limited, Calgary, Alberta. Prepared for Canadian Parks Service, Jasper National Park, Jasper, Alberta. Part 3 of a Fish Management Plan for Jasper National Park. July 1992.

Cover & Title Page. Alexander Bajkov’s drawings of bull trout from Jacques Lake, Jasper National Park (Bajkov 1927:334-335). Top: Bajkov’s Figure 2, captioned “Head of specimen of Salvelinus alpinus malma, [female], 500 mm. in length from Jaques [sic] Lake.” Bottom: Bajkov’s Figure 3, captioned “Head of specimen of Salvelinus alpinus malma, [male], 590 mm. in length, from Jaques [sic] Lake.” Although only sketches, Bajkov’s figures well illustrate the most characteristic features of this most characteristic Jasper native fish. These are: the terminal mouth cleft bisecting the anterior profile at its midpoint, the elongated head with tapered snout, flat skull, long lower jaw, and eyes placed high on the head (Cavender 1980:300-302; compare with Cavender’s Figure 3). The head structure of bull trout is well suited to an ambush-type predatory style, in which the charr rests on the bottom and watches for prey to pass over.

ABSTRACT. I conducted an extensive survey of published and unpublished documents to identify the native fish stocks of Jasper National Park, describe their original condition, determine if there is anything unusual or especially significant about them, assess their present condition, outline what is known of their biology and life history, and outline what measures should be taken to manage and protect them.

Exploration in the Rocky Mountains North of the Yellowhead Pass Author(S): J

Exploration in the Rocky Mountains North of the Yellowhead Pass. Author(s): J. Norman Collie. Source: The Geographical Journal, Vol. 39, No. 3 (Mar., 1912), pp. 223-233. Published by: geographicalj. Stable URL: http://www.jstor.org/stable/1778435. Wiley, The Royal Geographical Society (with the Institute of British Geographers) are collaborating with JSTOR to digitize, preserve and extend access to The Geographical Journal.

overtures to Bhutan and Nepal, which have been rejected by these states, and I am very glad they have been. The Chinese should not be allowed on the Indian side of the Himalayas. The President: We will conclude with a vote of thanks to Mr. Rose for his excellent paper.

EXPLORATION IN THE ROCKY MOUNTAINS NORTH OF THE YELLOWHEAD PASS.* By J. NORMAN COLLIE, Ph.D., LL.D., F.R.S., F.R.G.S., etc.

The part of the Rocky mountains, that run north through what is now the Dominion of Canada, have only in the last twenty-five years been made accessible to the ordinary traveller.

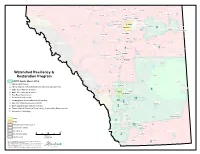

Watershed Resiliency and Restoration Program Maps

[Map: Watershed Resiliency and Restoration Program. Only scattered labels survive extraction: Alberta highway numbers, counties and municipal districts (for example Westlock County, Yellowhead County, Parkland County, Strathcona County), towns (for example Edmonton, Hinton, Edson, Whitecourt, Drayton Valley), lakes, and rivers.]

Milebymile.Com Personal Road Trip Guide Alberta Highway #93 "Icefields Parkway, Jasper to Lake Louise, Banff"

MileByMile.com Personal Road Trip Guide: Alberta Highway #93 "Icefields Parkway, Jasper to Lake Louise, Banff"

| Kms | ITEM | SUMMARY |
| --- | --- | --- |
| 0.0 | Junction of Highways #93 & #16 Yellowhead Route | This highway is a toll highway, They have a seniors rate. NOTE, There is no FUEL, for 156kms. This highway passes through Jasper and Banff National Parks. Altitude: 3471 feet |
| 0.0 | The Town of Jasper, Alberta - Junction of Highways #93 & #16 Yellowhead Route - Jasper National Park | East To Hinton, Alberta, Edson, Alberta, Edmonton, Alberta. For travel East see Milebymile.com - Alberta Road Map Travel Guide, Edmonton to Jasper, Alberta/British Columbia Border, for driving directions. Altitude: 3471 feet |
| 0.0 | Junction of Highways #93 & #16 Yellowhead Route - Jasper National Park | West to Prince George, B.C., Kamloops, B.C.. For travel West see Milebymile.com - Alberta Road Map Travel Guide, Edmonton to Jasper, Alberta/British Columbia Border for driving directions. Altitude: 3471 feet |
| 0.7 | Pull Out Area | Miette River bridge crossing - Jasper National Park. Altitude: 3432 feet |
| 1.8 | Access Road - Jasper National Park, AB | Whistlers Campground, AB; Camping, 100 elec and 604 non elec sites. Jasper Tramway, Jasper National Park Whistlers International Hostel, AB. Altitude: 3419 feet |
| 3.4 | Wapiti Campground - Jasper National Park. | Camping 40 elec sites, 57 non elec. This campground is open all year. Altitude: 3504 feet |
| 5.0 | Beckers Chalet | Accommodations Altitude: 3543 feet |
| 5.0 | View from highway. | Driving south, Jasper National Park, Alberta. Altitude: 3560 feet |
| 6.1 | Icefields Parkway -Jasper | Toll Gate, They have a Seniors rate you have to ask for it. |

1999-2010 Canadian Heritage River Monitoring Report

Athabasca River: 1999-2010 Canadian Heritage River Monitoring Report. April 2011. Cover Photos (left to right): Athabasca Falls, Jasper Lake Sand Dunes, Bridge at Old Fort Point. Photos by: Parks Canada (left), J. Deagle (middle, right). Also available in French. © Her Majesty the Queen in right of Canada, represented by the Chief Executive Officer of Parks Canada, 2011. ISBN: 978-1-100-18504-0. Catalog No.: R64-410/2011E-PDF

Table of Contents

Foreword
Acknowledgements
1.0 Executive Summary
2.0 Introduction
3.0 Background
3.1 Policy Context
3.2 Nomination Values
4.0 Chronology of Events

Flood Watch Update

Flood Watch Update
Issued at: 10:50 AM, June 7, 2007
Issued by: River Forecast Centre, Flow Forecasting

Flood Watch Update for North and Central Alberta

A Flood Watch remains in effect for the following rivers and their tributaries in the Athabasca River basin:

• Miette River near Jasper
• Athabasca River upstream of Hinton (including Hinton and Jasper)

A Flood Watch remains in effect for the following river and its tributaries in the North Saskatchewan River basin:

• Clearwater River including Prairie Creek

A Flood Watch remains in effect for the following river and its tributaries in the Red Deer River basin:

• Red Deer River upstream of Dickson Dam

A Flood Watch remains in effect for the following rivers and its tributaries in the Bow River basin:

• Bow River upstream of and including Banff
• Jumpingpound Creek

A Flood Watch has been downgraded to a High Streamflow Advisory for the following streams in the North Saskatchewan River basin:

• Brazeau River upstream of the Brazeau Dam
• Ram River

These streams have peaked and are currently falling slowly.

High Streamflow Advisory Update for North and Central Alberta

A High Streamflow Advisory remains in effect for following rivers and their tributaries in the Smoky River basin:

• Smoky River near Grande Cache
• Kakwa River
• Wapiti River near Grande Prairie

A High Streamflow Advisory remains in effect for following rivers and their tributaries in the Athabasca River basin:

• Sunwapta River
• Athabasca River downstream of Hinton (including the Town of Athabasca and Fort McMurray)

A High

Glaciers of the Canadian Rockies

Glaciers of North America: GLACIERS OF CANADA. GLACIERS OF THE CANADIAN ROCKIES. By C. SIMON L. OMMANNEY. SATELLITE IMAGE ATLAS OF GLACIERS OF THE WORLD, edited by RICHARD S. WILLIAMS, Jr., and JANE G. FERRIGNO. U.S. GEOLOGICAL SURVEY PROFESSIONAL PAPER 1386–J–1

The Rocky Mountains of Canada include four distinct ranges from the U.S. border to northern British Columbia: Border, Continental, Hart, and Muskwa Ranges. They cover about 170,000 km², are about 150 km wide, and have an estimated glacierized area of 38,613 km². Mount Robson, at 3,954 m, is the highest peak. Glaciers range in size from ice fields, with major outlet glaciers, to glacierets. Small mountain-type glaciers in cirques, niches, and ice aprons are scattered throughout the ranges. Ice-cored moraines and rock glaciers are also common.

CONTENTS

Abstract
Introduction
FIGURE 1. Mountain ranges of the southern Rocky Mountains
FIGURE 2. Mountain ranges of the northern Rocky Mountains
FIGURE 3. Oblique aerial photograph of Mount Assiniboine, Banff National Park, Rocky Mountains
FIGURE 4. Sketch map showing glaciers of the Canadian Rocky Mountains
FIGURE 5. Photograph of the Victoria Glacier, Rocky Mountains, Alberta, in August 1973
TABLE 1. Named glaciers of the Rocky Mountains cited in the chapter

Prepared For

Terasen Pipelines (Trans Mountain) Inc. Wetlands. TMX - Anchor Loop Project. November 2005.

EXECUTIVE SUMMARY

With the TMX - Anchor Loop Project (the “Project”) Terasen Pipelines (Trans Mountain) Inc. (“Terasen Pipelines”) proposes to loop a portion of its existing National Energy Board (“NEB”) regulated oil pipeline system (the “Trans Mountain pipeline” or “Trans Mountain”) to increase the capacity of the Trans Mountain pipeline to meet growing shipper demand. The Project involves the construction of 158 km of 812 mm or 914 mm (32-inch or 36-inch) diameter pipe between a location west of Hinton, Alberta at Kilometre Post/Kilometre Loop (“KP/KL”) 310.1 and a location near Rearguard, British Columbia (BC) (KP/KL 468.0). The Project also includes the installation of two new pump stations at locations along the Trans Mountain pipeline, one in Alberta at Wolf (KP 188.0) and one in BC, at Chappel (KP 555.5), and the installation of associated aboveground facilities including block valves at several locations and a receiving trap for pipeline cleaning and inspection tools at a location near Rearguard, BC (KP/KL 468.0). Construction of the Project will require temporary construction camps and other temporary work yards. The Project will traverse federal, provincial and private lands, including Jasper National Park (JNP) in Alberta and Mount Robson Provincial Park (MRPP) in BC. Two pipeline route options were assessed in detail for the TMX - Anchor Loop Project, namely the Proposed Route and the Existing Route (i.e., the Trans Mountain pipeline). Both route options are evaluated within this report. Wetland resources and function have been identified as a Valued Ecosystem Component (VEC) for the environmental assessment of the Project.

Jasper National Park

Jasper National Park may be reached by motor from A list of accommodations in the park with rates follows:— RECREATIONAL FACILITIES JASPER NATIONAL PARK points in the northwestern United States via Kingsgate, Cranbrook, and Kootenay National Park, British Columbia, Town of Jaspei Accommodation Rates per day The town of Jasper forms a centre for recreation. Summer ALBERTA over Highway No. 4 and the Banff-Windermere Highway Astoria Hotel 32 rooms Single $2-$3 ; double $3-$4.50 (Eur.) sports which may be enjoyed under ideal conditions include Athabasca Hotel 53 rooms Single $2.50-$4: double $4-$6; Suites CANADA (IB), to Banff Park, and thence to Jasper via the Banff- $7-$l2 (Eur. plan) hiking, riding, motoring, mountain climbing, boating, fishing, Jasper Highway. An alternative route is available by way Pyramid Hotel 21 rooms Single $1-$1.50; double $2.50-$4 bathing, tennis, and golf. In winter, curling, ski-ing, skating. DEPARTMENT OF MINES AND RESOURCES (Eur.) and snow-shoeing are available to the visitor. LANDS, PARKS AND FORESTS BRANCH PURPOSE OF NATIONAL PARKS of Glacier National Park, Montana, Waterton Lakes National NATIONAL PARKS BUREAU Park, Macleod, Calgary, and Banff. Vicinity of Jasper— 1 °42 *Jasper Park Lodge (Ace. 650 Single $9 up; double $16 up (Amer. Bathing and Swimming.—Outdoor bathing may be The National Parks of Canada are areas of outstanding beauty Following are the distances from well-known points to enjoyed at Lakes Annette and Edith, five miles from Jasper, MAP OF and interest which have been dedicated to the people of Canada (C.N.R.) persons) plan) according to type of accom- Jasper, headquarters of Jasper National Park:— (JLac Beauvert, 4 miles from Jasper") modation desired, where dressing-rooms are available. -

Visitor Guide

Visitor Guide. If you see wildlife on the road while driving, STAY IN YOUR VEHICLE. Photo: Rogier Gruys. Discovery trail. Also available in French. For COVID-19 information go to: jasper-alberta.com/covid. Photo: Ryan Bray.

Welcome

Jasper is the largest national park in the Canadian Rockies. The park is over 11,000 square kilometres. Explore all five travel regions in Jasper National Park. Hike, bike, paddle, or simply breathe in the scenery. The choice is yours.

We respectfully acknowledge that Jasper National Park is in Treaty Six and Eight territories as well as the traditional territories of the Beaver, Cree, Ojibway, Shuswap, Stoney and Métis Nations. We mention this to honor and be thankful for their contributions to building our park, province and nation.

Parks Canada wishes you a warm welcome. Enjoy your visit!

Contents

Safety is your Responsibility
Share the Roads
Water Sports and Invasive Species
Fort St. James National Historic Site
Five Park Areas to Explore
Around Town
Maligne Valley
Jasper East & Miette Hot Springs
Mount Edith Cavell
Icefields Parkway
Icefields Parkway Driving Map
Wildlife Identification
Species at Risk
Human Food Kills Wildlife
Park Regulations
Winter in Jasper
Campgrounds
Why are the trees red?
Directory
Mountain Parks Map

Photo: Drew McDonald. Photo: Nicole Covey.

[Map: Jasper Townsite]

Explore the ways less travelled

With millions of visitors every year, our roads and

Northern Rockies

TransCanada Ecotours® Northern Rockies Highway Guide

Discover the secrets of a spectacular Canadian landscape with the TransCanada Ecotours® Northern Rockies Highway Guide.

Let the Northern Rockies Ecotour set your compass for a remarkable journey of discovery through the northern part of the Canadian Rocky Mountain Parks World Heritage site. 265 photos, 22 maps and 133 Ecopoints present the region’s rich First Nations history, exploits of early fur-traders, artists, missionaries, tourists, and scientists, and the ongoing interplay of people, wildlife and their inspiring mountain ecosystem. The authors draw on current research to discuss key environmental issues such as climate change and biodiversity conservation.

You will explore the eastern foothills (Chapter One) ending in historic Grande Cache, then travel west on the Yellowhead Highway (Chapter Two) following major rivers across the continental divide from Hinton to Valemount, British Columbia. In the concluding Chapter Three, the Ecotour turns southwards from Jasper along the spectacular Icefields Parkway through the Rockies, ending near Lake Louise. See the full route map on page 5.

“This book wonderfully interlaces the physical, biological and historical qualities of one of Canada’s iconic regions. An entertaining but highly educational account that will captivate all who traverse the Northern Rockies be it by bike, car, train or vicariously from their living room.” NIK LOPOUKHINE, CHAIR, IUCN WORLD COMMISSION ON PROTECTED AREAS

FOOTHILLS RESEARCH INSTITUTE, P.O. Box 6330, 1176 Switzer Drive, Hinton, Alberta T7V 1X6, Canada. $24.95

A lavishly illustrated driving guide to the landscapes, geology, ecology, culture, people and history of the Northern Rockies Region of Alberta and British Columbia. 133 points of interest, 265 photos, 22 maps that include: Hinton – Cadomin – Grande Cache – Jasper – Valemount – and the

Birds of Jasper National Park, Alberta, Canada

University of Nebraska - Lincoln. DigitalCommons@University of Nebraska - Lincoln. Other Publications in Wildlife Management; Wildlife Damage Management, Internet Center for. 1955.

BIRDS OF JASPER NATIONAL PARK, ALBERTA, CANADA. Ian McTaggart Cowan, Canadian Wildlife Service.

Follow this and additional works at: https://digitalcommons.unl.edu/icwdmother. Part of the Environmental Sciences Commons.

Cowan, Ian McTaggart, "BIRDS OF JASPER NATIONAL PARK, ALBERTA, CANADA" (1955). Other Publications in Wildlife Management. 67. https://digitalcommons.unl.edu/icwdmother/67

This Article is brought to you for free and open access by the Wildlife Damage Management, Internet Center for at DigitalCommons@University of Nebraska - Lincoln. It has been accepted for inclusion in Other Publications in Wildlife Management by an authorized administrator of DigitalCommons@University of Nebraska - Lincoln.

CANADA. DEPARTMENT OF NORTHERN AFFAIRS AND NATIONAL RESOURCES. NATIONAL PARKS BRANCH. CANADIAN WILDLIFE SERVICE. BIRDS OF JASPER NATIONAL PARK, ALBERTA, CANADA, by Ian McTaggart Cowan. WILDLIFE MANAGEMENT BULLETIN, SERIES 2, NUMBER 8. Issued under the authority of The Minister of Northern Affairs and National Resources. Ottawa, 1955.

Contents