Rating Computer Science Via Chess in Memoriam Daniel Kopec and Hans Berliner

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Deep Fritz 11 Rating

Deep fritz 11 rating CCRL 40/4 main list: Fritz 11 has no rank with rating of Elo points (+9 -9), Deep Shredder 12 bit (+=18) + 0 = = 0 = 1 0 1 = = = 0 0 = 0 = = 1 = = 1 = = 0 = = 0 1 0 = 0 1 = = 1 1 = – Deep Sjeng WC bit 4CPU, , +12 −13, (−65), 24 − 13 (+13−2=22). %. This is one of the 15 Fritz versions we tested: Compare them! . − – Deep Sjeng bit, , +22 −22, (−15), 19 − 15 (+13−9=12). % / Complete rating list. CCRL 40/40 Rating List — All engines (Quote). Ponder off Deep Fritz 11 4CPU, , +29, −29, %, −, %, %. Second on the list is Deep Fritz 11 on the same hardware, points below The rating list is compiled by a Swedish group on the basis of. Fritz is a German chess program developed by Vasik Rajlich and published by ChessBase. Deep Fritz 11 is eighth on the same list, with a rating of Overall the answer for what to get is depending on the rating Since I don't have Rybka and I do have Deep Fritz 11, I'll vote for game vs Fritz using Opening or a position? - Chess. Find helpful customer reviews and review ratings for Fritz 11 Chess Playing Software for PC at There are some algorithms, from Deep fritz I have a small Engine Match database and use the Fritz 11 Rating Feature. Could somebody please explain the logic behind the algorithm? Chess games of Deep Fritz (Computer), career statistics, famous victories, opening repertoire, PGN download, Feb Version, Cores, Rating, Rank. DEEP Rybka playing against Fritz 11 SE using Chessbase We expect, that not only the rating figures, but also the number of games and the 27, Deep Fritz 11 2GB Q 2,4 GHz, , 18, , , 62%, Deep Fritz. -

Videos Bearbeiten Im Überblick: Sieben Aktuelle VIDEOSCHNITT Werkzeuge Für Den Videoschnitt

Miller: Texttool-Allrounder Wego: Schicke Wetter-COMMUNITY-EDITIONManjaro i3: Arch-Derivat mit bereitet CSVs optimal auf S. 54 App für die Konsole S. 44 Tiling-Window-Manager S. 48 Frei kopieren und beliebig weiter verteilen ! 03.2016 03.2016 Guter Schnitt für Bild und Ton, eindrucksvolle Effekte, perfektes Mastering VIDEOSCHNITT Videos bearbeiten Im Überblick: Sieben aktuelle VIDEOSCHNITT Werkzeuge für den Videoschnitt unter Linux im Direktvergleich S. 10 • Veracrypt • Wego • Wego • Veracrypt • Pitivi & OpenShot: Einfach wie noch nie – die neue Generation der Videoschnitt-Werkzeuge S. 20 Lightworks: So kitzeln Sie optimale Ergebnisse aus der kostenlosen Free-Version heraus S. 26 Verschlüsselte Daten sicher verstecken S. 64 Glaubhafte Abstreitbarkeit: Wie Sie mit dem Truecrypt-Nachfolger • SQLiteStudio Stellarium Synology RT1900ac Veracrypt wichtige Daten unauffindbar in Hidden Volumes verbergen Stellarium erweitern S. 32 Workshop SQLiteStudio S. 78 Eigene Objekte und Landschaften Die komfortable Datenbankoberfläche ins virtuelle Planetarium einbinden für Alltagsprogramme auf dem Desktop Top-Distris • Anydesk • Miller PyChess • auf zwei Heft-DVDs ANYDESK • MILLER • PYCHESS • STELLARIUM • VERACRYPT • WEGO • • WEGO • VERACRYPT • STELLARIUM • PYCHESS • MILLER • ANYDESK EUR 8,50 EUR 9,35 sfr 17,00 EUR 10,85 EUR 11,05 EUR 11,05 2 DVD-10 03 www.linux-user.de Deutschland Österreich Schweiz Benelux Spanien Italien 4 196067 008502 03 Editorial Old and busted? Jörg Luther Chefredakteur Sehr geehrte Leserinnen und Leser, viele kleinere, innovative Distributionen seit einem Jahrzehnt kommen Desktop- Immer öfter stellen wir uns aber die haben damit erst gar nicht angefangen und Notebook-Systeme nur noch mit Frage, ob es wirklich noch Sinn ergibt, oder sparen es sich schon lange. Open- 64-Bit-CPUs, sodass sich die Zahl der moderne Distributionen überhaupt Suse verzichtet seit Leap 42.1 darauf; das 32-Bit-Systeme in freier Wildbahn lang- noch als 32-Bit-Images beizulegen. -

The Check Is in the Mail June 2007

The Check Is in the Mail June 2007 NOTICE: I will be out of the office from June 16 through June 25 to teach at Castle Chess Camp in Atlanta, Georgia. During that GM Cesar Augusto Blanco-Gramajo time I will be unable to answer any of your email, US mail, telephone calls, or GAME OF THE MONTH any other form of communication. Cesar’s provisional USCF rating is 2463. NOTICE #2 As you watch this game unfold, you can The email address for USCF almost see Blanco’s rating go upwards. correspondence chess matters has changed to [email protected] RUY LOPEZ (C67) White: Cesar Blanco (2463) ICCF GRANDMASTER in 2006 Black: Benjamin Coraretti (0000) ELECTRONIC KNIGHTS 2006 Electronic Knights Cesar Augusto Blanco-Gramajo, born 1.e4 e5 2.Nf3 Nc6 3.Bb5 Nf6 4.0–0 January 14, 1959, is a Guatemalan ICCF Nxe4 5.d4 Nd6 6.Bxc6 dxc6 7.dxe5 Grandmaster now living in the US and Ne4 participating in the 2006 Electronic An unusual sideline that seems to be Knights. Cesar has had an active career gaining in popularity lately. in ICCF (playing over 800 games there) and sports a 2562 ICCF rating along 8.Qe2 with the GM title which was awarded to him at the ICCF Congress in Ostrava in White chooses to play the middlegame 2003. He took part in the great Rest of as opposed to the endgame after 8. the World vs. Russia match, holding Qxd8+. With an unstable Black Knight down Eleventh Board and making two and quick occupancy of the d-file, draws against his Russian opponent. -

Issue 16, June 2019 -...CHESSPROBLEMS.CA

...CHESSPROBLEMS.CA Contents 1 Originals 746 . ISSUE 16 (JUNE 2019) 2019 Informal Tourney....... 746 Hors Concours............ 753 2 Articles 755 Andreas Thoma: Five Pendulum Retros with Proca Anticirce.. 755 Jeff Coakley & Andrey Frolkin: Multicoded Rebuses...... 757 Arno T¨ungler:Record Breakers VIII 766 Arno T¨ungler:Pin As Pin Can... 768 Arno T¨ungler: Circe Series Tasks & ChessProblems.ca TT9 ... 770 3 ChessProblems.ca TT10 785 4 Recently Honoured Canadian Compositions 786 5 My Favourite Series-Mover 800 6 Blast from the Past III: Checkmate 1902 805 7 Last Page 808 More Chess in the Sky....... 808 Editor: Cornel Pacurar Collaborators: Elke Rehder, . Adrian Storisteanu, Arno T¨ungler Originals: [email protected] Articles: [email protected] Chess drawing by Elke Rehder, 2017 Correspondence: [email protected] [ c Elke Rehder, http://www.elke-rehder.de. Reproduced with permission.] ISSN 2292-8324 ..... ChessProblems.ca Bulletin IIssue 16I ORIGINALS 2019 Informal Tourney T418 T421 Branko Koludrovi´c T419 T420 Udo Degener ChessProblems.ca's annual Informal Tourney Arno T¨ungler Paul R˘aican Paul R˘aican Mirko Degenkolbe is open for series-movers of any type and with ¥ any fairy conditions and pieces. Hors concours compositions (any genre) are also welcome! ! Send to: [email protected]. " # # ¡ 2019 Judge: Dinu Ioan Nicula (ROU) ¥ # 2019 Tourney Participants: ¥!¢¡¥£ 1. Alberto Armeni (ITA) 2. Rom´eoBedoni (FRA) C+ (2+2)ser-s%36 C+ (2+11)ser-!F97 C+ (8+2)ser-hsF73 C+ (12+8)ser-h#47 3. Udo Degener (DEU) Circe Circe Circe 4. Mirko Degenkolbe (DEU) White Minimummer Leffie 5. Chris J. Feather (GBR) 6. -

Catur Komputer Dari Wikipedia Bahasa Indonesia, Ensiklopedia Bebas

Deep Blue Dari Wikipedia bahasa Indonesia, ensiklopedia bebas Belum Diperiksa Deep Blue Deep Blue adalah sebuah komputer catur buatan IBM. Deep Blue adalah komputer pertama yang memenangkan sebuah permainan catur melawan seorang juara dunia (Garry Kasparov) dalam waktu standar sebuah turnamen catur. Kemenangan pertamanya (dalam pertandingan atau babak pertama) terjadi pada 10 Februari 1996, dan merupakan permainan yang sangat terkenal. Namun Kasparov kemudian memenangkan 3 pertandingan lainnya dan memperoleh hasil remis pada 2 pertandingan selanjutnya, sehingga mengalahkan Deep Blue dengan hasil 4-2. Deep Blue lalu diupgrade lagi secara besar-besaran dan kembali bertanding melawan Kasparov pada Mei 1997. Dalam pertandingan enam babak tersebut Deep Blue menang dengan hasil 3,5- 2,5. Babak terakhirnya berakhir pada 11 Mei. Deep Blue menjadi komputer pertama yang mengalahkan juara dunia bertahan. Komputer ini saat ini sudah "dipensiunkan" dan dipajang di Museum Nasional Sejarah Amerika (National Museum of American History),Amerika Serikat. http://id.wikipedia.org/wiki/Deep_Blue Catur komputer Dari Wikipedia bahasa Indonesia, ensiklopedia bebas Komputer catur dengan layar LCD pada 1990-an Catur komputer adalah arsitektur komputer yang memuat perangkat keras dan perangkat lunak komputer yang mampu bermain caturtanpa kendali manusia. Catur komputer berfungsi sebagai alat hiburan sendiri (yang membolehkan pemain latihan atau hiburan jika lawan manusia tidak ada), sebagai alat bantu kepada analisis catur, untuk pertandingan catur komputer dan penelitian untuk kognisi manusia. Kategori Deep Blue (chess computer) From Wikipedia, the free encyclopedia Deep Blue Deep Blue was a chess-playing computer developed by IBM. On May 11, 1997, the machine, with human intervention between games, won the second six-game match against world champion Garry Kasparov, two to one, with three draws.[1] Kasparov accused IBM of cheating and demanded a rematch. -

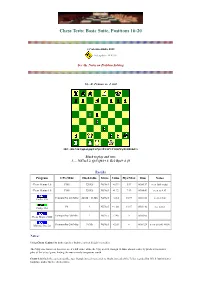

Chess Tests: Basic Suite, Positions 16-20

Chess Tests: Basic Suite, Positions 16-20 (c) Valentin Albillo, 2020 Last update: 14/01/98 See the Notes on Problem Solving 16.- Z. Franco vs. J. Gil FEN: rbb1N1k1/pp1n1ppp/8/2Pp4/3P4/4P3/P1Q2PPq/R1BR1K2/ b Black to play and win: 1. ... Nd7xc5 2. Qc5 Qh1+ 3. Ke2 Bg4+ 4. f3 Results Program CPU/Mhz Hash table Move Value Plys/Max Time Notes Chess Genius 1.0 P100 320 Kb Nd7xc5 +0.39 5/17 00:00:17 sees little value Chess Genius 1.0 P100 320 Kb Nd7xc5 +1.72 7/19 00:04:49 sees to 4. f3 Pentium Pro 200 MHz 24 Mb + 16 Mb Nd7xc5 +2.14 10/19 00:01:20 seen at 14s Crafty 12.6 P6 ? Nd7xc5 +1.181 11/17 00:01:46 see notes Crafty 13.3 Pentium Pro 200 Mhz ? Nd7xc5 +2.46 9 00:03:05 Chess Master 5500 Pentium Pro 200 Mhz 10 Mb Nd7xc5 +2.65 6 00:01:24 seen at 0:00, +0.00 MChess Pro 5.0 Notes: Using Chess Genius 1.0, both searches find the correct Knight's sacrifice. The 5-ply one, however, does not see it's full value, while the 7-ply search, though 16 times slower, correctly predicts the next 6 plies of the actual game, finding the move nearly two pawns worth. Crafty 12.6 finds the correct sacrifice too, though it needs to search to 10 ply, instead of the 7 plies required by CG1.0, but its better hardware makes for the shortest time. -

Learning Long-Term Chess Strategies from Databases

Mach Learn (2006) 63:329–340 DOI 10.1007/s10994-006-6747-7 TECHNICAL NOTE Learning long-term chess strategies from databases Aleksander Sadikov · Ivan Bratko Received: March 10, 2005 / Revised: December 14, 2005 / Accepted: December 21, 2005 / Published online: 28 March 2006 Springer Science + Business Media, LLC 2006 Abstract We propose an approach to the learning of long-term plans for playing chess endgames. We assume that a computer-generated database for an endgame is available, such as the king and rook vs. king, or king and queen vs. king and rook endgame. For each position in the endgame, the database gives the “value” of the position in terms of the minimum number of moves needed by the stronger side to win given that both sides play optimally. We propose a method for automatically dividing the endgame into stages characterised by different objectives of play. For each stage of such a game plan, a stage- specific evaluation function is induced, to be used by minimax search when playing the endgame. We aim at learning playing strategies that give good insight into the principles of playing specific endgames. Games played by these strategies should resemble human expert’s play in achieving goals and subgoals reliably, but not necessarily as quickly as possible. Keywords Machine learning . Computer chess . Long-term Strategy . Chess endgames . Chess databases 1. Introduction The standard approach used in chess playing programs relies on the minimax principle, efficiently implemented by alpha-beta algorithm, plus a heuristic evaluation function. This approach has proved to work particularly well in situations in which short-term tactics are prevalent, when evaluation function can easily recognise within the search horizon the con- sequences of good or bad moves. -

Draft – Not for Circulation

A Gross Miscarriage of Justice in Computer Chess by Dr. Søren Riis Introduction In June 2011 it was widely reported in the global media that the International Computer Games Association (ICGA) had found chess programmer International Master Vasik Rajlich in breach of the ICGA‟s annual World Computer Chess Championship (WCCC) tournament rule related to program originality. In the ICGA‟s accompanying report it was asserted that Rajlich‟s chess program Rybka contained “plagiarized” code from Fruit, a program authored by Fabien Letouzey of France. Some of the headlines reporting the charges and ruling in the media were “Computer Chess Champion Caught Injecting Performance-Enhancing Code”, “Computer Chess Reels from Biggest Sporting Scandal Since Ben Johnson” and “Czech Mate, Mr. Cheat”, accompanied by a photo of Rajlich and his wife at their wedding. In response, Rajlich claimed complete innocence and made it clear that he found the ICGA‟s investigatory process and conclusions to be biased and unprofessional, and the charges baseless and unworthy. He refused to be drawn into a protracted dispute with his accusers or mount a comprehensive defense. This article re-examines the case. With the support of an extensive technical report by Ed Schröder, author of chess program Rebel (World Computer Chess champion in 1991 and 1992) as well as support in the form of unpublished notes from chess programmer Sven Schüle, I argue that the ICGA‟s findings were misleading and its ruling lacked any sense of proportion. The purpose of this paper is to defend the reputation of Vasik Rajlich, whose innovative and influential program Rybka was in the vanguard of a mid-decade paradigm change within the computer chess community. -

Super Human Chess Engine

SUPER HUMAN CHESS ENGINE FIDE Master / FIDE Trainer Charles Storey PGCE WORLD TOUR Young Masters Training Program SUPER HUMAN CHESS ENGINE Contents Contents .................................................................................................................................................. 1 INTRODUCTION ....................................................................................................................................... 2 Power Principles...................................................................................................................................... 4 Human Opening Book ............................................................................................................................. 5 ‘The Core’ Super Human Chess Engine 2020 ......................................................................................... 6 Acronym Algorthims that make The Storey Human Chess Engine ......................................................... 8 4Ps Prioritise Poorly Placed Pieces ................................................................................................... 10 CCTV Checks / Captures / Threats / Vulnerabilities ...................................................................... 11 CCTV 2.0 Checks / Checkmate Threats / Captures / Threats / Vulnerabilities ............................. 11 DAFiii Attack / Features / Initiative / I for tactics / Ideas (crazy) ................................................. 12 The Fruit Tree analysis process ............................................................................................................ -

SHOWCASE BC 831 Funding Offers for BC Artists

SHOWCASE BC 831 Funding Offers for BC Artists Funding offers were sent to the following artists on April 17 + April 21, 2020. Artists have until May 15, 2020, to accept the grant. A Million Dollars in Pennies ArkenFire Booty EP Abraham Arkora BOSLEN ACTORS Art d’Ecco Bratboy Adam Bailie Art Napoleon Bre McDaniel Adam Charles Wilson Asha Diaz Brent Joseph Adam Rosenthal Asheida Brevner Adam Winn Ashleigh Adele Ball Bridal Party Adera A-SLAM Bring The Noise Adewolf Astrocolor Britt A.M. Adrian Chalifour Autogramm Brooke Maxwell A-DUB AutoHeart Bruce Coughlan Aggression Aza Nabuko Buckman Coe Aidan Knight Babe Corner Bukola Balogan Air Stranger Balkan Shmalkan Bunnie Alex Cuba bbno$ Bushido World Music Alex Little and The Suspicious Beamer Wigley C.R. Avery Minds Bear Mountain Cabins In The Clouds Alex Maher Bedouin Soundclash Caitlin Goulet Alexander Boynton Jr. Ben Cottrill Cam Blake Alexandria Maillot Ben Dunnill Cam Penner Alien Boys Ben Klick Camaro 67 Alisa Balogh Ben Rogers Capri Everitt Alpas Collective Beth Marie Anderson Caracas the Band Alpha Yaya Diallo Betty and The Kid Cari Burdett Amber Mae Biawanna Carlos Joe Costa Andrea Superstein Big John Bates Noirchestra Carmanah Andrew Judah Big Little Lions Carsen Gray Andrew Phelan Black Mountain Whiskey Carson Hoy Angela Harris Rebellion Caryn Fader Angie Faith Black Wizard Cassandra Maze Anklegod Blackberry Wood Cassidy Waring Annette Ducharme Blessed Cayla Brooke Antoinette Blonde Diamond Chamelion Antonio Larosa Blue J Ché Aimee Dorval Anu Davaasuren Blue Moon Marquee Checkmate -

Oral History of Hans Berliner

Oral History of Hans Berliner Interviewed by: Gardner Hendrie Recorded: March 7, 2005 Riviera Beach, Florida Total Running Time: 2:33:00 CHM Reference number: X3131.2005 © 2005 Computer History Museum CHM Ref: X3131.2005 © 2005 Computer History Museum Page 1 of 65 Q: Who has graciously agreed to do an oral history for the Computer History Museum. Thank you very much, Hans. Hans Berliner: Oh, you’re most welcome. Q: O.k. I think where I’d like to start is maybe a little further back than you might expect. I’d like to know if you could share with us a little bit about your family background. The environment that you grew up in. Your mother and father, what they did. Your brothers and sisters. Hans Berliner: O.k. Q: Where you were born. That sort of thing. Hans Berliner: O.k. I was born in Berlin in 1929, and we immigrated to the United States, very fortunately, in 1937, to Washington, D.C. As far as the family goes, my great uncle, who was my grandfather’s brother, was involved in telephone work at the turn of the previous century. And he actually owned the patent on the carbon receiver for the telephone. And they started a telephone company in Hanover, Germany, based upon his telephone experience. And he, later on, when Edison had patented the cylinder for recording, he’d had enough experience with sound recording that he said, “that’s pretty stupid”. And he decided to do the recording on a disc, and he successfully defended his patent in the Supreme Court, and so the patent on the phono disc belongs to Emile Berliner, who was my grand uncle. -

Kasparov's Nightmare!! Bobby Fischer Challenges IBM to Simul IBM

California Chess Journal Volume 20, Number 2 April 1st 2004 $4.50 Kasparov’s Nightmare!! Bobby Fischer Challenges IBM to Simul IBM Scrambles to Build 25 Deep Blues! Past Vs Future Special Issue • Young Fischer Fires Up S.F. • Fischer commentates 4 Boyscouts • Building your “Super Computer” • Building Fischer’s Dream House • Raise $500 playing chess! • Fischer Articles Galore! California Chess Journal Table of Con tents 2004 Cal Chess Scholastic Championships The annual scholastic tourney finishes in Santa Clara.......................................................3 FISCHER AND THE DEEP BLUE Editor: Eric Hicks Contributors: Daren Dillinger A miracle has happened in the Phillipines!......................................................................4 FM Eric Schiller IM John Donaldson Why Every Chess Player Needs a Computer Photographers: Richard Shorman Some titles speak for themselves......................................................................................5 Historical Consul: Kerry Lawless Founding Editor: Hans Poschmann Building Your Chess Dream Machine Some helpful hints when shopping for a silicon chess opponent........................................6 CalChess Board Young Fischer in San Francisco 1957 A complet accounting of an untold story that happened here in the bay area...................12 President: Elizabeth Shaughnessy Vice-President: Josh Bowman 1957 Fischer Game Spotlight Treasurer: Richard Peterson One game from the tournament commentated move by move.........................................16 Members at