Improving the Camera Dynamics of Casual Video

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Toland ASC Digital Assistant

TOLAND ASC DIGITAL ASSISTANT PAINTING WITH LIGHT For more than 70 years, the American Cinematographer Manual has been the key technical resource for cinematographers around the world. Chemical Wedding is proud to be partners with the American Society of Cinematographers in bringing you Toland ASC Digital Assistant for the iPhone or iPod Touch. Cinematography is a strange and wonderful craft that combines cutting-edge technology, skill and the management of both time and personnel. Its practitioners “paint” with light whilst juggling some pretty challenging logistics. We think it fitting to dedicate this application to Gregg Toland, ASC, whose work on such classic films as Citizen Kane revolutionized the craft of cinematography. While not every aspect of the ASC Manual is included in Toland, it is designed to give solutions to most of cinematography’s technical challenges. This application is not meant to replace the ASC Manual, but rather serve as a companion to it. We strongly encourage you to refer to the manual for a rich and complete understanding of cinematography techniques. The formulae that are the backbone of this application can be found within the ASC Manual. The camera and lens data have largely been taken from manufacturers’ specifications and field-tested where possible. While every effort has been made to perfect this application, Chemical Wedding and the ASC offer Toland on an “as is” basis; we cannot guarantee that Toland will be infallible. That said, Toland has been rigorously tested by some extremely exacting individuals and we are confident of its accuracy. Since many issues related to cinematography are highly subjective, especially with regard to Depth of Field and HMI “flicker” speeds, the results Toland provides are based upon idealized scenarios. -

The General Idea Behind Editing in Narrative Film Is the Coordination of One Shot with Another in Order to Create a Coherent, Artistically Pleasing, Meaningful Whole

Chapter 4: Editing Film 125: The Textbook © Lynne Lerych The general idea behind editing in narrative film is the coordination of one shot with another in order to create a coherent, artistically pleasing, meaningful whole. The system of editing employed in narrative film is called continuity editing – its purpose is to create and provide efficient, functional transitions. Sounds simple enough, right? Yeah, no. It’s not really that simple. These three desired qualities of narrative film editing – coherence, artistry, and meaning – are not easy to achieve, especially when you consider what the film editor begins with. The typical shooting phase of a typical two-hour narrative feature film lasts about eight weeks. During that time, the cinematography team may record anywhere from 20 or 30 hours of film on the relatively low end – up to the 240 hours of film that James Cameron and his cinematographer, Russell Carpenter, shot for Titanic – which eventually weighed in at 3 hours and 14 minutes by the time it reached theatres. Most filmmakers will shoot somewhere in between these extremes. No matter how you look at it, though, the editor knows from the outset that in all likelihood less than ten percent of the film shot will make its way into the final product. As if the sheer weight of the available footage weren’t enough, there is the reality that most scenes in feature films are shot out of sequence – in other words, they are typically shot in neither the chronological order of the story nor the temporal order of the film. -
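The footage figures quoted in this excerpt can be checked with a little arithmetic. The sketch below is only an illustration: the function name `fraction_used` is made up for this example, and the inputs are the hours and running times stated in the excerpt.

```python
def fraction_used(final_minutes: float, shot_hours: float) -> float:
    """Return the share of recorded footage that ends up in the final cut."""
    if final_minutes < 0 or shot_hours <= 0:
        raise ValueError("running time must be >= 0 and footage must be > 0")
    return final_minutes / (shot_hours * 60)


# Titanic, using the excerpt's figures: 240 hours shot, 3 h 14 min released.
titanic = fraction_used(final_minutes=3 * 60 + 14, shot_hours=240)

# A two-hour feature at the low end of the range: 20 to 30 hours shot.
low_end = [fraction_used(final_minutes=120, shot_hours=h) for h in (20, 30)]

print(f"Titanic: {titanic:.1%} of the footage reached the screen")
for hours, share in zip((20, 30), low_end):
    print(f"{hours} hours shot: {share:.1%} used")
```

On these numbers Titanic used a little over one percent of what was shot, while a two-hour film cut from 20 hours of footage sits right at the ten percent mark the excerpt mentions.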

Cinematography

CINEMATOGRAPHY: ESSENTIAL CONCEPTS

• The filmmaker controls the cinematographic qualities of the shot – not only what is filmed but also how it is filmed
• Cinematographic qualities involve three factors:
1. the photographic aspects of the shot
2. the framing of the shot
3. the duration of the shot

1. Photographic image
• The study of the photographic image includes:
A. Range of tonalities
B. Speed of motion
C. Perspective

1.A: Tonalities of the photographic image
The range of tonalities includes:
I. Contrast: black & white; color. It can be controlled with lighting, filters, film stock, laboratory processing, and postproduction.
II. Exposure: how much light passes through the camera lens. An image that is too dark is underexposed; one that is too bright is overexposed. Exposure can be controlled with filters.

1.A: Tonality (cont.)
Tonality can be changed after filming:
• Tinting: dipping developed film in dye. Dark areas remain black and gray; light areas pick up color.
• Toning: dipping during developing of the positive print. Dark areas are colored; light areas stay white or faintly colored.
• In photochemically based filmmaking, tonality can be fixed by a color timer or grader in the laboratory.
• Digital grading is used today. A scanner converts film to digital files, creating a digital intermediate (DI). The DI is adjusted with software and scanned back onto negative.

1.B: Speed of motion
• Depends on the relation between the rate at which -

Basics of Cinematography HCID 521

University of Washington, Basics of Cinematography, HCID 521, January 2015, Justin Hamacher

Cinematography Basics: Introduction, Overview

Cinematography Principles: Storyboarding

Planes of the Image
• Background = part of the image that is the furthest distance from the camera
• Middle ground = midpoint within the image
• Foreground = part of the image that is the closest to the camera

Framing
Framing = using the borders of the cinematic image (the film frame) to select and compose what is visible onscreen. In filming, the frame is formed by the viewfinder on the camera. In projection, it is formed by the screen.

Cropping
Cropping refers to the removal of the outer parts of an image to improve framing, accentuate subject matter or change aspect ratio.

Framing: Camera Height
Relative height of the camera in relation to eye level
• At eye level
• Below eye level

Framing: Camera Level
The camera’s relative horizontal position in relation to the horizon
• Parallel to horizon
• Canted framing

Framing: Camera Angle
Vantage point imposed on image by camera’s position
• Straight-on
• High angle
• Low angle

Speed of Motion
Rate at which images are recorded and projected. The standard frame rate for movies is 24 frames per second. Filming at a higher rate (>24 fps) results in motion appearing slowed down when projected at 24 fps. Filming at a lower rate (<24 fps) results in motion appearing sped up when projected at 24 fps. -
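The relation between recording rate and projected speed described in the Speed of Motion notes can be sketched in a few lines. This is a minimal illustration, not part of the slides: the names `apparent_speed` and `describe` are hypothetical, and the only figure taken from the source is the 24 fps projection rate.

```python
import math

PROJECTION_FPS = 24.0  # standard projection rate named in the slides


def apparent_speed(capture_fps: float, projection_fps: float = PROJECTION_FPS) -> float:
    """Return how fast motion appears on screen relative to real life.

    1.0 means real time, below 1.0 means slow motion, above 1.0 means fast motion.
    """
    if capture_fps <= 0 or projection_fps <= 0:
        raise ValueError("frame rates must be positive")
    return projection_fps / capture_fps


def describe(capture_fps: float) -> str:
    """Label the on-screen effect of a given capture rate at 24 fps projection."""
    speed = apparent_speed(capture_fps)
    if math.isclose(speed, 1.0):
        return "normal speed"
    return "slowed down" if speed < 1.0 else "sped up"


for fps in (12, 24, 48, 120):
    print(f"shot at {fps} fps -> {apparent_speed(fps):.2f}x real time ({describe(fps)})")
```

Footage shot at 48 fps, for instance, plays back at half speed, because each second of action is spread across two seconds of projection.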

Choosing Between Communication Studies and Film Studies

Choosing Between Communication Studies and Film Studies Many students with an interest in media arts come to UNCW. They often struggle with whether to major in Communication Studies (COM) or Film Studies (FST). This brief position statement is designed to help in that decision. Common Ground Both programs have at least three things in common. First, they share a common set of technologies and software. Both shoot projects in digital video. Both use Adobe Creative Suite for manipulation of digital images, in particular, Adobe Premiere for video editing. Second, they both address the genre of documentaries. Documentaries blend the interests of both “news” and “narrative” in compelling ways and consequently are of interest to both departments. Finally, both departments are “studies” departments: Communication Studies and Film Studies. Those labels indicate that issues such as history, criticism and theories matter and form the context for the study of any particular skills. Neither department is attempting to compete with Full Sail or other technical training institutes. Critical thinking and application of theory to practice are critical to success in FST and COM. Communication Studies The primary purposes for the majority of video projects are to inform and persuade. Creativity and artistry are encouraged within a wide variety of client-centered and audience-centered production genres. With rare exception, projects are approached with the goal of local or regional broadcast. Many projects are service learning oriented such as creating productions for area non-profit organizations. Students will create public service announcements (PSA), news and sports programming, interview and entertainment programs, training videos, short form documentaries and informational and promotional videos. -

Module Title Introduction to Cinematography

Module Title: Introduction to Cinematography (New)
Programme(s)/Course: Film Practice
Level: 5
Semester: 1
Ref No:
Credit Value: 20 CAT Points
Student Study hours: Contact hours: 48; Student managed learning hours: 152
Pre-requisite learning: None
Co-requisites: None
Excluded combinations: None
Module Coordinator: TBA
Parent School: Division of Film and Media, School of Arts & Creative Industries
Parent Course: Film Practice

Description
This Module provides both skills-based training in the use of High Definition (HD) cameras as well as the opportunity to study the techniques and aesthetics of cinematography. Students will be exposed to the particular demands and possibilities of working with High Definition cameras and editing workflows, and will be asked to shoot scenes according to specified aesthetic and dramatic criteria. Students will be encouraged to work from their own scripts as developed in the adjacent filmmaking workshops, thereby creating a system of feedback where learning outcomes in one part of the course feed into another.

Aims
The aims of this Module are to:
• Train students to work proficiently with HD cameras.
• Introduce students to methods for managing and editing HD video resources.
• Develop students’ ability to manipulate cameras to achieve specific stylistic and dramatic effects.
• Introduce students to the standards, practices and techniques of HD cinematography.

Learning outcomes
On successful completion of this module students will be able to:
Knowledge and Understanding
1. Work with a range of Digital Cinema Cameras to capture appropriately exposed, focused and colour balanced images.
Intellectual Skills
2. Translate ideas into shot-sequences.
3. Translate internal states into visible action in effectively composed images. -

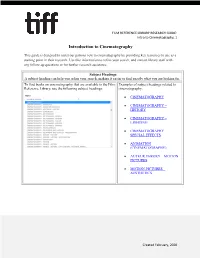

Introduction to Cinematography

FILM REFERENCE LIBRARY RESEARCH GUIDE: Intro to Cinematography

Introduction to Cinematography
This guide is designed to assist our patrons new to cinematography by providing key resources to use as a starting point in their research. Use this information to refine your search, and contact library staff with any follow-up questions or for further research assistance.

Subject Headings
A subject heading can help you refine your search, making it easier to find exactly what you are looking for. To find books on cinematography that are available in the Film Reference Library, use the following subject headings.

Examples of subject headings related to cinematography:
CINEMATOGRAPHY
CINEMATOGRAPHY – HISTORY
CINEMATOGRAPHY – LIGHTING
CINEMATOGRAPHY – SPECIAL EFFECTS
ANIMATION (CINEMATOGRAPHY)
AUTEUR THEORY – MOTION PICTURES
MOTION PICTURES – AESTHETICS

Created February, 2020

Recommended Books
Books provide a comprehensive overview of a larger topic, making them an excellent resource to start your research with.

Chromatic cinema : a history of screen color by Richard Misek. Publisher: Wiley-Blackwell, 2010
Cinematography : theory and practice : imagemaking for cinematographers and directors by Blain Brown. Publisher: Routledge, 2016
Digital compositing for film and video : production workflows and techniques by Steve Wright. Publisher: Routledge, Taylor & Francis Group, 2018
Every frame a Rembrandt : art and practice of cinematography by Andrew Laszlo. Publisher: Focal Press, 2000
Hollywood Lighting from the Silent Era to Film Noir by Patrick Keating. Publisher: Columbia University Press, 2010
The aesthetics and psychology of the cinema by Jean Mitry. Publisher: Indiana University Press, 1997
The art of the cinematographer : a survey and interviews with five masters by Leonard Maltin. -

Cinematography in the Piano

Cinematography in The Piano
Amber Inman, Alex Emry, and Phil Harty

The Argument
• Through Campion’s use of cinematography, the viewer is able to closely follow the mental processes of Ada as she decides to throw her piano overboard and give up the old life associated with it for a new one. The viewer is also able to distinctly see Ada’s plan change to include throwing herself in with the piano, and then her will choosing life.

Tilt Shot
• Flora is framed between Ada and Baines – She, being the middle man in their conversations, is centered between them, and the selective focus brings our attention to this relationship.
• Tilt down to close up of hands meeting – This tilt shows that although Flora interprets for them, there is a nonverbal relationship that exists outside of the need for her translation.
• Cut to Ada signing – This mid-range shot allows the viewer to see Ada’s quick break from Baines’ hand to issue the order to throw the piano overboard. The speed with which Ada breaks away shows that this was a planned event.

Close-up of Oars
• Motif – The oars and accompanying chants serve as a motif within this scene. -

The Lens: Focal Length Control of Perspective in the Image Is Very Important to the Filmmaker

The lens of a photographic camera does roughly what your eye does. It gathers light from the scene and transmits that light onto the flat surface of the film to form an image that represents size, depth, and other dimensions of the scene. One difference between the eye and the camera, though, is that photographic lenses may be changed, and each type of lens will render perspective in different ways. If two different lenses photograph the same scene, the perspective relations in the resulting images could be drastically different. A wide-angle lens could exaggerate the depth you see down the track or could make the foreground trees and buildings seem to bulge; a telephoto lens could drastically reduce the depth, making the trees seem very close together and nearly the same size.

[Figure 5.21: Wide-angle distortion in Mikhail Kalatozov's The Cranes Are Flying.]

The Lens: Focal Length. Control of perspective in the image is very important to the filmmaker. The chief variable in the process is the focal length of the lens. In technical terms, the focal length is the distance from the center of the lens to the point where light rays converge to a point of focus on the film. The focal length alters the perceived magnification, depth, and scale of things in the image. We usually distinguish three sorts of lenses on the basis of their effects on perspective: 1. The short-focal-length (wide-angle) lens. In 35mm-gauge cinematography, a lens of less than 35mm in focal length is considered a wide-angle lens. -
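To make the link between focal length and how much of the scene a lens takes in more concrete, here is a rough sketch using the standard thin-lens approximation for a rectilinear lens focused at infinity, angle = 2·atan(width / (2·focal length)). The formula and the 22 mm frame width are assumptions brought in for illustration and do not come from the excerpt; only the "less than 35mm" wide-angle threshold does. Both function names are hypothetical.

```python
import math

# Assumed frame width in millimetres, roughly that of a 35mm film frame.
# Substitute the real gate or sensor width for a specific format.
ASSUMED_FRAME_WIDTH_MM = 22.0


def horizontal_angle_of_view(focal_length_mm: float,
                             frame_width_mm: float = ASSUMED_FRAME_WIDTH_MM) -> float:
    """Approximate horizontal angle of view in degrees (lens focused at infinity)."""
    if focal_length_mm <= 0 or frame_width_mm <= 0:
        raise ValueError("focal length and frame width must be positive")
    return math.degrees(2 * math.atan(frame_width_mm / (2 * focal_length_mm)))


def is_wide_angle_35mm(focal_length_mm: float) -> bool:
    """Apply the excerpt's rule: under 35mm counts as wide-angle in 35mm-gauge work."""
    return focal_length_mm < 35


for f in (18, 28, 35, 50, 100):
    label = "wide-angle" if is_wide_angle_35mm(f) else "not wide-angle"
    print(f"{f:>3} mm: about {horizontal_angle_of_view(f):.0f} degrees across ({label})")
```

Shorter focal lengths give a wider angle of view, which is why the same scene can look so different through two lenses.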

Visual Communication AAS Degree: Video & Post

Visual Communication AAS Degree: Video & Post Production
A Program Overview of the Arts, Humanities, Communication & Design Area of Study
Updated as of May 2019

Programs At-A-Glance
Visual Communication AAS: Video & Post Production Track. Available at LSC-Kingwood, LSC-North Harris and LSC-Westway Park. LoneStar.edu/Visual-Communication-AAS-Video-Production. Median Wage: $46,284
Video and Post Production Certificate. Available at LSC-Kingwood, LSC-North Harris and LSC-Westway Park. LoneStar.edu/Video-Production-Certificate
Visual Communication AAS degrees and certificates are also available in graphic design, 3D animation, multimedia, and web design tracks.

The goal of the visual communication program is to create a stimulating learning environment for students where they can pursue their specific interest within five areas of professional study: graphic design, multimedia, web design, video and post production and 3-D animation. As preparation for employment in a range of design disciplines, students gain an understanding of ideation, visual organization, typography, and production tools and technology, including their application and the creation, reproduction, and distribution of visual information.

Courses in the video production program provide training in the theory and technical processes used in a modern commercial video production studio. Students are given hands-on instruction in the operation of professional cameras, lighting, sound, and video editing equipment, script writing and storyboarding to create video for a variety of delivery platforms: movies, television, commercials, online and other new media. Companies that hire video & post production students include a wide array of different levels of employment. The visual communication associate of applied science degree is awarded for successful completion of 60 credit hours that include a common core of academic and a selection of technical courses. -

Cinematography and Character Depiction

doi: 10.5789/4-2-6 Global Media Journal African Edition 2010 Vol 4 (2) Cinematography and character depiction William Francis Nicholson Abstract This essay investigates the ways in which cinematography can be used in depicting characters effectively in the motion picture medium. Since an aspiring filmmaker may be overwhelmed by the expansive field of cinematography, this essay aims to demystify and systematise this aspect of filmmaking. It combines information from written sources (mostly text books on filmmaking and cinematography) with observations made from viewing recent and older feature films. The knowledge is organised under the three main headings of lighting, camera view point and the camera’s mode of perception. The outcome is an accessible and systematised foundation for film makers to consult as an entry point into understanding the relationship between character depiction and cinematography: “Cinematography captures and expresses what a character is feeling – their attitude towards the rest of the world, their interior state” Ian Gabriel, director of Forgiveness (2004) [personal interview 2009]. Introduction Cinematography is the aspect of filmmaking that determines how the world of a story is visually presented to an audience. The most important aspect of story telling is the portrayal of characters with whom the audience is invited to identify. Therefore I feel it will be of great value for an aspiring young filmmaker such as myself to come to understand the many elements of cinematography that can be used to depict aspects of character. The aim of this essay is to present these cinematographic elements in a coherent way that provides the filmmaker with an inventory of the ‘toolbox’ at her disposal. -

The Essential Reference Guide for Filmmakers

THE ESSENTIAL REFERENCE GUIDE FOR FILMMAKERS: IDEAS AND TECHNOLOGY. AN INTRODUCTION TO THE ESSENTIAL REFERENCE GUIDE FOR FILMMAKERS Good films, those that effectively communicate the desired message, are the result of an almost magical blend of ideas and technological ingredients. And with an understanding of the tools and techniques available to the filmmaker, you can truly realize your vision. The “idea” ingredient is well documented, for beginner and professional alike. Books covering virtually all aspects of the aesthetics and mechanics of filmmaking abound: how to choose an appropriate film style, the importance of sound, how to write an effective film script, the basic elements of visual continuity, etc. Although equally important, becoming fluent with the technological aspects of filmmaking can be intimidating. With that in mind, we have produced this book, The Essential Reference Guide for Filmmakers. In it you will find technical information (about light meters, cameras, light, film selection, postproduction, and workflows) in an easy-to-read-and-apply format. Ours is a business that’s more than 100 years old, and from the beginning, Kodak has recognized that cinema is a form of artistic expression. Today’s cinematographers have at their disposal a variety of tools to assist them in manipulating and fine-tuning their images. And with all the changes taking place in film, digital, and hybrid technologies, you are involved with the entertainment industry at one of its most dynamic times. As you enter the exciting world of cinematography, remember that Kodak is an absolute treasure trove of information, and we are here to assist you in your journey.