Quality Assessment of the Alaska Ceiling and Visibility Analysis Product, Version 1.0

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Aviation Advisory Board Meeting Minutes August 5-6, 2010 in Unalakleet, Alaska

Chairman Lee Ryan called the meeting to order at 9:05 am.

PRESENT: Lee Ryan, Jim Dodson, Tom George, Tom Nicolos, Mike Salazar, Mike Stedman, Judy McKenzie, Frank Neitz, Steve Strait

EXCUSED ABSENCE: Al Orot, Ken Lythgoe

OTHERS IN ATTENDANCE: Marc Luiken (DOT&PF), Rebecca Cronkhite (DOT&PF), Jeff Roach (DOT&PF), Linda Bustamante (DOT&PF), Commissioner Leo von Scheben (DOT&PF), Harry Johnson, Jr. (Unalakleet Airport Manager), Laura Lawrence (Staff to Senator Donny Olson), Representative Neal Foster, Chuck Degnan, Jim Tweto.

MINUTES: Approved by the board prior to the meeting, via email.

Agenda Addition: Add time for public comments to the agenda, which could happen throughout the day as the public stops in for the meeting.

Announcement from Deputy Commissioner: Deputy Commissioner Luiken thanked Chairman Lee Ryan for hosting the meeting in Unalakleet and welcomed new board members Tom Nicolos and Mike Stedman.

AGENDA: Alaska International Airports System (AIAS) and Statewide Aviation Update: Deputy Commissioner Luiken provided an overview of the AIAS. Marketing efforts of the Anchorage Airport include:

1. Plans to hire two key positions: marketing and air service development.

2. Interview with Supply Chain Management for an online story.

3. Air Cargo Summit: international carriers invited to meet with a representative from U.S. DOT to better understand the unique cargo transfer rights available in Alaska and to review fuel supply issues. The State is conducting a study to review fuel storage and all aspects of fuel availability at the international airports.

Public Comment: Junior Johnson, Unalakleet Airport Manager, expressed concern over Emmonak Airport not having a village contractor.

(ASOS) Implementation Plan

AUTOMATED SURFACE OBSERVING SYSTEM (ASOS) IMPLEMENTATION PLAN

VAISALA CEILOMETER - CL31

November 14, 2008

U.S. Department of Commerce, National Oceanic and Atmospheric Administration

National Weather Service / Office of Operational Systems / Observing Systems Branch

National Weather Service / Office of Science and Technology / Development Branch

Table of Contents

Executive Summary

1.0 Introduction

1.1 Background

1.2 Purpose

1.3 Scope

1.4 Applicable Documents

1.5 Points of Contact

2.0 Pre-Operational Implementation Activities

3.0 Operational Implementation Planning Activities

3.1 Planning/Decision Activities

3.2 Logistic Support Activities

3.3 Configuration Management (CM) Activities

3.4 Operational Support Activities

4.0 Operational Implementation (OI) Activities

Notice of Adjustments to Service Obligations

Served: May 12, 2020

UNITED STATES OF AMERICA, DEPARTMENT OF TRANSPORTATION, OFFICE OF THE SECRETARY, WASHINGTON, D.C.

CONTINUATION OF CERTAIN AIR SERVICE PURSUANT TO PUBLIC LAW NO. 116-136 §§ 4005 AND 4114(b)

Docket DOT-OST-2020-0037

NOTICE OF ADJUSTMENTS TO SERVICE OBLIGATIONS

Summary

By this notice, the U.S. Department of Transportation (the Department) announces an opportunity for incremental adjustments to service obligations under Order 2020-4-2, issued April 7, 2020, in light of ongoing challenges faced by U.S. airlines due to the Coronavirus (COVID-19) public health emergency. With this notice as the initial step, the Department will use a systematic process to allow covered carriers to reduce the number of points they must serve as a proportion of their total service obligation, subject to certain restrictions explained below. Covered carriers must submit prioritized lists of points to which they wish to suspend service no later than 5:00 PM (EDT), May 18, 2020. DOT will adjudicate these requests simultaneously and publish its tentative decisions for public comment before finalizing the point exemptions. As explained further below, every community that was served by a covered carrier prior to March 1, 2020, will continue to receive service from at least one covered carrier. The exemption process in Order 2020-4-2 will continue to be available to air carriers to address other facts and circumstances.

Background

On March 27, 2020, the President signed the Coronavirus Aid, Recovery, and Economic Security Act (the CARES Act) into law. Sections 4005 and 4114(b) of the CARES Act authorize the Secretary to require, “to the extent reasonable and practicable,” an air carrier receiving financial assistance under the Act to maintain scheduled air transportation service as the Secretary deems necessary to ensure services to any point served by that air carrier before March 1, 2020.

APPENDIX A Document Index

Alaska Aviation System Plan Document Index - 24 April 2008

| Title | Reference # | Location / Electronic and/or Paper Copy | Organization / Author | Pub. Date | Other Comments / Notes / Special Studies |
|---|---|---|---|---|---|
| AASP's (Use 1-2) | | | | | |
| AASP #1 | 1 | WHPacific / Electronic & Paper Copies | DOT&PF / TRA/Farr | Jan-86 | Report plus appendix |
| AASP #2 | 2 | WHPacific / Electronic & Paper Copies | DOT&PF / TRA-BV Airport Consulting | Mar-96 | Report plus appendix |
| Statewide Transportation Plans (Use 10-19) | | | | | |
| 2030 Let's Get Moving! Alaska Statewide Long-Range Transportation Policy Plan Update | 10 | http://dot.alaska.gov/stwdplng/areaplans/lrtpp/SWLRTPHome.shtml | DOT&PF | Feb-08 | Technical Appendix also available |
| Regional Transportation Plans (Use 20-29) | | | | | |
| Northwest Alaska Transportation Plan | 20 | http://dot.alaska.gov/stwdplng/areaplans/nwplan.shtml | DOT&PF | Feb-04 | This plan is the Community Transportation Analysis; there is also a Resource Transportation Analysis, focusing on resource development transportation needs |
| Southwest Alaska Transportation Plan | 21 | http://dot.alaska.gov/stwdplng/areaplans/swplan.shtml | DOT&PF / PB Consult | Sep-04 | Report & appendices available |
| Y-K Delta Transportation Plan | 22 | http://dot.alaska.gov/stwdplng/areaplans/ykplan.shtml | DOT&PF | Mar-02 | Report & appendices available |
| Prince William Sound Area Transportation Plan | 23 | http://dot.alaska.gov/stwdplng/areaplans/pwsplan.shtml | DOT&PF / Parsons Brinckerhoff | Jul-01 | Report & relevant technical memos available |
| Southeast Alaska Transportation Plan | 24 | http://www.dot.state.ak.us/stwdplng/projectinfo/ser/newwave | DOT&PF | Aug-04 | |

2004 Annual Report on Aviation

NEW YORK STATE ANNUAL REPORT ON AVIATION

Includes Legislative Mandates for: Inventory of General Aviation Facilities and Status Report for the Airport Improvement and Revitalization Program (AIR 99)

February 1, 2004

New York State Department of Transportation, Passenger Transportation Division, Aviation Services Bureau, 50 Wolf Road, Albany, NY 12232

GEORGE E. PATAKI, GOVERNOR

JOSEPH H. BOARDMAN, COMMISSIONER

www.dot.state.ny.us

TABLE OF CONTENTS

I. INTRODUCTION

II. EXECUTIVE SUMMARY OF DATA

Map of Public Use Airports

III. INVENTORY OF AIRPORTS

Table A - Number of and Activity at NYS Aviation Facilities by Type

Table B - Commercial Service Airports by County, Name, Usage, and Class

Table C - General Aviation Airports by County Name, Usage, and Class

Table D - Public Use Heliports by County, Name, Usage, and Class

Table E - Public Use Seaplane Bases by County, Name, Usage, and Class

IV. AIRPORT ACTIVITY AND SERVICE

Findings

State of Alaska ITB Number 2515H029 Amendment Number One (1)

STATE OF ALASKA ITB NUMBER 2515H029 AMENDMENT NUMBER ONE (1)

AMENDMENT ISSUING OFFICE: Department of Transportation & Public Facilities, Statewide Contracting & Procurement, P.O. Box 112500 (3132 Channel Drive, Room 145), Juneau, Alaska 99811-2500

THIS IS NOT AN ORDER

DATE AMENDMENT ISSUED: February 9, 2015

ITB TITLE: De-icing Chemicals

ITB OPENING DATE AND TIME: February 27, 2015 @ 2:00 PM Alaska Time

The following changes are required:

1. Attachment A, DOT/PF Maintenance Stations, identifying the address and contact information, is added to this ITB.

This is a mandatory return Amendment. Your bid may be considered non-responsive and rejected if this signed amendment is not received [in addition to your bid] by the bid opening date and time.

Becky Gattung, Procurement Officer

PHONE: (907) 465-8949 FAX: (907) 465-2024

NAME OF COMPANY / DATE / PRINTED NAME / SIGNATURE

ITB 2515H029 - De-icing Chemicals

ATTACHMENT A: DOT/PF Maintenance Stations

SOUTHEAST REGION

| Station | F.O.B. Point | Contact Name | Contact Phone | Cell |
|---|---|---|---|---|
| Juneau | 6860 Glacier Hwy., Juneau, AK 99801 | Eric Wilkerson | 465-1787 | 723-7028 |
| Gustavus | Gustavus Airport, Gustavus, AK 99826 | Brad Rider | 697-2251 | 321-1514 |
| Haines | 720 Main St., Haines, AK 99827 | Matt Boron | 766-2340 | 314-0334 |
| Hoonah | 700 Airport Way, Hoonah, AK 99829 | Ken Meserve | 945-3426 | 723-2375 |
| Ketchikan | 5148 N. Tongass Hwy., Ketchikan, AK 99901 | Loren Starr | 225-2513 | 617-7400 |
| Klawock | 1/4 Mile Airport Rd., Klawock, AK 99921 | Tim Lacour | 755-2229 | 401-0240 |
| Petersburg | 288 Mitkof Hwy., Petersburg, AK 99833 | Mike Etcher | 772-4624 | 518-9012 |
| Sitka | 605 Airport Rd., Sitka, AK 99835 | Steve Bell | 966-2960 | 752-0033 |
| Skagway | 2.5 Mile Klondike Hwy., Skagway, AK 99840 | Missy Tyson | 983-2323 | 612-0201 |
| Wrangell | Airport Rd., Wrangell, AK 99929 | William Bloom | 874-3107 | 305-0450 |
| Yakutat | Yakutat Airport, Yakutat, AK 99689 | Robert Lekanof | 784-3476 | 784-3717 |

NORTHERN REGION F.O.B.

Invitation to Bid Invitation Number 2519H037

INVITATION TO BID INVITATION NUMBER 2519H037

RETURN THIS BID TO THE ISSUING OFFICE AT: Department of Transportation & Public Facilities, Statewide Contracting & Procurement, P.O. Box 112500 (3132 Channel Drive, Suite 350), Juneau, Alaska 99811-2500

THIS IS NOT AN ORDER

DATE ITB ISSUED: January 24, 2019

ITB TITLE: De-icing Chemicals

SEALED BIDS MUST BE SUBMITTED TO THE STATEWIDE CONTRACTING AND PROCUREMENT OFFICE AND MUST BE TIME AND DATE STAMPED BY THE PURCHASING SECTION PRIOR TO 2:00 PM (ALASKA TIME) ON FEBRUARY 14, 2019 AT WHICH TIME THEY WILL BE PUBLICLY OPENED.

DELIVERY LOCATION: See the “Bid Schedule”

DELIVERY DATE: See the “Bid Schedule”

F.O.B. POINT: FINAL DESTINATION

IMPORTANT NOTICE: If you received this solicitation from the State’s “Online Public Notice” web site, you must register with the Procurement Officer listed on this document to receive subsequent amendments. Failure to contact the Procurement Officer may result in the rejection of your offer.

BIDDER'S NOTICE: By signature on this form, the bidder certifies that:

(1) the bidder has a valid Alaska business license, or will obtain one prior to award of any contract resulting from this ITB. If the bidder possesses a valid Alaska business license, the license number must be written below or one of the following forms of evidence must be submitted with the bid:

• a canceled check for the business license fee;

• a copy of the business license application with a receipt date stamp from the State's business license office;

• a receipt from the State’s business license office for

ABS RTF Report

Alaska Satellite Interconnect Equipment Replacement and System Upgrade

FY2020 Request: $500,000

Reference No: 61878

AP/AL: Appropriation

Project Type: Equipment / Commodities

Category: General Government

Location: Statewide

House District: Statewide (HD 1-40)

Impact House District: Statewide (HD 1-40)

Contact: Cheri Lowenstein

Contact Phone: (907)465-5655

Estimated Project Dates: 07/01/2019 - 06/30/2024

Brief Summary and Statement of Need: This infrastructure provides a critical link for emergency communications, video, and audio services to bush, rural, and urban Alaskan audiences and broadcasters. The project work scope includes system design, equipment purchase, installation, and commissioning of equipment that will sustain and improve existing service levels by replacing the end-of-life/end-of-service satellite distribution system currently in place.

Funding:

| Fund | FY2020 | FY2021 | FY2022 | FY2023 | FY2024 | FY2025 | Total |
|---|---|---|---|---|---|---|---|
| 1197 AK Cap Inc | $500,000 | | | | | | $500,000 |
| Total: | $500,000 | $0 | $0 | $0 | $0 | $0 | $500,000 |

State Match Required; One-Time Project; Phased - new; Phased - underway; On-Going; 0% = Minimum State Match % Required; Amendment; Mental Health Bill

Operating & Maintenance Costs:

| | Amount | Staff |
|---|---|---|
| Project Development: | 0 | 0 |
| Ongoing Operating: | 0 | 0 |
| One-Time Startup: | 0 | |
| Totals: | 0 | 0 |

Prior Funding History / Additional Information:

Project Description/Justification: The primary purpose of the State of Alaska's satellite infrastructure is supporting Alaska Rural Communications Services (ARCS), the state owned and operated rural television service, and delivery of noncommercial broadcasting, distance education, and emergency communications services. These funds will allow State of Alaska (SOA) to meet their statutory responsibilities by ensuring delivery of these mission critical public services. This system of multiple statewide public and emergency communications services is a State of Alaska owned distribution platform.

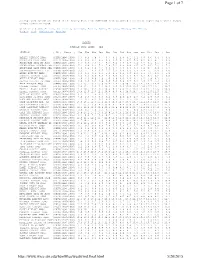

Page 1 of 7 5/20/2015

Average wind speeds are based on the hourly data from 1996-2006 from automated stations at reporting airports (ASOS) unless otherwise noted.

ALASKA AVERAGE WIND SPEED - MPH

| STATION | ID | Years | Jan | Feb | Mar | Apr | May | Jun | Jul | Aug | Sep | Oct | Nov | Dec | Ann |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AMBLER AIRPORT AWOS | PAFM | 1996-2006 | 6.7 | 8.5 | 7.9 | 7.7 | 6.7 | 5.3 | 4.8 | 5.1 | 6.1 | 6.8 | 6.6 | 6.4 | 6.5 |
| ANAKTUVUK PASS AWOS | PAKP | 1996-2006 | 8.9 | 9.0 | 9.1 | 8.6 | 8.6 | 8.5 | 8.1 | 8.5 | 7.6 | 8.2 | 9.3 | 9.1 | 8.6 |
| ANCHORAGE INTL AP ASOS | PANC | 1996-2006 | 6.7 | 6.0 | 7.5 | 7.7 | 8.7 | 8.2 | 7.8 | 6.8 | 7.1 | 6.6 | 6.1 | 6.1 | 7.1 |
| ANCHORAGE-ELMENDORF AFB | PAED | 1996-2006 | 7.3 | 6.9 | 8.1 | 7.6 | 7.8 | 7.2 | 6.8 | 6.4 | 6.5 | 6.7 | 6.5 | 7.2 | 7.1 |
| ANCHORAGE-LAKE HOOD SEA | PALH | 1996-2006 | 4.9 | 4.2 | 5.8 | 5.7 | 6.6 | 6.3 | 5.8 | 4.8 | 5.3 | 5.2 | 4.7 | 4.4 | 5.3 |
| ANCHORAGE-MERRILL FLD | PAMR | 1996-2006 | 3.2 | 3.1 | 4.4 | 4.7 | 5.5 | 5.2 | 4.8 | 4.0 | 3.9 | 3.8 | 3.1 | 2.9 | 4.0 |
| ANIAK AIRPORT AWOS | PANI | 1996-2006 | 4.9 | 6.6 | 6.5 | 6.4 | 5.6 | 4.5 | 4.2 | 4.0 | 4.6 | 5.5 | 5.5 | 4.1 | 5.1 |
| ANNETTE AIRPORT ASOS | PANT | 1996-2006 | 9.2 | 8.2 | 8.9 | 7.8 | 7.4 | 7.0 | 6.2 | 6.4 | 7.2 | 8.3 | 8.6 | 9.8 | 8.0 |
| ANVIK AIRPORT AWOS | PANV | 1996-2006 | 7.6 | 7.3 | 6.9 | 5.9 | 5.0 | 3.9 | 4.0 | 4.4 | 4.7 | 5.2 | 5.9 | 6.3 | 5.5 |
| ARCTIC VILLAGE AP AWOS | PARC | 1996-2006 | 2.8 | 2.8 | 4.2 | 4.9 | 5.8 | 7.0 | 6.9 | 6.7 | 5.2 | 4.0 | 2.7 | 3.3 | 4.6 |
| ATKA AIRPORT AWOS | PAAK | 2000-2006 | 15.1 | 15.1 | 13.1 | 15.0 | 13.4 | 12.4 | 11.9 | 10.7 | 13.5 | 14.5 | 14.7 | 14.4 | 13.7 |
| BARROW AIRPORT ASOS | PABR | 1996-2006 | 12.2 | 13.1 | 12.4 | 12.1 | 12.4 | 11.5 | 12.6 | 12.5 | 12.6 | 14.0 | 13.7 | 13.1 | 12.7 |
| BARTER ISLAND AIRPORT | PABA | 1996-2006 | | | | | | | | | | | | | |

Design Temperature Limit Reference Guide (2019 Edition)

ENERGY STAR Single-Family New Homes

ENERGY STAR Multifamily New Construction

Design Temperature Limit Reference Guide (2019 Edition)

These 2019 Edition limits are permitted to be used with any National HVAC Design Report, and are required to be used for all National HVAC Design Reports generated on or after 10-01-2020.

Introduction

One requirement of the ENERGY STAR Single-Family New Homes and Multifamily New Construction (MFNC) programs is to use outdoor design temperatures that do not exceed the maximum cooling season temperature and minimum heating season temperature listed in this reference guide for the state and county, or territory, in which the home is to be certified. Only two exceptions apply:

1. Jurisdiction-Specified Temperatures: If the outdoor design temperatures to be used in load calculations are specified by the jurisdiction where the home will be certified, then these specified temperatures shall be used.

2. Temperature Exception Request: In rare cases, the designer may believe that an exception to the limits in the reference guide is warranted for a particular state and county, or territory. If so, the designer must complete and submit a Design Temperature Exception Request, including a justification for the exception, to [email protected] for review and approval prior to the home’s certification.

To obtain the most accurate load calculations, EPA recommends that designers always use the ACCA Manual J, 8th edition, 1% cooling season design temperature and 99% heating season design temperature for the weather location that is geographically closest to the home to be certified.

How to Use this Reference Guide

1.

State of Alaska the Legislature

Election District

State of Alaska, The Legislature, JUNEAU, ALASKA

THE BUDGET BY ELECTION DISTRICT

The enclosed report lists elements of the budget by election district for the House of Representatives. The report presents the following three types of information for each election district:

1. Positions approved by the Legislature;

2. Capital Budget Projects;

3. Bond and Special Appropriations projects.

The report lists whole budget line items only (amounts added to statewide/areawide budget items for a specific location are not listed) and is intended to provide some indication of the level of increased or new state programs and services within any given district. When used in conjunction with the State Salaries by Location Report it should give a relatively good indication of the level of state expenditures within a given election district.

TABLE OF CONTENTS

| Election District | District Name |
|---|---|
| 01 | Ketchikan |
| 02 | Wrangell-Petersburg |
| 03 | Sitka |
| 04 | Juneau |
| 05 | Cordova-Valdez-Seward |
| 06 | Palmer-Wasilla-Matanuska |
| 07 - 12 | Anchorage |
| 13 | Kenai-Soldotna-Homer |
| 14 | Kodiak |
| 15 | Aleutian Islands-Kodiak |
| 16 | Dillingham-Bristol Bay |
| 17 | Bethel-Lower Kuskokwim |
| 18 | Galena-McGrath-Hooper Bay |
| 19 | Nenana-Fort Yukon-Tok |
| 20 | Fairbanks |
| 21 | Barrow-Kotzebue |
| 22 | Nome-Seward Peninsula |

SPECIAL APPROPRIATIONS, BONDS AND CAPITAL PROJECTS BY ELECTION DISTRICT ($ millions - all funds) 1977 Session 1978

Final Documents/Your Two Cents—August 2018

This list includes Federal Register (FR) publications such as rules, Advisory Circulars (ACs), policy statements and related material of interest to ARSA members. The date shown is the date of FR publication or other official release.

Proposals opened for public comment represent your chance to provide input on rules and policies that will affect you. Agencies must provide the public notice and an opportunity for comment before their rules or policies change. Your input matters. Comments should be received before the indicated due date; however, agencies often consider comments they receive before drafting of the final document begins.

Hyperlinks provided in blue text take you to the full document. If this link is broken, go to http://www.regulation.gov. In the keyword or ID field, type “FAA” followed by the docket number.

August 1, 2018

FAA Final rules

Rule: Amendment of Class D Airspace; Erie, PA

Published 08/01/2018. Docket #: FAA-2018-0679. Effective date 08/01/2018.

This action amends the legal description of the Class D airspace at Erie International Airport/Tom Ridge Field, Erie, PA, by correcting a printing error in the latitude coordinate symbols for the airport. This action does not affect the boundaries or operating requirements of the airspace.

Final Rule: Amendment of Class E Airspace; Lyons, KS

Published 08/01/2018. Docket #: FAA-2018-0139. Effective date 11/08/2018.

This action modifies Class E airspace extending upward from 700 feet above the surface, at Lyons-Rice Municipal Airport, Lyons, KS. This action is necessary due to the decommissioning of the Lyons non-directional radio beacon (NDB), and cancellation of the NDB approach, and would enhance the safety and management of standard instrument approach procedures for instrument flight rules (IFR) operations at this airport.