Towards a Backpressure-Based Transport Protocol for the Tor Network

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Modeling and Performance Evaluation of LTE Networks with Different TCP Variants Ghassan A

World Academy of Science, Engineering and Technology International Journal of Electronics and Communication Engineering Vol:5, No:3, 2011 Modeling and Performance Evaluation of LTE Networks with Different TCP Variants Ghassan A. Abed, Mahamod Ismail, and Kasmiran Jumari Abstract—Long Term Evolution (LTE) is a 4G wireless Comparing the performance of 3G and its evolution to LTE, broadband technology developed by the Third Generation LTE does not offer anything unique to improve spectral Partnership Project (3GPP) release 8, and it's represent the efficiency, i.e. bps/Hz. LTE improves system performance by competitiveness of Universal Mobile Telecommunications System using wider bandwidths if the spectrum is available [2]. (UMTS) for the next 10 years and beyond. The concepts for LTE systems have been introduced in 3GPP release 8, with objective of Universal Terrestrial Radio Access Network Long-Term high-data-rate, low-latency and packet-optimized radio access Evolution (UTRAN LTE), also known as Evolved UTRAN technology. In this paper, performance of different TCP variants (EUTRAN) is a new radio access technology proposed by the during LTE network investigated. The performance of TCP over 3GPP to provide a very smooth migration towards fourth LTE is affected mostly by the links of the wired network and total generation (4G) network [3]. EUTRAN is a system currently bandwidth available at the serving base station. This paper describes under development within 3GPP. LTE systems is under an NS-2 based simulation analysis of TCP-Vegas, TCP-Tahoe, TCP- Reno, TCP-Newreno, TCP-SACK, and TCP-FACK, with full developments now, and the TCP protocol is the most widely modeling of all traffics of LTE system. -

A Generic Data Exchange System for F2F Networks

The Retroshare project The GXS system Decentralize your app! A Generic Data Exchange System for F2F Networks Cyril Soler C.Soler The GXS System 03 Feb. 2018 1 / 19 The Retroshare project The GXS system Decentralize your app! Outline I Overview of Retroshare I The GXS system I Decentralize your app! C.Soler The GXS System 03 Feb. 2018 2 / 19 The Retroshare project The GXS system Decentralize your app! The Retroshare Project I Mesh computers using signed TLS over TCP/UDP/Tor/I2P; I anonymous end-to-end encrypted FT with swarming; I mail, IRC chat, forums, channels; I available on Mac OS, Linux, Windows, (+ Android). C.Soler The GXS System 03 Feb. 2018 3 / 19 The Retroshare project The GXS system Decentralize your app! The Retroshare Project I Mesh computers using signed TLS over TCP/UDP/Tor/I2P; I anonymous end-to-end encrypted FT with swarming; I mail, IRC chat, forums, channels; I available on Mac OS, Linux, Windows. C.Soler The GXS System 03 Feb. 2018 3 / 19 The Retroshare project The GXS system Decentralize your app! The Retroshare Project I Mesh computers using signed TLS over TCP/UDP/Tor/I2P; I anonymous end-to-end encrypted FT with swarming; I mail, IRC chat, forums, channels; I available on Mac OS, Linux, Windows. C.Soler The GXS System 03 Feb. 2018 3 / 19 The Retroshare project The GXS system Decentralize your app! The Retroshare Project I Mesh computers using signed TLS over TCP/UDP/Tor/I2P; I anonymous end-to-end encrypted FT with swarming; I mail, IRC chat, forums, channels; I available on Mac OS, Linux, Windows. -

Anonymous Rate Limiting with Direct Anonymous Attestation

Anonymous rate limiting with Direct Anonymous Attestation Alex Catarineu Philipp Claßen Cliqz GmbH, Munich Konark Modi Josep M. Pujol 25.09.18 Crypto and Privacy Village 2018 Data is essential to build services 25.09.18 Crypto and Privacy Village 2018 Problems with Data Collection IP UA Timestamp Message Payload Cookie Type 195.202.XX.XX FF.. 2018-07-09 QueryLog [face, facebook.com] Cookie=966347bfd 14:01 1e550 195.202.XX.XX Chrome.. 2018-07-09 Page https://analytics.twitter.com/user/konark Cookie=966347bfd 14:06 modi 1e55040434abe… 195.202.XX.XX Chrome.. 2018-07-09 QueryLog [face, facebook.com] Cookie=966347bfd 14:10 1e55040434abe… 195.202.XX.XX Chrome.. 2018-07-09 Page https://booking.com/hotels/barcelona Cookie=966347bfd 16:15 1e55040434abe… 195.202.XX.XX Chrome.. 2018-07-09 QueryLog [face, facebook.com] Cookie=966347bfd 14:10 1e55040434abe… 195.202.XX.XX FF.. 2018-07-09 Page https://shop.flixbus.de/user/resetting/res Cookie=966347bfd 18:40 et/hi7KTb1Pxa4lXqKMcwLXC0XzN- 1e55040434abe… 47Tt0Q 25.09.18 Crypto and Privacy Village 2018 Anonymous data collection Timestamp Message Type Payload 2018-07-09 14 Querylog [face, facebook.com] 2018-07-09 14 Querylog [boo, booking.com] 2018-07-09 14 Page https://booking.com/hotels/barcelona 2018-07-09 14 Telemetry [‘engagement’: 0 page loads last week, 5023 page loads last month] More details: https://gist.github.com/solso/423a1104a9e3c1e3b8d7c9ca14e885e5 http://josepmpujol.net/public/papers/big_green_tracker.pdf 25.09.18 Crypto and Privacy Village 2018 Motivation: Preventing attacks on anonymous data collection Timestamp Message Type Payload 2018-07-09 14 querylog [book, booking.com] 2018-07-09 14 querylog [fac, facebook.com] … …. -

Analysing TCP Performance When Link Experiencing Packet Loss

Analysing TCP performance when link experiencing packet loss Master of Science Thesis [in the Programme Networks and Distributed System] SHAHRIN CHOWDHURY KANIZ FATEMA Chalmers University of Technology University of Gothenburg Department of Computer Science and Engineering Göteborg, Sweden, October 2013 The Author grants to Chalmers University of Technology and University of Gothenburg the non-exclusive right to publish the Work electronically and in a non-commercial purpose make it accessible on the Internet. The Author warrants that he/she is the author to the Work, and warrants that the Work does not contain text, pictures or other material that violates copyright law. The Author shall, when transferring the rights of the Work to a third party (for example a publisher or a company), acknowledge the third party about this agreement. If the Author has signed a copyright agreement with a third party regarding the Work, the Author warrants hereby that he/she has obtained any necessary permission from this third party to let Chalmers University of Technology and University of Gothenburg store the Work electronically and make it accessible on the Internet. Analysing TCP performance when link experiencing packet loss SHAHRIN CHOWDHURY, KANIZ FATEMA © SHAHRIN CHOWDHURY, October 2013. © KANIZ FATEMA, October 2013. Examiner: TOMAS OLOVSSON Chalmers University of Technology University of Gothenburg Department of Computer Science and Engineering SE-412 96 Göteborg Sweden Telephone + 46 (0)31-772 1000 Department of Computer Science and Engineering Göteborg, Sweden October 2013 Acknowledgement We are grateful to our supervisor and examiner Tomas Olovsson for his valuable time and assistance in compilation for this thesis. -

Latency and Throughput Optimization in Modern Networks: a Comprehensive Survey Amir Mirzaeinnia, Mehdi Mirzaeinia, and Abdelmounaam Rezgui

READY TO SUBMIT TO IEEE COMMUNICATIONS SURVEYS & TUTORIALS JOURNAL 1 Latency and Throughput Optimization in Modern Networks: A Comprehensive Survey Amir Mirzaeinnia, Mehdi Mirzaeinia, and Abdelmounaam Rezgui Abstract—Modern applications are highly sensitive to com- On one hand every user likes to send and receive their munication delays and throughput. This paper surveys major data as quickly as possible. On the other hand the network attempts on reducing latency and increasing the throughput. infrastructure that connects users has limited capacities and These methods are surveyed on different networks and surrond- ings such as wired networks, wireless networks, application layer these are usually shared among users. There are some tech- transport control, Remote Direct Memory Access, and machine nologies that dedicate their resources to some users but they learning based transport control, are not very much commonly used. The reason is that although Index Terms—Rate and Congestion Control , Internet, Data dedicated resources are more secure they are more expensive Center, 5G, Cellular Networks, Remote Direct Memory Access, to implement. Sharing a physical channel among multiple Named Data Network, Machine Learning transmitters needs a technique to control their rate in proper time. The very first congestion network collapse was observed and reported by Van Jacobson in 1986. This caused about a I. INTRODUCTION thousand time rate reduction from 32kbps to 40bps [3] which Recent applications such as Virtual Reality (VR), au- is about a thousand times rate reduction. Since then very tonomous cars or aerial vehicles, and telehealth need high different variations of the Transport Control Protocol (TCP) throughput and low latency communication. -

Lab 6: Understanding Traditional TCP Congestion Control (HTCP, Cubic, Reno)

NETWORK TOOLS AND PROTOCOLS Lab 6: Understanding Traditional TCP Congestion Control (HTCP, Cubic, Reno) Document Version: 06-14-2019 Award 1829698 “CyberTraining CIP: Cyberinfrastructure Expertise on High-throughput Networks for Big Science Data Transfers” Lab 6: Understanding Traditional TCP Congestion Control Contents Overview ............................................................................................................................. 3 Objectives............................................................................................................................ 3 Lab settings ......................................................................................................................... 3 Lab roadmap ....................................................................................................................... 3 1 Introduction to TCP ..................................................................................................... 3 1.1 TCP review ............................................................................................................ 4 1.2 TCP throughput .................................................................................................... 4 1.3 TCP packet loss event ........................................................................................... 5 1.4 Impact of packet loss in high-latency networks ................................................... 6 2 Lab topology............................................................................................................... -

DARK WEB INVESTIGATION GUIDE Contents 1

DARK WEB INVESTIGATION GUIDE Contents 1. Introduction 3 2. Setting up Chrome for Dark Web Access 5 3. Setting up Virtual Machines for Dark Web Access 9 4. Starting Points for Tor Investigations 20 5. Technical Clues for De-Anonymizing Hidden Services 22 5.1 Censys.io SSL Certificates 23 5.2 Searching Shodan for Hidden Services 24 5.3 Checking an IP Address for Tor Usage 24 5.4 Additional Resources 25 6. Conclusion 26 2 Dark Web Investigation Guide 1 1. Introduction 3 Introduction 1 There is a lot of confusion about what the dark web is vs. the deep web. The dark web is part of the Internet that is not accessible through traditional means. It requires that you use a technology like Tor (The Onion Router) or I2P (Invisible Internet Project) in order to access websites, email or other services. The deep web is slightly different. The deep web is made of all the webpages or entire websites that have not been crawled by a search engine. This could be because they are hidden behind paywalls or require a username and password to access. We are going to be setting up access to the dark web with a focus on the Tor network. We are going to accomplish this in two different ways. The first way is to use the Tor Browser to get Google Chrome connected to the the Tor network. This is the less private and secure option, but it is the easiest to set up and use and is sufficient for accessing material on the dark web. -

The Effects of Different Congestion Control Algorithms Over Multipath Fast Ethernet Ipv4/Ipv6 Environments

Proceedings of the 11th International Conference on Applied Informatics Eger, Hungary, January 29–31, 2020, published at http://ceur-ws.org The Effects of Different Congestion Control Algorithms over Multipath Fast Ethernet IPv4/IPv6 Environments Szabolcs Szilágyi, Imre Bordán Faculty of Informatics, University of Debrecen, Hungary [email protected] [email protected] Abstract The TCP has been in use since the seventies and has later become the predominant protocol of the internet for reliable data transfer. Numerous TCP versions has seen the light of day (e.g. TCP Cubic, Highspeed, Illinois, Reno, Scalable, Vegas, Veno, etc.), which in effect differ from each other in the algorithms used for detecting network congestion. On the other hand, the development of multipath communication tech- nologies is one today’s relevant research fields. What better proof of this, than that of the MPTCP (Multipath TCP) being integrated into multiple operating systems shortly after its standardization. The MPTCP proves to be very effective for multipath TCP-based data transfer; however, its main drawback is the lack of support for multipath com- munication over UDP, which can be important when forwarding multimedia traffic. The MPT-GRE software developed at the Faculty of Informatics, University of Debrecen, supports operation over both transfer protocols. In this paper, we examine the effects of different TCP congestion control mechanisms on the MPTCP and the MPT-GRE multipath communication technologies. Keywords: congestion control, multipath communication, MPTCP, MPT- GRE, transport protocols. 1. Introduction In 1974, the TCP was defined in RFC 675 under the Transmission Control Pro- gram name. Later, the Transmission Control Program was split into two modular Copyright © 2020 for this paper by its authors. -

An Analysis of Private Browsing Modes in Modern Browsers

An Analysis of Private Browsing Modes in Modern Browsers Gaurav Aggarwal Elie Bursztein Collin Jackson Dan Boneh Stanford University CMU Stanford University Abstract Even within a single browser there are inconsistencies. We study the security and privacy of private browsing For example, in Firefox 3.6, cookies set in public mode modes recently added to all major browsers. We first pro- are not available to the web site while the browser is in pose a clean definition of the goals of private browsing private mode. However, passwords and SSL client cer- and survey its implementation in different browsers. We tificates stored in public mode are available while in pri- conduct a measurement study to determine how often it is vate mode. Since web sites can use the password man- used and on what categories of sites. Our results suggest ager as a crude cookie mechanism, the password policy that private browsing is used differently from how it is is inconsistent with the cookie policy. marketed. We then describe an automated technique for Browser plug-ins and extensions add considerable testing the security of private browsing modes and report complexity to private browsing. Even if a browser ad- on a few weaknesses found in the Firefox browser. Fi- equately implements private browsing, an extension can nally, we show that many popular browser extensions and completely undermine its privacy guarantees. In Sec- plugins undermine the security of private browsing. We tion 6.1 we show that many widely used extensions un- propose and experiment with a workable policy that lets dermine the goals of private browsing. -

The Potential Harms of the Tor Anonymity Network Cluster Disproportionately in Free Countries

The potential harms of the Tor anonymity network cluster disproportionately in free countries Eric Jardinea,1,2, Andrew M. Lindnerb,1, and Gareth Owensonc,1 aDepartment of Political Science, Virginia Tech, Blacksburg, VA 24061; bDepartment of Sociology, Skidmore College, Saratoga Springs, NY 12866; and cCyber Espion Ltd, Portsmouth PO2 0TP, United Kingdom Edited by Douglas S. Massey, Princeton University, Princeton, NJ, and approved October 23, 2020 (received for review June 10, 2020) The Tor anonymity network allows users to protect their privacy However, substantial evidence has shown that the preponder- and circumvent censorship restrictions but also shields those ance of Onion/Hidden Services traffic connects to illicit sites (7). distributing child abuse content, selling or buying illicit drugs, or With this important caveat in mind, our data also show that the sharing malware online. Using data collected from Tor entry distribution of potentially harmful and beneficial uses is uneven, nodes, we provide an estimation of the proportion of Tor network clustering predominantly in politically free regimes. In particular, users that likely employ the network in putatively good or bad the average rate of likely malicious use of Tor in our data for ways. Overall, on an average country/day, ∼6.7% of Tor network countries coded by Freedom House as “not free” is just 4.8%. In users connect to Onion/Hidden Services that are disproportion- countries coded as “free,” the percentage of users visiting Onion/ ately used for illicit purposes. We also show that the likely balance Hidden Services as a proportion of total daily Tor use is nearly of beneficial and malicious use of Tor is unevenly spread globally twice as much or ∼7.8%. -

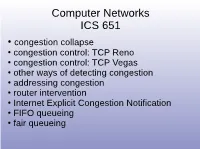

Computer Networks ICS

Computer Networks ICS 651 ● congestion collapse ● congestion control: TCP Reno ● congestion control: TCP Vegas ● other ways of detecting congestion ● addressing congestion ● router intervention ● Internet Explicit Congestion Notification ● FIFO queueing ● fair queueing Router Congestion • assume a fast router • two gigabit-ethernet links receiving lots of outgoing data • one (relatively) slow internet link (10 Mb/s uplink speed) sending the outgoing data • if the two links send more than a combined 10 Mb/s over an extended period, the router buffers will fill up • eventually the router will have to discard data due to congestion: more data being sent than the line can carry Congestion Collapse • assume a fixed timeout • if I have n bytes/second to send, I send them • if they get dropped, I retransmit them (total 2n bytes/second, 3n bytes/second, ...) • when there is congestion, packets get dropped • if everybody retransmits with fixed timeout, the amount of data sent grows, increasing congestion • so some congestion (enough to drop packets) causes more congestion!!!! • eventually, very little data gets through, most is discarded • the network is (nearly) down TCP Reno • exponential backoff: if retransmit timer was t before retransmission, it becomes 2t after retransmission • careful RTT measurements give retransmission as soon as possible, but no sooner • keep a congestion window: • effective window is lesser of: (1) flow control window, and (2) congestion window • congestion window is kept only on the sender, and never communicated between -

Technical and Legal Overview of the Tor Anonymity Network

Emin Çalışkan, Tomáš Minárik, Anna-Maria Osula Technical and Legal Overview of the Tor Anonymity Network Tallinn 2015 This publication is a product of the NATO Cooperative Cyber Defence Centre of Excellence (the Centre). It does not necessarily reflect the policy or the opinion of the Centre or NATO. The Centre may not be held responsible for any loss or harm arising from the use of information contained in this publication and is not responsible for the content of the external sources, including external websites referenced in this publication. Digital or hard copies of this publication may be produced for internal use within NATO and for personal or educational use when for non- profit and non-commercial purpose, provided that copies bear a full citation. www.ccdcoe.org [email protected] 1 Technical and Legal Overview of the Tor Anonymity Network 1. Introduction .................................................................................................................................... 3 2. Tor and Internet Filtering Circumvention ....................................................................................... 4 2.1. Technical Methods .................................................................................................................. 4 2.1.1. Proxy ................................................................................................................................ 4 2.1.2. Tunnelling/Virtual Private Networks ............................................................................... 5