Cpus, Gpus and Accelerators X86 Intel Roadmap for 2019

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Intel® Architecture Instruction Set Extensions and Future Features Programming Reference

Intel® Architecture Instruction Set Extensions and Future Features Programming Reference 319433-037 MAY 2019 Intel technologies features and benefits depend on system configuration and may require enabled hardware, software, or service activation. Learn more at intel.com, or from the OEM or retailer. No computer system can be absolutely secure. Intel does not assume any liability for lost or stolen data or systems or any damages resulting from such losses. You may not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifica- tions. Current characterized errata are available on request. This document contains information on products, services and/or processes in development. All information provided here is subject to change without notice. Intel does not guarantee the availability of these interfaces in any future product. Contact your Intel representative to obtain the latest Intel product specifications and roadmaps. Copies of documents which have an order number and are referenced in this document, or other Intel literature, may be obtained by calling 1- 800-548-4725, or by visiting http://www.intel.com/design/literature.htm. Intel, the Intel logo, Intel Deep Learning Boost, Intel DL Boost, Intel Atom, Intel Core, Intel SpeedStep, MMX, Pentium, VTune, and Xeon are trademarks of Intel Corporation in the U.S. -

GPU Developments 2018

GPU Developments 2018 2018 GPU Developments 2018 © Copyright Jon Peddie Research 2019. All rights reserved. Reproduction in whole or in part is prohibited without written permission from Jon Peddie Research. This report is the property of Jon Peddie Research (JPR) and made available to a restricted number of clients only upon these terms and conditions. Agreement not to copy or disclose. This report and all future reports or other materials provided by JPR pursuant to this subscription (collectively, “Reports”) are protected by: (i) federal copyright, pursuant to the Copyright Act of 1976; and (ii) the nondisclosure provisions set forth immediately following. License, exclusive use, and agreement not to disclose. Reports are the trade secret property exclusively of JPR and are made available to a restricted number of clients, for their exclusive use and only upon the following terms and conditions. JPR grants site-wide license to read and utilize the information in the Reports, exclusively to the initial subscriber to the Reports, its subsidiaries, divisions, and employees (collectively, “Subscriber”). The Reports shall, at all times, be treated by Subscriber as proprietary and confidential documents, for internal use only. Subscriber agrees that it will not reproduce for or share any of the material in the Reports (“Material”) with any entity or individual other than Subscriber (“Shared Third Party”) (collectively, “Share” or “Sharing”), without the advance written permission of JPR. Subscriber shall be liable for any breach of this agreement and shall be subject to cancellation of its subscription to Reports. Without limiting this liability, Subscriber shall be liable for any damages suffered by JPR as a result of any Sharing of any Material, without advance written permission of JPR. -

A Superscalar Out-Of-Order X86 Soft Processor for FPGA

A Superscalar Out-of-Order x86 Soft Processor for FPGA Henry Wong University of Toronto, Intel [email protected] June 5, 2019 Stanford University EE380 1 Hi! ● CPU architect, Intel Hillsboro ● Ph.D., University of Toronto ● Today: x86 OoO processor for FPGA (Ph.D. work) – Motivation – High-level design and results – Microarchitecture details and some circuits 2 FPGA: Field-Programmable Gate Array ● Is a digital circuit (logic gates and wires) ● Is field-programmable (at power-on, not in the fab) ● Pre-fab everything you’ll ever need – 20x area, 20x delay cost – Circuit building blocks are somewhat bigger than logic gates 6-LUT6-LUT 6-LUT6-LUT 3 6-LUT 6-LUT FPGA: Field-Programmable Gate Array ● Is a digital circuit (logic gates and wires) ● Is field-programmable (at power-on, not in the fab) ● Pre-fab everything you’ll ever need – 20x area, 20x delay cost – Circuit building blocks are somewhat bigger than logic gates 6-LUT 6-LUT 6-LUT 6-LUT 4 6-LUT 6-LUT FPGA Soft Processors ● FPGA systems often have software components – Often running on a soft processor ● Need more performance? – Parallel code and hardware accelerators need effort – Less effort if soft processors got faster 5 FPGA Soft Processors ● FPGA systems often have software components – Often running on a soft processor ● Need more performance? – Parallel code and hardware accelerators need effort – Less effort if soft processors got faster 6 FPGA Soft Processors ● FPGA systems often have software components – Often running on a soft processor ● Need more performance? – Parallel -

2021 Proxy Statement

2021 Proxy Statement Notice of Annual Stockholders’ Meeting Investor Engagement To see how we responded to your Who We Met With feedback, see page 41. Total Contacted Total Engaged Director Engaged 50.4% 39.5% 29.7% O/S O/S O/S Intel’s outstanding shares (o/s) caculated as of September 30,2020 We are proud of our long-standing and robust investor engagement program. Our integrated outreach team, led by our Investor Relations group, Corporate Responsibility office, Human Resources office, and Corporate Secretary office, engages proactively with our stockholders, maintaining a two-way, year-round governance calendar as shown in this graphic. During 2020, our engagement program addressed our executive compensation program, corporate governance best practices, our Board’s operation and experience, and our commitment to addressing environmental and social responsibility issues that are critical to our business. Through direct participation in our engagement efforts and through briefings from our engagement teams, our directors are able to monitor developments in corporate governance and social responsibility and benefit from our stockholders’ perspectives on these topics. In consultation with our Board, we seek to thoughtfully adopt and apply developing practices in a manner that best supports our business and our culture. Summer Winter Review annual meeting Incorporate input results to determine from investor meetings Annual appropriate next into annual meeting planning steps, and prioritize and enhance governance Stockholders’ post annual meeting and compensation investor engagement practices and disclosures Meeting focus areas when warranted Fall Spring Hold post- Conduct pre-annual annual meeting meeting investor meetings investor meetings to solicit feedback and report to the to answer questions and Board, Compensation understand investor Committee, and Corporate views on proxy Governance and Nominating matters Committee Additional detail on specific topics and initiatives we have adopted is discussed under “Investor Engagement” on page 40. -

Intel® Quartus® Prime Design Suite Version 18.1 Update Release Notes

Intel® Quartus® Prime Design Suite Version 18.1 Update Release Notes Updated for Intel® Quartus® Prime Design Suite: 18.1.1 Standard Edition Subscribe RN-01080-18.1.1.0 | 2019.04.17 Send Feedback Latest document on the web: PDF | HTML Contents Contents 1. Intel® Quartus® Prime Design Suite Version 18.1 Update Release Notes........................ 3 2. Issues Addressed in Update 1......................................................................................... 4 2.1. Intel Quartus Prime Pro Edition Software.................................................................. 4 2.2. Intel Quartus Prime Standard Edition Software.......................................................... 7 2.3. IP and IP Cores..................................................................................................... 8 2.4. DSP Builder for Intel FPGAs...................................................................................12 2.5. Intel High Level Synthesis Compiler........................................................................12 2.6. Intel FPGA SDK for OpenCL*................................................................................. 13 3. Issues Addressed in Update 2....................................................................................... 15 3.1. Intel Quartus Prime Pro Edition Software.................................................................15 3.2. IP and IP Cores................................................................................................... 15 3.3. Intel FPGA SDK for OpenCL.................................................................................. -

Accurate Throughput Prediction of Basic Blocks on Recent Intel Microarchitectures

Accurate Throughput Prediction of Basic Blocks on Recent Intel Microarchitectures Andreas Abel and Jan Reineke Saarland University Saarland Informatics Campus Saarbrücken, Germany abel, [email protected] ABSTRACT been shown to be relatively low; Chen et al. [12] found that Tools to predict the throughput of basic blocks on a spe- the average error of previous tools compared to measurements cific microarchitecture are useful to optimize software per- is between 9% and 36%. formance and to build optimizing compilers. In recent work, We have identified two main reasons that contribute to several such tools have been proposed. However, the accuracy these discrepancies between measurements and predictions: of their predictions has been shown to be relatively low. (1) the microarchitectural models of previous tools are not In this paper, we identify the most important factors for detailed enough; (2) evaluations were partly based on un- these inaccuracies. To a significant degree these inaccura- suitable benchmarks, and biased and inaccurate throughput cies are due to elements and parameters of the pipelines of measurements. In this paper, we address both of these issues. recent CPUs that are not taken into account by previous tools. In the first part, we develop a pipeline model that is signif- A primary reason for this is that the necessary details are icantly more detailed than those of previous tools. It is appli- often undocumented. In this paper, we build more precise cable to all Intel Core microarchitectures released in the last models of relevant components by reverse engineering us- decade; the differences between these microarchitectures can ing microbenchmarks. -

Intel® Industrial Iot Workshop Security for Industrial Platforms

Intel® Industrial IoT workshop Security for industrial platforms Gopi K. Agrawal Security Architect IOTG Technical Sales & Marketing Intel Corporation Legal © 2018 Intel Corporation No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. Intel disclaims all express and implied warranties, including without limitation, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement, as well as any warranty arising from course of performance, course of dealing, or usage in trade. This document contains information on products, services and/or processes in development. All information provided here is subject to change without notice. Contact your Intel representative to obtain the latest Intel product specifications and roadmaps. Intel technologies' features and benefits depend on system configuration and may require enabled hardware, software or service activation. Performance varies depending on system configuration. No computer system can be absolutely secure. Check with your system manufacturer or retailer or learn more at www.intel.com. Intel, the Intel logo, are trademarks of Intel Corporation in the U.S. and/or other countries. *Other names and brands may be claimed as the property of others. All information provided here is subject to change without notice. Contact your Intel representative to obtain the latest Intel product specifications and roadmaps No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. Intel technologies’ features and benefits depend on system configuration and may require enabled hardware, software or service activation. Performance varies depending on system configuration. No computer system can be absolutely secure. -

Demystifying Internet of Things Security Successful Iot Device/Edge and Platform Security Deployment — Sunil Cheruvu Anil Kumar Ned Smith David M

Demystifying Internet of Things Security Successful IoT Device/Edge and Platform Security Deployment — Sunil Cheruvu Anil Kumar Ned Smith David M. Wheeler Demystifying Internet of Things Security Successful IoT Device/Edge and Platform Security Deployment Sunil Cheruvu Anil Kumar Ned Smith David M. Wheeler Demystifying Internet of Things Security: Successful IoT Device/Edge and Platform Security Deployment Sunil Cheruvu Anil Kumar Chandler, AZ, USA Chandler, AZ, USA Ned Smith David M. Wheeler Beaverton, OR, USA Gilbert, AZ, USA ISBN-13 (pbk): 978-1-4842-2895-1 ISBN-13 (electronic): 978-1-4842-2896-8 https://doi.org/10.1007/978-1-4842-2896-8 Copyright © 2020 by The Editor(s) (if applicable) and The Author(s) This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. Open Access This book is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made. The images or other third party material in this book are included in the book’s Creative Commons license, unless indicated otherwise in a credit line to the material. -

Lecture Note 7. IA: History and Features

System Programming Lecture Note 7. IA: History and Features October 30, 2020 Jongmoo Choi Dept. of Software Dankook University http://embedded.dankook.ac.kr/~choijm (Copyright © 2020 by Jongmoo Choi, All Rights Reserved. Distribution requires permission) Objectives Discuss Issues on ISA (Instruction Set Architecture) ü Opcode and operand addressing modes Apprehend how ISA affects system program ü Context switch, memory alignment, stack overflow protection Describe the history of IA (Intel Architecture) Grasp the key technologies in recent IA ü Pipeline and Moore’s law Refer to Chapter 3, 4 in the CSAPP and Intel SW Developer Manual 2 Issues on ISA (1/2) Consideration on ISA (Instruction Set Architecture) asm_sum: addl $1, %ecx movl -4(%ebx, %ebp, 4), %eax call func1 leave ü opcode issues § how many? (add vs. inc è RISC vs. CISC) § multi functions? (SISD vs. SIMD vs. MIMD …) ü operand issues § fixed vs. variable operands f bits n bits n bits n bits § fixed: how many? opcode operand 1 operand 2 operand 3 § operand addressing modes f bits n bits n bits ü performance issues opcode operand 1 operand 2 § pipeline f bits n bits § superscalar opcode operand 1 § multicore 3 Issues on ISA (2/2) Features of IA (Intel Architecture) ü Basically CISC (Complex Instruction Set Computing) § Variable length instruction § Variable number of operands (0~3) § Diverse operand addressing modes § Stack based function call § Supporting SIMD (Single Instruction Multiple Data) ü Try to take advantage of RISC (Reduced Instruction Set Computing) § Micro-operations -

EARNINGS Presentation Disclosures

Q2 2020 EARNINGS Presentation Disclosures This presentation contains non-GAAP financial measures. Earnings per share (EPS), gross margin, and operating margin are presented on a non- GAAP basis unless otherwise indicated, and this presentation also includes a non-GAAP free cash flow (FCF) measure. The Appendix provides a reconciliation of these measures to the most directly comparable GAAP financial measure. The non-GAAP financial measures disclosed by Intel should not be considered a substitute for, or superior to, the financial measures prepared in accordance with GAAP. Please refer to “Explanation of Non-GAAP Measures” in Intel's quarterly earnings release for a detailed explanation of the adjustments made to the comparable GAAP measures, the ways management uses the non-GAAP measures, and the reasons why management believes the non-GAAP measures provide investors with useful supplemental information. Statements in this presentation that refer to business outlook, future plans, and expectations are forward-looking statements that involve a number of risks and uncertainties. Words such as "anticipate," "expect," "intend," "goals," "plans," "believe," "seek," "estimate," "continue,“ “committed,” “on-track,” ”positioned,” “launching,” "may," "will," “would,” "should," “could,” and variations of such words and similar expressions are intended to identify such forward-looking statements. Statements that refer to or are based on estimates, forecasts, projections, uncertain events or assumptions, including statements relating to total addressable market (TAM) or market opportunity, future products and technology and the expected availability and benefits of such products and technology, including our 10nm and 7nm process technologies, products, and product designs, and anticipated trends in our businesses or the markets relevant to them, also identify forward-looking statements. -

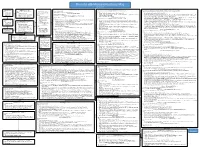

The Intel X86 Microarchitectures Map Version 3.4

The Intel x86 Microarchitectures Map Version 3.4 Willamette (2000, 180 nm) Skylake (2015, 14 nm to 14 nm++) 8086 (1978, 3 µm) 80386 (1985, 1.5 to 1 µm) P6 (1995, 0.50 to 0.35 μm) Series: Alternative Names: i686 Alternative Names: NetBurst, Pentium 4, Pentium IV, P4, , Pentium 4 180 nm Alternative Names: SKL (Desktop and Mobile), SKX (Server) (Note : all U and Y processors are MCPs) Alternative Names: iAPX 386, 386, i386 P5 (1993, 0.80 to 0.35 μm) • 16-bit data bus: Series: Pentium Pro (used in desktops and servers) Series: Series: Series: Alternative Names: Pentium, 8086 (iAPX 86) Variant: Klamath (1997, 0.35 μm) • Desktop: Pentium 4 1.x (except those with a suffix), Pentium 4 2.0 • Desktop: Desktop 6th Generation Core i5 (i5-6xxx, S-H, 14 nm) • Desktop/Server: i386DX 80586, 586, i586 • 8-bit data bus: Alternative Names: Pentium II, PII • Desktop lower-performance: Celeron 1.x (where x is a single digit between 5-9, except Celeron 1.6 SL7EZ, • Desktop higher-performance: Desktop 6th Generation Core i7 (i7-6xxx, S-H , 14 nm), Desktop 7th Generation Core i7 X (i7-7xxxX, X, 14 nm+), • Desktop lower-performance: i386SX Series: 8088 (iAPX 88) Series: Pentium II 233/266/300 steppings C0 and C1 (Klamath, used in desktops) Willamette-128), Celeron 2.0 SL68F Desktop 7th Generation Core i9 X (i9-7xxxXE, i9-7xxxX, X, 14 nm+), Desktop 9th Generation Core i7 X (i7-9xxxX, X, 14 nm++), Desktop 9th • Mobile: i386SL, 80376, i386EX, • Desktop/Server: P5, P54C New instructions: Deschutes (1998, 0.25 to 0.18 μm) • Server: Foster (Xeon), Foster-MP (Xeon) -

Intel® Tiger Lake-UP3 Solution

Intel® Tiger Lake-UP3 Solution ® Intel Tiger Lake-UP3 Platform Overview Graphics Media, and Display Security and Manageability ● New Xe (Gen12) Gfx Engine with up to 96 Eus ● Intel® Total Memory Encryption (Intel® TME) ● 4 independent display units (4 x 4K or 2 x 8K) * Hardware based encryption to protect entire memory contents ● Image processing unit IPU6 from physical attacks * Up to 27MP image capture ● Intel® AES-NI * Up to 4K@60fps video performance * A new, hardware based AES-NI instruction set to protect * Support for 4 concurrent streams private keys from malware * VTIO and RGB-IR for facial recognitionn * Keys are no longer exposed and handlers are utilized to perform encrypt/decrypt operations ● Control-Flow Enforcement Technology AI Experience Enhanced Features ● Vector Neural Network Instruction (VNNI) improves ● Up to 4 cores inferencing workload performance ● In-Band ECC available ● Common industry wide AI frameworks optimized on Tiger ● PCIe 4.0 (off CPU complex), 12 HSIO lanes (off PCH complex) Lake architecture (via OpenVINO™) ● Improved Thunderbolt™ data performance ● Intel® Deep Learning Boost ● Integrated Type C subsystem with for USB4 compliance ● AI/DL Instruction Sets including VNNI and CV/AI applications ® Intel Tiger Lake-UP3 Platform Block Diagram eDP DDR4/LPDDR4x (2ch) www.ieiworld.com 2 DDI PCIe 4.0 (1x4) TIGER LAKE-UP3 USB 3.2 Gen2 Thunderbolt™ 3 USB 3.2 Gen1 Hi-Speed USB 2.0 PCIe 3.0 CERTIFIED USB ™ SATA 6Gb/s Intel® Wireless-AC TIGER LAKE Intel® LAN PHY 2.5GbE TSN MAC ® PCH-LP Intel About IEI-2021-V10 ®