Towards Securing the Internet of Things with QUIC

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Ipv6-Ipsec And

IPSec and SSL Virtual Private Networks ITU/APNIC/MICT IPv6 Security Workshop 23rd – 27th May 2016 Bangkok Last updated 29 June 2014 1 Acknowledgment p Content sourced from n Merike Kaeo of Double Shot Security n Contact: [email protected] Virtual Private Networks p Creates a secure tunnel over a public network p Any VPN is not automagically secure n You need to add security functionality to create secure VPNs n That means using firewalls for access control n And probably IPsec or SSL/TLS for confidentiality and data origin authentication 3 VPN Protocols p IPsec (Internet Protocol Security) n Open standard for VPN implementation n Operates on the network layer Other VPN Implementations p MPLS VPN n Used for large and small enterprises n Pseudowire, VPLS, VPRN p GRE Tunnel n Packet encapsulation protocol developed by Cisco n Not encrypted n Implemented with IPsec p L2TP IPsec n Uses L2TP protocol n Usually implemented along with IPsec n IPsec provides the secure channel, while L2TP provides the tunnel What is IPSec? Internet IPSec p IETF standard that enables encrypted communication between peers: n Consists of open standards for securing private communications n Network layer encryption ensuring data confidentiality, integrity, and authentication n Scales from small to very large networks What Does IPsec Provide ? p Confidentiality….many algorithms to choose from p Data integrity and source authentication n Data “signed” by sender and “signature” verified by the recipient n Modification of data can be detected by signature “verification” -

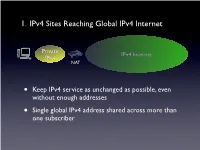

1. Ipv4 Sites Reaching Global Ipv4 Internet

1. IPv4 Sites Reaching Global IPv4 Internet Private IPv4 Internet IPv4 NAT • Keep IPv4 service as unchanged as possible, even without enough addresses • Single global IPv4 address shared across more than one subscriber SP IPv6 Network Private Tunnel for IPv4 (public, private, port-limited, etc....) IPv4 Internet IPv4 • Scenario #2 - Service Providers Running out of Private IPv4 space • IPv4 / IPv6 encapsulations/tunnels • Tunnels setup by DHCP, Routing, etc. between a GW and Router • Wherever the NAT lands, it is important that the user keeps control of it • Provides a path to delivering IPv6 SP IPv6 Network Tunnel for IPv4 (public, private, port-limited, etc....) IPv4 Internet • Scenario #3a “Wireless Greenfield” • IPv4 / IPv6 encapsulations/tunnels • Tunnels setup between a host and a Router • IPv4 binding for host applications, transport over IPv6 • Wherever the NAT lands, it is important that the user keeps control of it 3 - 5 Translation Options IPv6 Internet IPv4 Internet IPv6 IPv4 My IPv6 Network IPv6 Internet IPv4 Internet • “Scenario #3” • NAT64/DNS64.... - Stateful, DNSSEC Challenges, DNS64 location, etc. My IPv6 Network IPv6 Internet IPv4 Internet • “Scenario #5” • IVI - NAT-PT..... Expose only certain IPv6 servers, etc. MY IPv4 Network IPv6 Internet IPv4 Internet • “Scenario #4” • NAT64 - 1:1, Stateless, DNSSEC OK, no DNS64 MY IPv4 Network IPv6 Internet IPv4 Internet • Already solved by existing transition mechanisms?? (teredo, etc). Scenarios 1 - 5 1. IPv4 Sites Reaching Global IPv4 Internet Private IPv4 Internet IPv4 NAT • Keep IPv4 service as unchanged as possible, even without enough addresses • Single global IPv4 address shared across more than one subscriber 2. Service Providers Running out of Private IPv4 space ISP Private IPv4 Private Network IPv4 IPv4 Internet • Service Providers with large, privately addressed, IPv4 networks • Organic growth plus pressure to free global addresses for customer use contribute to the problem • The SP Private networks in question generally do not need to reach the Internet at large 3. -

Internet Protocol Suite

InternetInternet ProtocolProtocol SuiteSuite Srinidhi Varadarajan InternetInternet ProtocolProtocol Suite:Suite: TransportTransport • TCP: Transmission Control Protocol • Byte stream transfer • Reliable, connection-oriented service • Point-to-point (one-to-one) service only • UDP: User Datagram Protocol • Unreliable (“best effort”) datagram service • Point-to-point, multicast (one-to-many), and • broadcast (one-to-all) InternetInternet ProtocolProtocol Suite:Suite: NetworkNetwork z IP: Internet Protocol – Unreliable service – Performs routing – Supported by routing protocols, • e.g. RIP, IS-IS, • OSPF, IGP, and BGP z ICMP: Internet Control Message Protocol – Used by IP (primarily) to exchange error and control messages with other nodes z IGMP: Internet Group Management Protocol – Used for controlling multicast (one-to-many transmission) for UDP datagrams InternetInternet ProtocolProtocol Suite:Suite: DataData LinkLink z ARP: Address Resolution Protocol – Translates from an IP (network) address to a network interface (hardware) address, e.g. IP address-to-Ethernet address or IP address-to- FDDI address z RARP: Reverse Address Resolution Protocol – Translates from a network interface (hardware) address to an IP (network) address AddressAddress ResolutionResolution ProtocolProtocol (ARP)(ARP) ARP Query What is the Ethernet Address of 130.245.20.2 Ethernet ARP Response IP Source 0A:03:23:65:09:FB IP Destination IP: 130.245.20.1 IP: 130.245.20.2 Ethernet: 0A:03:21:60:09:FA Ethernet: 0A:03:23:65:09:FB z Maps IP addresses to Ethernet Addresses -

The Internet Protocol, Version 4 (Ipv4)

Today’s Lecture I. IPv4 Overview The Internet Protocol, II. IP Fragmentation and Reassembly Version 4 (IPv4) III. IP and Routing IV. IPv4 Options Internet Protocols CSC / ECE 573 Fall, 2005 N.C. State University copyright 2005 Douglas S. Reeves 1 copyright 2005 Douglas S. Reeves 2 Internet Protocol v4 (RFC791) Functions • A universal intermediate layer • Routing IPv4 Overview • Fragmentation and reassembly copyright 2005 Douglas S. Reeves 3 copyright 2005 Douglas S. Reeves 4 “IP over Everything, Everything Over IP” IP = Basic Delivery Service • Everything over IP • IP over everything • Connectionless delivery simplifies router design – TCP, UDP – Dialup and operation – Appletalk – ISDN – Netbios • Unreliable, best-effort delivery. Packets may be… – SCSI – X.25 – ATM – Ethernet – lost (discarded) – X.25 – Wi-Fi – duplicated – SNA – FDDI – reordered – Sonet – ATM – Fibre Channel – Sonet – and/or corrupted – Frame Relay… – … – Remote Direct Memory Access – Ethernet • Even IP over IP! copyright 2005 Douglas S. Reeves 5 copyright 2005 Douglas S. Reeves 6 1 IPv4 Datagram Format IPv4 Header Contents 0 4 8 16 31 •Version (4 bits) header type of service • Functions version total length (in bytes) length (x4) prec | D T R C 0 •Header Length x4 (4) flags identification fragment offset (x8) 1. universal 0 DF MF s •Type of Service (8) e time-to-live (next) protocol t intermediate layer header checksum y b (hop count) identifier •Total Length (16) 0 2 2. routing source IP address •Identification (16) 3. fragmentation and destination IP address reassembly •Flags (3) s •Fragment Offset ×8 (13) e t 4. Options y IP options (if any) b •Time-to-Live (8) 0 4 ≤ •Protocol Identifier (8) s e t •Header Checksum (16) y b payload 5 •Source IP Address (32) 1 5 5 6 •Destination IP Address (32) ≤ •IP Options (≤ 320) copyright 2005 Douglas S. -

SOCKS Protocol Version 6

SOCKS Protocol Version 6 draft-olteanu-intarea-socks-6-08 Vladimir Olteanu IETF 106 What’s new ● DNS provided by SOCKS ● Options for Happy Eyeballs at the proxy Clients need DNS-like features ● A and AAAA – LD_PRELOAD for non-SOCKS-aware apps: gedaddrinfo() separate from connect() – Happy Eyeballs: need to do queries separately ● TXT – ESNI ● MX, Service Binding, etc. – <Insert future use case here> Providing DNS-like features ● Individual SOCKS options (removed in -08) – Have to keep up with use cases – Duplicate DNS functionality – Until -07: A, AAAA, PTR ● Having the client use DNS – Hard to convey policies: resolver IPs, plaintext / over TLS / over HTTPS etc., maybe credentials, etc. – Provide a DNS proxy Why not separate DNS from SOCKS? Client Proxy Server HTTP/SOCKS :1080 HTTP :80 DNS :53 Why not separate DNS from SOCKS? Client Proxy Server HTTP/SOCKS :1080 HTTP :80 DNS :53 WHICH TOR CIRCUIT? ● Need context for DNS query – Otherwise: privacy leaks, suboptimal CDN use DNS provided by SOCKS ● Clients make CONNECT request to 0.0.0.0:53 – Proxy needn’t provide a valid bind address ● Plaintext DNS over SOCKS (opt. over TLS) – TCP by default: SOCKS + UDP more cumbersome to use ● Implementation in Sixtysocks – Run separate DNS proxy locally – Translate 0.0.0.0:53 to 127.0.0.1:53 Happy Eyeballs ● RFC 8305: resolve and connect to a server using both IPv4 and IPv6, keep only one connection – Failover from IPv6 to IPv4 – Better responsiveness if one is faster ● Clients can implement Happy Eyeballs locally – Have DNS + CONNECT Happy Eyeballs: -

61A Lecture 35 Distributed Computing Internet Protocol Transmission

Announcements • Homework 9 (6 pts) due Wednesday 11/26 @ 11:59pm ! Homework Party Monday 6pm-8pm in 2050 VLSB • Guest in live lecture, TA Soumya Basu, on Monday 11/24 • Optional Scheme recursive art contest due Monday 12/1 @ 11:59pm 61A Lecture 35 • No lecture on Wednesday 11/26 (turkey) • No lab on Tuesday 11/25 & Wednesday 11/26 • The week of 12/1: Homework 10 due Wednesday 12/3 & Quiz 3 due Thursday 12/4 on SQL Monday, November 24 ! The lab on SQL (12/2 & 12/3) will be an excellent place to get homework help 2 Distributed Computing A distributed computing application consists of multiple programs running on multiple computers that together coordinate to perform some task. • Computation is performed in parallel by many computers. • Information can be restricted to certain computers. • Redundancy and geographic diversity improve reliability. Distributed Computing Characteristics of distributed computing: • Computers are independent — they do not share memory. • Coordination is enabled by messages passed across a network. • Individual programs have differentiating roles. Distributed computing for large-scale data processing: • Databases respond to queries over a network. • Data sets can be partitioned across multiple machines (next lecture). 4 Network Messages Computers communicate via messages: sequences of bytes transmitted over a network. Messages can serve many purposes: • Send data to another computer • Request data from another computer • Instruct a program to call a function on some arguments. Internet Protocol • Transfer a program to be executed by another computer. Messages conform to a message protocol adopted by both the sender (to encode the message) & receiver (to interpret the message). -

Domain Name System 1 Domain Name System

Domain Name System 1 Domain Name System The Domain Name System (DNS) is a hierarchical distributed naming system for computers, services, or any resource connected to the Internet or a private network. It associates various information with domain names assigned to each of the participating entities. A Domain Name Service translates queries for domain names (which are easier to understand and utilize when accessing the internet) into IP addresses for the purpose of locating computer services and devices worldwide. An often-used analogy to explain the Domain Name System is that it serves as the phone book for the Internet by translating human-friendly computer hostnames into IP addresses. For example, the domain name www.example.com translates to the addresses 192.0.43.10 (IPv4) and 2620:0:2d0:200::10 (IPv6). The Domain Name System makes it possible to assign domain names to groups of Internet resources and users in a meaningful way, independent of each entity's physical location. Because of this, World Wide Web (WWW) hyperlinks and Internet contact information can remain consistent and constant even if the current Internet routing arrangements change or the participant uses a mobile device. Internet domain names are easier to remember than IP addresses such as 208.77.188.166 (IPv4) or 2001:db8:1f70::999:de8:7648:6e8 (IPv6). Users take advantage of this when they recite meaningful Uniform Resource Locators (URLs) and e-mail addresses without having to know how the computer actually locates them. The Domain Name System distributes the responsibility of assigning domain names and mapping those names to IP addresses by designating authoritative name servers for each domain. -

Nist Sp 800-77 Rev. 1 Guide to Ipsec Vpns

NIST Special Publication 800-77 Revision 1 Guide to IPsec VPNs Elaine Barker Quynh Dang Sheila Frankel Karen Scarfone Paul Wouters This publication is available free of charge from: https://doi.org/10.6028/NIST.SP.800-77r1 C O M P U T E R S E C U R I T Y NIST Special Publication 800-77 Revision 1 Guide to IPsec VPNs Elaine Barker Quynh Dang Sheila Frankel* Computer Security Division Information Technology Laboratory Karen Scarfone Scarfone Cybersecurity Clifton, VA Paul Wouters Red Hat Toronto, ON, Canada *Former employee; all work for this publication was done while at NIST This publication is available free of charge from: https://doi.org/10.6028/NIST.SP.800-77r1 June 2020 U.S. Department of Commerce Wilbur L. Ross, Jr., Secretary National Institute of Standards and Technology Walter Copan, NIST Director and Under Secretary of Commerce for Standards and Technology Authority This publication has been developed by NIST in accordance with its statutory responsibilities under the Federal Information Security Modernization Act (FISMA) of 2014, 44 U.S.C. § 3551 et seq., Public Law (P.L.) 113-283. NIST is responsible for developing information security standards and guidelines, including minimum requirements for federal information systems, but such standards and guidelines shall not apply to national security systems without the express approval of appropriate federal officials exercising policy authority over such systems. This guideline is consistent with the requirements of the Office of Management and Budget (OMB) Circular A-130. Nothing in this publication should be taken to contradict the standards and guidelines made mandatory and binding on federal agencies by the Secretary of Commerce under statutory authority. -

Guidelines for the Secure Deployment of Ipv6

Special Publication 800-119 Guidelines for the Secure Deployment of IPv6 Recommendations of the National Institute of Standards and Technology Sheila Frankel Richard Graveman John Pearce Mark Rooks NIST Special Publication 800-119 Guidelines for the Secure Deployment of IPv6 Recommendations of the National Institute of Standards and Technology Sheila Frankel Richard Graveman John Pearce Mark Rooks C O M P U T E R S E C U R I T Y Computer Security Division Information Technology Laboratory National Institute of Standards and Technology Gaithersburg, MD 20899-8930 December 2010 U.S. Department of Commerce Gary Locke, Secretary National Institute of Standards and Technology Dr. Patrick D. Gallagher, Director GUIDELINES FOR THE SECURE DEPLOYMENT OF IPV6 Reports on Computer Systems Technology The Information Technology Laboratory (ITL) at the National Institute of Standards and Technology (NIST) promotes the U.S. economy and public welfare by providing technical leadership for the nation’s measurement and standards infrastructure. ITL develops tests, test methods, reference data, proof of concept implementations, and technical analysis to advance the development and productive use of information technology. ITL’s responsibilities include the development of technical, physical, administrative, and management standards and guidelines for the cost-effective security and privacy of sensitive unclassified information in Federal computer systems. This Special Publication 800-series reports on ITL’s research, guidance, and outreach efforts in computer security and its collaborative activities with industry, government, and academic organizations. National Institute of Standards and Technology Special Publication 800-119 Natl. Inst. Stand. Technol. Spec. Publ. 800-119, 188 pages (Dec. 2010) Certain commercial entities, equipment, or materials may be identified in this document in order to describe an experimental procedure or concept adequately. -

Internet Address Space: Economic Considerations in the Management of Ipv4”, OECD Digital Economy Papers, No

Please cite this paper as: OECD (2008-06-18), “Internet Address Space: Economic Considerations in the Management of IPv4”, OECD Digital Economy Papers, No. 145, OECD Publishing, Paris. http://dx.doi.org/10.1787/230461618475 OECD Digital Economy Papers No. 145 Internet Address Space ECONOMIC CONSIDERATIONS IN THE MANAGEMENT OF IPV4 OECD DSTI/ICCP(2007)20/FINAL FOREWORD The report provides an analysis of economic considerations associated with the transition from IPv4 to IPv6. It provides background analysis supporting the forthcoming ICCP-organised Ministerial-level meeting on ―The Future of the Internet Economy‖, to take place in Seoul, Korea on 17-18 June 2008. This report was prepared by Ms. Karine Perset of the OECD‘s Directorate for Science Technology and Industry. It was declassified by the ICCP Committee at its 54th Session on 5-7 March 2008. It is published under the responsibility of the Secretary-General of the OECD. This paper has greatly benefited from the expert input of Geoff Huston from APNIC, David Conrad from the IANA, Patrick Grossetête from CISCO Systems, Bill Woodcock from Packet Clearing House, Marcelo Bagnulo Braun from the University of Madrid, Alain Durand from Comcast, and Vincent Bataille from Mulot Déclic, although interpretations, unless otherwise stated, are those of the author. 2 DSTI/ICCP(2007)20/FINAL TABLE OF CONTENTS FOREWORD ................................................................................................................................................... 2 MAIN POINTS .............................................................................................................................................. -

Guidelines on Firewalls and Firewall Policy

Special Publication 800-41 Revision 1 Guidelines on Firewalls and Firewall Policy Recommendations of the National Institute of Standards and Technology Karen Scarfone Paul Hoffman NIST Special Publication 800-41 Guidelines on Firewalls and Firewall Revision 1 Policy Recommendations of the National Institute of Standards and Technology Karen Scarfone Paul Hoffman C O M P U T E R S E C U R I T Y Computer Security Division Information Technology Laboratory National Institute of Standards and Technology Gaithersburg, MD 20899-8930 September 2009 U.S. Department of Commerce Gary Locke, Secretary National Institute of Standards and Technology Patrick D. Gallagher, Deputy Director GUIDELINES ON FIREWALLS AND FIREWALL POLICY Reports on Computer Systems Technology The Information Technology Laboratory (ITL) at the National Institute of Standards and Technology (NIST) promotes the U.S. economy and public welfare by providing technical leadership for the nation’s measurement and standards infrastructure. ITL develops tests, test methods, reference data, proof of concept implementations, and technical analysis to advance the development and productive use of information technology. ITL’s responsibilities include the development of technical, physical, administrative, and management standards and guidelines for the cost-effective security and privacy of sensitive unclassified information in Federal computer systems. This Special Publication 800-series reports on ITL’s research, guidance, and outreach efforts in computer security and its collaborative activities with industry, government, and academic organizations. National Institute of Standards and Technology Special Publication 800-41 Revision 1 Natl. Inst. Stand. Technol. Spec. Publ. 800-41 rev1, 48 pages (Sep. 2009) Certain commercial entities, equipment, or materials may be identified in this document in order to describe an experimental procedure or concept adequately. -

Configuring Ipv4 for Gigabit Ethernet Interfaces

Send documentation comments to [email protected] CHAPTER 46 Configuring IPv4 for Gigabit Ethernet Interfaces Cisco MDS 9000 Family supports IP version 4 (IPv4) on Gigabit Ethernet interfaces. This chapter describes how to configure IPv4 addresses and other IPv4 features. This chapter includes the following topics: • About IPv4, page 46-1 • Basic Gigabit Ethernet Configuration for IPv4, page 46-2 • Verifying Gigabit Ethernet Connectivity, page 46-4 • VLANs, page 46-5 • Configuring Static IPv4 Routing, page 46-7 • IPv4-ACLs, page 46-7 • ARP Cache, page 46-8 • Displaying IPv4 Statistics, page 46-9 • Default Settings, page 46-10 About IPv4 Both FCIP and iSCSI rely on TCP/IP for network connectivity. On each IPS module or MPS-14/2 module, connectivity is provided in the form of Gigabit Ethernet interfaces that are appropriately configured. This section covers the steps required to configure IP for subsequent use by FCIP and iSCSI. Note For information about configuring FCIP, see Chapter 41, “Configuring FCIP.” For information about configuring iSCSI, see Chapter 43, “Configuring iSCSI.” A new port mode, called IPS, is defined for Gigabit Ethernet ports on each IPS module or MPS-14/2 module. IP storage ports are implicitly set to IPS mode, so it can only be used to perform iSCSI and FCIP storage functions. IP storage ports do not bridge Ethernet frames or route other IP packets. Each IPS port represents a single virtual Fibre Channel host in the Fibre Channel SAN. All the iSCSI hosts connected to this IPS port are merged and multiplexed through the single Fibre Channel host.