System Performance

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

FreeNAS® 11.0 User Guide

FreeNAS® 11.0 User Guide, June 2017 Edition. FreeNAS® is © 2011-2017 iXsystems. FreeNAS® and the FreeNAS® logo are registered trademarks of iXsystems. FreeBSD® is a registered trademark of the FreeBSD Foundation. Written by users of the FreeNAS® network-attached storage operating system. Version 11.0. Copyright © 2011-2017 iXsystems (https://www.ixsystems.com/). Contents: Welcome; Typographic Conventions; 1 Introduction; 1.1 New Features in 11.0; 1.2 Hardware Recommendations; 1.2.1 RAM; 1.2.2 The Operating System Device; 1.2.3 Storage Disks and Controllers; 1.2.4 Network Interfaces; 1.3 Getting Started with ZFS; 2 Installing and Upgrading; 2.1 Getting FreeNAS®; 2.2 Preparing the Media; 2.2.1 On FreeBSD or Linux; 2.2.2 On Windows; 2.2.3 On OS X; 2.3 Performing the Installation; 2.4 Installation Troubleshooting; 2.5 Upgrading; 2.5.1 Caveats: -

Web VMStat

FOSSpicks: Sparkling gems and new releases from the world of Free and Open Source Software. Mike Saunders has spent a decade mining the internet for free software treasures. Here’s the result of his latest haul… Shiny statistics in a browser: Web VMStat. Many distros, especially those targeted at advanced users, ship with shiny system monitoring tools on the desktop. Conky is one such tool, while GKrellM was all the rage in the last decade, and they are genuinely useful for keeping tabs on your boxes, especially when you’re an admin in charge of various servers. Now, pretty much all major distros include a useful command line tool for monitoring system resource usage: vmstat. Enter vmstat 1 in a terminal window and you’ll see a regularly updating (once per second) bunch of statistics, showing CPU usage, free RAM, swap usage and so forth. Here’s where Web VMStat comes in. It’s a system monitor that runs an HTTP server, so you can connect to it via a web browser and see fancy CSS-driven charts. Before you install it, you’ll need to get the websocketd utility, which you can find at https://github.com/joewalnes/websocketd. Helpfully, the developer has made pre-compiled executables available, so you can just grab the 32-bit or 64-bit tarball, extract it and there you have it: websocketd. (Of course, if you’re especially security conscious, you can compile it from its source code.) Next, clone the Web VMStat Git repository (or grab the Zip file and … copy the aforementioned websocketd into the same place. Then just enter: ./run [Screenshot caption: Here’s the standard output for vmstat – not very interesting, right?] -

Educational Directory, 1

DEPARTMENT OF THE INTERIOR, BUREAU OF EDUCATION. BULLETIN, 1922, No. 50. EDUCATIONAL DIRECTORY 1922-1923. WASHINGTON, GOVERNMENT PRINTING OFFICE, 1923. ADDITIONAL COPIES OF THIS PUBLICATION MAY BE PROCURED FROM THE SUPERINTENDENT OF DOCUMENTS, GOVERNMENT PRINTING OFFICE, WASHINGTON, AT 115 CENTS PER COPY. PURCHASER AGREES NOT TO RESELL OR DISTRIBUTE THIS COPY FOR PROFIT. CONTENTS. I. The United States Bureau of Education. II. Principal State school officers. III. County and other local superintendents of schools. IV. Superintendents of public schools in cities and towns. V. Presidents of universities and colleges. VI. Presidents of junior [colleges]. VII. Heads of departments of education. VIII. Presidents or deans of schools of theology. IX. Presidents or deans of schools of law. X. Presidents or deans of schools of medicine. XI. Presidents or deans of schools of dentistry. XII. Presidents or deans of schools of pharmacy. XIII. Presidents of schools of osteopathy. XIV. Presidents or deans of schools of veterinary medicine. XV. Presidents, etc., of institutions for the training of teachers: I. Presidents of teachers' colleges. II. Principals of normal training schools: 1. Public normal schools. 2. Private normal schools. III. Directors of kindergarten training in colleges, normal schools, and kindergarten training schools. XVI. Directors of summer schools. XVII. Librarians of public and society libraries. XVIII. Executive officers of State library … XIX. Directors of library schools. XX. Educational boards and foundations. XXI. Church educational boards and societies. -

Top 15 ERP Software Vendors – 2010

Top 15 ERP Software Vendors – 2010 Profiles of the Leading ERP Vendors Find the best ERP system for your company. For more information visit Business-Software.com/ERP. About ERP Software Enterprise resource planning (ERP) is not a new concept. It was introduced more than 40 years ago, when the first ERP system was created to improve inventory control and management at manufacturing firms. Throughout the 70’s and 80’s, as the number of companies deploying ERP increased, its scope expanded quite a bit to include various production and materials management functions, although it was designed primarily for use in manufacturing plants. In the 1990’s, vendors came to realize that other types of business could benefit from ERP, and that in order for a business to achieve true organizational efficiency, it needed to link all its internal business processes in a cohesive and coordinated way. As a result, ERP was transformed into a broad-reaching environment that encompassed all activities across the back office of a company. What is ERP? An ERP system combines methodologies with software and hardware components to integrate numerous critical back-office functions across a company. Made up of a series of “modules”, or applications that are seamlessly linked together through a common database, an ERP system enables various departments or operating units such as Accounting and Finance, Human Resources, Production, and Fulfillment and Distribution to coordinate activities, share information, and collaborate. Key Benefits for Your Company ERP systems are designed to enhance all aspects of key operations across a company’s entire back-office – from planning through execution, management, and control. -

Avoiding the Top 10 Software Security Design Flaws

AVOIDING THE TOP 10 SOFTWARE SECURITY DESIGN FLAWS. Iván Arce, Kathleen Clark-Fisher, Neil Daswani, Jim DelGrosso, Danny Dhillon, Christoph Kern, Tadayoshi Kohno, Carl Landwehr, Gary McGraw, Brook Schoenfield, Margo Seltzer, Diomidis Spinellis, Izar Tarandach, and Jacob West. CONTENTS: Introduction; Mission Statement; Preamble; Earn or Give, but Never Assume, Trust; Use an Authentication Mechanism that Cannot be Bypassed or Tampered With; Authorize after You Authenticate; Strictly Separate Data and Control Instructions, and Never Process Control Instructions Received from Untrusted Sources; Define an Approach that Ensures all Data are Explicitly Validated; Use Cryptography Correctly -

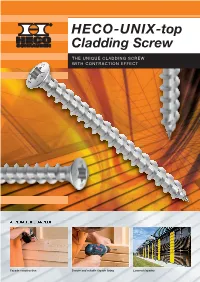

HECO-UNIX-top Cladding Screw

HECO-UNIX-top Cladding Screw: THE UNIQUE CLADDING SCREW WITH CONTRACTION EFFECT. APPLICATION EXAMPLES: Façade construction; Secure and reliable façade fixing; Louvred façades. 1. The variable pitch of the HECO-UNIX full thread takes hold. 2. The smaller thread pitch means that the boards are clamped firmly together. 3. Gap free axial fixing of the timber board via the HECO-UNIX full thread. PRODUCT CHARACTERISTICS: HECO-UNIX-top full thread; Contraction effect thanks to the full thread with variable thread pitch; Axial fixing of component; Reduced spreading effect thanks to the HECO-TOPIX® tip; Raised countersunk head. The HECO-UNIX-top Cladding Screw with contraction effect: Timber façades are becoming increasingly popular in both new builds and renovations. This application places high demands on fastenings. The façade must be securely fixed to the sub-structure and the fixings should be invisible or attractive to look at. In addition, the structure must be permanently sound if it is constantly exposed to the weather. The HECO-UNIX-top façade screw is the perfect solution to all of these requirements. … into the timber. The façade is secured axially via the thread, which increases the pull-out strength. This results in fewer fastening points and an ultimately more economical façade construction. Thanks to the full thread, the sole function of the head is to fit the screw drive. As such, the HECO-UNIX-top façade screw has a small raised head, which allows simple, concealed installation preventing any water penetration through the screw. The screws are made of stainless steel A2, which safely eliminates the -

TigerSHARC DSP Hardware Specification, Revision 1.0.2, Direct Memory Access

7 DIRECT MEMORY ACCESS. Overview: Direct Memory Access (DMA) is a mechanism for transferring data without the core being involved. The TigerSHARC® DSP’s on-chip DMA controller relieves the core processor of the burden of moving data between internal memory and an external device, external memory, or between link ports and internal or external memory. The fully-integrated DMA controller allows the TigerSHARC® DSP core processor, or an external device, to specify data transfer operations and return to normal processing while the DMA controller carries out the data transfers in the background. The TigerSHARC® DSP DMA competes with other masters for internal memory access. For more information, see “Architecture and Microarchitecture Overview” on page 6-7. This conflict is minimized due to the large internal memory bandwidth that is available. The DMA includes 14 DMA channels, four of which are dedicated to external memory devices, eight to link ports, and two to AutoDMA registers. Figure 7-1 shows a block diagram of the TigerSHARC® DSP’s DMA controller. [Figure 7-1. DMA Block Diagram: transmitter TCB registers, receiver TCB registers, DMA controller, internal bus interface, DMA requests] Data Transfers: General Information. The DMA controller can perform several types of data transfers: • Internal memory ⇒ external memory and memory-mapped peripherals • Internal memory ⇒ internal memory of other TigerSHARC® DSPs residing on the cluster bus • Internal memory ⇒ host processor • Internal memory ⇒ link port I/O • External memory ⇒ external peripherals • External memory ⇒ internal memory • External memory ⇒ link port I/O • Link port I/O ⇒ internal memory • Link port I/O ⇒ external memory • Cluster bus master via AutoDMA registers ⇒ internal memory. Internal-to-internal memory transfers are not directly supported. -

Performance, Scalability on the Server Side

Performance, Scalability on the Server Side. John VanDyk. Presented at Des Moines Web Geeks, 9/21/2009.

Who is this guy?

History (systems I've worked with over the years): • Apple // • Macintosh • Windows 3.1 to Server 2008R2 • Digital Unix (Tru64) • Linux (primarily RHEL) • FreeBSD

Languages I've worked with over the years (Userland Frontier™'s integrated language is UserTalk™): • Perl • Userland Frontier™ • Python • Java • Ruby • PHP

Open source developer since 2000. Perl/Python/PHP, MySQL, Apache, Linux: the LAMP stack.

[Chart: Time to Serve Request vs. Number of Clients] Performance vs. scalability.

Network in, network out, RAM, CPU, storage. These are the basic laws of physics. All bottlenecks are caused by one of these four resources.

Disk-bound. Tools: • iostat • vmstat. Determine if you are disk-bound by measuring throughput.

vmstat (BSD)

    procs memory    page                 disk faults          cpu
    r b w  avm  fre  flt re pi po   fr sr tw0   in    sy   cs us sy id
    0 2 0 799M 842M   27  0  0  0   12  0  23  344  2906 1549  1  1 98
    3 3 0 869M 789M 5045  0  0  0  406  0  10 1311 17200 5301 12  4 84
    3 5 0 923M 794M 5219  0  0  0 5178  0  27 1825 21496 6903 35  8 57
    1 2 0 931M 784M  909  0  0  0  146  0  12  955  9157 3570  8  4 88

Annotations: blocked processes; plenty of RAM; no swapping; idle CPUs. A disk-bound FreeBSD machine. Legend: b = blocked for resources; fr = pages freed/sec; cs = context switches; avm = active virtual pages; in = interrupts; flt = memory page faults; sy = system calls per interval.

vmstat (RHEL5)

    # vmstat -S M 5 25
    procs -------memory------- -swap --io- --system- -----cpu------
     r  b swpd free buff cache si so bi bo   in   cs us sy id wa st
     1  0    0 1301  194  5531  0  0  0 29 1454 2256 24 20 56  0  0
     3  0    0 1257  194  5531  0  0  0 40 2087 2336 34 27 39  0  0
     2  0    0 1183  194  5531  0  0  0 53 1658 2763 33 28 39  0  0
     0  0    0 1344  194  5531  0  0  0 34 1807 2125 29 19 52  0  0

Annotations: no blocked processes; busy but not overloaded CPU. Legend: in = interrupts/sec; cs = context switches/sec; wa = time waiting for I/O.

Solving disk bottlenecks: • Separate spindles (logs and databases) • Get rid of atime updates! • Minimize writes • Move temp writes to /dev/shm. Overview of what we're about to dive into. -

Xcode Package from App Store

KH Computational Physics - 2016, Introduction (Kristjan Haule, 2016). Setting up your computing environment. Installation: • Mac or Linux is the preferred operating system in this course on scientific computing. • Windows can be used, but the most important programs must be installed: – python: there is a nice package “Enthought Python Distribution”, http://www.enthought.com/products/edudownload.php – C++ and Fortran compiler – BLAS & LAPACK for linear algebra – plotting program such as gnuplot. Software for this course. Essentials: • Python, and its packages, in particular numpy, scipy, matplotlib • C++ compiler such as gcc • Text editor for coding (for example Emacs, Aquamacs, Enthought’s IDLE) • make to execute makefiles. Highly Recommended: • Fortran compiler, such as gfortran or Intel Fortran • BLAS & LAPACK library for linear algebra (most likely provided by vendor) • OpenMP-enabled Fortran and C++ compiler. Useful: • gnuplot for fast plotting • gsl (GNU Scientific Library) for implementation of various scientific algorithms. Installation on Mac: • Install the Xcode package from the App Store. • Install “Command Line Tools” from Apple’s software site. For Mavericks and later, open the Xcode program, and choose from the menu Xcode -> Open Developer Tool -> More Developer Tools... You will be linked to the Apple page that allows you to access downloads for Xcode. You will have to register as a developer (free). Search for the Xcode Command Line Tools in the search box in the upper left. Download and install the correct version of the Command Line Tools; for example, for OS “El Capitan” and Xcode 7.2, you need Command Line Tools OS X 10.11 for Xcode 7.2. Apple’s Xcode contains many libraries and compilers for Mac systems. -

BSD UNIX Toolbox: 1000+ Commands for FreeBSD, OpenBSD

BSD UNIX® TOOLBOX: 1000+ Commands for FreeBSD®, OpenBSD, and NetBSD® Power Users. Christopher Negus, François Caen. Published by Wiley Publishing, Inc., 10475 Crosspoint Boulevard, Indianapolis, IN 46256, www.wiley.com. Copyright © 2008 by Wiley Publishing, Inc., Indianapolis, Indiana. Published simultaneously in Canada. ISBN: 978-0-470-37603-4. Manufactured in the United States of America. 10 9 8 7 6 5 4 3 2 1. Library of Congress Cataloging-in-Publication Data is available from the publisher. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise, except as permitted under Sections 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax (978) 646-8600. Requests to the Publisher for permission should be addressed to the Legal Department, Wiley Publishing, Inc., 10475 Crosspoint Blvd., Indianapolis, IN 46256, (317) 572-3447, fax (317) 572-4355, or online at http://www.wiley.com/go/permissions. -

Absolute BSD—The Ultimate Guide to FreeBSD

Table of Contents: Absolute BSD—The Ultimate Guide to FreeBSD; Dedication; Foreword; Introduction; What Is FreeBSD?; How Did FreeBSD Get Here?; The BSD License: BSD Goes Public; The Birth of Modern FreeBSD; FreeBSD Development; Committers; Contributors; Users -

Linux Performance Tools

Linux Performance Tools. Brendan Gregg, Senior Performance Architect, Performance Engineering Team. [email protected] @brendangregg. This Tutorial: • A tour of many Linux performance tools – To show you what can be done – With guidance for how to do it • This includes objectives, discussion, live demos – See the video of this tutorial. [Diagram: Observability, Benchmarking, Tuning, Static Tuning] • Massive AWS EC2 Linux cloud – 10s of thousands of cloud instances • FreeBSD for content delivery – ~33% of US Internet traffic at night • Over 50M subscribers – Recently launched in ANZ • Use Linux server tools as needed – After cloud monitoring (Atlas, etc.) and instance monitoring (Vector) tools. Agenda: • Methodologies • Tools • Tool Types: – Observability – Benchmarking – Tuning – Static • Profiling • Tracing. Methodologies. Objectives: – Recognize the Streetlight Anti-Method – Perform the Workload Characterization Method – Perform the USE Method – Learn how to start with the questions, before using tools – Be aware of other methodologies. My system is slow… DEMO & DISCUSSION. Methodologies: • There are dozens of performance tools for Linux – Packages: sysstat, procps, coreutils, … – Commercial products • Methodologies can provide guidance for choosing and using tools effectively • A starting point, a process, and an ending point. Anti-Methodologies: • The lack of a deliberate methodology… Street Light Anti-Method: 1. Pick observability tools that are: – Familiar – Found on the Internet – Found at random 2. Run tools 3. Look for obvious issues. Drunk Man Anti-Method: • Tune things at random until the problem goes away. Blame Someone Else Anti-Method: 1. Find a system or environment component you are not responsible for 2. Hypothesize that the issue is with that component 3. Redirect the issue to the responsible team 4.