Android Boot and Its Optimization


Recommended publications


The Linux 2.4 Kernel's Startup Procedure

William Gatliff

1. Overview

This paper describes the Linux 2.4 kernel’s startup process, from the moment the kernel gets control of the host hardware until the kernel is ready to run user processes. Along the way, it covers the programming environment Linux expects at boot time, how peripherals are initialized, and how Linux knows what to do next.

2. The Big Picture

Figure 1 is a function call diagram that describes the kernel’s startup procedure. As it shows, kernel initialization proceeds through a number of distinct phases, starting with basic hardware initialization and ending with the kernel’s launching of /bin/init and other user programs. The dashed line in the figure shows that init() is invoked as a kernel thread, not as a function call.

Figure 1. The kernel’s startup procedure.

Figure 2 is a flowchart that provides an even more generalized picture of the boot process, starting with the bootloader extracting and running the kernel image, and ending with running user programs.

Figure 2. The kernel’s startup procedure, in less detail.

The following sections describe each of these function calls, including examples taken from the Hitachi SH7750/Sega Dreamcast version of the kernel.

3. In The Beginning...

The Linux boot process begins with the kernel’s _stext function, located in arch/<host>/kernel/head.S. This function is called _start in some versions. Interrupts are disabled at this point, and only minimal memory accesses may be possible depending on the capabilities of the host hardware.

Question Bank Mca Semester V Vol. I

FOR PRIVATE CIRCULATION

The Questions contained in this booklet have been prepared by the faculty of the Institute from the sources believed to be reliable. Neither the Institute nor the faculty gives any guarantee with respect to completeness or accuracy of the contents contained in the booklet and shall in no event be liable for any errors, omissions or damages arising out of use of the matter contained in the booklet. The Institute and the faculty specifically disclaim any implied warranty as to merchantability or fitness of the information for any particular purpose.

QUESTION BANK LINUX PROGRAMMING - MCA 301

MCA V UNIT - I

I Test Your Skills:

(a) State Whether the Following Statements are True or False:

1 The “no” option informs the machine that there is no grace period or time to wait for other users to log off.
2 Telnet command is used for changing the run level or state of the computer without rebooting.
3 IP address is a unique identifier for the system.
4 Transmission control protocol (TCP) builds on top of UPS and certifies that each message has been received.
5 Cron is known for arranging the files in the disk in chronological manner.
6 ARP (Address Resolution Protocol) converts IP address to MAC Address.
7 UNIX is a command-based system.
8 A program is synonymous with a process.
9 All UNIX flavors today offer a Command User Interface.
10 The operating system allocates memory for the program and loads the program to the allocated memory.

The Linux Startup Process

Jerry Feldman, The Linux Expertise Center, Hewlett-Packard Company
Document produced via OpenOffice.org

Overview
● The Linux boot process
  – GRUB. This is the default for X86/Linux
  – LILO
  – Other boot loaders
● The Linux operating modes
  – Single-user mode
  – Multi-user mode
● Run levels
  – What are run levels
  – What are the Linux standard run levels
  – How Linux manages run levels

The Linux Boot Process
● The PC boot process is a 3-stage boot process that begins with the BIOS executing a short program that is stored in the Master Boot Record (MBR) of the first physical drive. Since this stage 1 boot loader needs to fit in the MBR, it is limited to 512 bytes and is normally written in assembly language. There are a number of boot loaders that can load Linux.
● GRUB and LILO are the most commonly used ones on X86 hardware.
● EFI is used on the Intel Itanium family.

The GRand Unified Bootloader
The GRand Unified Bootloader (GRUB) is the default boot loader on most distributions today. It has the capability to load a number of different operating systems.
1. The stage 1 boot loader resides in the MBR and contains the sector number of the stage 2 boot loader, which is usually located in the /boot/grub directory on Linux.
2. The stage 2 boot loader presents a boot menu to the user based on /boot/grub/grub.conf or menu.lst. This contains a boot script. It is the stage 2 loader that actually loads the Linux kernel or other OS.
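The 512-byte limit on the stage 1 loader comes from the size of a single legacy disk sector. The sketch below is a minimal illustration in Python, assuming the classic PC MBR layout (446 bytes of boot code, four 16-byte partition entries, and the 0x55 0xAA signature in the last two bytes). The function names and the synthetic demo sector are hypothetical and do not come from the slides.

```python
import struct

SECTOR_SIZE = 512        # the entire MBR occupies one 512-byte sector
BOOT_CODE_SIZE = 446     # space available to the stage 1 boot code
ENTRY_SIZE = 16          # size of one partition table entry
SIGNATURE = b"\x55\xaa"  # boot signature stored at offsets 510 and 511


def parse_mbr(sector):
    """Return the non-empty partition entries of a 512-byte MBR sector."""
    if len(sector) != SECTOR_SIZE:
        raise ValueError("an MBR is exactly 512 bytes")
    if sector[510:512] != SIGNATURE:
        raise ValueError("missing 0x55AA boot signature")
    partitions = []
    for index in range(4):
        offset = BOOT_CODE_SIZE + index * ENTRY_SIZE
        entry = sector[offset:offset + ENTRY_SIZE]
        status, part_type = entry[0], entry[4]
        # Bytes 8..15 hold the starting LBA and sector count, little-endian.
        lba_start, sector_count = struct.unpack_from("<II", entry, 8)
        if part_type == 0:
            continue  # type 0 marks an unused slot
        partitions.append({
            "index": index,
            "bootable": status == 0x80,
            "type": part_type,
            "lba_start": lba_start,
            "sectors": sector_count,
        })
    return partitions


def make_demo_sector():
    """Build a synthetic MBR with one bootable Linux (type 0x83) partition."""
    sector = bytearray(SECTOR_SIZE)
    # Layout: status, CHS start (3 bytes), type, CHS end (3 bytes), LBA, count.
    entry = struct.pack("<B3sB3sII", 0x80, b"\x00" * 3, 0x83, b"\x00" * 3,
                        2048, 204800)
    sector[BOOT_CODE_SIZE:BOOT_CODE_SIZE + ENTRY_SIZE] = entry
    sector[510:512] = SIGNATURE
    return bytes(sector)
```

Calling `parse_mbr(make_demo_sector())` yields a single entry for the synthetic partition. To inspect a real disk, the same function could be given the first 512 bytes of a disk image file.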

Implementing Full Device Cloning on the NVIDIA Jetson Platform

March 2, 2018
Calvin Chen, Linh Hoang, Connor Smith
Advisor: Professor Mark Claypool
Sponsor - Mentor: NVIDIA - Jimmy Zhang

Abstract

NVIDIA’s Linux For Tegra development team provides support for many tools which exist on the Jetson-TX2 embedded AI computing device. While tools to clone the user partitions of the Jetson-TX2 exist, no tool exists to fully backup and restore all of the partitions and configurations of these boards. The goal of this project was to develop a tool that allows a user to create a complete clone of the Jetson-TX2 board’s partitions and their contents. We developed a series of bash scripts that fully backup the partitions and configurations of one Jetson-TX2 board and restore them onto another board of the same type.

Table of Contents

Abstract
Table of Contents
1 Introduction
2 Background
2.1 Preliminary Research
2.1.1 UEFI Booting and Flashing
2.1.2 Tegra Linux Driver Package
2.1.3 Network File System
2.1.4 Jetson TX2 Bootloader
2.2 Linux Terminology, RCM boot and Initial ramdisk
2.2.1 Linux Terminology
2.2.2 USB Recovery Mode (RCM) Boot
2.2.3 Initial Ramdisk
2.3 Associated Technologies
2.3.1 Linux tools
2.3.2 Serial Console
3 Methodology
4 Product
4.1 Backup Script
4.2 Restore Script
4.3 Initial ramdisk (initrd)
4.4 Script Development Guidelines
4.4.1 Use Case
4.4.2 Integrity
4.4.3 Performance
5 Future Work
6 Conclusions
7 Bibliography

1 Introduction

The Jetson-TX2 is a low power, high performance embedded artificial intelligence supercomputer utilized in many modern technologies.

Effective Cache Apportioning for Performance Isolation Under

Bodhisatwa Chatterjee, Sharjeel Khan, Santosh Pande
Georgia Institute of Technology, Atlanta, USA

Abstract

With a growing number of cores per socket in modern datacenters where multi-tenancy of a diverse set of applications must be efficiently supported, effective sharing of the last level cache is a very important problem. This is challenging because modern workloads exhibit dynamic phase behaviour - their cache requirements & sensitivity vary across different execution points. To tackle this problem, we propose Com-CAS, a compiler guided cache apportioning system that provides smart cache allocation to co-executing applications in a system. The front-end of Com-CAS is primarily a compiler-framework equipped with learning mechanisms to predict cache requirements, while the backend consists of allocation ...

... cache partitioning to divide the LLC among the co-executing applications in the system. Ideally, a cache partitioning scheme obtains overall gains in system performance by providing a dedicated region of cache memory to high-priority cache-intensive applications and ensures security against cache-sharing attacks by the notion of isolated execution in an otherwise shared LLC. Apart from achieving superior application performance and improving system throughput [7, 20, 31], cache partitioning can also serve a variety of purposes - improving system power and energy consumption [6, 23], ensuring fairness in resource allocation [26, 36] and even enabling worst case execution-time analysis of real-time systems [18].

A Reliable Booting System for Zynq Ultrascale+ Mpsoc Devices

An embedded solution that provides fallbacks in different parts of the Zynq MPSoC booting process, to assure successful booting into a Linux operating system.

A thesis presented for the Bachelor of Science in Electrical Engineering at the University of Applied Sciences Utrecht

Name: Nekija Džemaili
Student ID: 1702168
University supervisor: Corné Duiser
CERN supervisors: Marc Dobson & Petr Žejdl
Field of study: Electrical Engineering (embedded systems)

CERN-THESIS-2021-031, 17/03/2021
February 15th, 2021, Geneva, Switzerland

Disclaimer

The board of the foundation HU University of Applied Sciences in Utrecht does not accept any form of liability for damage resulting from usage of data, resources, methods, or procedures as described in this report. Duplication without consent of the author or the college is not permitted. If the graduation assignment is executed within a company, explicit consent of the company is necessary for duplication or copying of text from this report.

Preface

This thesis was written for the BSc Electrical Engineering degree of the HU University of Applied Sciences Utrecht, the Netherlands.

MX-19.2 Users Manual

v. 20200801
manual AT mxlinux DOT org
Ctrl-F = Search this Manual
Ctrl+Home = Return to top

Table of Contents

1 Introduction
1.1 About MX Linux
1.2 About this Manual
1.3 System requirements
1.4 Support and EOL
1.5 Bugs, issues and requests
1.6 Migration
1.7 Our positions
1.8 Notes for Translators
2 Installation
2.1 Introduction

Next-Generation Cluster Management Architecture and Software

Paul Peltz Jr., J. Lowell Wofford
High Performance Computing Division, Los Alamos National Laboratory, Los Alamos, NM, USA

Abstract: Over the last six decades, Los Alamos National Laboratory (LANL) has acquired, accepted, and integrated over 100 new HPC systems, from MANIAC in 1952 to Trinity in 2017. These systems range from small clusters to large supercomputers. The high performance computing (HPC) system architecture has progressively changed over this time as well; from single system images to complex, interdependent service infrastructures within a large HPC system. The authors are proposing a redesign of the current HPC system architecture to help reduce downtime and provide a more resilient architectural design.

Index Terms: Systems Integration; Systems Architecture; Cluster

I. INTRODUCTION

High performance computing (HPC) systems have always been a challenge to build, boot, and maintain. This has only been compounded by the needs of the scientific community ...

... and unpredictable nature of booting large-scale HPC systems has created an expensive problem to HPC institutions. The downtime and administrator time required to boot has led to longer maintenance windows and longer time before these systems can be returned to users. A fresh look at HPC system architecture is required in order to address these problems.

II. CURRENT HPC SYSTEM ARCHITECTURE

The current HPC system design is no longer viable to the large-scale HPC institutions. The system state is binary, because it is either up or down and making changes to the system requires a full system reboot. This is due to a number of reasons including tightly coupled interconnects, undefined API, and a lack of workload manager and system state integration.

Understanding-Systemd.Pdf

Linux distributions are quickly adopting, or planning to adopt, the systemd init system. systemd is a suite of system management daemons, libraries, and utilities designed as a central management and configuration platform for the Linux computer operating system. Described by its authors as a “basic building block” for an operating system, systemd primarily aims to replace the Linux init system (the first process executed in user space during the Linux startup process) inherited from UNIX System V and Berkeley Software Distribution (BSD). The name systemd adheres to the Unix convention of making daemons easier to distinguish by having the letter d as the last letter of the filename.

systemd is designed for Linux and programmed exclusively for the Linux API. It is published as free and open-source software under the terms of the GNU Lesser General Public License (LGPL) version 2.1 or later. The design of systemd generated significant controversy within the free software community, leading the critics to argue that systemd’s architecture violates the Unix philosophy and that it will eventually form a system of interlocking dependencies. However, as of 2015 most major Linux distributions have adopted it as their default init system.

Lennart Poettering and Kay Sievers, the software engineers who initially developed systemd, sought to surpass the efficiency of the init daemon in several ways. They wanted to improve the software framework for expressing dependencies, to allow more processing to be done concurrently or in parallel during system booting, and to reduce the computational overhead of the shell. Poettering describes systemd development as “never finished, never complete, but tracking progress of technology”.
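The idea of expressing dependencies so that independent services can start in parallel can be illustrated with a small scheduling sketch. The Python below is not systemd's actual algorithm. It assumes a plain "unit requires units" mapping and uses the standard-library `graphlib` module (Python 3.9+) to group units into waves, where every unit in a wave could be started concurrently. The unit names are hypothetical.

```python
from graphlib import TopologicalSorter


def start_waves(requires):
    """Group units into waves; each wave depends only on earlier waves.

    `requires` maps a unit name to the set of units that must be started
    before it. Units inside one wave have no dependencies on each other,
    so an init system could launch them in parallel.
    """
    sorter = TopologicalSorter(requires)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        waves.append(ready)
        sorter.done(*ready)                 # unblocks units that waited on them
    return waves


# Hypothetical units, for illustration only.
UNITS = {
    "syslog": set(),
    "network": {"syslog"},
    "cron": {"syslog"},
    "sshd": {"network", "syslog"},
}

if __name__ == "__main__":
    for number, wave in enumerate(start_waves(UNITS), start=1):
        print(f"wave {number}: {', '.join(wave)}")
```

A strictly sequential init script would start these four units one after another. With explicit dependencies, `network` and `cron` fall into the same wave and can be started at the same time.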

Porting Guides

Kunpeng BoostKit for SDS
Porting Guides
Issue 03
Date 2021-03-23
HUAWEI TECHNOLOGIES CO., LTD.

Copyright © Huawei Technologies Co., Ltd. 2021. All rights reserved.
No part of this document may be reproduced or transmitted in any form or by any means without prior written consent of Huawei Technologies Co., Ltd.

Trademarks and Permissions
The Huawei logo and other Huawei trademarks are trademarks of Huawei Technologies Co., Ltd. All other trademarks and trade names mentioned in this document are the property of their respective holders.

Notice
The purchased products, services and features are stipulated by the contract made between Huawei and the customer. All or part of the products, services and features described in this document may not be within the purchase scope or the usage scope. Unless otherwise specified in the contract, all statements, information, and recommendations in this document are provided "AS IS" without warranties, guarantees or representations of any kind, either express or implied. The information in this document is subject to change without notice. Every effort has been made in the preparation of this document to ensure accuracy of the contents, but all statements, information, and recommendations in this document do not constitute a warranty of any kind, express or implied.

Contents

1 Bcache Porting Guide (CentOS 7.6)
1.1 Introduction

Storage Administration Guide: SUSE Linux Enterprise Server 15 SP1

Provides information about how to manage storage devices on a SUSE Linux Enterprise Server.

Publication Date: September 24, 2021

SUSE LLC, 1800 South Novell Place, Provo, UT 84606, USA
https://documentation.suse.com

Copyright © 2006–2021 SUSE LLC and contributors. All rights reserved.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”.

For SUSE trademarks, see https://www.suse.com/company/legal/ . All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks.

All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof.

Contents

About This Guide
1 Available Documentation
2 Giving Feedback
3 Documentation Conventions
4 Product Life Cycle and Support
Support Statement for SUSE Linux Enterprise Server • Technology Previews

I FILE SYSTEMS AND MOUNTING
1 Overview of

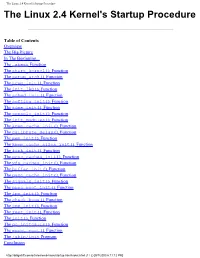

The Linux 2.4 Kernel's Startup Procedure

Table of Contents

Overview
The Big Picture
In The Beginning...
The _stext Function
The start_kernel() Function
The setup_arch() Function
The trap_init() Function
The init_IRQ() Function
The sched_init() Function
The softirq_init() Function
The time_init() Function
The console_init() Function
The init_modules() Function
The kmem_cache_init() Function
The calibrate_delay() Function
The mem_init() Function
The kmem_cache_sizes_init() Function
The fork_init() Function
The proc_caches_init() Function
The vfs_caches_init() Function
The buffer_init() Function
The page_cache_init() Function
The signals_init() Function
The proc_root_init() Function
The ipc_init() Function
The check_bugs() Function
The smp_init() Function
The rest_init() Function
The init() Function
The do_initcalls() Function
The mount_root() Function
The /sbin/init Program
Conclusion
Copyright
About the Author

http://billgatliff.com/articles/emb-linux/startup.html/index.html (1 / 2) [9/7/2003 6:11:12 PM]

Overview

This paper describes the Linux 2.4 kernel's startup process, from the moment the kernel gets control of the host hardware until the kernel is ready to run user processes. Along the way, it covers the programming environment Linux expects at boot time, how peripherals are initialized, and how Linux knows what to do next.

The Big Picture

Figure 1 is a function call diagram that describes the kernel's startup procedure. As it shows, kernel initialization proceeds through a number of distinct phases, starting with basic hardware initialization and ending with the kernel's launching of /bin/init and other user programs.
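The phases named in the table of contents can be lined up in a toy model to show the overall shape of the sequence. The Python sketch below is only an illustration and not kernel code: it assumes the functions run in the order the table of contents lists them, and it models init() as a separate thread started by rest_init(), since the paper's figure marks init() as a kernel thread. The `log` list and the `join()` call are additions that keep the example deterministic.

```python
import threading

# Phase names copied from the table of contents; the call order is an
# assumption based on the order in which the sections are listed.
BOOT_PHASES = [
    "setup_arch", "trap_init", "init_IRQ", "sched_init", "softirq_init",
    "time_init", "console_init", "init_modules", "kmem_cache_init",
    "calibrate_delay", "mem_init", "kmem_cache_sizes_init", "fork_init",
    "proc_caches_init", "vfs_caches_init", "buffer_init", "page_cache_init",
    "signals_init", "proc_root_init", "ipc_init", "check_bugs", "smp_init",
]


def init(log):
    """Stand-in for the init() thread: late setup, then the first user program."""
    for step in ("do_initcalls", "mount_root", "/sbin/init"):
        log.append(step)


def rest_init(log):
    """Start init() as its own thread instead of calling it directly."""
    log.append("rest_init")
    worker = threading.Thread(target=init, args=(log,), name="init")
    worker.start()
    worker.join()  # only so the demo finishes in a predictable order


def start_kernel(log):
    """Run the ordinary function-call phases, then hand over to rest_init()."""
    for phase in BOOT_PHASES:
        log.append(phase)  # a real kernel would do hardware or memory setup here
    rest_init(log)


if __name__ == "__main__":
    trace = []
    start_kernel(trace)
    print(" -> ".join(trace))
```

Running the script prints the phases in sequence, with the steps performed by init() appearing after rest_init() because they run in the spawned thread.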