Understanding-Systemd.Pdf

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Job Scheduling in Java Applications Job Schedulers Let Developers Focus on Their Applications and Not on Scheduling Details

flux.ly File Orchestration Solutions for Banking and Finance Reading Time: 8 minutes Job Scheduling in Java Applications Job schedulers let developers focus on their applications and not on scheduling details. Reading Time: 8 minutes Scheduling tasks in J2EE applications is a common need. There is more to job scheduling than running backups at 3 o'clock in the morning. Customer relationship management (CRM) applications, for example, need to contact customers periodically during the sales cycle. File transfer applications need to transmit and receives files on a regular basis, except on holidays. Administrative applications need to send reminder emails to notify employees and customers about important events. All these enterprise applications can benefit from job scheduling. This article first explores the need for job scheduling in J2EE applications. Section 2 explains why job scheduling can add critical functionality to enterprise applications. In Section 3, related work is reviewed. Section 4 presents a set of requirements for job scheduling software in J2EE applications. Finally, Section 5 walks through the design of an enterprise application that uses job scheduling. The need for enterprise job scheduling Many enterprise applications can benefit from job scheduling. Sales and customer relationship software, for example, needs to contact customers over the course of months and years. For example: 1. Followups are sent 10 and 30 days after a potential customer first contacts the sales organization. 2. Reminder notices are sent to existing customers 60 and 20 days before the customer's support contract expires. 3. If the customer does not contact the technical support department for 90 days, a followup is sent asking if the customer needs any help with the product or service. -

The Linux Kernel Module Programming Guide

The Linux Kernel Module Programming Guide Peter Jay Salzman Michael Burian Ori Pomerantz Copyright © 2001 Peter Jay Salzman 2007−05−18 ver 2.6.4 The Linux Kernel Module Programming Guide is a free book; you may reproduce and/or modify it under the terms of the Open Software License, version 1.1. You can obtain a copy of this license at http://opensource.org/licenses/osl.php. This book is distributed in the hope it will be useful, but without any warranty, without even the implied warranty of merchantability or fitness for a particular purpose. The author encourages wide distribution of this book for personal or commercial use, provided the above copyright notice remains intact and the method adheres to the provisions of the Open Software License. In summary, you may copy and distribute this book free of charge or for a profit. No explicit permission is required from the author for reproduction of this book in any medium, physical or electronic. Derivative works and translations of this document must be placed under the Open Software License, and the original copyright notice must remain intact. If you have contributed new material to this book, you must make the material and source code available for your revisions. Please make revisions and updates available directly to the document maintainer, Peter Jay Salzman <[email protected]>. This will allow for the merging of updates and provide consistent revisions to the Linux community. If you publish or distribute this book commercially, donations, royalties, and/or printed copies are greatly appreciated by the author and the Linux Documentation Project (LDP). -

It's Complicated but It's Probably Already Booting Your Computer

FAQ SYSTEMD SYSTEMD It’s complicated but it’s probably already booting your computer. dynamically connect to your network, a runlevel of 1 for a single-user mode, GRAHAM MORRISON while syslogd pools all the system runlevel 3 for the same command messages together to create a log of prompt we described earlier, and Surely the ‘d’ in Systemd is everything important. Another daemon, runlevel 5 to launch a graphical a typo? though it lacks the ‘d’, is init – famous environment. Changing this for your No –it’s a form of Unix notation for being the first process that runs on next boot often involved editing the used to signify a daemon. your system. /etc/inittab file, and you’d soon get used to manually starting and stopping You mean like those little Isn’t init used to switch your own services simply by executing devils inhabiting Dante’s between the command-line the scripts you found. underworld? and the graphical desktop? There is a link in that Unix usage For many of us, yes. This was the You seem to be using the past of the term daemon supposedly main way of going from the tense for all this talk about the comes from Greek mythology, where desktop to a command line and back init daemon… daemons invisibly wove their magic again without trying to figure out which That’s because the and benign influence. The word is today processes to kill or start manually. aforementioned Systemd wants more commonly spelt ‘demon’, which Typing init 3 would typically close any to put init in the past. -

Flexible Lustre Management

Flexible Lustre management Making less work for Admins ORNL is managed by UT-Battelle for the US Department of Energy How do we know Lustre condition today • Polling proc / sysfs files – The knocking on the door model – Parse stats, rpc info, etc for performance deviations. • Constant collection of debug logs – Heavy parsing for common problems. • The death of a node – Have to examine kdumps and /or lustre dump Origins of a new approach • Requirements for Linux kernel integration. – No more proc usage – Migration to sysfs and debugfs – Used to configure your file system. – Started in lustre 2.9 and still on going. • Two ways to configure your file system. – On MGS server run lctl conf_param … • Directly accessed proc seq_files. – On MSG server run lctl set_param –P • Originally used an upcall to lctl for configuration • Introduced in Lustre 2.4 but was broken until lustre 2.12 (LU-7004) – Configuring file system works transparently before and after sysfs migration. Changes introduced with sysfs / debugfs migration • sysfs has a one item per file rule. • Complex proc files moved to debugfs • Moving to debugfs introduced permission problems – Only debugging files should be their. – Both debugfs and procfs have scaling issues. • Moving to sysfs introduced the ability to send uevents – Item of most interest from LUG 2018 Linux Lustre client talk. – Both lctl conf_param and lctl set_param –P use this approach • lctl conf_param can set sysfs attributes without uevents. See class_modify_config() – We get life cycle events for free – udev is now involved. What do we get by using udev ? • Under the hood – uevents are collect by systemd and then processed by udev rules – /etc/udev/rules.d/99-lustre.rules – SUBSYSTEM=="lustre", ACTION=="change", ENV{PARAM}=="?*", RUN+="/usr/sbin/lctl set_param '$env{PARAM}=$env{SETTING}’” • You can create your own udev rule – http://reactivated.net/writing_udev_rules.html – /lib/udev/rules.d/* for examples – Add udev_log="debug” to /etc/udev.conf if you have problems • Using systemd for long task. -

Geek Guide > Beyond Cron

GEEK GUIDE BEYOND CRON Table of Contents Ease of Use ..................................................................... 8 Multi-Server-Friendly .................................................... 10 Dependency Management ............................................ 13 Easy to Visualize ........................................................... 16 Delegation of Authority ................................................. 18 Management by Exception ........................................... 21 Flexible Scheduling ....................................................... 23 Revision Control ........................................................... 24 Conclusion .................................................................... 24 MIKE DIEHL has been using Linux since the days when Slackware came on 14 5.25” floppy disks and installed kernel version 0.83. He has built and managed several servers configured with either hardware or software RAID storage under Linux, and he has hands-on experience with both the VMware and KVM virtual machine architectures. Mike has written numerous articles for Linux Journal on a broad range of subjects, and he has a Bachelor’s degree in Mathematics with a minor in Computer Science. He lives in Blythewood, South Carolina, with his wife and four sons. 2 GEEK GUIDE BEYOND CRON GEEK GUIDES: Mission-critical information for the most technical people on the planet. Copyright Statement © 2015 Linux Journal. All rights reserved. This site/publication contains materials that have been created, developed or -

Getting to Grips with Unix and the Linux Family

Getting to grips with Unix and the Linux family David Chiappini, Giulio Pasqualetti, Tommaso Redaelli Torino, International Conference of Physics Students August 10, 2017 According to the booklet At this end of this session, you can expect: • To have an overview of the history of computer science • To understand the general functioning and similarities of Unix-like systems • To be able to distinguish the features of different Linux distributions • To be able to use basic Linux commands • To know how to build your own operating system • To hack the NSA • To produce the worst software bug EVER According to the booklet update At this end of this session, you can expect: • To have an overview of the history of computer science • To understand the general functioning and similarities of Unix-like systems • To be able to distinguish the features of different Linux distributions • To be able to use basic Linux commands • To know how to build your own operating system • To hack the NSA • To produce the worst software bug EVER A first data analysis with the shell, sed & awk an interactive workshop 1 at the beginning, there was UNIX... 2 ...then there was GNU 3 getting hands dirty common commands wait till you see piping 4 regular expressions 5 sed 6 awk 7 challenge time What's UNIX • Bell Labs was a really cool place to be in the 60s-70s • UNIX was a OS developed by Bell labs • they used C, which was also developed there • UNIX became the de facto standard on how to make an OS UNIX Philosophy • Write programs that do one thing and do it well. -

The GNOME Census: Who Writes GNOME?

The GNOME Census: Who writes GNOME? Dave Neary & Vanessa David, Neary Consulting © Neary Consulting 2010: Some rights reserved Table of Contents Introduction.........................................................................................3 What is GNOME?.............................................................................3 Project governance...........................................................................3 Why survey GNOME?.......................................................................4 Scope and methodology...................................................................5 Tools and Observations on Data Quality..........................................7 Results and analysis...........................................................................10 GNOME Project size.......................................................................10 The Long Tail..................................................................................11 Effects of commercialisation..........................................................14 Who does the work?.......................................................................15 Who maintains GNOME?................................................................17 Conclusions........................................................................................22 References.........................................................................................24 Appendix 1: Modules included in survey...........................................25 2 Introduction What -

State of Linux Audio in 2009 Linux Plumbers Conference 2009

State of Linux Audio in 2009 Linux Plumbers Conference 2009 Lennart Poettering [email protected] September 2009 Lennart Poettering State of Linux Audio in 2009 Who Am I? Software Engineer at Red Hat, Inc. Developer of PulseAudio, Avahi and a few other Free Software projects http://0pointer.de/lennart/ [email protected] IRC: mezcalero Lennart Poettering State of Linux Audio in 2009 Perspective Lennart Poettering State of Linux Audio in 2009 So, what happened since last LPC? Lennart Poettering State of Linux Audio in 2009 RIP: EsounD is officially gone. Lennart Poettering State of Linux Audio in 2009 (at least on Fedora) RIP: OSS is officially gone. Lennart Poettering State of Linux Audio in 2009 RIP: OSS is officially gone. (at least on Fedora) Lennart Poettering State of Linux Audio in 2009 Audio API Guide http://0pointer.de/blog/projects/guide-to-sound-apis Lennart Poettering State of Linux Audio in 2009 We also make use of high-resolution timers on the desktop by default. We now use realtime scheduling on the desktop by default. Lennart Poettering State of Linux Audio in 2009 We now use realtime scheduling on the desktop by default. We also make use of high-resolution timers on the desktop by default. Lennart Poettering State of Linux Audio in 2009 2s Buffers Lennart Poettering State of Linux Audio in 2009 Mixer abstraction? Due to user-friendliness, i18n, meta data (icons, ...) We moved a couple of things into the audio server: Timer-based audio scheduling; mixing; flat volume/volume range and granularity extension; integration of volume sliders; mixer abstraction; monitoring Lennart Poettering State of Linux Audio in 2009 We moved a couple of things into the audio server: Timer-based audio scheduling; mixing; flat volume/volume range and granularity extension; integration of volume sliders; mixer abstraction; monitoring Mixer abstraction? Due to user-friendliness, i18n, meta data (icons, ...) Lennart Poettering State of Linux Audio in 2009 udev integration: meta data, by-path/by-id/.. -

Ivoyeur: Inotify

COLUMNS iVoyeur inotify DAVE JOSEPHSEN Dave Josephsen is the he last time I changed jobs, the magnitude of the change didn’t really author of Building a sink in until the morning of my first day, when I took a different com- Monitoring Infrastructure bination of freeways to work. The difference was accentuated by the with Nagios (Prentice Hall T PTR, 2007) and is Senior fact that the new commute began the same as the old one, but on this morn- Systems Engineer at DBG, Inc., where he ing, at a particular interchange, I would zig where before I zagged. maintains a gaggle of geographically dispersed It was an unexpectedly emotional and profound metaphor for the change. My old place was server farms. He won LISA ‘04’s Best Paper off to the side, and down below, while my future was straight ahead, and literally under award for his co-authored work on spam construction. mitigation, and he donates his spare time to the SourceMage GNU Linux Project. The fact that it was under construction was poetic but not surprising. Most of the roads I [email protected] travel in the Dallas/Fort Worth area are under construction and have been for as long as anyone can remember. And I don’t mean a lane closed here or there. Our roads drift and wan- der like leaves in the water—here today and tomorrow over there. The exits and entrances, neither a part of this road or that, seem unable to anticipate the movements of their brethren, and are constantly forced to react. -

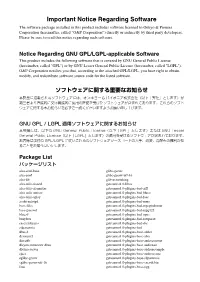

Important Notice Regarding Software

Important Notice Regarding Software The software package installed in this product includes software licensed to Onkyo & Pioneer Corporation (hereinafter, called “O&P Corporation”) directly or indirectly by third party developers. Please be sure to read this notice regarding such software. Notice Regarding GNU GPL/LGPL-applicable Software This product includes the following software that is covered by GNU General Public License (hereinafter, called "GPL") or by GNU Lesser General Public License (hereinafter, called "LGPL"). O&P Corporation notifies you that, according to the attached GPL/LGPL, you have right to obtain, modify, and redistribute software source code for the listed software. ソフトウェアに関する重要なお知らせ 本製品に搭載されるソフトウェアには、オンキヨー & パイオニア株式会社(以下「弊社」とします)が 第三者より直接的に又は間接的に使用の許諾を受けたソフトウェアが含まれております。これらのソフト ウェアに関する本お知らせを必ずご一読くださいますようお願い申し上げます。 GNU GPL / LGPL 適用ソフトウェアに関するお知らせ 本製品には、以下の GNU General Public License(以下「GPL」とします)または GNU Lesser General Public License(以下「LGPL」とします)の適用を受けるソフトウェアが含まれております。 お客様は添付の GPL/LGPL に従いこれらのソフトウェアソースコードの入手、改変、再配布の権利があ ることをお知らせいたします。 Package List パッケージリスト alsa-conf-base glibc-gconv alsa-conf glibc-gconv-utf-16 alsa-lib glib-networking alsa-utils-alsactl gstreamer1.0-libav alsa-utils-alsamixer gstreamer1.0-plugins-bad-aiff alsa-utils-amixer gstreamer1.0-plugins-bad-bluez alsa-utils-aplay gstreamer1.0-plugins-bad-faac avahi-autoipd gstreamer1.0-plugins-bad-mms base-files gstreamer1.0-plugins-bad-mpegtsdemux base-passwd gstreamer1.0-plugins-bad-mpg123 bluez5 gstreamer1.0-plugins-bad-opus busybox gstreamer1.0-plugins-bad-rawparse -

The Linux 2.4 Kernel's Startup Procedure

The Linux 2.4 Kernel’s Startup Procedure William Gatliff 1. Overview This paper describes the Linux 2.4 kernel’s startup process, from the moment the kernel gets control of the host hardware until the kernel is ready to run user processes. Along the way, it covers the programming environment Linux expects at boot time, how peripherals are initialized, and how Linux knows what to do next. 2. The Big Picture Figure 1 is a function call diagram that describes the kernel’s startup procedure. As it shows, kernel initialization proceeds through a number of distinct phases, starting with basic hardware initialization and ending with the kernel’s launching of /bin/init and other user programs. The dashed line in the figure shows that init() is invoked as a kernel thread, not as a function call. Figure 1. The kernel’s startup procedure. Figure 2 is a flowchart that provides an even more generalized picture of the boot process, starting with the bootloader extracting and running the kernel image, and ending with running user programs. Figure 2. The kernel’s startup procedure, in less detail. The following sections describe each of these function calls, including examples taken from the Hitachi SH7750/Sega Dreamcast version of the kernel. 3. In The Beginning... The Linux boot process begins with the kernel’s _stext function, located in arch/<host>/kernel/head.S. This function is called _start in some versions. Interrupts are disabled at this point, and only minimal memory accesses may be possible depending on the capabilities of the host hardware. -

Absolute BSD—The Ultimate Guide to Freebsd Table of Contents Absolute BSD—The Ultimate Guide to Freebsd

Absolute BSD—The Ultimate Guide to FreeBSD Table of Contents Absolute BSD—The Ultimate Guide to FreeBSD............................................................................1 Dedication..........................................................................................................................................3 Foreword............................................................................................................................................4 Introduction........................................................................................................................................5 What Is FreeBSD?...................................................................................................................5 How Did FreeBSD Get Here?..................................................................................................5 The BSD License: BSD Goes Public.......................................................................................6 The Birth of Modern FreeBSD.................................................................................................6 FreeBSD Development............................................................................................................7 Committers.........................................................................................................................7 Contributors........................................................................................................................8 Users..................................................................................................................................8