Protection in Grasshopper: a Persistent Operating System

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

(J. Bayko) Date: 12 Jun 92 18:49:18 GMT Newsgroups: Alt.Folklore.Computers,Comp.Arch Subject: Great Microprocessors of the Past and Present

From: [email protected] (J. Bayko) Date: 12 Jun 92 18:49:18 GMT Newsgroups: alt.folklore.computers,comp.arch Subject: Great Microprocessors of the Past and Present I had planned to submit an updated version of the Great Microprocessor list after I’d completed adding new processors, checked out little bits, and so on... That was going to take a while... But then I got to thinking of all the people stuck with the origional error-riddled version. All these poor people who’d kept it, or perhaps placed in in an FTP site, or even - using it for reference??! In good conscience, I decided I can’t leave that erratic old version lying out there, where people might think it’s accurate. So here is an interim release. There will be more to it later - the 320xx will be included eventually, for example. But at least this has been debugged greatly. Enjoy... John Bayko. — Great Microprocessors of the Past and Present (V 3.2.1) Section One: Before the Great Dark Cloud. ————————— Part I: The Intel 4004 (1972) The first single chip CPU was the Intel 4004, a 4-bit processor meant for a calculator. It processed data in 4 bits, but its instructions were 8 bits long. Internally, it featured four 12 bit(?) registers which acted as an internal evaluation stack. The Stack Pointer pointed to one of these registers, not a memory location (only CALL and RET instructions operated on the Stack Pointer). There were also sixteen 4-bit (or eight 8-bit) general purpose registers The 4004 had 46 instructions. -

Implementation of Distributed Orthogonal Persistence Using

Þs-9.15 Implementation of Distributed Orthogonal Persistence llsing Virtual Memory Francis Vaughan Thesis submitted for the degree of Doctor of Philosophy in The University of Adelaide (Faculty of Mathematical and Computer Sciences) December 1994 ,Au,***ol.eJ \ qq 5 Abstract Persistent object systems greatly simplify programming tasks, since they hide the traditional distinction between short-term and long-term storage from the applications programmer. As a result, the programmer can operate at a level of abstraction in which short-term and long-term data are treated uniformly. In the past most persistent systems have been constructed above conventional operating systems and have not supported any form of distributed programming paradigm. In this thesis we explore the implementation of orthogonally persistent systems that make direct use of fhe attributes of paged virtual memory found in the majority of conventional computing platforms. These attributes are exploited to support object movement for persistent storage to addressable memory, to aid in garbage collection, to provide the illusion of larger storage spaces than the underlying architecture allows, and to provide distribution of the persistent system. The thesis further explores the different models of distribution, notably a one world model in which a single persistent space exists, and a federated one in which many co- operating spaces exist. It explores communication mechanisms between federated spaces and the problems of maintaining consistency between separate persistent spaces in a manner which ensures both a reliable and resilient computational environment. In particular characterising the interdependencies using vector clocks and the manner in which vector time can be used to provide a complete mechanism for ensuring reliable and resilient computation. -

A Hardware Implementation of a Knowledge Manipulation System for Real Time Engineering Applications

A Hardware Implementation of a Knowledge Manipulation System for Real Time Engineering Applications by Stephen Hudson B.Sc. (Hons) Doctor of Philosophy University of Edinburgh March, 199 Table Of Contents Table of Contents . 1 Acknowledgement............................................................................... V Declaration........................................................................................ V Abstract............................................................................................ Vi Abbreviations...................................................................................... VU Listof Figures ...................................................................................ix Listof Photographs ............................................................................. X Listof Tables ..................................................................................... X Chapter 1 Introduction .......................................................... 1 1 .1 Background ............................................................................ 1 1.2 Chapter Summary .................................................................... 2 Chapter 2 Intelligent Systems .............................................. 4 2.1 Introduction ............................................................................ 4 2.2 Al Techniques ......................................................................... 8 2.2.1 Production Systems .......................................................... -

Compilerbau Für Die Common Language Runtime

Compilerbau für die Common Language Runtime Aufbau des GCC . Löwis © 2006 Martin v Quellorganistation: Probleme • Modularisierung des Compilers: – verschiedene Übersetzungsphasen (Präprozessor, Compiler, Assembler, Linker) – verschiedene Frontends und Backends – Compiler-Compiler: Programme, die Quelltext des Compilers generieren – Comilerabhängige Laufzeitbibliotheken • Portabilität, Cross-Compilerbau: – Buildsystem: System, auf dem der Compiler übersetzt wird – Hostsystem: System, auf dem der Compiler läuft – Targetsystem: System, für das der Compiler Code erzeugt – gleichzeitige Installation von Compilern für verschiedene Targets . Löwis © 2006 Martin v Compilerbau 2 Modularisierung: Toplevel-Verzeichnis • Cygnus configure: Ein Build-Lauf soll gesamte Werkzeugkette für Host/Target-Kombination erzeugen können – Overlay-Struktur: gcc, binutils (as, ld), gdb, make(?) können alle in das gleiche Verzeichnis integriert werden, und “am Stück” übersetzt werden • gcc: enthält Compiler, Laufzeitbibliotheken, Supporttools für Compiler – gcc: Verzeichnis für GCC selbst – fixincludes:Übersetzer zur Anpassung von Headerfiles – boehm-gc, libada, libffi, libgfortran, libjava, libmudflap, ›libobjc, libruntime, libssp, libstdc++v3: Laufzeitbibliotheken . Löwis – libcpp, libiberty, libintl: vom Compiler selbst verwendete Bibliotheken © 2006 Martin v Compilerbau 3 gcc • Verzeichnis gcc selbst: Buildprozess, C compiler, Definition von Compiler-Datenstrukturen (tree, RTL), middle end • config: Backends – je ein Unterverzeichnis pro Prozessor • doc: texinfo-Dokumentation -

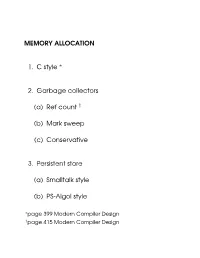

MEMORY ALLOCATION 1. C Style 2. Garbage Collectors (A) Ref Count (B

MEMORY ALLOCATION 1. C style ∗ 2. Garbage collectors (a) Ref count y (b) Mark sweep (c) Conservative 3. Persistent store (a) Smalltalk style (b) PS-Algol style ∗page 399 Modern Compiler Design ypage 415 Modern Compiler Design Malloc Data structure used size in bytes 16 1 free bits free Stack 32 0 program variables allocated block 16 0 48 1 free heap 1 MALLOC algorithm int heap[HSIZE]; char *scan(int s) {int i; for(i=0;i<HSIZE;i+=heap[i]> >2) if(heap[i]&1&&heap[i]&0xfffffffe>(s+4)) { heap[i]^=1; return &heap[i+1]; } return 0; } char *malloc(int size) { char *p=scan(size); if(p)return p; merge(); p=scan(size); if(p)return p; heapoverflow(); } 2 FREE ALGORITHM This simply toggles the free bit. free(char *p){heap[((int)p> >2)-1]^=1;} Merge algorithm merge() { int i; for(i=0;i<HSIZE;i+=heap[i]> >2) if(heap[i]&1&&heap[heap[i]> >2]&1) heap[i]+=(heap[heap[i]> >2]^1); } 3 Problem May have to chase long list of allocated blocks before a free block is found. Solution Use a free list 4 freepntr 16 1 free bits 32 0 chain free blocks allocated together block 16 0 48 1 heap 5 Problem the head of the free list will accumulate lots of small and unusable blocks, scanning these will slow access down Solution use two free pointers 1. points at the head of the free-list 2. points at the last allocated item on the free list when allocating use the second pointer to initi- ate the search, only when it reaches the end of the heap, do we re-initialise it from the first pointer. -

Eindhoven University of Technology MASTER the Design of A

Eindhoven University of Technology MASTER The design of a microprocessor with an object oriented architecture van Hamersveld, F.P. Award date: 1992 Link to publication Disclaimer This document contains a student thesis (bachelor's or master's), as authored by a student at Eindhoven University of Technology. Student theses are made available in the TU/e repository upon obtaining the required degree. The grade received is not published on the document as presented in the repository. The required complexity or quality of research of student theses may vary by program, and the required minimum study period may vary in duration. General rights Copyright and moral rights for the publications made accessible in the public portal are retained by the authors and/or other copyright owners and it is a condition of accessing publications that users recognise and abide by the legal requirements associated with these rights. • Users may download and print one copy of any publication from the public portal for the purpose of private study or research. • You may not further distribute the material or use it for any profit-making activity or commercial gain The Design of a Microprocessor with an Object Oriented Architecture F.P. van Hamersveld Faculty of Electrical Engineering Digital Systems Group Eindhoven University of Technology Eindhoven, february 1992 Supervisor: Prof. Jr. M.P.J. Stevens Coach: Jr. A.c. Verschueren The Department of Electrical Engineering of the Eindhoven University of Technology does not accept any responsibility regarding the contents of student project- and graduation reports. Abstract Abstract Object oriented programming is being used more often. -

FINAL Systemnahe Programmierung

Arb eitsb erichte aus der Informatik Technical Rep orts and Working Pap ers Fachho chschule Nordostniedersachsen Volgershall 1, D-21339 Luneburg Phone: xx49.4131.677175 Fax: xx49.4131.677140 F IN A L F achho chschule Nordostniedersachsen IN formatik A rb eitsb erichte L uneburg Systemnahe Programmierung 3.,uberarbeitete Au age 1999 Ulrich Ho mann FINAL, 9. Jahrgang Heft 1, Juli 1999, ISSN 0939-8821 Inhaltsverzeichnis 1 Einleitung .................................................................................................................. 1 2 Das Modell eines Rechners ....................................................................................... 4 2.1 Funktionsweise eines Rechners ........................................................................ 7 2.2 Eine Hardware/Software-Schnittstelle: Der Interruptmechanismus ................. 18 2.3 Aspekte der Gerätesteuerung und des Ein/Ausgabekonzepts ........................... 21 3 Aspekte der Rechnerprogrammierung ...................................................................... 27 3.1 Höhere Programmiersprachen und Assembler-Sprachen in der Systempro- grammierung ........................................................................................................... 35 3.2 Adreßräume und das Prozeßkonzept ................................................................ 36 3.3 Beispiele von Speichermodellen ...................................................................... 44 3.3.1 Beispiel: Der Real Mode des INTEL 80x86 ........................................... -

Database Implementation on an Object-Oriented Processor Architecture

DATABASE IMPLEMENTATION ON AN OBJECT-ORIENTED PROCESSOR ARCHITECTURE A thesis submitted in partial fulfilment of the requirements of the University of Abertay Dundee for the degree of Doctor of Philosophy Louis David Natanson University of Abertay Dundee July 4, 1995 I certify that this thesis is the true and accurate version of the thesis approved by the examiners. Director of Studies Abstract The advent of an object-oriented processor, the REKURSIV, allowed the possibility of investigating the application of object-oriented techniques to all the levels of a software system’s architecture. This work is concerned with the implementation of a database system on the REKURSIV. A database system was implemented with an architecture structured as • An external level provided by DEAL, a database query language with functions. • A conceptual level consisting of an implementation of the relational algebra. • an internal level provided by the REKURSIV system. The mapping of the external to the conceptual levels is achieved through a recursive descent interpreter which was machine generated from a syntax specification. The software providing the conceptual level was systematically derived from a formal algebraic specification of the relational algebra. The internal level was experimentally investigated to quantify the na ture of the contribution made to computational power by the REKURSIV’s architectural innovations. The contributions made by this work are: • the methodology exposed for program derivation (in class based lan guages) from algebraic specifications; • the treatment of the notion of domain within formal specification; • the development of a top-down parser generator; • the establishment of a quantitative perfomance profile for the REKUR SIV. -

Object-Oriented Computer Architecture

VI Object-Oriented Computer Architecture: - Concepts and Issues -The REKURSIV Object-Oriented Computer Architecture D. M. Harland Rapporteur: G. Dixon REKURSN Slides Object-Oriented Computer Architecture Two lectures for the International Seminar on Objecc-Oriemed Computing Systems Newcastle. September 6-9. 1988. To be presented ~Y : David M HDrland Technical Director. Linn Sman Computing ltd. Gla:<;gow G4S OOPS Issues ami Professor of Computer Architecture, Stralhclyde University . (I). ()bjed-Orienled Computer Archi.~ture: Concepts and Is.'iues 1llis lecrure will imroduce the relevant concepts and set out the architectural issues complexity which arose during the design of me hardware developed by Linn for the object-oriented progcanuning paradigm. multiple pondipI Issues covered will include: absaw:tioo mecIu!nisma Stores : typea Object persistence poIymorphiIm Object swapping Object security (unique identifiers, range checking. symbolic activation) expressiviry Processors : paralIeliIm < High level instructions (no scmamit gap. even higher ordered and recursive ) verilUlbiliJy H 1bc: MIPS rate (clock. rates, caches, pipelines and prefelch units) ~ 1_01)' Ttchnolog, : Software? orr lhe shelf7 Semi-custom? Full-custom? (2, The REKURSIV Object-Oriented Cumputer Architedure This lecrure will concentrale on the: praclicalilies of configuring the REKURSIV 10 a variety of differcm application domains and will discuss topics such as Microcodina; an object-based insuuclion scI, languale lnte&Ulion. Process convnunicllion, Garbage collection, . The furure (e.g. disttibuted object stores). Various examples wiJl be given. Demonstrations : A simulator for the microcoded architecture 10 run o n a Sun using X-windows will be available. as will a REKURSIV accelerator board for a Sun. R~fuenu : REKU RSIV Objec t - O ri~fIf~d Compllfu Arc"iUClllr~. -

Garbage Collection

DOLPHIN: PERSISTENT, OBJECT-ORIENTED AND NETWORKED A REPORT SUBMITTED TO THE DEPARTMENT OF COMPUTER SCIENCE UNIVERSITY OF STRATHCLYDE CONSISTING OF A DOCTOR OF PHILOSOPHY THESIS DISSERTATION By Gordon W. Russell February 1995 The copyright of this thesis belongs to the author under the terms of the United Kingdom Copyright Acts as qualified by University of Strathclyde Regulation . Due acknowledgement must always be made of the use of any material contained in, or derived from, this thesis. c Copyright 1995 iii Abstract This thesis addresses the problems associated with the design of a new generation of high-performance computer systems for use with object-oriented languages. The major contribution is a novel design for the utilization of object addressing and multiple stacks within RISC-style processors, and a pro- posal for object-addressed stable electronic memory capable of supporting much higher performance than contemporary designs based on the use of disk backing store. The impact of the new designs in discussed and evaluated by simulation within the context of object-oriented system design. iv Publications and Acknowledgements A number of publications have been created from this work: Four machine descriptions and critiques have been reproduced in [Russell and Cockshott, 1993a] from an early draft of chapter 3. This paper is also available as a technical report [Russell and Cockshott, 1993b]. A technical report discussing DAIS' caching strategy has been produced [Russell and Shaw, 1993a]. A cut down version of chapter 5, discussing only the cache structure and its advantages, appears in [Russell et al., 1995]. DAIS' register file is based on a dynamically-resizing shift register. -

Data Movement in the Grasshopper Operating System Basser

Data Movement in the Grasshopper Operating System Technical Report Number 501 December, 1995 Rex di Bona ISBN 0 86758 996 5 Basser Department of Computer Science University of Sydney NSW 2006 1 1 Introduction Computer environments have changed dramatically in recent years, from the large centralised mainframe to the networked collections of workstation style machines. The power of workstations is increasing, but the common paradigms used to manage data on these workstations are still fundamentally the same as those employed by the earliest machines. Persistent systems adopt a radically different approach. Unlike conventional systems which clearly distinguish between short-term computational storage and long-term ®le storage, persistent systems abstract over the longevity of data and provide a single abstraction of storage. This thesis examines the issues involved with the movement of data in persistent systems. We are concerned with the differences in the requirements placed on the data movement layer by persistent and non-persistent operating systems. These differences are considered in relation to the Grasshopper operating system. This thesis investigates the movement of data in the Grasshopper Operating System, and describes approaches to allow us to provide the functionality required by the operating system. Grasshopper offers the user a seamlessly networked environment, offering persistence and data security unparalleled by conventional workstation environments. To support this environment the paradigms employed in the movement of data are radically different from those employed by conventional systems. There is no concept of ®les. Processes and address spaces become orthogonal. The network is used to connect machines, and is not an entity in itself. -

Architectural and Operating System Support for Orthogonal Persistence

Architectural and Operating System Support for Orthogonal Persistence John Rosenberg University of Sydney, Australia ABSTRACT: Over the past ten years much research ef- fort has been expended in attempting to build systems which support orthogonal persistence. Such systems al- low all data to persist for an arbitrary length of time, possibly longer than the creating program, and support access and manþlation of data in a uniform manner, regardless of how long it persists. Persistent systems are usually based on a persistent store which provides storage for objects. Most existing persistent systems have been developed above conventional architectures and/or operating qystems. In this paper we argue that conventional architectures provide an inappropriate base for persistent object systems and that we must look towards new architectures if we are to achieve ac- ceptable performance. The examples given are based on the Monads architecture which provides explicit hardware support for persistence and objects. @ Cornputing Systems, Vol. 5 . No. 3 . Summer 1992 305 1. Introduction Over the past ten years much research effort has been expended in at- tempting to build systems which support orthogonal persistence [1, 2, 4, 5, 6,71. The idea behind persistence [12] is simple: that all data in a system should be able to persist (survive) for as long as that data is required. Orthogonal persistence means that all data types may be per- sistent and that data may be manipulated in a uniform manner regard- less of the length of time it persists. Orthogonal persistence is not found in contemporary operating systems, nor most programming lan- guages or database systems.