Garbage Collection

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Object Management System Concepts: Supporting Integrated Office Workstation Applications

Object Management System Concepts: Supporting Integrated Office Workstation Applications by Stanley Benjamin Zdonik, Jr. S.B., Massachusetts Institute of Technology (1970) S.M., Massachusetts Institute of Technology (1980) E.E., Massachusetts Institute of Technology (1980) Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy at the Massachusetts Institute of Technology May 1983 © Massachusetts Institute of Technology 1983 Signature of Author............... .. ..... .... Department of Electric~l Eng~neering and Computer Science May 13, 1983 Certified by . .* .* .. Michael Hammer Thesis Supervisor Accepted . ...... .-.----4 p . Arthur C. Smith Chairman, Departmental Committee on Graduate Students Object Management System Concepts: Supporting Integrated Office Workstation Applications by Stanley B. Zdonik, Jr. Submitted to the Department of Electrical Engineering and Computer Science on May 13, 1983, in partial fulfillment of the requirements for the Degree of Doctor of Philosophy Abstract The capabilities of a system for storing and retrieving office style objects are described in this work. Traditional file systems provide facilities for the storage and retrieval of objects that are created in user programs, but the semantics of these objects are not available to the file system. Database management systems provide a means of describing the semantics of objects using a single basic paradigm, the record. This model is inadequate for describing the richer semantics of office objects. An object management system combines the advantages of both a file system and a database management system in that it can store arbitrarily defined programming language objects and at the same time maintain a high-level description of their meaning. This work presents a high-level model of data that can be used to describe office objects more effectively than data processing oriented models. -

A VLSI Architecture for Enhancing Software Reliability Kanad Ghose Iowa State University

Iowa State University Capstones, Theses and Retrospective Theses and Dissertations Dissertations 1988 A VLSI architecture for enhancing software reliability Kanad Ghose Iowa State University Follow this and additional works at: https://lib.dr.iastate.edu/rtd Part of the Computer Sciences Commons Recommended Citation Ghose, Kanad, "A VLSI architecture for enhancing software reliability " (1988). Retrospective Theses and Dissertations. 9345. https://lib.dr.iastate.edu/rtd/9345 This Dissertation is brought to you for free and open access by the Iowa State University Capstones, Theses and Dissertations at Iowa State University Digital Repository. It has been accepted for inclusion in Retrospective Theses and Dissertations by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. INFORMATION TO USERS The most advanced technology has been used to photo graph and reproduce this manuscript from the microfilm master. UMI films the original text directly from the copy submitted. Thus, some dissertation copies are in typewriter face, while others may be from a computer printer. In the unlikely event that the author did not send UMI a complete manuscript and there are missing pages, these will be noted. Also, if unauthorized copyrighted material had to be removed, a note will indicate the deletion. Oversize materials (e.g., maps, drawings, charts) are re produced by sectioning the original, beginning at the upper left-hand comer and continuing from left to right in equal sections with small overlaps. Each oversize page is available as one exposure on a standard 35 mm slide or as a 17" x 23" black and white photographic print for an additional charge. -

(J. Bayko) Date: 12 Jun 92 18:49:18 GMT Newsgroups: Alt.Folklore.Computers,Comp.Arch Subject: Great Microprocessors of the Past and Present

From: [email protected] (J. Bayko) Date: 12 Jun 92 18:49:18 GMT Newsgroups: alt.folklore.computers,comp.arch Subject: Great Microprocessors of the Past and Present I had planned to submit an updated version of the Great Microprocessor list after I’d completed adding new processors, checked out little bits, and so on... That was going to take a while... But then I got to thinking of all the people stuck with the origional error-riddled version. All these poor people who’d kept it, or perhaps placed in in an FTP site, or even - using it for reference??! In good conscience, I decided I can’t leave that erratic old version lying out there, where people might think it’s accurate. So here is an interim release. There will be more to it later - the 320xx will be included eventually, for example. But at least this has been debugged greatly. Enjoy... John Bayko. — Great Microprocessors of the Past and Present (V 3.2.1) Section One: Before the Great Dark Cloud. ————————— Part I: The Intel 4004 (1972) The first single chip CPU was the Intel 4004, a 4-bit processor meant for a calculator. It processed data in 4 bits, but its instructions were 8 bits long. Internally, it featured four 12 bit(?) registers which acted as an internal evaluation stack. The Stack Pointer pointed to one of these registers, not a memory location (only CALL and RET instructions operated on the Stack Pointer). There were also sixteen 4-bit (or eight 8-bit) general purpose registers The 4004 had 46 instructions. -

Secure Foundational Exabyte Hpc Systems for 2020 and Beyond Sv/128 - Risc-V

SECURE FOUNDATIONAL EXABYTE HPC SYSTEMS FOR 2020 AND BEYOND SV/128 - RISC-V Steven J. Wallach ([email protected]) Presentation Outline • Background Material (Part 1) • Previous efforts/research on protection • Full Proposal (Part 2) • 128 bit logical address • 64 bit Unique Object ID • First implementation (Part 3 & 4) • RISC-V SV128 ([21] Github) • 32 bit Object ID • Programmer Visible State • Hardware 훍-State [19] • Contemporary security issues March 2020 - SV128 - BSC 2 What’s Next • “The end of Moore’s law could be the best thing that has happened in computing since the beginning of Moore’s law. Confronting the end of an epoch should enable a new era of creativity by encouraging computer scientists to invent biologically inspired devices, circuits, and architectures implemented using recently emerging technologies. “ [6] R. Stanley Williams, “The End of Moore’s Law”, Computing in Science & Engineering, IEEE CS and AIP, March/April 2017 March 2020 - SV128 - BSC 3 OBJECTIVES THE BEST BENCHMARK IS THE ONE YOUR COMPETITION CAN NOT RUN • Why a 128 bit address space? • Security • Cluster wide shared virtual address • Heterogeneous Nodes • Time to do something different not just keep adding more bits • Begin the decade of Exascale computing on a scalable technology • Software/OS oriented • Upward Compatible with RV32 and RV64 • Otherwise we will continue to implement and support the sins of our parents/grandparents. • We can now begin to design & build SECURE PROGRAMMABLE EXABYTE (ZETABYTE) distributed memory systems March 2020 - SV128 -

Intel 432 System Summary: Manager's Perspective

INTEL 432 SYSTEM SUMMARY: MANAGER'S PERSPECTIVE Manual Order Number: 171867-001 Copyright © 1981 Intel Corporation Intel Corporation, 3065 Bowers Avenue, Santa Clara, California 95051 Additional copies of this manual or other Intel literature may be obtained from: Literature Department Intel Corporation 3065 Bowers Avenue Santa Clara, CA 95051 The information in this document is subject to change without notice. Intel Corporation makes no warranty of any kind with regard to this material, including, but not limited to, the implied warranties of merchantability and fitness for a particular purpose. Intel Corporation assumes no responsibility for any errors that may appear in this document. Intel Corporation makes no commitment to update nor to keep current the information contained in this document. Intel Corporation assumes no responsibility for the use of any circuitry other than circuitry embodied in an Intel product. No other circuit patent licenses are implied. Intel software products are copyrighted by and shall remain the property of Intel Corporation. Use, duplication or disclosure is subject to restrictions stated in Intel's software license, or as defined in ASPR 7-104.9(a)(9). No part of this document may be copied or reproduced in any form or by any means without the prior written consent of Intel Corporation. The following are trademarks of Intel Corporation and its affiliates and may be used only to identify Intel products: BXP Intelevision Micromap CREDIT Intellec Multibus i iRMX Multimodule ICE iSBC Plug-A-Bubble iCS iSBX PROMPT im Library Manager Promware INSITE MCS RMX/80 Intel Megachassis System 2000 Intel Micromainframe UPI pScope and the combination of ICE, iCS, iMMX, iRMX, iSBC, iSBX, MCS, or RMX and a numerical suffix. -

Implementation of Distributed Orthogonal Persistence Using

Þs-9.15 Implementation of Distributed Orthogonal Persistence llsing Virtual Memory Francis Vaughan Thesis submitted for the degree of Doctor of Philosophy in The University of Adelaide (Faculty of Mathematical and Computer Sciences) December 1994 ,Au,***ol.eJ \ qq 5 Abstract Persistent object systems greatly simplify programming tasks, since they hide the traditional distinction between short-term and long-term storage from the applications programmer. As a result, the programmer can operate at a level of abstraction in which short-term and long-term data are treated uniformly. In the past most persistent systems have been constructed above conventional operating systems and have not supported any form of distributed programming paradigm. In this thesis we explore the implementation of orthogonally persistent systems that make direct use of fhe attributes of paged virtual memory found in the majority of conventional computing platforms. These attributes are exploited to support object movement for persistent storage to addressable memory, to aid in garbage collection, to provide the illusion of larger storage spaces than the underlying architecture allows, and to provide distribution of the persistent system. The thesis further explores the different models of distribution, notably a one world model in which a single persistent space exists, and a federated one in which many co- operating spaces exist. It explores communication mechanisms between federated spaces and the problems of maintaining consistency between separate persistent spaces in a manner which ensures both a reliable and resilient computational environment. In particular characterising the interdependencies using vector clocks and the manner in which vector time can be used to provide a complete mechanism for ensuring reliable and resilient computation. -

210620-004 Literature Guide Sep Oct 1984.Pdf

INTRODUCTiON Welcome to tile intel Ut,~raHm~ Cwcie --- a full·fledged libfilry of ter.hnical support documenta tion for today's leadino .nemary ano ITIlcroproC8?sor component and system products. This comprehensive literature selection guide is a tool to help you, the Intel customer, during product selection, desiqn and operation. It is for this reason tha'l we \ieep its contents up to date. THE NEED FOR SUPPORT DOCUMENTATION As systems design becomes mcr'easlngi\! software-dependent, development time and costs will continue to rise. To help reduce, both systems ilnd en9ill8ering costs, Intel will be deslgn- ami manufacturing products Wilich will integrate more and mors software functions into system hardware. ThiS ('I' complex, hlgflly inte9rated product 'will require substantial support clocumentation, Wli! tY' incoi'poraleC) into the Intel Literature, Guilie as !11ese products emerge. - HOW TO ORDER When ordering from Tim; Utera(uP?, GUide. please use ~he order form located at the front of thio; bookiet. To 'facjHtat~} on1t;(, pleas6 tH:: SUit'} to endose H'j8 You \lv~a always receive the editjop (Y: Hny PUhUc2tion you or~jer. to change.) PleaSE} \Ilif:jte (ntt~i's Literatufe Departil~ent JOu[; Bc:vvers /\venue, Santa C1a(8., CA 95051, lol' additionai infonllation. Please note and as.',umes riO r,::!~;pol;:,ib;iity tor Ci~I}' err~)fS wl"lIe!llnay appear in ;i'formCltlon cnntc'iinecl h,~'ein :ntel retain::, thE: nghr tc. make any wirnout notice MUL PROMPT, MCS "0 code and i~:, 110t Sci.:::nces Corp0railon Intei ~~:o~poration LITERATURE In addition to the product line Handbooks listed below. -

A Hardware Implementation of a Knowledge Manipulation System for Real Time Engineering Applications

A Hardware Implementation of a Knowledge Manipulation System for Real Time Engineering Applications by Stephen Hudson B.Sc. (Hons) Doctor of Philosophy University of Edinburgh March, 199 Table Of Contents Table of Contents . 1 Acknowledgement............................................................................... V Declaration........................................................................................ V Abstract............................................................................................ Vi Abbreviations...................................................................................... VU Listof Figures ...................................................................................ix Listof Photographs ............................................................................. X Listof Tables ..................................................................................... X Chapter 1 Introduction .......................................................... 1 1 .1 Background ............................................................................ 1 1.2 Chapter Summary .................................................................... 2 Chapter 2 Intelligent Systems .............................................. 4 2.1 Introduction ............................................................................ 4 2.2 Al Techniques ......................................................................... 8 2.2.1 Production Systems .......................................................... -

P-1935-J-Conc-Biblio

5.0 Conclusions The intent of this document has been: (1) to provide a comprehensive overview of the important properties of traditional capability-based systems, (2) to point out the advantages and deficiencies of such systems with regard to the NCSC [TCSEC83] requirements, (3) to outline some possible approaches for the elimination of such deficiencies, and (4) to compare the properties of such systems to those of descriptor-based systems (with which the computer security community has been somewhat more familiar). Thus, this document can be used as a background document by both evaluators and designers of capability-based systems. 'In both cases, the reader should make use of the references provided in this document in order to help him understand some of its more subtle conclusions. [For the readers with special research and/or development interests in this area, an extensive bibliography is also provided as an appendix to this paper.] The research work necessary for this paper has led to the following findings. First, the notion of a "traditional" capability-based system can be defined based on a set of properties which are common to many capability-based systems. These properties are found in the areas of capability-based addressing and protection, and they support a number of general security and integrity policies. The discussion of the set of common properties has been essential to the investigation of the TCSEC impact on traditional capability systems. Without such a defmition, the impact and corresponding analysis would be questionable at best, because no general conclusions could be drawn from individual case studies. -

Compilerbau Für Die Common Language Runtime

Compilerbau für die Common Language Runtime Aufbau des GCC . Löwis © 2006 Martin v Quellorganistation: Probleme • Modularisierung des Compilers: – verschiedene Übersetzungsphasen (Präprozessor, Compiler, Assembler, Linker) – verschiedene Frontends und Backends – Compiler-Compiler: Programme, die Quelltext des Compilers generieren – Comilerabhängige Laufzeitbibliotheken • Portabilität, Cross-Compilerbau: – Buildsystem: System, auf dem der Compiler übersetzt wird – Hostsystem: System, auf dem der Compiler läuft – Targetsystem: System, für das der Compiler Code erzeugt – gleichzeitige Installation von Compilern für verschiedene Targets . Löwis © 2006 Martin v Compilerbau 2 Modularisierung: Toplevel-Verzeichnis • Cygnus configure: Ein Build-Lauf soll gesamte Werkzeugkette für Host/Target-Kombination erzeugen können – Overlay-Struktur: gcc, binutils (as, ld), gdb, make(?) können alle in das gleiche Verzeichnis integriert werden, und “am Stück” übersetzt werden • gcc: enthält Compiler, Laufzeitbibliotheken, Supporttools für Compiler – gcc: Verzeichnis für GCC selbst – fixincludes:Übersetzer zur Anpassung von Headerfiles – boehm-gc, libada, libffi, libgfortran, libjava, libmudflap, ›libobjc, libruntime, libssp, libstdc++v3: Laufzeitbibliotheken . Löwis – libcpp, libiberty, libintl: vom Compiler selbst verwendete Bibliotheken © 2006 Martin v Compilerbau 3 gcc • Verzeichnis gcc selbst: Buildprozess, C compiler, Definition von Compiler-Datenstrukturen (tree, RTL), middle end • config: Backends – je ein Unterverzeichnis pro Prozessor • doc: texinfo-Dokumentation -

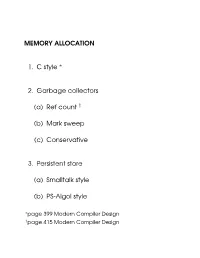

MEMORY ALLOCATION 1. C Style 2. Garbage Collectors (A) Ref Count (B

MEMORY ALLOCATION 1. C style ∗ 2. Garbage collectors (a) Ref count y (b) Mark sweep (c) Conservative 3. Persistent store (a) Smalltalk style (b) PS-Algol style ∗page 399 Modern Compiler Design ypage 415 Modern Compiler Design Malloc Data structure used size in bytes 16 1 free bits free Stack 32 0 program variables allocated block 16 0 48 1 free heap 1 MALLOC algorithm int heap[HSIZE]; char *scan(int s) {int i; for(i=0;i<HSIZE;i+=heap[i]> >2) if(heap[i]&1&&heap[i]&0xfffffffe>(s+4)) { heap[i]^=1; return &heap[i+1]; } return 0; } char *malloc(int size) { char *p=scan(size); if(p)return p; merge(); p=scan(size); if(p)return p; heapoverflow(); } 2 FREE ALGORITHM This simply toggles the free bit. free(char *p){heap[((int)p> >2)-1]^=1;} Merge algorithm merge() { int i; for(i=0;i<HSIZE;i+=heap[i]> >2) if(heap[i]&1&&heap[heap[i]> >2]&1) heap[i]+=(heap[heap[i]> >2]^1); } 3 Problem May have to chase long list of allocated blocks before a free block is found. Solution Use a free list 4 freepntr 16 1 free bits 32 0 chain free blocks allocated together block 16 0 48 1 heap 5 Problem the head of the free list will accumulate lots of small and unusable blocks, scanning these will slow access down Solution use two free pointers 1. points at the head of the free-list 2. points at the last allocated item on the free list when allocating use the second pointer to initi- ate the search, only when it reaches the end of the heap, do we re-initialise it from the first pointer. -

An Overview of Ada 202X 159

TThehe journaljournal forfor thethe internationalinternational AdaAda communitycommunity AdaAda UserUser Volume 41 Journal Number 3 Journal September 2020 Editorial 121 Quarterly News Digest 122 Conference Calendar 149 Forthcoming Events 156 Special Contribution J. Cousins An Overview of Ada 202x 159 Articles from the 20th International Real-Time Ada Workshop L.M. Pinho, S. Royuela, E. Quiñones Real-Time Issues in the Ada Parallel model with OpenMP 177 J. Garrido, D. Pisonero Fuentes, J.A. de la Puente, J. Zamorano Vectorization Challenges in Digital Signal Processing 183 Puzzle J. Barnes The Problem of the Nested Squares 187 In memoriam: Ian Christopher Wand 188 Produced by Ada-Europe Editor in Chief António Casimiro University of Lisbon, Portugal [email protected] Ada User Journal Editorial Board Luís Miguel Pinho Polytechnic Institute of Porto, Portugal Associate Editor [email protected] Jorge Real Universitat Politècnica de València, Spain Deputy Editor [email protected] Patricia López Martínez Universidad de Cantabria, Spain Assistant Editor [email protected] Kristoffer N. Gregertsen SINTEF, Norway Assistant Editor [email protected] Dirk Craeynest KU Leuven, Belgium Events Editor [email protected] Alejandro R. Mosteo Centro Universitario de la Defensa, Zaragoza, Spain News Editor [email protected] Ada-Europe Board Tullio Vardanega (President) Italy University of Padua Dirk Craeynest (Vice-President) Belgium Ada-Belgium & KU Leuven Dene Brown (General Secretary) United Kingdom SysAda Limited Ahlan Marriott (Treasurer) Switzerland White Elephant GmbH Luís Miguel Pinho (Ada User Journal) Portugal Polytechnic Institute of Porto António Casimiro (Ada User Journal) Portugal University of Lisbon Ada-Europe General Secretary Dene Brown Tel: +44 2891 520 560 SysAda Limited Email: [email protected] Signal Business Center URL: www.ada-europe.org 2 Innotec Drive BT19 7PD Bangor Northern Ireland, UK Information on Subscriptions and Advertisements Ada User Journal (ISSN 1381-6551) is published in one volume of four issues.