Implementation of Distributed Orthogonal Persistence Using

Recommended publications

S-Algol Reference Manual Ron Morrison

S-algol Reference Manual Ron Morrison University of St. Andrews, North Haugh, Fife, Scotland. KY16 9SS CS/79/1 1 Contents Chapter 1. Preface 2. Syntax Specification 3. Types and Type Rules 3.1 Universe of Discourse 3.2 Type Rules 4. Literals 4.1 Integer Literals 4.2 Real Literals 4.3 Boolean Literals 4.4 String Literals 4.5 Pixel Literals 4.6 File Literal 4.7 pntr Literal 5. Primitive Expressions and Operators 5.1 Boolean Expressions 5.2 Comparison Operators 5.3 Arithmetic Expressions 5.4 Arithmetic Precedence Rules 5.5 String Expressions 5.6 Picture Expressions 5.7 Pixel Expressions 5.8 Precedence Table 5.9 Other Expressions 6. Declarations 6.1 Identifiers 6.2 Variables, Constants and Declaration of Data Objects 6.3 Sequences 6.4 Brackets 6.5 Scope Rules 7. Clauses 7.1 Assignment Clause 7.2 if Clause 7.3 case Clause 7.4 repeat ... while ... do ... Clause 7.5 for Clause 7.6 abort Clause 8. Procedures 8.1 Declarations and Calls 8.2 Forward Declarations 2 9. Aggregates 9.1 Vectors 9.1.1 Creation of Vectors 9.1.2 upb and lwb 9.1.3 Indexing 9.1.4 Equality and Equivalence 9.2 Structures 9.2.1 Creation of Structures 9.2.2 Equality and Equivalence 9.2.3 Indexing 9.3 Images 9.3.1 Creation of Images 9.3.2 Indexing 9.3.3 Depth Selection 9.3.4 Equality and Equivalence 10. Input and Output 10.1 Input 10.2 Output 10.3 i.w, s.w and r.w 10.4 End of File 11. -

Lecture Notes in Computer Science

Orthogonal Persistence Revisited Alan Dearle, Graham N.C. Kirby and Ron Morrison School of Computer Science, University of St Andrews, North Haugh, St Andrews, Fife KY16 9SX, Scotland {al, graham, ron}@cs.st-andrews.ac.uk Abstract. The social and economic importance of large bodies of programs and data that are potentially long-lived has attracted much attention in the commercial and research communities. Here we concentrate on a set of methodologies and technologies called persistent programming. In particular we review programming language support for the concept of orthogonal persistence, a technique for the uniform treatment of objects irrespective of their types or longevity. While research in persistent programming has become unfashionable, we show how the concept is beginning to appear as a major component of modern systems. We relate these attempts to the original principles of orthogonal persistence and give a few hints about how the concept may be utilised in the future. 1 Introduction The aim of persistent programming is to support the design, construction, maintenance and operation of long-lived, concurrently accessed and potentially large bodies of data and programs. When research into persistent programming began, persistent application systems were supported by disparate mechanisms, each based upon different philosophical assumptions and implementation technologies [1]. The mix of technologies typically included naming, type and binding schemes combined with different database systems, storage architectures and query languages. The incoherence in these technologies increased the cost both intellectually and mechanically of building persistent application systems. The complexity distracted the application builder from the task in hand to concentrate on mastering the multiplicity of programming systems, and the mappings amongst them, rather than the application being developed. -

(J. Bayko) Date: 12 Jun 92 18:49:18 GMT Newsgroups: Alt.Folklore.Computers,Comp.Arch Subject: Great Microprocessors of the Past and Present

From: [email protected] (J. Bayko) Date: 12 Jun 92 18:49:18 GMT Newsgroups: alt.folklore.computers,comp.arch Subject: Great Microprocessors of the Past and Present I had planned to submit an updated version of the Great Microprocessor list after I’d completed adding new processors, checked out little bits, and so on... That was going to take a while... But then I got to thinking of all the people stuck with the original error-riddled version. All these poor people who’d kept it, or perhaps placed it in an FTP site, or even - using it for reference??! In good conscience, I decided I can’t leave that erratic old version lying out there, where people might think it’s accurate. So here is an interim release. There will be more to it later - the 320xx will be included eventually, for example. But at least this has been debugged greatly. Enjoy... John Bayko. — Great Microprocessors of the Past and Present (V 3.2.1) Section One: Before the Great Dark Cloud. ————————— Part I: The Intel 4004 (1972) The first single chip CPU was the Intel 4004, a 4-bit processor meant for a calculator. It processed data in 4 bits, but its instructions were 8 bits long. Internally, it featured four 12 bit(?) registers which acted as an internal evaluation stack. The Stack Pointer pointed to one of these registers, not a memory location (only CALL and RET instructions operated on the Stack Pointer). There were also sixteen 4-bit (or eight 8-bit) general purpose registers. The 4004 had 46 instructions. -

This Thesis Has Been Submitted in Fulfilment of the Requirements for a Postgraduate Degree (E.G

This thesis has been submitted in fulfilment of the requirements for a postgraduate degree (e.g. PhD, MPhil, DClinPsychol) at the University of Edinburgh. Please note the following terms and conditions of use: • This work is protected by copyright and other intellectual property rights, which are retained by the thesis author, unless otherwise stated. • A copy can be downloaded for personal non-commercial research or study, without prior permission or charge. • This thesis cannot be reproduced or quoted extensively from without first obtaining permission in writing from the author. • The content must not be changed in any way or sold commercially in any format or medium without the formal permission of the author. • When referring to this work, full bibliographic details including the author, title, awarding institution and date of the thesis must be given. EVALUATION OF FUNCTIONAL DATA MODELS FOR DATABASE DESIGN AND USE by KRISHNARAO GURURAO KULKARNI Ph. D. University of Edinburgh 1983 To my wife, Rama and daughter, Bhuvana Acknowledgements I am deeply indebted to my thesis supervisor, Dr. Malcolm Atkinson, for his constant guidance, help, and encouragement, for his highly incisive comments on the writing of this thesis, and for a host of other reasons. It is indeed a great pleasure to acknowledge his contribution. I am also greatly indebted to Dr. David Rees, who supervised me during the initial six months. His help and guidance during that time was invaluable. I am thankful to many of my friends in the Department for their kind advice from time to time. In particular, I would like to thank Paul Cockshott, Ken Chisholm, Pedro Hepp, Segun Owoso, George Ross, and Rob Procter for many useful discussions. -

Napier88 Reference Manual Release 2.2.1

Napier88 Reference Manual Release 2.2.1 July 1996 Ron Morrison Fred Brown* Richard Connor Quintin Cutts† Alan Dearle‡ Graham Kirby Dave Munro University of St Andrews, North Haugh, St Andrews, Fife KY16 9SS, Scotland. *Department of Computer Science, University of Adelaide, South Australia 5005, Australia. †University of Glasgow, Lilybank Gardens, Glasgow G12 8QQ, Scotland. ‡University of Stirling, Stirling FK9 4LA, Scotland. This document should be referenced as: “Napier88 Reference Manual (Release 2.2.1)”. University of St Andrews (1996). Contents: 1 INTRODUCTION; 2 CONTEXT FREE SYNTAX SPECIFICATION; 3 TYPES AND TYPE RULES; 3.1 UNIVERSE OF DISCOURSE; 3.2 THE TYPE ALGEBRA; 3.2.1 Aliasing; 3.2.2 Recursive Definitions; 3.2.3 Type Operators; 3.2.4 Recursive Operators -

A Conceptual Language for Querying Object Oriented Data

A Conceptual Language for Querying Object Oriented Data Peter J Barclay and Jessie B Kennedy Computer Studies Dept., Napier University 219 Colinton Road, Edinburgh EH14 1DJ Abstract. A variety of languages have been proposed for object oriented database systems in order to provide facilities for ad hoc querying. However, in order to model at the conceptual level, an object oriented schema definition language must itself provide facilities for describing the behaviour of data. This paper demonstrates that with only modest extensions, such a schema definition language may serve as a query notation. These extensions are concerned solely with supporting the interactive nature of ad hoc querying, providing facilities for naming and displaying query operations and their results. 1 Overview Section 2 reviews the background to this work; its objectives are outlined in section 3. Section 4 describes NOODL constructs which are used to define behaviour within schemata, and section 5 examines how these may be extended for interactive use. The resulting query notation is evaluated in section 6. Section 7 outlines some further work and section 8 concludes. 2 Background NOM (the Napier Object Model) is a simple data model intended to allow object oriented modelling of data at a conceptual level; it was first presented in [BK91] and is described fully in [Bar93]. NOM has been used to model [BK92a] and to support the implementation [BFK92] of novel database applications, and also for the investigation of specific modelling issues such as declarative integrity constraints and activeness [BK92b] and the incorporation of views [BK93] in object oriented data models. -

Semantic Integrity for Persistent Objects 1 Introduction

Semantic Integrity for Persistent Objects Peter J Barclay and Jessie B Kennedy Napier Polytechnic, Craiglockhart Campus 219 Colinton Road, Edinburgh EH14 1DJ e-mail: [email protected] and [email protected] Abstract Modelling constructs for specifying semantic integrity are reviewed, and their implicit execution semantics discussed. An integrity maintenance model based on these constructs is presented. An implementation of this model in a persistent programming language is described, allowing flexible automated dynamic integrity management for applications updating a persistent store; this implementation is based on an event-driven architecture. Keywords: persistent programming, conceptual modelling, semantic integrity, active object-oriented databases, code generation 1 Introduction Napier88 [MBCD89], [DCBM89] is a high-level, strongly-typed, block structured programming language with orthogonal persistence [Coc82]; that is, objects of any type created by programs can outlive the execution of the program which created them. Persistent objects can be reused in a type-secure way by subsequent executions of the same program, or by other programs. Persistent languages are well-suited to the construction of data-intensive applications [Coo90]; programs are written to manipulate data, and the inbuilt (and transparent) persistence mechanism provides for its storage and retrieval. This article describes an integrity management system (IMS) written in Napier88; this forms part of a larger system which supports the development of persistent application systems [BK92]. -

A Hardware Implementation of a Knowledge Manipulation System for Real Time Engineering Applications

A Hardware Implementation of a Knowledge Manipulation System for Real Time Engineering Applications by Stephen Hudson B.Sc. (Hons) Doctor of Philosophy University of Edinburgh March, 199 Table of Contents: Acknowledgement; Declaration; Abstract; Abbreviations; List of Figures; List of Photographs; List of Tables; Chapter 1 Introduction; 1.1 Background; 1.2 Chapter Summary; Chapter 2 Intelligent Systems; 2.1 Introduction; 2.2 AI Techniques; 2.2.1 Production Systems -

RYAN MURPHY and DAVID MILLER the Couple Has Made an Extraordinary $10 Million Donation in Honor of Their Son, Who Was Treated for Cancer at CHLA

imagineFALL 2018 RYAN MURPHY AND DAVID MILLER The couple has made an extraordinary $10 million donation in honor of their son, who was treated for cancer at CHLA. ABOUT US The mission of Children’s Hospital Los Angeles is to create hope and build healthier futures. Founded in 1901, CHLA is the top-ranked pediatric hospital in California and among the top 10 in the nation, according to the prestigious U.S. News & World Report Honor Roll of children’s hospitals for 2018-19. The hospital is home to The Saban Research Institute and is one of the few freestanding pediatric hospitals where scientific inquiry is combined with clinical care devoted exclusively to children. Children’s Hospital Los Angeles is a premier teaching hospital and has been affiliated with the Keck School of Medicine of USC since 1932. Ford Miller Murphy TABLE OF CONTENTS 2 A Letter From the President and Chief Executive Officer 3 A Message From the Chief Development Officer 4 Grateful Parents Ryan Murphy and David Miller Give $10 Million to CHLA 8 The Armenian Ambassadors Working Together to Support Children’s Health Care 10 Shaving the Way to a Cure St. Baldrick’s Foundation 12 A Miracle in May Costco Wholesale 14 Sophia Scano Fitzmaurice Changing the Future for Children and Adults With a Rare Blood Disease 15 Good News! Charitable Gift Annuity Rates Have Increased 16 Anonymous Donation Funds Emergency Department Expansion 16 Upcoming Events 17 In Memoriam 18 CHLA Happenings Ford Miller Murphy 21 The Children’s Hospital Los Angeles Gala: From Paris With Love 25 Walk and Play L.A. -

Napier88 – a Database Programming Language?

This paper should be referenced as: Dearle, A., Connor, R.C.H., Brown, A.L. & Morrison, R. “Napier88 - A Database Programming Language?”. In Proc. 2nd International Workshop on Database Programming Languages, Salishan, Oregon (1989) pp 179-195. Napier88 – A Database Programming Language? Alan Dearle, Richard Connor, Fred Brown & Ron Morrison al%uk.ac.st-and.cs@ukc richard%uk.ac.st-and.cs@ukc ab%uk.ac.st-and.cs@ukc ron%uk.ac.st-and.cs@ukc Department of Computational Science University of St Andrews North Haugh St Andrews Scotland KY16 9SS. Abstract This is a description of the Napier88 type system based on "A Framework for Comparing Type Systems for Database Programming Languages" by Albano et al. 1 Introduction This is a description of the Napier88 type system based on "A Framework for Comparing Type Systems for Database Programming Languages" [ADG89]. Napier88 is designed to be a general purpose persistent programming language. The authors envisage the language to be used to construct large, integrated computer systems such as CAD, CASE, hypermedia, database and Office Integration systems. Such systems have the following requirements in common which are met by the Napier88 programming language: 1. the need to support large amounts of dynamically changing, structured data, and, 2. the need for sophisticated MMI. In order to meet these demands an integrated environment is needed which will support all of the application builders' requirements. For comparison, it is useful to distinguish between Database Programming languages and Persistent Programming Languages. Database programming languages are not necessarily computationally complete. They often require the use of other ‘conventional’ programming languages [CL88]. -

Compiler Construction for the Common Language Runtime

Compiler Construction for the Common Language Runtime: Structure of GCC. © 2006 Martin v. Löwis. Source organization: problems • Modularization of the compiler: – different translation phases (preprocessor, compiler, assembler, linker) – different front ends and back ends – compiler compilers: programs that generate source code of the compiler – compiler-dependent runtime libraries • Portability and building cross compilers: – build system: the system on which the compiler is compiled – host system: the system on which the compiler runs – target system: the system for which the compiler generates code – simultaneous installation of compilers for different targets. Modularization: top-level directory • Cygnus configure: a single build run should be able to produce the entire tool chain for a host/target combination – overlay structure: gcc, binutils (as, ld), gdb, make(?) can all be integrated into the same directory and compiled in one go • gcc: contains the compiler, runtime libraries, and support tools for the compiler – gcc: directory for GCC itself – fixincludes: translator for adapting header files – boehm-gc, libada, libffi, libgfortran, libjava, libmudflap, libobjc, libruntime, libssp, libstdc++v3: runtime libraries – libcpp, libiberty, libintl: libraries used by the compiler itself. gcc • The gcc directory itself: build process, C compiler, definition of compiler data structures (tree, RTL), middle end • config: back ends – one subdirectory per processor • doc: texinfo documentation -

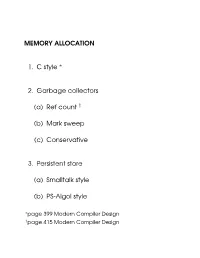

MEMORY ALLOCATION 1. C Style 2. Garbage Collectors (A) Ref Count (B

MEMORY ALLOCATION

1. C style (page 399, Modern Compiler Design)
2. Garbage collectors
   (a) Ref count (page 415, Modern Compiler Design)
   (b) Mark sweep
   (c) Conservative
3. Persistent store
   (a) Smalltalk style
   (b) PS-Algol style

Malloc: data structure used

[Figure: a heap of blocks, each headed by a word holding the block size in bytes and a free bit (example headers: 16/1 free, 32/0 allocated, 16/0 allocated, 48/1 free); program variables on the stack point at allocated blocks.]

MALLOC algorithm

    int heap[HSIZE];

    /* First fit: walk the block headers and claim the first free block that is big enough. */
    char *scan(int s)
    {
        int i;
        for (i = 0; i < HSIZE; i += heap[i] >> 2)
            if ((heap[i] & 1) && (heap[i] & 0xfffffffe) > (s + 4)) {
                heap[i] ^= 1;                  /* clear the free bit */
                return (char *)&heap[i + 1];   /* payload follows the header word */
            }
        return 0;
    }

    char *malloc(int size)
    {
        char *p = scan(size);
        if (p) return p;
        merge();                               /* coalesce free neighbours, then retry */
        p = scan(size);
        if (p) return p;
        heapoverflow();
        return 0;
    }

FREE ALGORITHM

This simply toggles the free bit.

    void free(char *p) { heap[((int *)p - heap) - 1] ^= 1; }

Merge algorithm

    void merge(void)
    {
        int i, next;
        for (i = 0; i < HSIZE; i += heap[i] >> 2) {
            next = i + (heap[i] >> 2);         /* header of the following block */
            if ((heap[i] & 1) && next < HSIZE && (heap[next] & 1))
                heap[i] += heap[next] ^ 1;     /* add the neighbour's size, keep our free bit */
        }
    }

Problem: We may have to chase a long list of allocated blocks before a free block is found.
Solution: Use a free list.

[Figure: freepntr points at the first free block; free blocks are chained together through the heap (example headers: 16/1, 32/0, 16/0, 48/1).]

Problem: The head of the free list will accumulate lots of small, unusable blocks, and scanning these will slow allocation down.
Solution: Use two free pointers:
1. one points at the head of the free list;
2. the other points at the last allocated item on the free list.
When allocating, use the second pointer to initiate the search; only when it reaches the end of the heap do we re-initialise it from the first pointer.
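The two-pointer idea that closes the excerpt can be sketched in C roughly as follows. This is a minimal illustration under stated assumptions, not the slides' own code. It keeps the header layout from the excerpt (size in bytes with the low bit as the free bit), but for brevity it walks the implicit sequence of block headers instead of a chained free list. The names `heap_init`, `heap_alloc`, `heap_free` and `rover`, and the value of `HSIZE`, are hypothetical. Here `rover` plays the role of the second pointer, and index 0 stands in for the first.

```c
#include <stddef.h>

#define HSIZE 64                              /* heap size in words (hypothetical value) */
#define WORD  ((unsigned)sizeof(unsigned))    /* bytes per header/payload word */

static unsigned heap[HSIZE];   /* header word = block size in bytes | free bit */
static size_t rover;           /* header index where the next search starts */

/* Turn the whole heap into a single free block. */
void heap_init(void) { heap[0] = (HSIZE * WORD) | 1u; rover = 0; }

static size_t block_words(size_t i) { return (heap[i] & ~1u) / WORD; }

/* Coalesce runs of adjacent free blocks, as in the slides' merge step. */
static void merge(void) {
    size_t i = 0;
    while (i < HSIZE) {
        size_t next = i + block_words(i);
        if ((heap[i] & 1u) && next < HSIZE && (heap[next] & 1u))
            heap[i] += heap[next] & ~1u;      /* absorb neighbour, stay on block i */
        else
            i = next;
    }
    rover = 0;   /* the old rover may now lie inside a merged block */
}

/* Search the headers in [from, to) for a free block of at least `need` bytes. */
static void *scan(size_t from, size_t to, unsigned need) {
    for (size_t i = from; i < to; i += block_words(i)) {
        unsigned size = heap[i] & ~1u;
        if ((heap[i] & 1u) && size >= need) {
            if (size - need >= 2 * WORD) {    /* split: the tail stays free */
                heap[i + need / WORD] = (size - need) | 1u;
                size = need;
            }
            heap[i] = size;                   /* free bit cleared */
            rover = i;                        /* remember the last allocated block */
            return &heap[i + 1];
        }
    }
    return NULL;
}

/* Allocate `size` bytes, or return NULL when no block fits even after merging. */
void *heap_alloc(unsigned size) {
    unsigned need = (size + WORD - 1) / WORD * WORD + WORD;  /* rounded payload + header */
    if (heap[0] == 0) heap_init();            /* lazy initialisation */
    void *p = scan(rover, HSIZE, need);       /* start at the second pointer */
    if (!p) p = scan(0, rover, need);         /* end of heap reached: restart from the head */
    if (!p) { merge(); p = scan(0, HSIZE, need); }
    return p;
}

/* Release a block by setting the free bit in its header. */
void heap_free(void *p) {
    size_t i = (size_t)((unsigned *)p - heap - 1);
    heap[i] |= 1u;
}
```

Because the search resumes from `rover`, a block freed near the start of the heap is not reused immediately. It is only reconsidered once the search has run off the end of the heap and wrapped around, which is what keeps small leftover blocks at the front from being rescanned on every allocation.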