But Their Fundamental Operation Has Remained Much the Same

Recommended publications

The Case for Hardware Transactional Memory

Lecture 20: Transactional Memory. CMU 15-418: Parallel Computer Architecture and Programming (Spring 2012). Giving credit ▪ Many of the slides in today’s talk are the work of Professor Christos Kozyrakis (Stanford University). Raising level of abstraction for synchronization ▪ Machine-level synchronization prims: - fetch-and-op, test-and-set, compare-and-swap ▪ We used these primitives to construct higher level, but still quite basic, synchronization prims: - lock, unlock, barrier ▪ Today: - transactional memory: higher level synchronization. What you should know ▪ What a transaction is ▪ The difference between the atomic construct and locks ▪ Design space of transactional memory implementations - data versioning policy - conflict detection policy - granularity of detection ▪ Understand HW implementation of transactional memory (consider how it relates to coherence protocol implementations we’ve discussed in the past). Example: void deposit(account, amount) { lock(account); int t = bank.get(account); t = t + amount; bank.put(account, t); unlock(account); } ▪ Deposit is a read-modify-write operation: want “deposit” to be atomic with respect to other bank operations on this account. ▪ Lock/unlock pair is one mechanism to ensure atomicity (ensures mutual exclusion on the account). Programming with transactional memory. With locks: void deposit(account, amount) { lock(account); int t = bank.get(account); t = t + amount; bank.put(account, t); unlock(account); } With a transaction: void deposit(account, amount) { atomic { int t = bank.get(account); t = t + amount; bank.put(account, t); } } ▪ Declarative synchronization ▪ Programmer says what but not how ▪ No explicit declaration or management of locks ▪ System implements synchronization ▪ Typically with optimistic concurrency ▪ Slow down only on true conflicts (R-W or W-W). Declarative vs. -
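As a rough illustration of the lock-based `deposit` in the excerpt above, here is a minimal Python sketch. The `Bank` class, its per-account lock table, and the `run_deposits` driver are hypothetical helpers introduced for this example and are not taken from the slides. It shows only the lock/unlock variant; Python has no built-in `atomic { }` transactional construct, so the transactional version is not modeled.

```python
import threading


class Bank:
    """Toy bank with one lock per account (hypothetical, for illustration only)."""

    def __init__(self, accounts):
        self._balances = {name: 0 for name in accounts}
        self._locks = {name: threading.Lock() for name in accounts}

    def deposit(self, account, amount):
        # Equivalent of lock(account) ... unlock(account):
        # the with-block acquires the lock and releases it on exit.
        with self._locks[account]:
            t = self._balances[account]   # bank.get(account)
            t = t + amount
            self._balances[account] = t   # bank.put(account, t)

    def balance(self, account):
        with self._locks[account]:
            return self._balances[account]


def run_deposits(n_threads=8, per_thread=1000):
    """Have several threads deposit 1 unit repeatedly into the same account."""
    bank = Bank(["alice"])

    def worker():
        for _ in range(per_thread):
            bank.deposit("alice", 1)

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for th in threads:
        th.start()
    for th in threads:
        th.join()
    return bank.balance("alice")
```

Because every read-modify-write on an account happens while that account's lock is held, the final balance equals the total number of deposits regardless of how the threads interleave.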

Robust Architectural Support for Transactional Memory in the Power Architecture

Robust Architectural Support for Transactional Memory in the Power Architecture. Harold W. Cain∗ (IBM Research, Yorktown Heights, NY, USA), Brad Frey (IBM STG, Austin, TX, USA), Derek Williams (IBM STG, Austin, TX, USA), Maged M. Michael (IBM Research, Yorktown Heights, NY, USA), Cathy May (IBM Research (retired), Yorktown Heights, NY, USA), Hung Le (IBM STG, Austin, TX, USA). ABSTRACT On the twentieth anniversary of the original publication [10], following ten years of intense activity in the research literature, hardware support for transactional memory (TM) has finally become a commercial reality, with HTM-enabled chips currently or soon-to-be available from many hardware vendors. In this paper we describe architectural support for TM added to a future version of the Power ISA™. Two imperatives drove the development: the desire to complement our weakly-consistent memory model with a more friendly interface to simplify the development and porting of multi-threaded applications, and the need for robustness beyond that of some early implementations. … in current p795 systems, with 8 TB of DRAM), as well as strengths in RAS that differentiate it in the market, adding TM must not compromise any of these virtues. A robust system is one that is sturdy in construction, a trait that does not usually come to mind in respect to HTM systems. We structured TM to work in harmony with features that support the architecture's scalability. Our goal has been to provide a comprehensive programming environment including support for simple system calls and debug aids, while providing a robust (in the sense of "no surprises") execution environment with reasonably consistent performance and without unexpected transaction failures. TM must be usable throughout the system stack: in hypervisors, oper- -

Nessus 8.3 User Guide

Nessus 8.3.x User Guide. Last Updated: September 24, 2021. Table of Contents: Welcome to Nessus 8.3.x; Get Started with Nessus; Navigate Nessus; System Requirements; Hardware Requirements; Software Requirements; Customize SELinux Enforcing Mode Policies; Licensing Requirements; Deployment Considerations; Host-Based Firewalls; IPv6 Support; Virtual Machines; Antivirus Software; Security Warnings; Certificates and Certificate Authorities; Custom SSL Server Certificates; Create a New Server Certificate and CA Certificate; Upload a Custom Server Certificate and CA Certificate; Trust a Custom CA; Create SSL Client Certificates for Login; Nessus Manager Certificates and Nessus Agent; Install Nessus; Download Nessus; Install Nessus; Install Nessus on Linux; Install Nessus on Windows; Install Nessus on Mac OS X; Install Nessus Agents; Retrieve the Linking Key; Install a Nessus Agent on Linux; Install a Nessus Agent on Windows; Install a Nessus Agent on Mac OS X; Upgrade Nessus and Nessus Agents; Upgrade Nessus; Upgrade from Evaluation; Upgrade Nessus on Linux; Upgrade Nessus on Windows; Upgrade Nessus on Mac OS X; Upgrade a Nessus Agent; Configure Nessus; Install Nessus Home, Professional, or Manager; Link to Tenable.io; Link to Industrial Security; Link to Nessus Manager; Managed by Tenable.sc; Manage Activation Code. Copyright © 2021 Tenable, Inc. All rights reserved. Tenable, Tenable.io, Tenable Network Security, Nessus, SecurityCenter, SecurityCenter Continuous View and Log Correlation Engine are registered trademarks of Tenable, Inc. Tenable.sc, Tenable.ot, Lumin, Indegy, Assure, and The Cyber Exposure Company are trademarks of Tenable, Inc. All other products or services are trademarks of their respective -

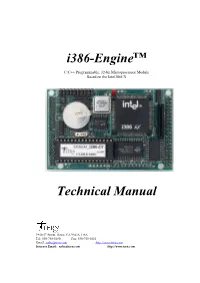

I386-Engine™ Technical Manual

i386-Engine™ C/C++ Programmable, 32-bit Microprocessor Module Based on the Intel386EX. Technical Manual. 1950 5th Street, Davis, CA 95616, USA Tel: 530-758-0180 Fax: 530-758-0181 http://www.tern.com COPYRIGHT i386-Engine, VE232, A-Engine, A-Core, C-Engine, V25-Engine, MotionC, BirdBox, PowerDrive, SensorWatch, Pc-Co, LittleDrive, MemCard, ACTF, and NT-Kit are trademarks of TERN, Inc. Intel386EX and Intel386SX are trademarks of Intel Corporation. Borland C/C++ are trademarks of Borland International. Microsoft, MS-DOS, Windows, and Windows 95 are trademarks of Microsoft Corporation. IBM is a trademark of International Business Machines Corporation. Version 2.00 October 28, 2010 No part of this document may be copied or reproduced in any form or by any means without the prior written consent of TERN, Inc. © 1998-2010 Important Notice TERN is developing complex, high technology integration systems. These systems are integrated with software and hardware that are not 100% defect free. TERN products are not designed, intended, authorized, or warranted to be suitable for use in life-support applications, devices, or systems, or in other critical applications. TERN and the Buyer agree that TERN will not be liable for incidental or consequential damages arising from the use of TERN products. It is the Buyer's responsibility to protect life and property against incidental failure. TERN reserves the right to make changes and improvements to its products without providing notice. -

C Programmer's Manual

C PROGRAMMER'S MANUAL. CGC 7900 SERIES COLOR GRAPHICS COMPUTERS. Whitesmiths, Ltd. C PROGRAMMERS' MANUAL Release: 2.1 Date: March 1982 The C language was developed at Bell Laboratories by Dennis Ritchie; Whitesmiths, Ltd. has endeavored to remain as faithful as possible to his language specification. The external specifications of the Idris operating system, and of most of its utilities, are based heavily on those of UNIX, which was also developed at Bell Laboratories by Dennis Ritchie and Ken Thompson. Whitesmiths, Ltd. gratefully acknowledges the parentage of many of the concepts we have commercialized, and we thank Western Electric Co. for waiving patent licensing fees for use of the UNIX protection mechanism. The successful implementation of Whitesmiths' compilers, operating systems, and utilities, however, is entirely the work of our programming staff and allied consultants. For the record, UNIX is a trademark of Bell Laboratories; IAS, PDP-11, RSTS/E, RSX-11M, RT-11, VAX, VMS, and nearly every other term with an 11 in it all are trademarks of Digital Equipment Corporation; CP/M is a trademark of Digital Research Co.; MC68000 and VERSAdos are trademarks of Motorola Inc.; ISIS is a trademark of Intel Corporation; A-Natural and Idris are trademarks of Whitesmiths, Ltd. C is not. Copyright (c) 1978, 1979, 1980, 1981 by Whitesmiths, Ltd. SECTIONS I. The C Language II. Portable C Runtime Library III. C System Interface Library IV. C Machine Interface Library SCOPE This manual describes the C programming language, as implemented by Whitesmiths, Ltd., and the various library routines that make up the machine independent C environment. -

COSMIC C Cross Compiler for Motorola 68HC11 Family

COSMIC C Cross Compiler for Motorola 68HC11 Family. COSMIC’s C cross compiler, cx6811 for the Motorola 68HC11 family of microcontrollers, incorporates over twenty years of innovative design and development effort. In the field since 1986 and previously sold under the Whitesmiths brand name, cx6811 is reliable, field-tested and incorporates many features to help ensure your embedded 68HC11 design meets and exceeds performance specifications. The C Compiler package for Windows includes: COSMIC integrated development environment (IDEA), optimizing C cross compiler, macro assembler, linker, librarian, object inspector, hex file generator, object format converters, debugging support utilities, run-time libraries and a compiler command driver. The PC compiler package runs under Windows 95/98/ME/NT4/2000 and XP. Key Features: Supports All 68HC11 Family Microcontrollers; ANSI C Implementation; Extensions to ANSI for Embedded Systems; Global and Processor-Specific Optimizations; Optimized Function Calling; C support for Internal EEPROM; C support for Direct Page Data; C support for Code Bank Switching; C support for Interrupt Handlers; Three In-Line Assembly Methods; User-defined Code/Data Program Sections. Complexity of a more generic compiler. You also get header file support for many of the popular 68HC11 peripherals, so you can access their memory mapped objects by name either at the C or assembly language levels. ANSI / ISO Standard C: This implementation conforms with the ANSI and ISO Standard C specifications which helps you protect your software investment by aiding code portability and reliability. C Runtime Support: C runtime support consists of a subset of the standard ANSI library, and is provided in C source form with the binary package so you are free to modify library routines to match your needs. -

Design and VHDL Implementation of an Application-Specific Instruction Set Processor

Design and VHDL Implementation of an Application-Specific Instruction Set Processor. Lauri Isola. School of Electrical Engineering. Thesis submitted for examination for the degree of Master of Science in Technology. Espoo 19.12.2019. Supervisor Prof. Jussi Ryynänen. Advisor D.Sc. (Tech.) Marko Kosunen. Copyright © 2019 Lauri Isola. Aalto University, P.O. BOX 11000, 00076 AALTO www.aalto.fi Abstract of the master’s thesis. Author Lauri Isola. Title Design and VHDL Implementation of an Application-Specific Instruction Set Processor. Degree programme Computer, Communication and Information Sciences. Major Signal, Speech and Language Processing. Code of major ELEC3031. Supervisor Prof. Jussi Ryynänen. Advisor D.Sc. (Tech.) Marko Kosunen. Date 19.12.2019. Number of pages 66+45. Language English. Abstract Open source processors are becoming more popular. They are a cost-effective option in hardware designs, because using the processor does not require an expensive license. However, a limited number of open source processors are still available. This is especially true for Application-Specific Instruction Set Processors (ASIPs). In this work, an ASIP processor was designed and implemented in VHDL hardware description language. The design was based on goals that make the processor easily customizable, and to have a low resource consumption in a System-on-Chip (SoC) design. Finally, the processor was implemented on an FPGA circuit, where it was tested with a specially designed VGA graphics controller. Necessary software tools, such as an assembler were also implemented for the processor. The assembler was used to write comprehensive test programs for testing and verifying the functionality of the processor. This work also examined some future upgrades of the designed processor. -

Comparative Architectures

Comparative Architectures. CST Part II, 16 lectures, Lent Term 2006. David Greaves. Slides Lectures 1-13 (C) 2006 IAP + DJG. Course Outline 1. Comparing Implementations • Developments fabrication technology • Cost, power, performance, compatibility • Benchmarking 2. Instruction Set Architecture (ISA) • Classic CISC and RISC traits • ISA evolution 3. Microarchitecture • Pipelining • Super-scalar: static & out-of-order • Multi-threading • Effects of ISA on µarchitecture and vice versa 4. Memory System Architecture • Memory Hierarchy 5. Multi-processor systems • Cache coherent and message passing. Understanding design tradeoffs. Reading material • OHP slides, articles • Recommended Book: John Hennessy & David Patterson, Computer Architecture: a Quantitative Approach (3rd ed.) 2002 Morgan Kaufmann • MIT Open Courseware: 6.823 Computer System Architecture, by Krste Asanovic • The Web http://bwrc.eecs.berkeley.edu/CIC/ http://www.chip-architect.com/ http://www.geek.com/procspec/procspec.htm http://www.realworldtech.com/ http://www.anandtech.com/ http://www.arstechnica.com/ http://open.specbench.org/ • comp.arch News Group. Further Reading and Reference • M Johnson, Superscalar microprocessor design, 1991 Prentice-Hall • P Markstein, IA-64 and Elementary Functions, 2000 Prentice-Hall • A Tanenbaum, Structured Computer Organization (2nd ed.) 1990 Prentice-Hall • A Someren & C Atack, The ARM RISC Chip, 1994 Addison-Wesley • R Sites, Alpha Architecture Reference Manual, 1992 Digital Press • G Kane & J Heinrich, MIPS RISC Architecture -

CS152: Computer Systems Architecture Pipelining

CS152: Computer Systems Architecture: Pipelining. Sang-Woo Jun, Winter 2021. Large amount of material adapted from MIT 6.004, “Computation Structures”, Morgan Kaufmann “Computer Organization and Design: The Hardware/Software Interface: RISC-V Edition”, and CS 152 Slides by Isaac Scherson. Eight great ideas ❑ Design for Moore’s Law ❑ Use abstraction to simplify design ❑ Make the common case fast ❑ Performance via parallelism ❑ Performance via pipelining ❑ Performance via prediction ❑ Hierarchy of memories ❑ Dependability via redundancy. But before we start… Performance Measures ❑ Two metrics when designing a system 1. Latency: The delay from when an input enters the system until its associated output is produced 2. Throughput: The rate at which inputs or outputs are processed ❑ The metric to prioritize depends on the application o Embedded system for airbag deployment? Latency o General-purpose processor? Throughput. Performance of Combinational Circuits ❑ For combinational logic o latency = t_PD o throughput = 1/t_PD. F and G not doing work! Just holding output data. [Figure: combinational circuit with blocks F(X), G(X), and H(X)] Is this an efficient way of using hardware? Source: MIT 6.004 2019 L12. Pipelined Circuits ❑ Pipelining by adding registers to hold F and G’s output o Now F & G can be working on input X_{i+1} while H is performing computation on X_i o A 2-stage pipeline! o For input X during clock cycle j, corresponding output is emitted during clock j+2. Assuming ideal registers. Assuming latencies of 15, 20, 25… [Figure: pipelined circuit with blocks F(X), G(X), and H(X) and latencies 15, 20, 25] Source: MIT 6.004 2019 L12. Pipelined Circuits: Unpipelined: latency 20+25=45, throughput 1/45. 2-stage pipelined: latency 25+25=50 (Worse!), throughput 1/25 (Better!). Source: MIT 6.004 2019 L12. Pipeline conventions ❑ Definition: o A well-formed K-Stage Pipeline (“K-pipeline”) is an acyclic circuit having exactly K registers on every path from an input to an output. -
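The latency and throughput figures in the excerpt above can be reproduced with a short Python sketch. It rests on assumptions that the flattened slide text only suggests: that F and G run in parallel and both feed H, that the delays 15, 20, and 25 belong to F, G, and H respectively, and that pipeline registers are ideal (zero delay). The function names are hypothetical.

```python
from fractions import Fraction


def unpipelined(t_f, t_g, t_h):
    """Latency and throughput of the combinational circuit.

    Assumes F and G operate in parallel and H waits for both outputs,
    so the propagation delay is the slower of F and G plus H.
    """
    t_pd = max(t_f, t_g) + t_h
    return t_pd, Fraction(1, t_pd)


def two_stage_pipelined(t_f, t_g, t_h):
    """Latency and throughput with registers after F and G (ideal registers).

    Stage 1 holds F and G, stage 2 holds H. The clock period must cover
    the slowest stage, and every input takes two clock cycles to emerge.
    """
    clock = max(max(t_f, t_g), t_h)
    return 2 * clock, Fraction(1, clock)


if __name__ == "__main__":
    print(unpipelined(15, 20, 25))
    print(two_stage_pipelined(15, 20, 25))
```

Under these assumptions the unpipelined circuit has latency 45 and throughput 1/45, while the 2-stage pipeline has latency 50 and throughput 1/25, which matches the slide's point that pipelining trades slightly worse latency for better throughput.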

High-Performance Computing and Compiler Optimization

High-Performance Computing and Compiler Optimization. P. (Saday) Sadayappan, August 2019. What is a compiler? • Traditionally: program that analyzes and translates from a high level language (e.g., C++) to low-level assembly language that can be executed by hardware. Example source: int a, b; a = 3; if (a < 4) { b = 2; } else { b = 3; } Translated: var a; var b; mov 3 a; mov 4 r1; cmpi a r1; jge l_e; mov 2 b; jmp l_d; l_e: mov 3 b; l_d: ;done. Compilers are translators. Source languages: • Fortran • C • C++ • Java • Text processing language • HTML/XML • Command & scripting languages • Natural language • Domain specific languages. Targets: • Machine code • Virtual machine code • Transformed source code • Augmented source code • Low-level commands • Semantic components • Another language. Source: Milind Kulkarni. Compilers are optimizers • Can perform optimizations to make a program more efficient. Example source: int a, b, c; b = a + 3; c = a + 3; Unoptimized: var a; var b; var c; mov a r1; addi 3 r1; mov r1 b; mov a r2; addi 3 r2; mov r2 c. Optimized: var a; var b; var c; mov a r1; addi 3 r1; mov r1 b; mov r1 c. Source: Milind Kulkarni. ♦ Early days of computing: Minimizing number of executed instructions minimized program execution time § Sequential processors: had a single functional unit to execute instructions § Compiler technology is very advanced in minimizing number of instructions ♦ Today: § All computers are highly parallel: must make use of all parallel resources § Cost of data movement dominates the cost of performing the operations on data § Many challenging problems for compilers. The Good Old Days for Software Source: J. -

The Intel X86 Microarchitectures Map Version 2.0

The Intel x86 Microarchitectures Map Version 2.0 P6 (1995, 0.50 to 0.35 μm) 8086 (1978, 3 µm) 80386 (1985, 1.5 to 1 µm) P5 (1993, 0.80 to 0.35 μm) NetBurst (2000 , 180 to 130 nm) Skylake (2015, 14 nm) Alternative Names: i686 Series: Alternative Names: iAPX 386, 386, i386 Alternative Names: Pentium, 80586, 586, i586 Alternative Names: Pentium 4, Pentium IV, P4 Alternative Names: SKL (Desktop and Mobile), SKX (Server) Series: Pentium Pro (used in desktops and servers) • 16-bit data bus: 8086 (iAPX Series: Series: Series: Series: • Variant: Klamath (1997, 0.35 μm) 86) • Desktop/Server: i386DX Desktop/Server: P5, P54C • Desktop: Willamette (180 nm) • Desktop: Desktop 6th Generation Core i5 (Skylake-S and Skylake-H) • Alternative Names: Pentium II, PII • 8-bit data bus: 8088 (iAPX • Desktop lower-performance: i386SX Desktop/Server higher-performance: P54CQS, P54CS • Desktop higher-performance: Northwood Pentium 4 (130 nm), Northwood B Pentium 4 HT (130 nm), • Desktop higher-performance: Desktop 6th Generation Core i7 (Skylake-S and Skylake-H), Desktop 7th Generation Core i7 X (Skylake-X), • Series: Klamath (used in desktops) 88) • Mobile: i386SL, 80376, i386EX, Mobile: P54C, P54LM Northwood C Pentium 4 HT (130 nm), Gallatin (Pentium 4 Extreme Edition 130 nm) Desktop 7th Generation Core i9 X (Skylake-X), Desktop 9th Generation Core i7 X (Skylake-X), Desktop 9th Generation Core i9 X (Skylake-X) • Variant: Deschutes (1998, 0.25 to 0.18 μm) i386CXSA, i386SXSA, i386CXSB Compatibility: Pentium OverDrive • Desktop lower-performance: Willamette-128 -

The Protection of Information in Computer Systems

The Protection of Information in Computer Systems. JEROME H. SALTZER, SENIOR MEMBER, IEEE, AND MICHAEL D. SCHROEDER, MEMBER, IEEE. Invited Paper. Abstract - This tutorial paper explores the mechanics of protecting computer-stored information from unauthorized use or modification. It concentrates on those architectural structures--whether hardware or software--that are necessary to support information protection. The paper develops in three main sections. Section I describes desired functions, design principles, and examples of elementary protection and authentication mechanisms. Any reader familiar with computers should find the first section to be reasonably accessible. Section II requires some familiarity with descriptor-based computer architecture. It examines in depth the principles of modern protection architectures and the relation between capability systems and access control list systems, and ends with a brief analysis of protected subsystems and protected objects. The reader who is dismayed by either the prerequisites or the level of detail in the second section may wish to skip to Section III, which reviews the state of the art and current research projects and provides suggestions for further … Glossary: Confinement: Allowing a borrowed program to have access to data, while ensuring that the program cannot release the information. Descriptor: A protected value which is (or leads to) the physical address of some protected object. Discretionary: (In contrast with nondiscretionary.) Controls on access to an object that may be changed by the creator of the object. Domain: The set of objects that currently may be directly accessed by a principal. Encipherment: The (usually) reversible scrambling of data according to a secret transformation key, so as to make it safe for transmission or storage in a physically unprotected environment.