Issue 88, June 2007

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

The BrailleMathCodes Repository

Proceedings of the 4th International Workshop on "Digitization and E-Inclusion in Mathematics and Science 2021" (DEIMS2021), February 18–19, 2021, Tokyo. The BrailleMathCodes Repository. Paraskevi Riga, Theodora Antonakopoulou, David Kouvaras, Serafim Lentas, and Georgios Kouroupetroglou, National and Kapodistrian University of Athens, Greece, Speech and Accessibility Laboratory, Department of Informatics and Telecommunications. Abstract: Math notation for the sighted is a global language, but this is not the case with braille math, as different codes are in use worldwide. In this work, we present the design and development of a math braille-codes' repository named BrailleMathCodes. It aims to constitute a knowledge base as well as a search engine for both students who need to find a specific symbol code and the editors who produce accessible STEM educational content or, in general, the learner of math braille notation. After compiling a set of mathematical braille codes used worldwide in a database, we assigned the corresponding Unicode representation, when applicable, matched each math braille code with its LaTeX equivalent, and forwarded with Presentation MathML. Every math symbol is accompanied with a characteristic example in MathML and Nemeth. The BrailleMathCodes repository was designed following the Web Content Accessibility Guidelines. Users or learners of any code, both sighted and blind, can search for a term and read how it is rendered in various codes. The repository was implemented as a dynamic e-commerce website using Joomla! and VirtueMart. 1 Introduction: Braille constitutes a tactile writing system used by people who are visually impaired.

Guidelines and Standards for Tactile Graphics, 2010

Guidelines and Standards for Tactile Graphics, 2010. Developed as a Joint Project of the Braille Authority of North America and the Canadian Braille Authority / L'Autorité Canadienne du Braille. Published by The Braille Authority of North America. ©2011 by The Braille Authority of North America. All rights reserved. This material may be downloaded and printed, but not altered or sold. The mission and purpose of the Braille Authority of North America are to assure literacy for tactile readers through the standardization of braille and/or tactile graphics. BANA promotes and facilitates the use, teaching, and production of braille. It publishes rules, interprets, and renders opinions pertaining to braille in all existing codes. It deals with codes now in existence or to be developed in the future, in collaboration with other countries using English braille. In exercising its function and authority, BANA considers the effects of its decisions on other existing braille codes and formats; the ease of production by various methods; and acceptability to readers. For more information and resources, visit www.brailleauthority.org. Canadian Braille Authority (CBA) Members: CNIB (Canadian National Institute for the Blind); Canadian Council of the Blind. Braille Authority of North America (BANA) Members: American Council of the Blind, Inc. (ACB); American Foundation for the Blind (AFB); American Printing House for the Blind (APH); Associated Services for the Blind (ASB); Association for Education & Rehabilitation of the Blind & Visually Impaired (AER); Braille Institute of America (BIA); California Transcribers & Educators for the Blind and Visually Impaired (CTEBVI); CNIB (Canadian National Institute for the Blind); The Clovernook Center for the Blind (CCBVI); National Braille Association, Inc.

Nemeth Code Uses Some Parts of Textbook Format but Has Some Idiosyncrasies of Its Own

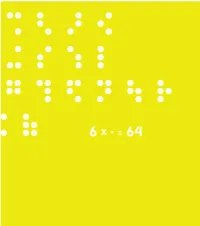

This book is a compilation of research, in “Understanding and Tracing the Problem faced by the Visually Impaired while doing Mathematics” as a Diploma project by Aarti Vashisht at the Srishti School of Art, Design and Technology, Bangalore. 6 DOTS, 64 COMBINATIONS: A Braille character is formed out of a combination of six dots. Including the blank space, sixty-four combinations are possible using one or more of these six dots. CONTENTS: Introduction; About Braille; Mathematics for the Visually Impaired; Learning Mathematics; Conclusion; People and Places; Acknowledgements. INTRODUCTION: This project tries to understand the nature of the problems that are faced by the visually impaired within the realm of mathematics. It is a summary of my understanding of the problems in this field that may be taken forward to guide those individuals who are concerned about this subject. My education in design has encouraged interest in this field. As a designer I have learnt to be aware of my community and its needs, to detect areas where design can reach out and assist, if not resolve, a problem. Thus began my search, where I sought to grasp a fuller understanding of the situation by looking at the various mediums that would help better communication. During the project I realized that more often than not work happened in individual pockets which in turn would lead to regionalization of many ideas and opportunities. Data collection got repetitive, which would delay or sometimes even hinder the process.

World Braille Usage, Third Edition

World Braille Usage, Third Edition. Perkins; International Council on English Braille; National Library Service for the Blind and Physically Handicapped, Library of Congress; UNESCO. Washington, D.C., 2013. Published by Perkins, 175 North Beacon Street, Watertown, MA, 02472, USA; International Council on English Braille, c/o CNIB, 1929 Bayview Avenue, Toronto, Ontario, Canada M4G 3E8; and National Library Service for the Blind and Physically Handicapped, Library of Congress, Washington, D.C., USA. Copyright © 1954, 1990 by UNESCO. Used by permission 2013. Printed in the United States by the National Library Service for the Blind and Physically Handicapped, Library of Congress, 2013. Library of Congress Cataloging-in-Publication Data: World braille usage. — Third edition. page cm. Includes index. ISBN 978-0-8444-9564-4. 1. Braille. 2. Blind—Printing and writing systems. I. Perkins School for the Blind. II. International Council on English Braille. III. Library of Congress. National Library Service for the Blind and Physically Handicapped. HV1669.W67 2013 411--dc23 2013013833. Contents: Foreword to the Third Edition; Acknowledgements; The International Phonetic Alphabet; References

A Rapid Evidence Assessment of the Effectiveness of Educational Interventions to Support Children and Young People with Vision Impairment

SOCIAL RESEARCH NUMBER: 39/2019. PUBLICATION DATE: 12/09/2019. A Rapid Evidence Assessment of the effectiveness of educational interventions to support children and young people with vision impairment. Mae’r ddogfen yma hefyd ar gael yn Gymraeg. This document is also available in Welsh. © Crown Copyright. Digital ISBN 978-1-83933-152-7. A Rapid Evidence Assessment of the effectiveness of educational interventions to support children and young people with vision impairment. Author(s): Graeme Douglas, Mike McLinden, Liz Ellis, Rachel Hewett, Liz Hodges, Emmanouela Terlektsi, Angela Wootten, Jean Ware*, Lora Williams*. University of Birmingham and Bangor University*. Full Research Report: Douglas, G. et al (2019). A Rapid Evidence Assessment of the effectiveness of educational interventions to support children and young people with vision impairment. Cardiff: Welsh Government, GSR report number 39/2019. Available at: https://gov.wales/rapid-evidence-assessment-effectiveness-educational-interventions-support-children-and-young-people-visual-impairment Views expressed in this report are those of the researcher and not necessarily those of the Welsh Government. For further information please contact: David Roberts, Social Research and Information Division, Welsh Government, Sarn Mynach, Llandudno Junction, LL31 9RZ, 0300 062 5485. Table of contents: 1. Introduction; 2. Methodology

Analysis of Teachers' Perceptions on Instruction of Braille Literacy in

ANALYSIS OF TEACHERS’ PERCEPTIONS ON INSTRUCTION OF BRAILLE LITERACY IN PRIMARY SCHOOLS FOR LEARNERS WITH VISUAL IMPAIRMENT IN KENYA. CHOMBA M. WA MUNYI, E83/27305/2014. A RESEARCH THESIS SUBMITTED IN PARTIAL FULFILLMENT OF THE DEGREE OF DOCTOR OF PHILOSOPHY IN THE SCHOOL OF EDUCATION, SPECIAL NEEDS EDUCATION, KENYATTA UNIVERSITY, JUNE, 2017. DECLARATION: I hereby declare that this submission is my own work and that, to the best of my knowledge and belief, it contains no material previously published or written by another person nor material which to a substantial extent has been accepted for the award of any other degree or diploma of the university or other institute of higher learning, except where due acknowledgment has been made in the text. Signature ________ Date ________ Chomba M. Wa Munyi, E83/27305/2014. Supervisors: This dissertation has been submitted for appraisal with our/my approval as University supervisor(s). Signature ________ Date ________ Dr. Margaret Murugami, Department of Special Needs Education, Kenyatta University. Signature ________ Date ________ Dr. Stephen Nzoka, Department of Special Needs Education, Kenyatta University. Signature ________ Date ________ Prof. Desmond Ozoji, Department of Special Education and Rehabilitation Sciences, University of JOS. DEDICATION: I dedicate this dissertation to all children with visual impairment in Kenya. TABLE OF CONTENTS: DECLARATION

Perpustakaan Braille Di Kota Semarang

PERPUSTAKAAN BRAILLE DI KOTA SEMARANG (Braille Library in the City of Semarang). Final project submitted for the degree of Bachelor of Architecture, by Sadhu Adwitya Adhiwiajna, 5112411017, Architecture Study Program, Faculty of Civil Engineering, Universitas Negeri Semarang, 2015. PREFACE: All praise and thanks be to Allah SWT, who has bestowed His mercy, guidance, and direction so that the author could complete this Architectural Planning and Design Program Basis (Landasan Program Perencanaan dan Perancangan Arsitektur, LP3A) for the final project Perpustakaan Braille di Kota Semarang smoothly and without any obstacle that might have disrupted its preparation. This LP3A was prepared as one of the requirements for academic graduation at Universitas Negeri Semarang and as the basis for planning the design of the Braille Library. The title the author chose for the final project is "Perpustakaan Braille di Kota Semarang". In writing this LP3A, the author also wishes to thank everyone who helped, guided, and directed the work so that it could be completed well. My thanks go to: 1. Allah SWT, who granted ease, smooth progress, and strength so that the work could be completed well. 2. Prof. Dr. Fathur Rohman, M.Hum., Rector of Universitas Negeri Semarang. 3. Dr. Nur Qudus, M.T., Dean of the Faculty of Engineering, Universitas Negeri Semarang. 4. Drs. Sucipto, M.T., Head of the Department of Civil Engineering, Universitas Negeri Semarang. 5. Ir. Bambang Bambang Setyohadi K.P, M.T., Head of the Undergraduate Architecture Study Program, Universitas Negeri Semarang, who provided input, direction, and ideas during the course of study.

Using Information Communications Technologies To

USING INFORMATION COMMUNICATIONS TECHNOLOGIES TO IMPLEMENT UNIVERSAL DESIGN FOR LEARNING. A working paper from the Global Reading Network for enhancing skills acquisition for students with disabilities. Authors: David Banes, Access and Inclusion Services; Anne Hayes, Inclusive Development Partners; Christopher Kurz, Rochester Institute of Technology; Raja Kushalnagar, Gallaudet University. With support from Ann Turnbull, Jennifer Bowser Gerst, Josh Josa, Amy Pallangyo, and Rebecca Rhodes. This paper was made possible by the support of the American people through the United States Agency for International Development (USAID). The paper was prepared for USAID’s Building Evidence and Supporting Innovation to Improve Primary Grade Reading Assistance for the Office of Education (E3/ED), University Research Co., LLC, Contract No. AID-OAA-M-14-00001, MOBIS#: GS-10F-0182T. August 2020. RIGHTS AND PERMISSIONS: Unless otherwise noted, Using Information Communications Technologies to Implement Universal Design for Learning is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License (CC BY-NC-SA 4.0). Under this license, users are free to share and adapt this material in any medium or format under the following conditions. To view a copy of this license, visit https://creativecommons.org/licenses/by-nc-sa/4.0/. Attribution: You must give appropriate credit, provide a link to the licensed material, and indicate if changes were made. You may do so in any reasonable manner, but not in any way that suggests the licensor endorses you or your use. A suggested citation appears at the bottom of this page, and please also include this note: This document was developed by “Reading Within Reach,” through the support of the U.S.

Guidance for Transcription Using the Nemeth Code Within UEB Contexts

Guidance for Transcription Using the Nemeth Code within UEB Contexts. Approved April 2018; revised April 2018. When Nemeth Code is to be used for mathematics and science, the actual math and technical notation is presented in Nemeth Code or the Nemeth-based Chemistry Code, as applicable, while the surrounding text is presented in UEB. UEB symbols are not used within the switch indicators for Nemeth Code. No contractions are to be used in Nemeth Code. Switch Indicators for Nemeth Code: Opening Nemeth Code indicator _% ; Nemeth Code terminator _: ; Single-word switch indicator ,' . These indicators should be listed on the Special Symbols page in braille order. The Nemeth Code terminator and single-word switch indicator are Nemeth symbols. Following the definition of both of these symbols, insert this phrase: (Nemeth Code symbol). Basic Guidance on When to Switch: 1. Any mathematical expression or chemical formula is transcribed in Nemeth Code. This includes fragmentary expressions (parts of formulas, incomplete equations, and the like), including isolated signs of operation or comparison. (See 3a below for exceptions.) Slash meaning per, over, or divided by is mathematical and is part of a fraction. Fractions are transcribed in Nemeth Code. Example: Multiplication is another commutative operation because 3(5) is the same as 5(3). ⠀⠀,multiplica;n is ano!r commutative op]a;n 2c _% #3(5) _: is ! same z _% #5(3) _:4 2. All other text, including punctuation that is logically associated with surrounding sentences, should be done in UEB.

Guerrero-Dissertation-2017

An Investigation into the Commonalities of Students Who Are Braille Readers in a Southwestern State Who Possess Comprehensive Literacy Skills, by Bertha Avila Guerrero, MEd. A Dissertation in Special Education, Submitted to the Graduate Faculty of Texas Tech University in Partial Fulfillment of the Requirements for the Degree of DOCTOR OF EDUCATION. Approved: Nora Griffin-Shirley, PhD, Co-chair of Committee; Rona Pogrund, PhD, Co-chair of Committee; Lee Deumer, PhD, Committee Member; Mark Sheridan, PhD, Dean of the Graduate School. August, 2017. Copyright © August, 2017, Bertha Avila Guerrero. ACKNOWLEDGEMENTS: First and foremost, I extend my eternal gratitude to my Prince of Peace, my blue-eyed Jesus, who instilled in me a love of learning and a desire to achieve more than what was expected of me. Two phenomenal women were my first introduction to what an extraordinary teacher of students with visual impairments (TVI) should be. Adalaide Ratner, who planted the seed of literacy in me at an early age, thank you for showing me that my future would be limited without the dots. Thanks to Ora Potter who watered the seed and propelled me further into independence with her encouragement and no-nonsense attitude about blindness. I am grateful for my beautiful Little Lady Godiva who patiently waited through endless hours at the library and lying on her favorite chair in my study while I worked; never complaining when the embosser woke her up and never grumbling about the late nights and early mornings. I thank Amador, my husband, my friend, research assistant, and driver, with all my heart and soul.

Process-Driven Math

Practice Perspective. Process-Driven Math: An Auditory Method of Mathematics Instruction and Assessment for Students Who Are Blind or Have Low Vision. Ann P. Gulley, Luke A. Smith, Jordan A. Price, Logan C. Prickett, and Matthew F. Ragland. Mathematics can be a formidable roadblock for students who are visually impaired (that is, those who are blind or have low vision). Grade equivalency comparisons between students who are visually impaired and those who are sighted are staggering. According to the U.S. Department of Education, 75% of all visually impaired students are more than a full grade level behind their sighted peers in mathematics, and 20% of them are five or more grade levels behind their sighted peers in the subject (Blackorby, Chorost, Garza, & … denied access to many career paths, especially those in science, technology, engineering, and mathematics. Process-Driven Math is a fully audio method of mathematics instruction and assessment that was created at Auburn University at Montgomery, Alabama, to meet the needs of one particular student, Logan. He was blind, mobility impaired, and he could not speak above a whisper. Logan was not able to use traditional low vision tools like braille and Nemeth code because he lacked the sensitivity in his fingers required to read the raised dots. Logan’s need for tools that would enable him to perform the rigorous algebraic manipulations that are common in mathematics courses led to the development of Process-Driven Math. With this method, he was able to succeed in both college algebra and pre-calculus with trigonometry. These tools may help other visually impaired students who are not succeeding in mathematics because they lack access to, or knowledge of, the Nemeth code.

UnicodeMath: A Nearly Plain-Text Encoding of Mathematics, Version 3.1, Murray Sargent III, Microsoft Corporation, 16-Nov-16

Unicode Nearly Plain Text Encoding of Mathematics. UnicodeMath: A Nearly Plain-Text Encoding of Mathematics, Version 3.1. Murray Sargent III, Microsoft Corporation, 16-Nov-16. Contents: 1. Introduction; 2. Encoding Simple Math Expressions; 2.1 Fractions; 2.2 Subscripts and Superscripts; 2.3 Use of the Blank (Space) Character; 3. Encoding Other Math Expressions; 3.1 Delimiters; 3.2 Literal Operators; 3.3 Prescripts and Above/Below Scripts; 3.4 n-ary Operators; 3.5 Mathematical Functions; 3.6 Square Roots and Radicals; 3.7 Enclosures