ASC Training P6 Microarchitecture Tuning Guide

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Intel® Architecture Instruction Set Extensions and Future Features Programming Reference

Intel® Architecture Instruction Set Extensions and Future Features Programming Reference 319433-037 MAY 2019 Intel technologies features and benefits depend on system configuration and may require enabled hardware, software, or service activation. Learn more at intel.com, or from the OEM or retailer. No computer system can be absolutely secure. Intel does not assume any liability for lost or stolen data or systems or any damages resulting from such losses. You may not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. This document contains information on products, services and/or processes in development. All information provided here is subject to change without notice. Intel does not guarantee the availability of these interfaces in any future product. Contact your Intel representative to obtain the latest Intel product specifications and roadmaps. Copies of documents which have an order number and are referenced in this document, or other Intel literature, may be obtained by calling 1-800-548-4725, or by visiting http://www.intel.com/design/literature.htm. Intel, the Intel logo, Intel Deep Learning Boost, Intel DL Boost, Intel Atom, Intel Core, Intel SpeedStep, MMX, Pentium, VTune, and Xeon are trademarks of Intel Corporation in the U.S. -

A Superscalar Out-Of-Order X86 Soft Processor for FPGA

A Superscalar Out-of-Order x86 Soft Processor for FPGA. Henry Wong, University of Toronto, Intel [email protected] June 5, 2019, Stanford University EE380. Hi! ● CPU architect, Intel Hillsboro ● Ph.D., University of Toronto ● Today: x86 OoO processor for FPGA (Ph.D. work) – Motivation – High-level design and results – Microarchitecture details and some circuits. FPGA: Field-Programmable Gate Array ● Is a digital circuit (logic gates and wires) ● Is field-programmable (at power-on, not in the fab) ● Pre-fab everything you’ll ever need – 20x area, 20x delay cost – Circuit building blocks are somewhat bigger than logic gates [Figure: array of 6-LUT blocks]. FPGA Soft Processors ● FPGA systems often have software components – Often running on a soft processor ● Need more performance? – Parallel code and hardware accelerators need effort – Less effort if soft processors got faster -

Multiprocessing Contents

Multiprocessing Contents: 1 Multiprocessing: 1.1 Pre-history; 1.2 Key topics; 1.2.1 Processor symmetry; 1.2.2 Instruction and data streams; 1.2.3 Processor coupling; 1.2.4 Multiprocessor Communication Architecture; 1.3 Flynn’s taxonomy; 1.3.1 SISD multiprocessing; 1.3.2 SIMD multiprocessing; 1.3.3 MISD multiprocessing; 1.3.4 MIMD multiprocessing; 1.4 See also; 1.5 References. 2 Computer multitasking: 2.1 Multiprogramming; 2.2 Cooperative multitasking; 2.3 Preemptive multitasking; 2.4 Real time; 2.5 Multithreading; 2.6 Memory protection; 2.7 Memory swapping; 2.8 Programming; 2.9 See also; 2.10 References -

2Nd Generation Intel® Core™ Processor Family Mobile with ECC

2nd Generation Intel® Core™ Processor Family Mobile with ECC Datasheet Addendum May 2012 Revision 002 Document Number: 324855-002 INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. UNLESS OTHERWISE AGREED IN WRITING BY INTEL, THE INTEL PRODUCTS ARE NOT DESIGNED NOR INTENDED FOR ANY APPLICATION IN WHICH THE FAILURE OF THE INTEL PRODUCT COULD CREATE A SITUATION WHERE PERSONAL INJURY OR DEATH MAY OCCUR. A "Mission Critical Application" is any application in which failure of the Intel Product could result, directly or indirectly, in personal injury or death. SHOULD YOU PURCHASE OR USE INTEL'S PRODUCTS FOR ANY SUCH MISSION CRITICAL APPLICATION, YOU SHALL INDEMNIFY AND HOLD INTEL AND ITS SUBSIDIARIES, SUBCONTRACTORS AND AFFILIATES, AND THE DIRECTORS, OFFICERS, AND EMPLOYEES OF EACH, HARMLESS AGAINST ALL CLAIMS COSTS, DAMAGES, AND EXPENSES AND REASONABLE ATTORNEYS' FEES ARISING OUT OF, DIRECTLY OR INDIRECTLY, ANY CLAIM OF PRODUCT LIABILITY, PERSONAL INJURY, OR DEATH ARISING IN ANY WAY OUT OF SUCH MISSION CRITICAL APPLICATION, WHETHER OR NOT INTEL OR ITS SUBCONTRACTOR WAS NEGLIGENT IN THE DESIGN, MANUFACTURE, OR WARNING OF THE INTEL PRODUCT OR ANY OF ITS PARTS. -

Travelmate P6 Series Product Sheet

Acer recommends Windows 10 Pro. TravelMate P6. Wi-Fi 6. Microsoft Teams. SPECIFICATIONS: TravelMate P6 P614-51-G2_51G-G2. Product views: 1. Infrared LED 2. Webcam 3. 14" display 4. DC-in jack 5. HDMI® port 6. USB port 7. USB Type-C / Thunderbolt 3 port 8. Headset/speaker jack 9. Smart Card reader slot (optional) 10. Keyboard 11. Touchpad 12. Power button with fingerprint reader 13. SIM card slot (optional) 14. microSD card reader (optional) 15. USB port 16. Ethernet port 17. Kensington lock slot. Operating system: Windows 10 Pro 64-bit (Acer recommends Windows 10 Pro for business.), Windows 10 Home 64-bit. CPU and chipset: Intel® Core™ i7-10510U processor, Intel® Core™ i5-10210U processor. Memory: Dual-channel DDR4 SDRAM support, up to 24 GB of DDR4 system memory, 8 GB / 4 GB of onboard DDR4 system memory. Display: 14.0" display with IPS (In-Plane Switching) technology, Full HD 1920 x 1080, high-brightness (300 nits) Acer ComfyView™ LED-backlit TFT LCD, 16:9 aspect ratio, 100% sRGB color gamut, wide viewing angle up to 170 degrees, stylish and slim design, mercury free, environment friendly. Graphics: Intel® UHD Graphics 620 (P614-51-G2 only), NVIDIA® GeForce® MX250 (P614-51G-G2 only). Audio: Four built-in microphones; built-in discrete smart amplifiers to deliver powerful sound; compatible with Cortana with Voice for up to 4 meters; Acer TrueHarmony technology for lower distortion, wider frequency range, headphone-like audio and powerful sound; certified for Microsoft Teams. Storage: Solid state -

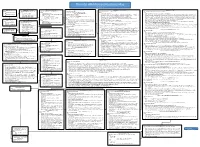

The Intel X86 Microarchitectures Map Version 2.0

The Intel x86 Microarchitectures Map Version 2.0 P6 (1995, 0.50 to 0.35 μm) 8086 (1978, 3 µm) 80386 (1985, 1.5 to 1 µm) P5 (1993, 0.80 to 0.35 μm) NetBurst (2000 , 180 to 130 nm) Skylake (2015, 14 nm) Alternative Names: i686 Series: Alternative Names: iAPX 386, 386, i386 Alternative Names: Pentium, 80586, 586, i586 Alternative Names: Pentium 4, Pentium IV, P4 Alternative Names: SKL (Desktop and Mobile), SKX (Server) Series: Pentium Pro (used in desktops and servers) • 16-bit data bus: 8086 (iAPX Series: Series: Series: Series: • Variant: Klamath (1997, 0.35 μm) 86) • Desktop/Server: i386DX Desktop/Server: P5, P54C • Desktop: Willamette (180 nm) • Desktop: Desktop 6th Generation Core i5 (Skylake-S and Skylake-H) • Alternative Names: Pentium II, PII • 8-bit data bus: 8088 (iAPX • Desktop lower-performance: i386SX Desktop/Server higher-performance: P54CQS, P54CS • Desktop higher-performance: Northwood Pentium 4 (130 nm), Northwood B Pentium 4 HT (130 nm), • Desktop higher-performance: Desktop 6th Generation Core i7 (Skylake-S and Skylake-H), Desktop 7th Generation Core i7 X (Skylake-X), • Series: Klamath (used in desktops) 88) • Mobile: i386SL, 80376, i386EX, Mobile: P54C, P54LM Northwood C Pentium 4 HT (130 nm), Gallatin (Pentium 4 Extreme Edition 130 nm) Desktop 7th Generation Core i9 X (Skylake-X), Desktop 9th Generation Core i7 X (Skylake-X), Desktop 9th Generation Core i9 X (Skylake-X) • Variant: Deschutes (1998, 0.25 to 0.18 μm) i386CXSA, i386SXSA, i386CXSB Compatibility: Pentium OverDrive • Desktop lower-performance: Willamette-128 -

Intel Xeon Processor Can Be Identified by the Following Values

Intel® Xeon® Processor Specification Update December 2006 Notice: The Intel® Xeon® processor may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are documented in this specification update. Document Number: 249678-056 INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY RELATING TO SALE AND/OR USE OF INTEL PRODUCTS, INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT, OR OTHER INTELLECTUAL PROPERTY RIGHT. Intel products are not intended for use in medical, life saving, life sustaining, critical control or safety systems, or in nuclear facility applications. Intel may make changes to specifications and product descriptions at any time, without notice. Designers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined.” Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them. Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order. Copies of documents which have an ordering number and are referenced in this document, or other Intel literature may be obtained by calling 1-800-548-4725 or by visiting Intel's website at http://developer.intel.com/design/litcentr. Intel®, the Intel® logo, Pentium®, Pentium® III Xeon™, Celeron, Intel® NetBurst™ and Intel® Xeon™ are trademarks or registered trademarks of Intel® Corporation or its subsidiaries in the United States and other countries. -

Intel's Haswell CPU Microarchitecture

Intel’s Haswell CPU Microarchitecture www.realworldtech.com/haswell-cpu/ David Kanter Over the last 5 years, high performance microprocessors have changed dramatically. One of the most significant influences is the increasing level of integration that is enabled by Moore’s Law. In the context of semiconductors, integration is an ever-present fact of life, reducing system power consumption and cost and increasing performance. The latest incarnation of this trend is the System-on-a-Chip (SoC) philosophy and design approach. SoCs have been the preferred solution for extremely low power systems, such as 1W mobile phone chips. However, high performance microprocessors span a much wider design space, from 15W notebook chips to 150W server sockets and the adoption of SoCs has been slower because of the more diverse market. Sandy Bridge was a dawn of a new era for Intel, and the first high-end x86 microprocessor that could truly be described as an SoC, integrating the CPU, GPU, Last Level Cache and system I/O. However, Sandy Bridge largely targets conventional PC markets, such as notebooks, desktops, workstations and servers, with a smattering of embedded applications. The competition for Sandy Bridge is largely AMD’s Bulldozer family, which has suffered from poor performance in the first few iterations. The 32nm Sandy Bridge CPU introduced AVX, a new instruction extension for floating point (FP) workloads and fundamentally changed almost every aspect of the pipeline, from instruction fetching to memory accesses. The system architecture was radically revamped, with a coherent ring interconnect for on-chip communication, a higher bandwidth Last Level Cache (LLC), integrated graphics and I/O, and comprehensive power management. -

Tenant/Landlord Special Issue

The = HoUsing JUsTiCe sPeCiAl ediTion = IndypendenT #246: may 2019 • IndypendenT.org marchIng on alBany p6 play ThIs Board game p12 where The power lIes p16 Take down The landlords wIll ThIs Be The year ThaT TenanTs wIn sweepIng renT law reforms? coverage sTarTs on p6 ROB LAQuiNTA MAY 18. WHO SAYS RADICALS CAN’T HAVE NICE THINGS? TURN TO PAGE 23 FOR MORE... 2 CommUniTY The IndypendenT CAlendAr THE INDYPENDENT, INC. 388 Atlantic Avenue, 2nd Floor Brooklyn, NY 11217 212-904-1282 www.indypendent.org Twitter: @Theindypendent facebook.com/Theindypendent BOARD OF DIRECTORS: Ellen Davidson, Anna Gold, THU MAY 9 BRATHWAITE: BLACK IS BEAU- Alina Mogilyanskaya, Ann 5PM–8PM • FREE TIFUL Schneider, John Tarleton ART OPENiNG: ON This monograph tells the story GENTRiFiCATiON: of a key but under-recognized EDITOR-IN-CHIEF: mAY Mi CASA ES Su fi gure of the second Harlem Re- John Tarleton CASA naissance and a popularizer of ASSOCIATE EDITOR: THRU MAY 26 Set in a historic and archi- the phrase “black is beautiful.” Peter Rugh TuE–SuN, 12PM–7PM • FREE tectural landmark, this group ex- SCHOMBURG CENTER FOR RE- EXHiBiTiON: jOAN CORNELLÀ: hibition investigates notions of SEARCH IN BLACK CULTURE CONTRIBUTING EDITORS: KEEP iT REAL home (metaphorical and literal), 515 Malcolm X Blvd., Mnhtn Ellen Davidson, Alina Presenting the unique dark and belonging, displacement, street Mogilyanskaya, Nicholas unsettling irony of the interna- life, urban renewal, gentrifi ca- THU MAY 16 Powers, Steven Wishnia tionally renowned Catalan artist tion and activism. On view May 7PM–1AM • $12 and cartoonist. With no shame 9 to june 6, by appointment MuSiC: HiRS COLLECTivE, ILLUSTRATION DIRECTOR: or fear of offending the viewer, only: 646-541-5357. -

The Intel X86 Microarchitectures Map Version 2.2

The Intel x86 Microarchitectures Map Version 2.2 P6 (1995, 0.50 to 0.35 μm) 8086 (1978, 3 µm) 80386 (1985, 1.5 to 1 µm) P5 (1993, 0.80 to 0.35 μm) NetBurst (2000 , 180 to 130 nm) Skylake (2015, 14 nm) Alternative Names: i686 Series: Alternative Names: iAPX 386, 386, i386 Alternative Names: Pentium, 80586, 586, i586 Alternative Names: Pentium 4, Pentium IV, P4 Alternative Names: SKL (Desktop and Mobile), SKX (Server) Series: Pentium Pro (used in desktops and servers) • 16-bit data bus: 8086 (iAPX Series: Series: Series: Series: • Variant: Klamath (1997, 0.35 μm) 86) • Desktop/Server: i386DX Desktop/Server: P5, P54C • Desktop: Willamette (180 nm) • Desktop: Desktop 6th Generation Core i5 (Skylake-S and Skylake-H) • Alternative Names: Pentium II, PII • 8-bit data bus: 8088 (iAPX • Desktop lower-performance: i386SX Desktop/Server higher-performance: P54CQS, P54CS • Desktop higher-performance: Northwood Pentium 4 (130 nm), Northwood B Pentium 4 HT (130 nm), • Desktop higher-performance: Desktop 6th Generation Core i7 (Skylake-S and Skylake-H), Desktop 7th Generation Core i7 X (Skylake-X), • Series: Klamath (used in desktops) 88) • Mobile: i386SL, 80376, i386EX, Mobile: P54C, P54LM Northwood C Pentium 4 HT (130 nm), Gallatin (Pentium 4 Extreme Edition 130 nm) Desktop 7th Generation Core i9 X (Skylake-X), Desktop 9th Generation Core i7 X (Skylake-X), Desktop 9th Generation Core i9 X (Skylake-X) • New instructions: Deschutes (1998, 0.25 to 0.18 μm) i386CXSA, i386SXSA, i386CXSB Compatibility: Pentium OverDrive • Desktop lower-performance: Willamette-128 -

Itanium and Vnuma

Virtualisation Case Study: Itanium and vNUMA. Matthew Chapman [email protected] ITANIUM ➜ High-performance processor architecture ➜ Also known as IA-64 ➜ Joint venture between HP and Intel ➜ Not the same instruction set as IA-32/AMD64/EM64T ➜ Very good floating-point performance. 2000: Itanium “Merced”, 180 nm, up to 800 MHz, 4 MB cache. 2002: Itanium II “McKinley”, 180 nm, up to 1 GHz, 3 MB cache. 2003: Itanium II “Madison”, 130 nm, up to 1.6 GHz, 6 MB cache. 2004: Itanium II “Madison 9M”, 130 nm, up to 1.67 GHz, 9 MB cache. 2006: Itanium II “Montecito”, 90 nm, dual-core, up to 1.6 GHz, 24 MB cache. 2008: “Tukwila”, 65 nm, quad-core, up to 2.5 GHz? EXPLICITLY PARALLEL INSTRUCTION-SET COMPUTING (EPIC) ➜ Goal to increase instructions per cycle ➜ Itanium can have similar performance to x86 at a lower clock speed ➜ Based on Very Long Instruction Word (VLIW) ➜ Explicit parallelism in instruction set ➜ Simplified instruction decode and issue ➜ Scheduling decisions made by compiler. [Figure: a VLIW instruction and EPIC instruction bundles (MI;I bundle, MFI bundle) issuing to memory, branch, integer and floating-point execution units.] EXAMPLE Load and add three numbers in assembly code: ld8 r4 = [r1] ld8 r5 = [r2] ld8 r6 = [r3] ;; add r7 = r4, r5 ;; add r8 = r6, r7 ;; Resulting instructions: MMI ld8 r4=[r1] ld8 r5=[r2] nop.i 0 M;MI; ld8 r6 = [r3] ;; add r7=r4,r5 // Arithmetic on M too nop.i 0 ;; M;MI add r8 = r6, r7 -

P6: Microarchitecture

The Pentium® II/III Processor: “Compiler on a Chip”. Ronny Ronen, Senior Principal Engineer, Director of Architecture Research, Intel Labs - Haifa, Intel Corporation. Tel Aviv University, January 18, 2005. Agenda: • Goal, Expectations… • General Information • µarchitecture basics • Pentium® Pro Processor µarchitecture • SW aspects. Technology Profile. Pentium Pro - 1995: • Core @200MHz • 256K L2 on package, @200MHz • Performance: 8.09 SPECint95, 6.70 SPECfp95 • 0.35 µm BiCMOS • 5.5M transistors • 195 sq mm (14x14) • 3.3V, 11.2A • 28.1W / 35.0W. Pentium-II - 1998: • Core @333MHz • 512KB L2 in SEC @167MHz • Performance: 12.8 SPECint95, 9.14 SPECfp95 (P55C: 7.12/5.21) • 0.25 µm CMOS process • 7.5M transistors. Pentium-III - 1999: • Core @600MHz • 512KB L2 @ ???MHz • Performance: 24.0 SPECint95, 15.9 SPECfp95 • 0.25 µm CMOS process • ???M transistors. Technology Profile (cont.). Pentium-III - 2000 (Coppermine): • Core @1000MHz • 256KB L2 on chip @ 1000MHz • Performance: >46 SPECint95, >20 SPECfp95 • 0.18 µm CMOS process • ~20M transistors. Pentium-III - 2002 (Tualatin): • Core @1400MHz • 512KB L2 on chip @ 1400MHz • Performance (estimated): >60 SPECint95, >30 SPECfp95 • 0.13 µm CMOS process • ~44M transistors. Pentium M Processor 2003 (Banias): • Core @1800MHz. Pentium M Processor 2004 (Dothan): • Core @2000MHz • 1024KB L2 on chip @