Recognition of Baybayin Symbols (Ancient Pre-Colonial Philippine Writing System) Using Image Processing

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

UAX #44: Unicode Character Database

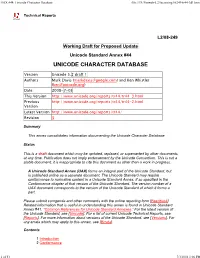

Technical Reports L2/08-249
Working Draft for Proposed Update Unicode Standard Annex #44
UNICODE CHARACTER DATABASE
Version: Unicode 5.2 draft 1
Authors: Mark Davis ([email protected]) and Ken Whistler ([email protected])
Date: 2008-7-03
This Version: http://www.unicode.org/reports/tr44/tr44-3.html
Previous Version: http://www.unicode.org/reports/tr44/tr44-2.html
Latest Version: http://www.unicode.org/reports/tr44/
Revision: 3

Summary: This annex consolidates information documenting the Unicode Character Database.

Status: This is a draft document which may be updated, replaced, or superseded by other documents at any time. Publication does not imply endorsement by the Unicode Consortium. This is not a stable document; it is inappropriate to cite this document as other than a work in progress. A Unicode Standard Annex (UAX) forms an integral part of the Unicode Standard, but is published online as a separate document. The Unicode Standard may require conformance to normative content in a Unicode Standard Annex, if so specified in the Conformance chapter of that version of the Unicode Standard. The version number of a UAX document corresponds to the version of the Unicode Standard of which it forms a part. Please submit corrigenda and other comments with the online reporting form [Feedback]. Related information that is useful in understanding this annex is found in Unicode Standard Annex #41, “Common References for Unicode Standard Annexes.” For the latest version of the Unicode Standard, see [Unicode]. For a list of current Unicode Technical Reports, see [Reports].

Tungumál, Letur Og Einkenni Hópa

Tungumál, letur og einkenni hópa (Language, Lettering, and Group Identity). Is lettering indispensable in groups' struggle against dominant forces? From Tifinagh and rune carvers to Pixação. Þorleifur Kamban Þrastarson. Final thesis for a BA degree in Graphic Design, Listaháskóli Íslands (Iceland University of the Arts), Department of Design and Architecture, December 2016. Supervisor: Óli Gneisti Sóleyjarson. This is a 6-credit final thesis for a BA degree in Graphic Design.

This thesis attempts to support the claim that lettering is important and can, under certain circumstances, even be one of the most important weapons in groups' struggle for their existence, identity, and place in society. By looking at three different examples, Tifinagh, runic script, and Pixação, each from its own place, culture, and period, the aim is to show how the history of lettering and typography has been intertwined with and shaped by human society, and has gone hand in hand with the identity of nations and of groups of people who identify with one another in one way or another. Group identity often forms as a response to external forces that threaten the group's culture, wealth, or right to exist. Groups use different typefaces to express themselves, connect, and convey information. It matters not only what you write but also how, by what methods, and on what material. The message lies in the lettering itself, not in its content. Lettering is the visual form of our information system, and the use of written language and lettering has never been greater in the world, nor has literacy. Written language and lettering are completely interwoven and cannot be separated from each other. Written language cannot be conveyed except in lettering, and the two concepts are therefore often conflated. In light of the examination of these three examples, I conclude that lettering plays, and has played, an important role in the identity of nations and groups. Lettering can, together with language, help to maintain, create, or destroy culture and cultural connections

A Translation of the Malia Altar Stone

MATEC Web of Conferences 125, 05018 (2017), DOI: 10.1051/matecconf/201712505018, CSCC 2017. A Translation of the Malia Altar Stone. Peter Z. Revesz, Department of Computer Science, University of Nebraska-Lincoln, Lincoln, NE, 68588, USA.

Abstract. This paper presents a translation of the Malia Altar Stone inscription (CHIC 328), which is one of the longest known Cretan Hieroglyph inscriptions. The translation uses a synoptic transliteration to several scripts that are related to the Malia Altar Stone script. The synoptic transliteration strengthens the derived phonetic values and allows avoiding certain errors that would result from reliance on just a single transliteration. The synoptic transliteration is similar to a multiple alignment of related genomes in bioinformatics in order to derive the genetic sequence of a putative common ancestor of all the aligned genomes.

1 Introduction. Cretan Hieroglyph is a writing system that existed in eastern Crete c. 2100 – 1700 BC [13, 14, 25]. The full decipherment of Cretan Hieroglyphs requires a consistent translation of all known Cretan Hieroglyph texts not just the translation of some examples. In particular, many authors have suggested translations for the Phaistos Disk, the most famous and longest Cretan Hieroglyph inscription, but in general they were unable to show that

symbols. These attempts so far were not successful in deciphering the later two scripts. Using ideas and methods from bioinformatics, Revesz [20] analyzed the evolutionary relationships within the Cretan script family, which includes the following scripts: Cretan Hieroglyph, Linear A, Linear B [6], Cypriot, Greek, Phoenician, South Arabic, Old Hungarian [9, 10], which is also called rovásírás in Hungarian and also written sometimes as Rovas in English language publications, and Tifinagh.

Documenting Endangered Alphabets

Documenting endangered alphabets. Industry Focus. Tim Brookes.

Three years ago, acting on a notion so whimsical I assumed it was a kind of presenile monomania, I began carving endangered alphabets. The disclaimers start right away. I’m not a linguist, an anthropologist, a cultural historian or even a woodworker. I’m a writer, but I had recently started carving signs for friends and family, and I stumbled on Omniglot.com, an online encyclopedia of the world’s writing systems, and several things had struck me forcibly. For a start, even though the world has more than 6,000 languages (some of which will be extinct even by the time this article goes to press), it has fewer than 100 scripts, and perhaps a third of those are endangered. Working with a set of gouges and a paintbrush, I started to document as many of these scripts as I could find, creating three exhibitions and several dozen individual pieces that depicted words, phrases, sentences or poems in Syriac, Bugis, Baybayin, Samaritan, Makassarese, Balinese, Javanese, Batak, Sui, Nom, Cherokee, Inuktitut, Glagolitic, Vai, Bassa Vah, Tai Dam, Pahauh

[Figure 1: Tifinagh.]

passing them on as a series of items for consideration and discussion. For example, what does a written language, any written language, look like? The Endangered Alphabets highlight this question in a number of interesting ways. As the forces of globalism erode scripts such as these, the number of people who can write them dwindles, and the range of examples of each script is reduced. My carvings may well be the only examples of, say, Samaritan script or Tifinagh that my visitors ever see.

A Message from Afar: Fact Sheet 3 (PDF)

3 What’s the Message? Here’s your signal The detection of a signal from another world would be a most remarkable moment in human history. However, if we detect such a signal, is it just a beacon from their technology, without any content, or does it contain information or even a message? Does it resemble sound, or is it like interstellar e-mail? Can we ever understand such a message? This appears to be a tremendous challenge, given that we still have many scripts from our own antiquity that remain undeciphered, despite many serious attempts, over hundreds of years. – And we know far more about humanity than about extra-terrestrial intelligence… We are facing all the complexities involved in understanding and glimpsing the intellect of the author, while the world’s expectations demand immediacy of information. So, where do we begin? Structure and language Information stands out from randomness, it is based on structures. The problem goal we face, after we detect a signal, is to first separate out those information-carrying signals from other phenomena, without being able to engage in a dialogue, and then to learn something about the structure of their content in the passing. This means that we need a suitable filter. We need a way of separating out the interesting stuff; we need a language detector. While identifying the location of origin of a candidate signal can rule out human making, its content could involve a vast array of possible structures, some of which may be beyond our knowledge or imagination; however, for identifying intelligence that shares any pattern with our way of processing and transmitting information, the collective knowledge and examples here on Earth are a good starting point. -

Senate Bill No. 2440: An Act Declaring "Baybayin" as the National Writing System of the Philippines

SIXTEENTH CONGRESS OF THE REPUBLIC OF THE PHILIPPINES, Second Regular Session ('14 OCT 28 P6:23). SENATE S.B. NO. 2440. Introduced by SENATOR LOREN LEGARDA. AN ACT DECLARING "BAYBAYIN" AS THE NATIONAL WRITING SYSTEM OF THE PHILIPPINES, PROVIDING FOR ITS PROMOTION, PROTECTION, PRESERVATION AND CONSERVATION, AND FOR OTHER PURPOSES.

EXPLANATORY NOTE. The "Baybayin" is an ancient Philippine system of writing composed of a set of 17 cursive characters or letters which represents either a single consonant or vowel or a complete syllable. It started to spread throughout the country in the 16th century. However, today, there is a shortage of proof of the existence of "baybayin" scripts due to the fact that prior to the arrival of Spaniards in the Philippines, Filipino scribers would engrave mainly on bamboo poles or tree barks and frail materials that decay quickly. To recognize our traditional writing systems which are objects of national importance and considered as a National Cultural Treasure, the "Baybayin", an indigenous national writing script which will be Tagalog-based national written language and one of the existing native written languages, is hereby declared collectively as the "Baybayin" National Writing Script of the country. Hence, it should be promoted, protected, preserved and conserved for posterity for the next generation. This bill, therefore, seeks to declare "baybayin" as the National Writing Script of the Philippines. It shall mandate the NCCA to promote, protect, preserve and conserve the script through the following (a)

Language Ideologies in Morocco

Connecticut College, Digital Commons @ Connecticut College. Anthropology Department Honors Papers, Anthropology Department, 2014. Language Ideologies in Morocco. Sybil Bullock, Connecticut College, [email protected]

Recommended Citation: Bullock, Sybil, "Language Ideologies in Morocco" (2014). Anthropology Department Honors Papers. Paper 11. http://digitalcommons.conncoll.edu/anthrohp/11

The views expressed in this paper are solely those of the author.

2014 Honors Thesis, Anthropology Department, Connecticut College. Thesis Advisor: Petko Ivanov. First Reader: Christopher Steiner. Second Reader: Jeffrey Cole.

Table of Contents
Dedication
Acknowledgments
Abstract
Chapter 1: Introduction
Chapter 2: The Role of Language in Nation-Building
Chapter 3: The Myth of Monolingualism
Chapter 4: Language or Dialect?

ICANN Registry Request Service: Association Relative à la Télévision Européenne G.E.I.E. (gTLD: .arte)

ICANN Registry Request Service. Ticket ID: S1K9W-8K6J2. Registry Name: Association Relative à la Télévision Européenne G.E.I.E. gTLD: .arte. Status: ICANN Review. Status Date: 2015-10-27 17:43:58. Print Date: 2015-10-27 17:44:13.

Proposed Service. Name of Proposed Service: Technical description of Proposed Service: ARTE registry operator, Association Relative à la Télévision Européenne G.E.I.E. is applying to add IDN domain registration services. ARTE IDN domain name registration services will be fully compliant with IDNA 2008, as well as ICANN's Guidelines for implementation of IDNs. The language tables are submitted separately via the GDD portal together with IDN policies. ARTE is a brand TLD, as defined by the Specification 13, and as such only the registry and its affiliates are eligible to register .ARTE domain names.

The full list of languages/scripts is: Azerbaijani language, Belarusian language, Bulgarian language, Chinese language, Croatian language, French language, Greek, Modern language, Japanese language, Korean language, Kurdish language, Macedonian language, Moldavian language, Polish language, Russian language, Serbian language, Spanish language, Swedish language, Ukrainian language, Arabic script, Armenian script, Avestan script, Balinese script, Bamum script, Batak script, Bengali script, Bopomofo script, Brahmi script, Buginese

The Cretan Script Family Includes the Carian Alphabet

University of Nebraska - Lincoln, DigitalCommons@University of Nebraska - Lincoln. CSE Journal Articles, Computer Science and Engineering, Department of, 2017. The Cretan Script Family Includes the Carian Alphabet. Peter Z. Revesz, University of Nebraska-Lincoln, [email protected]

Revesz, Peter Z., "The Cretan Script Family Includes the Carian Alphabet" (2017). CSE Journal Articles. 196. https://digitalcommons.unl.edu/csearticles/196

MATEC Web of Conferences 125, 05019 (2017), DOI: 10.1051/matecconf/201712505019, CSCC 2017. Peter Z. Revesz, Department of Computer Science, University of Nebraska-Lincoln, Lincoln, NE, 68588, USA.

Abstract. The Cretan Script Family is a set of related writing systems that have a putative origin in Crete. Recently, Revesz [11] identified the Cretan Hieroglyphs, Linear A, Linear B, the Cypriot syllabary, and the Greek, Old Hungarian, Phoenician, South Arabic and Tifinagh alphabets as members of this script family and using bioinformatics algorithms gave a hypothetical evolutionary tree for their development and presented a map for their likely spread in the Mediterranean and Black Sea areas. The evolutionary tree and the map indicated some unknown writing system in western Anatolia to be the common origin of the Cypriot syllabary and the Old Hungarian alphabet.

A Baybayin Word Recognition System

A Baybayin word recognition system. Rodney Pino, Renier Mendoza and Rachelle Sambayan, Institute of Mathematics, University of the Philippines Diliman, Quezon City, Metro Manila, Philippines.

ABSTRACT. Baybayin is a pre-Hispanic Philippine writing system used in Luzon island. With the effort in reintroducing the script, in 2018, the Committee on Basic Education and Culture of the Philippine Congress approved House Bill 1022 or the "National Writing System Act," which declares the Baybayin script as the Philippines' national writing system. Since then, Baybayin OCR has become a field of research interest. Numerous works have proposed different techniques in recognizing Baybayin scripts. However, all those studies anchored on the classification and recognition at the character level. In this work, we propose an algorithm that provides the Latin transliteration of a Baybayin word in an image. The proposed system relies on a Baybayin character classifier generated using the Support Vector Machine (SVM). The method involves isolating each Baybayin character, then classifying each character according to its equivalent syllable in Latin script, and finally concatenating the results to form the transliterated word. The system was tested using a novel dataset of Baybayin word images and achieved a competitive 97.9% recognition accuracy. Based on our review of the literature, this is the first work that recognizes Baybayin scripts at the word level. The proposed system can be used in automated transliterations of Baybayin texts transcribed in old books, tattoos, signage, graphic designs, and documents, among others.

Subjects: Computational Linguistics, Computer Vision, Natural Language and Speech, Optimization Theory and Computation, Scientific Computing and Simulation. Keywords: Baybayin, Optical character recognition, Support vector machine, Baybayin word recognition. Submitted 17 March 2021. Accepted 25 May 2021. Published 16 June 2021.

INTRODUCTION. Baybayin is a pre-colonial writing system primarily used by Tagalogs in the northern Philippines.
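The three stages named in the abstract above (isolate each character, classify it, concatenate the results) can be illustrated with a minimal Python sketch. Everything specific in it is an assumption made for illustration and is not taken from the paper: the image is a toy grid of 0/1 pixels, characters are isolated by splitting at blank columns, and `toy_classifier` is a hypothetical stand-in for the trained SVM character classifier.

```python
from typing import Callable, List, Sequence, Tuple

Image = Sequence[Sequence[int]]  # rows of pixels; 1 = ink, 0 = background


def split_columns(image: Image) -> List[Tuple[int, int]]:
    """Return (start, end) column ranges containing ink, split at blank columns.

    Assumption: characters are separated by at least one blank column.
    """
    if not image:
        return []
    width = len(image[0])
    has_ink = [any(row[x] for row in image) for x in range(width)]
    spans: List[Tuple[int, int]] = []
    start = None
    for x, ink in enumerate(has_ink):
        if ink and start is None:
            start = x                    # a character begins here
        elif not ink and start is not None:
            spans.append((start, x))     # a blank column ends the character
            start = None
    if start is not None:
        spans.append((start, width))     # character touching the right edge
    return spans


def crop(image: Image, span: Tuple[int, int]) -> List[List[int]]:
    """Cut the column range `span` out of every row."""
    start, end = span
    return [list(row[start:end]) for row in image]


def transliterate_word(image: Image,
                       classify: Callable[[List[List[int]]], str]) -> str:
    """Isolate each character, classify it, and concatenate the syllables."""
    return "".join(classify(crop(image, s)) for s in split_columns(image))


def toy_classifier(glyph: List[List[int]]) -> str:
    """Hypothetical classifier: maps an ink-pixel count to a syllable."""
    ink = sum(sum(row) for row in glyph)
    return {2: "ba", 3: "ya"}.get(ink, "?")


word = [
    [1, 0, 1, 1, 0, 1],
    [1, 0, 0, 1, 0, 1],
]
print(transliterate_word(word, toy_classifier))
```

Passing the classifier in as a callable keeps the segmentation and concatenation steps independent of the model, so a real classifier could be substituted without changing the rest of the sketch.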

Optical Character Recognition System for Baybayin Scripts Using Support Vector Machine

Optical character recognition system for Baybayin scripts using support vector machine Rodney Pino, Renier Mendoza and Rachelle Sambayan Institute of Mathematics, University of the Philippines Diliman, Quezon City, Metro Manila, Philippines ABSTRACT In 2018, the Philippine Congress signed House Bill 1022 declaring the Baybayin script as the Philippines' national writing system. In this regard, it is highly probable that the Baybayin and Latin scripts would appear in a single document. In this work, we propose a system that discriminates the characters of both scripts. The proposed system considers the normalization of an individual character to identify if it belongs to Baybayin or Latin script and further classify them as to what unit they represent. This gives us four classification problems, namely: (1) Baybayin and Latin script recognition, (2) Baybayin character classification, (3) Latin character classification, and (4) Baybayin diacritical marks classification. To the best of our knowledge, this is the first study that makes use of Support Vector Machine (SVM) for Baybayin script recognition. This work also provides a new dataset for Baybayin, its diacritics, and Latin characters. Classification problems (1) and (4) use binary SVM while (2) and (3) apply the multiclass SVM classification. On average, our numerical experiments yield satisfactory results: (1) has 98.5% accuracy, 98.5% precision, 98.49% recall, and 98.5% F1 Score; (2) has 96.51% accuracy, 95.62% precision, 95.61% recall, and 95.62% F1 Score; (3) has 95.8% accuracy, 95.85% precision, 95.8% recall, and 95.83% F1 Score; and (4) has 100% accuracy, 100% precision, 100% recall, and 100% F1 Score. -
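The four scores reported above for each classification problem (accuracy, precision, recall, and F1 score) can be computed from true and predicted labels as in the following Python sketch. The abstract does not say how the per-class scores were averaged, so the macro averaging used here is an assumption, and the label lists are made-up illustrative data, not results from the paper.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def macro_metrics(y_true: Sequence[Hashable],
                  y_pred: Sequence[Hashable]) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision, recall, and F1 score."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label sequences must be non-empty and equal in length")

    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1          # correct prediction for this class
        else:
            fp[pred] += 1           # predicted class gains a false positive
            fn[truth] += 1          # true class gains a false negative

    labels = set(y_true) | set(y_pred)
    precisions, recalls, f1s = [], [], []
    for label in labels:
        p_den = tp[label] + fp[label]
        r_den = tp[label] + fn[label]
        p = tp[label] / p_den if p_den else 0.0
        r = tp[label] / r_den if r_den else 0.0
        f = 2 * p * r / (p + r) if (p + r) else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f)

    n = len(labels)
    return {
        "accuracy": sum(tp.values()) / len(y_true),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Illustrative labels only (hypothetical syllable classes).
scores = macro_metrics(["ba", "ba", "ka", "ka"], ["ba", "ka", "ka", "ka"])
print(scores)
```

With macro averaging every class counts equally regardless of how many samples it has; a sample-weighted average would give different numbers when the classes are imbalanced.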

Recognition of Baybayin (Ancient Philippine Character) Handwritten Letters Using VGG16 Deep Convolutional Neural Network Model

ISSN 2347 - 3983. Volume 8. No. 9, September 2020. Lyndon R. Bague et al., International Journal of Emerging Trends in Engineering Research, 8(9), September 2020, 5233 – 5237. International Journal of Emerging Trends in Engineering Research. Available Online at http://www.warse.org/IJETER/static/pdf/file/ijeter55892020.pdf https://doi.org/10.30534/ijeter/2020/55892020

Recognition of Baybayin (Ancient Philippine Character) Handwritten Letters Using VGG16 Deep Convolutional Neural Network Model. Lyndon R. Bague (1), Romeo Jr. L. Jorda (2), Benedicto N. Fortaleza (3), Andrea DM. Evanculla (4), Mark Angelo V. Paez (5), Jessica S. Velasco (6). (1) Department of Electrical Engineering, Technological University of the Philippines, Manila, Philippines. (2, 4, 5, 6) Department of Electronics Engineering, Technological University of the Philippines, Manila, Philippines. (3) Department of Mechanical Engineering, Technological University of the Philippines, Manila, Philippines. (1-6) Center for Engineering Design, Fabrication, and Innovation, College of Engineering, Technological University of the Philippines, Manila, Philippines.

ABSTRACT. We proposed a system that can convert 45 handwritten baybayin Philippine character/s into their corresponding Tagalog word/s equivalent through convolutional neural network (CNN) using Keras. The implemented architecture utilizes smaller, more compact type of VGG16 network. The classification used 1500 images from each 45 baybayin characters.

of this proposed study is to translate baybayin characters (based on the original 1593 Doctrina Christiana) into its corresponding Tagalog word equivalent. The proposed software employed Deep Convolutional Neural Network (DCNN) model with VGG16 as the architecture and image processing module using OpenCV library to perform character recognition. There are two (2) ways to input data into the system, real-time translation by using web camera