Guiding Human-Computer Music Improvisation: Introducing Authoring and Control with Temporal Scenarios Jérôme NIKA

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Magma Magma Mp3, Flac, Wma

Magma Magma mp3, flac, wma DOWNLOAD LINKS (Clickable) Genre: Jazz / Rock Album: Magma Country: France Style: Jazz-Rock, Avantgarde, Prog Rock MP3 version RAR size: 1979 mb FLAC version RAR size: 1644 mb WMA version RAR size: 1806 mb Rating: 4.1 Votes: 970 Other Formats: VQF MP3 AUD DXD AA AAC MPC Tracklist Hide Credits Le Voyage Kobaïa 1-1 10:09 Written-By – Christian Vander Aïna 1-2 6:15 Written-By – Christian Vander Malaria 1-3 4:20 Written-By – Christian Vander Sohïa 1-4 7:41 Written-By – Teddy Lasry Sckxyss 1-5 2:47 Written-By – François Cahen Auraë 1-6 10:52 Written-By – Christian Vander La Decouverte De Kobaïa Thaud Zaïa 2-1 7:00 Written-By – Claude Engel Naü Ektila 2-2 12:55 Written-By – Laurent Thibault Stöah 2-3 8:08 Written-By – Christian Vander Müh 2-4 11:17 Written-By – Christian Vander Companies, etc. Distributed By – Harmonia Mundi Made By – MPO Credits Alto Saxophone, Tenor Saxophone, Flute – Richard Raux Design [Maquette] – M.-J. Petit* Drums, Vocals – Christian Vander Electric Bass, Contrabass – Francis Moze Engineer [Ingénieurs Du Son] – Claude Martelot*, Roger Roche Guitar [Guitars], Flute, Vocals – Claude Engel Piano – François Cahen Producer [Production Et Réalisation] – Laurent Thibault Soprano Saxophone, Flute [First Flute], Wind [Instruments À Vent] – Teddy Lasry Stage Manager [Régisseur] – Louis Haig Sarkissian Supervised By [Supervision De La Réalisation] – Lee Hallyday Technician [Assistant Technique] – Marcel Engel Trumpet, Percussion – Alain Charlery «Paco»* Vocals – Klaus Blasquiz Notes Recorded in Paris in April 1970. Made in France by MPO Diffusion Harmonia Mundi Packaged in a double jewel case with 8-page booklet. -

Real-Time Programming and Processing of Music Signals Arshia Cont

Real-time Programming and Processing of Music Signals Arshia Cont To cite this version: Arshia Cont. Real-time Programming and Processing of Music Signals. Sound [cs.SD]. Université Pierre et Marie Curie - Paris VI, 2013. tel-00829771 HAL Id: tel-00829771 https://tel.archives-ouvertes.fr/tel-00829771 Submitted on 3 Jun 2013 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Realtime Programming & Processing of Music Signals by ARSHIA CONT Ircam-CNRS-UPMC Mixed Research Unit MuTant Team-Project (INRIA) Musical Representations Team, Ircam-Centre Pompidou 1 Place Igor Stravinsky, 75004 Paris, France. Habilitation à diriger la recherche Defended on May 30th in front of the jury composed of: Gérard Berry Collège de France Professor Roger Dannanberg Carnegie Mellon University Professor Carlos Agon UPMC - Ircam Professor François Pachet Sony CSL Senior Researcher Miller Puckette UCSD Professor Marco Stroppa Composer ii à Marie le sel de ma vie iv CONTENTS 1. Introduction1 1.1. Synthetic Summary .................. 1 1.2. Publication List 2007-2012 ................ 3 1.3. Research Advising Summary ............... 5 2. Realtime Machine Listening7 2.1. Automatic Transcription................. 7 2.2. Automatic Alignment .................. 10 2.2.1. -

Miller Puckette 1560 Elon Lane Encinitas, CA 92024 [email protected]

Miller Puckette 1560 Elon Lane Encinitas, CA 92024 [email protected] Education. B.S. (Mathematics), MIT, 1980. Ph.D. (Mathematics), Harvard, 1986. Employment history. 1982-1986 Research specialist, MIT Experimental Music Studio/MIT Media Lab 1986-1987 Research scientist, MIT Media Lab 1987-1993 Research staff member, IRCAM, Paris, France 1993-1994 Head, Real-time Applications Group, IRCAM, Paris, France 1994-1996 Assistant Professor, Music department, UCSD 1996-present Professor, Music department, UCSD Publications. 1. Puckette, M., Vercoe, B. and Stautner, J., 1981. "A real-time music11 emulator," Proceedings, International Computer Music Conference. (Abstract only.) P. 292. 2. Stautner, J., Vercoe, B., and Puckette, M. 1981. "A four-channel reverberation network," Proceedings, International Computer Music Conference, pp. 265-279. 3. Stautner, J. and Puckette, M. 1982. "Designing Multichannel Reverberators," Computer Music Journal 3(2), (pp. 52-65.) Reprinted in The Music Machine, ed. Curtis Roads. Cambridge, The MIT Press, 1989. (pp. 569-582.) 4. Puckette, M., 1983. "MUSIC-500: a new, real-time Digital Synthesis system." International Computer Music Conference. (Abstract only.) 5. Puckette, M. 1984. "The 'M' Orchestra Language." Proceedings, International Computer Music Conference, pp. 17-20. 6. Vercoe, B. and Puckette, M. 1985. "Synthetic Rehearsal: Training the Synthetic Performer." Proceedings, International Computer Music Conference, pp. 275-278. 7. Puckette, M. 1986. "Shannon Entropy and the Central Limit Theorem." Doctoral dissertation, Harvard University, 63 pp. 8. Favreau, E., Fingerhut, M., Koechlin, O., Potacsek, P., Puckette, M., and Rowe, R. 1986. "Software Developments for the 4X real-time System." Proceedings, International Computer Music Conference, pp. 43-46. 9. Puckette, M. -

SOPHIE BOURGEOIS Cyril Amourette Frédéric THALY CEIBA

#19 NOVEMBRE 2016 LA LE WEBZINE D’ACTION JAZZ GAZETTE BLEUE 6 INTERVIEW SOPHIE BOURGEOIS 16 INTERVIEW 8 INTERVIEW cyril amourette 12 24 38 LES FESTIVALS Samy capbreton, anglet, MARCIAC 30 INTERVIEW THiebault Frédéric THALY 34 JAZZ AU FÉMININ CEIBA Photo Laurence Laborie 1 OUVERTURE LA GAZETTE QUI ÉCRIT Tremplin Action Jazz 2017 LES DERNIERS CRIS DU EDITO > ALAIN PIAROU DES JAZZ EN AQUITAINE INSCRIPTIONS Vous aimez le jazz Trois ans ! et vous avez envie Voilà trois ans que l’aventure a démarré. Trois ans de soutenir les actions qu’une équipe de rédacteurs, de chroniqueurs et de de l’association... photographes sillonnent la région pour vous proposer Dynamiser et soutenir la scène jazz des comptes rendus de concerts, de festivals, présenter à Bordeaux et dans la région Aquitaine les diffuseurs et leurs initiatives. Ils sont également à Sensibiliser un plus large public au jazz et aux musiques improvisées l’affût de tout ce qui se passe autour du jazz en mettant Tisser un réseau avec les jeunes musiciens, à l’honneur, des peintres, des dessinateurs, des photo- les clubs de jazz, les festivals, les producteurs graphes, des écrivains, des sculpteurs, des luthiers, etc. et la presse. Ils réalisent des interviews et font des gros plans sur les Musiciens, groupes, montez Adhérez en vous inscrivant musiciens pour mieux vous les faire connaitre et appré- sur www.actionjazz, vous serez cier. Ils chroniquent aussi leurs CD. Et tout cela dans le abonné gratuitement au webzine vos dossiers pour le prochain bénévolat le plus total. Leur récompense, c’est de sa- La Gazette Bleue voir que vous êtes de plus en plus nombreux à lire cette Toute l’actualité du jazz en Aquitaine : interviews, Tremplin Action Jazz 2017. -

Disco Gu”Rin Internet

ISCOGRAPHIE de ROGER GUÉRIN D Par Michel Laplace et Guy Reynard ● 45 tours ● 78 tours ● LP ❚■● CD ■●● Cassette AP Autre prise Janvier 1949, Paris 3. Flèche d’Or ● ou 78 Voix de son Maitre 5 février 1954, Paris Claude Bolling (p), Rex Stewart (tp), 4. Troublant Boléro FFLP1031 Jacques Diéval et son orchestre et sex- 5. Nuits de St-Germain-des-Prés ● ou 78 Voix de son Maitre Gérard Bayol (tp), Roger Guérin (cnt), ● tette : Roger Guérin (tp), Christian Benny Vasseur (tb), Roland Evans (cl, Score/Musidisc SCO 9017 FFLP10222-3 Bellest (tp), Fred Gérard (tp), Fernard as), George Kennedy (as), Armand MU/209 ● ou 78 Voix de son Maitre Verstraete (tp), Christian Kellens (tb), FFLP10154 Conrad (ts, bs), Robert Escuras (g), Début 1953, Paris, Alhambra « Jazz André Paquinet (tb), Benny Vasseur Guy de Fatto (b), Robert Péguet (dm) Parade » (tb), Charles Verstraete (tb), Maurice 8 mai 1953, Paris 1. Without a Song Sidney Bechet avec Tony Proteau et son Meunier (cl, ts), Jean-Claude Fats Sadi’s Combo : Fats Sadi (vib), 2. Morning Glory Orchestre : Roger Guérin (tp1), Forhenbach (ts), André Ross (ts), Geo ● Roger Guérin (tp), Nat Peck (tb), Jean Pacific (F) 2285 Bernard Hulin (tp), Jean Liesse (tp), Daly (vib), Emmanuel Soudieux (b), Aldegon (bcl), Bobby Jaspar (ts), Fernand Verstraete (tp), Nat Peck (tb), Pierre Lemarchand (dm), Jean Paris, 1949 Maurice Vander (p), Jean-Marie Guy Paquinet (tb), André Paquinet Bouchéty (arr) Claude Bolling (p), Gérard Bayol (tp), (tb), Sidney Bechet (ss) Jacques Ary Ingrand (b), Jean-Louis Viale (dm), 1. April in Paris Roger Guérin (cnt), Benny Vasseur (as), Robert Guinet (as), Daniel Francy Boland (arr) 2. -

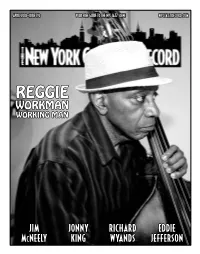

Reggie Workman Working Man

APRIL 2018—ISSUE 192 YOUR FREE GUIDE TO THE NYC JAZZ SCENE NYCJAZZRECORD.COM REGGIE WORKMAN WORKING MAN JIM JONNY RICHARD EDDIE McNEELY KING WYANDS JEFFERSON Managing Editor: Laurence Donohue-Greene Editorial Director & Production Manager: Andrey Henkin To Contact: The New York City Jazz Record 66 Mt. Airy Road East APRIL 2018—ISSUE 192 Croton-on-Hudson, NY 10520 United States Phone/Fax: 212-568-9628 New York@Night 4 Laurence Donohue-Greene: Interview : JIM Mcneely 6 by ken dryden [email protected] Andrey Henkin: [email protected] Artist Feature : JONNY KING 7 by donald elfman General Inquiries: [email protected] ON The COver : REGGIE WORKMAN 8 by john pietaro Advertising: [email protected] Encore : RICHARD WYANDS by marilyn lester Calendar: 10 [email protected] VOXNews: Lest WE Forget : EDDIE JEFFERSON 10 by ori dagan [email protected] LAbel Spotlight : MINUS ZERO by george grella US Subscription rates: 12 issues, $40 11 Canada Subscription rates: 12 issues, $45 International Subscription rates: 12 issues, $50 For subscription assistance, send check, cash or vOXNEWS 11 by suzanne lorge money order to the address above or email [email protected] Obituaries by andrey henkin Staff Writers 12 David R. Adler, Clifford Allen, Duck Baker, Stuart Broomer, FESTIvAL REPORT Robert Bush, Thomas Conrad, 13 Ken Dryden, Donald Elfman, Phil Freeman, Kurt Gottschalk, Tom Greenland, Anders Griffen, CD REviews 14 Tyran Grillo, Alex Henderson, Robert Iannapollo, Matthew Kassel, Marilyn Lester, Suzanne -

Marseille, Une Métropole Tournée

Festival Jazz des Cinq Continents 14ème édition 17, 18, 19, 20 21, 23, 24, 25, 26 et 27 juillet 2013 Quatorzième édition du Festival Jazz des Cinq Continents Les éditos La programmation Les artistes "Jungle Music" donne le "LA" au jardin zoologique Le Club FJ5C au Théâtre National de La Criée Autour du Festival Un Festival éco-responsable et solidaire Informations pratiques Sommaire Annexes : Les partenaires Quatorzième édition du Festival Jazz des Cinq Continents 2013 année exceptionnelle. Avec des artistes de grandes renommées, de nouveaux rendez-vous et un festival qui durera près d’un mois avec plusieurs dates en amont de la scène historique des Jardins du Palais Longchamp, le FJ5C se met aux couleurs de la Capitale Européenne de la Culture. Que la jazz attitude commence ! Une programmation s'annonçant de plus en plus prometteuse, ambitieuse et des rencontres inédites entre de grands artistes: des légendes comme Wayne Shorter, Chucho Valdés, Chick Corea, Gilberto Gil, George Benson, Archie Shepp, Nile Rodgers, Eddy Louiss… des grands noms comme Diana Krall … de belles retrouvailles avec Biréli Lagrène, Youn Sun Nah, Paolo Fresu qui invitera la Méditerranée sur le J4 ou encore Hiromi, Meshell Ndegeocello, Massaliazz et la nouvelle génération avec Guillaume Perret & The Electric Epic. "Carte Blanche", "Liberté", "Inédit" seront les maîtres mots du 17 au 27 juillet 2013, menant le public de l'esplanade du J4 pour le concert gratuit au jardin zoologique avant de retrouver le plateau du Palais Longchamp et ainsi faire le tour des cinq continents ! Cette édition, coréalisée avec l’Association Marseille Provence 2013, s'enrichit de deux soirées de concerts supplémentaires afin de répondre à l'attente d'un public de plus en plus nombreux et pour célébrer comme il se doit cette année capitale. -

100 Titres Sur Le JAZZ — JUILLET 2007 SOMMAIRE

100 TITRES SUR LE JAZZ À plusieurs époques la France, par sa curiosité et son ouverture à l’Autre, en l’occurrence les hommes et les musiques de l’Afro-Amérique, a pu être considérée, hors des États-Unis, comme une « fille aînée » du juillet 2007 / jazz. Après une phase de sensibilisation à des « musiques nègres » °10 constituant une préhistoire du jazz (minstrels, Cake-walk pour Debussy, débarquement d’orchestres militaires américains en 1918, puis tour- Hors série n nées et bientôt immigration de musiciens afro-américains…), de jeunes pionniers, suivis et encouragés par une certaine avant-garde intellectuelle et artistique (Jean Cocteau, Jean Wiener…), entrepren- / Vient de paraître / nent, dans les années 1920, avec plus de passion que d’originalité, d’imiter et adapter le « message » d’outre-Atlantique. Si les traces phonographiques de leur enthousiasme, parfois talentueux, sont qua- CULTURESFRANCE siment inexistantes, on ne saurait oublier les désormais légendaires Léon Vauchant, tromboniste et arrangeur, dont les promesses musica- les allaient finalement se diluer dans les studios américains, et les chefs d’orchestre Ray Ventura, (qui, dès 1924, réunissait une formation de « Collégiens ») et Gregor (Krikor Kelekian), à qui l’on doit d’avoir Philippe Carles Journaliste professionnel depuis 1965, rédacteur en chef de Jazz Magazine (puis directeur de la rédaction à partir de 2006) et producteur radio (pour France Musique) depuis 1971, Philippe Carles, né le 2 mars 1941 à Alger (où il a commencé en 1958 des études de médecine, interrompues à Paris en 1964), est co-auteur avec Jean-Louis Comolli de Free Jazz/Black Power (Champ Libre, 1971, rééd. -

La Brochure 2021-2022

Écrire un édito, mais sur quoi ? Il y aurait trop à dire, après ce silence imposé, après ces mois d’hésitations et d’incertitudes. Alors, par quoi commencer ? Qu’est-ce qui serait essentiel ? ÉDITO Peut-être, comme moi, vous n’arrivez pas à trancher entre dire qu’il serait temps de… • Croire à nouveau, si on le peut, que le • Suivre son instinct et enfin admettre futur a un peu d’avenir. qu’on est seulement contradictoire. • Sortir enfin de la torpeur du • Penser à s’inscrire sur les listes confinement. électorales. • Regarder avec une tendresse infinie, • Retrouver qui est l’auteur de cette comme nouvelle, ceux qui nous sont si belle citation Si vous pensez que proches et occupent notre quotidien. l’aventure est dangereuse, je vous • Être joyeusement rebelle et rester propose d’essayer la routine… elle est étranger à la répétition infinie des idées mortelle ! d’échecs annoncés, des catastrophes • Ne pas arriver à trancher ou à décider inévitables, des solitudes inconsolables. si dans le monde d’après, il vaut mieux • Ne rien lâcher de notre espoir dans le se lever tôt. Ou se coucher tard. Ou fait qu’il y aurait bien, au bout du tunnel, peut-être tenter de faire les deux. un monde meilleur. • Arrêter de se demander pourquoi • Se demander à nouveau pourquoi dans une même journée, selon l’heure l'Homme a décidé un jour de laisser où l’on écoute la radio, on adhère plus l’empreinte de sa main dans une facilement aux thèses des rassuristes le caverne. matin. -

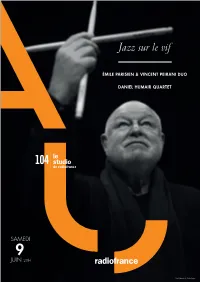

Jazz Sur Le Vif

Jazz sur le vif ÉMILE PARISIEN & VINCENT PEIRANI DUO DANIEL HUMAIR QUARTET SAMEDI 9 JUIN 20H Daniel Humair © Olivier Degen En soixante ans de carrière, depuis son arrivée à Paris en 1958, Daniel Humair a joué avec toute la planète jazz. En quartette avec trois interlocuteurs aussi créatifs que virtuoses, le trompettiste Fabrice Martinez, le guitariste Marc Ducret et le contrebassiste Bruno Chevillon, le batteur célèbre ce soir son quatre-vingtième anniversaire. Et pour que la fête soit complète, deux de ses jeunes partenaires préférés, le saxophoniste Émile Parisien et l’accordéoniste Vincent Peirani, ouvrent la soirée. ÉMILE PARISIEN/VINCENT PEIRANI DUO Daniel Humair a côtoyé et travaillé avec des artistes légendaires comme Don Byas, Émile Parisien saxophone soprano Lucky Thompson, Kenny Dorham, Bud Powell, Oscar Pettiford, Chet Baker ou Eric Vincent Peirani Dolphy. Depuis la fin des années 1950, Daniel Humair multiplie les collaborations accordéon ponctuelles et de longue date avec, notamment, le pianiste René Urtreger et le contrebassiste Pierre Michelot (le célèbre trio HUM), le pianiste Martial Solal, ou - Entracte - encore le violoniste Jean-Luc Ponty et l’organiste Eddy Louiss avec lesquels il forme le trio HLP. Le trio qu’il réunit ensuite avec François Jeanneau et Henri Texier est DANIEL HUMAIR QUARTET considéré comme l’un des catalyseurs de la nouvelle scène du jazz en France dans les années 70. Tout en continuant sa carrière de free-lance, Daniel Humair Daniel Humair batterie invente, avec Joachim Kühn et Jean-François Jenny-Clark, un trio équilatéral qui Fabrice Martinez trompette lui permet de développer pleinement sa conception de la batterie et son activité de compositeur. -

Eddy Louiss Est Mort : Les Anges Noirs Vont Swinguer !

Eddy Louiss est mort : les anges noirs vont swinguer ! Extrait du 7 Lames la Mer http://7lameslamer.net/eddy-louiss-est-mort-les-anges-1434.html Sang mêlé et blues Eddy Louiss est mort : les anges noirs vont swinguer ! - Le monde - Date de mise en ligne : mardi 30 juin 2015 Description : Eddy Louiss, « Sang mêlé », a été rappelé dans les étoiles, celles qui brillent dans les yeux des amoureux du blues. On disait de lui qu'il était le meilleur organiste du monde. Les anges ont bien de la chance là-haut avec ce nouveau venu : ils vont swinguer ! Petite confidence eddylouissienne : ces anges-là ont le visage noir ! Flash-back sur ce musicien hors norme qui avait soulevé La Réunion il y a 30 ans... Copyright © 7 Lames la Mer - Tous droits réservés Copyright © 7 Lames la Mer Page 1/4 Eddy Louiss est mort : les anges noirs vont swinguer ! Eddy Louiss, « Sang mêlé », a été rappelé dans les étoiles, celles qui brillent dans les yeux des amoureux du blues. On disait de lui qu'il était le meilleur organiste du monde. Les anges ont bien de la chance là-haut avec ce nouveau venu : ils vont swinguer ! Petite confidence eddylouissienne : ces anges-là ont le visage noir ! Flash-back sur ce musicien hors norme qui avait soulevé La Réunion il y a 30 ans... Eddy Louiss au Festival de Jazz de Château-Morange, le 21 octobre 1985. « Hors de l'eau, un orgue a surgi / C'est pas Némo, c'est Eddy »... chantait Claude Nougaro qui vient d'accueillir, là-haut, son dalon Eddy Louiss. -

Glenn Miller, Benny Goodman, and Count Basie Led Other Successful

JAZZ AGE Glenn Miller, Benny Goodman, and Count Basie led other modal jazz (based on musical modes), funk (which re- successful orchestras. While these big bands came to char- prised early jazz), and fusion, which blended jazz and rock acterize the New York jazz scene during the Great De- and included electronic instruments. Miles Davis in his pression, they were contrasted with the small, impover- later career and Chick Corea were two influential fusion ished jazz groups that played at rent parties and the like. artists. During this time the performer was thoroughly identified Hard bop was a continuation ofbebop but in a more by popular culture as an entertainer, the only regular accessible style played by artists such as John Coltrane. venue was the nightclub, and African American music be- Ornette Coleman (1960) developed avant-garde free jazz, came synonymous with American dance music. The big- a style based on the ideas ofThelonius Monk, in which band era was also allied with another popular genre, the free improvisation was central to the style. mainly female jazz vocalists who soloed with the orches- tras. Singers such as Billie Holiday modernized popular- Postmodern Jazz Since 1980 song lyrics, although some believe the idiom was more Hybridity, a greater degree offusion,and traditional jazz akin to white Tin Pan Alley than to jazz. revivals merely touch the surface of the variety of styles Some believe that the big band at its peak represented that make up contemporary jazz. Inclusive ofmany types the golden era ofjazz because it became part ofthe cul- ofworld music, it is accessible, socially conscious, and tural mainstream.