Algorithms and High Performance Computing Approaches for Sequencing-Based Comparative Genomics

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Computational Methods Addressing Genetic Variation In

COMPUTATIONAL METHODS ADDRESSING GENETIC VARIATION IN NEXT-GENERATION SEQUENCING DATA by Charlotte A. Darby. A dissertation submitted to Johns Hopkins University in conformity with the requirements for the degree of Doctor of Philosophy. Baltimore, Maryland, June 2020. © 2020 Charlotte A. Darby. All rights reserved.

Abstract: Computational genomics involves the development and application of computational methods for whole-genome-scale datasets to gain biological insight into the composition and function of genomes, including how genetic variation mediates molecular phenotypes and disease. New biotechnologies such as next-generation sequencing generate genomic data on a massive scale and have transformed the field thanks to simultaneous advances in the analysis toolkit. In this thesis, I present three computational methods that use next-generation sequencing data, each of which addresses the genetic variations within and between human individuals in a different way. First, Samovar is a software tool for performing single-sample mosaic single-nucleotide variant calling on whole genome sequencing linked read data. Using haplotype assembly of heterozygous germline variants, uniquely made possible by linked reads, Samovar identifies variations in different cells that make up a bulk sequencing sample. We apply it to 13 cancer samples in collaboration with researchers at Nationwide Children’s Hospital. Second, scHLAcount is a software pipeline that computes allele-specific molecule counts for the HLA genes from single-cell gene expression data. We use a personalized reference genome based on the individual’s genotypes to reveal allele-specific and cell type-specific gene expression patterns. Even given technology-specific biases of single-cell gene expression data, we can resolve allele-specific expression for these genes since the alleles are often quite different between the two haplotypes of an individual.

Big Data, MOOCs, and ... (PDF)

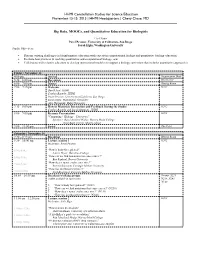

HHMI Constellation Studios for Science Education. November 13-15, 2015 | HHMI Headquarters | Chevy Chase, MD

Big Data, MOOCs, and Quantitative Education for Biologists

Co-Chairs: Pavel Pevzner, University of California-San Diego; Sarah Elgin, Washington University

Studio Objectives:

- Discuss existing challenges in bioinformatics education with experts in computational biology and quantitative biology education,
- Evaluate best practices in teaching quantitative and computational biology, and
- Collaborate with scientist educators to develop instructional modules to support a biology curriculum that includes quantitative approaches.

Friday | November 13

- 4:00 pm: Arrival (Registration Desk)
- 5:30 – 6:00 pm: Reception (Great Hall)
- 6:00 – 7:00 pm: Dinner (Dining Room)
- 7:00 – 7:15 pm: Welcome (K202). David Asai, HHMI; Cynthia Bauerle, HHMI; Pavel Pevzner, University of California-San Diego; Sarah Elgin, Washington University; Alex Hartemink, Duke University
- 7:15 – 8:00 pm: How to Maximize Interaction and Feedback During the Studio (K202). Cynthia Bauerle and Sarah Simmons, HHMI
- 8:00 – 9:00 pm: Keynote Presentation (K202), "Computing + Biology = Discovery". Speakers: Ran Libeskind-Hadas, Harvey Mudd College; Eliot Bush, Harvey Mudd College
- 9:00 – 11:00 pm: Social (The Pilot)

Saturday | November 14

- 7:30 – 8:15 am: Breakfast (Dining Room)
- 8:30 – 10:00 am: Lecture session 1 (K202). Moderator: Pavel Pevzner
  - 8:34 – 8:54 am: “How is body fat regulated?” Laurie Heyer, Davidson College
  - 8:56 – 9:16 am: “How can we find mutations that cause cancer?” Ben Raphael, Brown University
  - 9:18 – 9:38 am: “How does a tumor evolve over time?” Russell Schwartz, Carnegie Mellon University
  - 9:40 – 10:00 am: “How fast do ribosomes move?” Carl Kingsford, Carnegie Mellon University
- 10:05 – 10:55 am: Breakout working groups (coffee available in each room). Rooms: S221, N238, N241, N140 1.

Cloud Computing and the DNA Data Race Michael Schatz

Cloud Computing and the DNA Data Race. Michael Schatz, April 14, 2011. Data-Intensive Analysis, Analytics, and Informatics

Outline

1. Genome Assembly by Analogy
2. DNA Sequencing and Genomics
3. Large Scale Sequence Analysis
   1. Mapping & Genotyping
   2. Genome Assembly

Shredded Book Reconstruction

- Dickens accidentally shreds the first printing of A Tale of Two Cities
  - Text printed on 5 long spools

[Figure: five overlapping, shredded copies of the line "It was the best of times, it was the worst of times, it was the age of wisdom, it was the age of foolishness, …"]

- How can he reconstruct the text?
  - 5 copies x 138,656 words / 5 words per fragment = 138k fragments
  - The short fragments from every copy are mixed
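The fragment arithmetic in the slide excerpt above can be checked with a short sketch. The helper names `shred` and `fragment_count` are hypothetical and chosen for illustration only; the sketch assumes each copy is cut into consecutive, non-overlapping 5-word fragments, which is a simplification and not necessarily how the original slides model the shredding.

```python
def shred(words, fragment_len=5):
    """Cut a list of words into consecutive fragments of fragment_len words.

    Assumption: cuts fall at fixed, non-overlapping positions; the last
    fragment may be shorter than fragment_len.
    """
    return [tuple(words[i:i + fragment_len])
            for i in range(0, len(words), fragment_len)]


def fragment_count(copies, words_per_copy, words_per_fragment):
    """Number of fragments produced when every copy is shredded."""
    return copies * words_per_copy // words_per_fragment


# Opening line quoted in the excerpt, used as a tiny stand-in for the book.
opening = ("It was the best of times, it was the worst of times, "
           "it was the age of wisdom, it was the age of foolishness").split()

fragments = shred(opening)

# Figures from the excerpt: 5 copies, 138,656 words, 5 words per fragment.
total = fragment_count(copies=5, words_per_copy=138_656, words_per_fragment=5)
print(len(fragments), total)
```

With 5 copies and 5 words per fragment the two factors cancel, so the total equals the word count of one copy, which the slide rounds to 138k.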

BENG181/CSE 181/BIMM 181 Molecular Sequence Analysis Instructor: Pavel Pevzner

COURSE ANNOUNCEMENT FOR WINTER 2021: BENG181/CSE 181/BIMM 181 Molecular Sequence Analysis. https://sites.google.com/site/ucsdcse181

Instructor: Pavel Pevzner ● phone: (858) 822-4365 ● email: [email protected] ● web site: bioalgorithms.ucsd.edu

Teaching Assistants: ● Andrey Bzikadze ([email protected]) ● Hsuan-lin (Charlene) Her ([email protected])

Time: 6:30-7:50 Mon/Wed. Place: online (seminar Friday 4:00-4:50 online). Zoom link for the class: https://ucsd.zoom.us/j/99782745100 Zoom link for the seminar: https://ucsd.zoom.us/j/96805484881

Office hours: PP (Th 3-5 online), TAs (online Tue 1-2 PM and 4-5 PM or by appointment online). PP zoom link: https://ucsd.zoom.us/j/96986851791 Andrey Bzikadze zoom link: https://ucsd.zoom.us/j/94881347266 Hsuan-lin (Charlene) Her zoom link: https://ucsd.zoom.us/j/95134947264

Prerequisites: The course assumes some prior background in biology, some algorithmic culture (CSE 101 course on algorithms as a prerequisite), and some programming skills.

Flipped online class. Starting in 2014, the Innovative Learning Technology Initiative (ILTI) at University of California encourages professors to transform their classes into online offerings available across various UC campuses. Dr. Pevzner is funded by the ILTI and NIH to develop new online approaches to bioinformatics education at UCSD. Since 2014, well before the COVID-19 pandemic, all lectures in this class are available online rather than presented in the classroom.

Multi-university class. This class closely follows the textbook Bioinformatics Algorithms: an Active Learning Approach that has now been adopted by 140+ instructors from 40+ countries.

Steven L. Salzberg

Steven L. Salzberg McKusick-Nathans Institute of Genetic Medicine Johns Hopkins School of Medicine, MRB 459, 733 North Broadway, Baltimore, MD 20742 Phone: 410-614-6112 Email: [email protected] Education Ph.D. Computer Science 1989, Harvard University, Cambridge, MA M.Phil. 1984, M.S. 1982, Computer Science, Yale University, New Haven, CT B.A. cum laude English 1980, Yale University Research Areas: Genomics, bioinformatics, gene finding, genome assembly, sequence analysis. Academic and Professional Experience 2011-present Professor, Department of Medicine and the McKusick-Nathans Institute of Genetic Medicine, Johns Hopkins University. Joint appointments as Professor in the Department of Biostatistics, Bloomberg School of Public Health, and in the Department of Computer Science, Whiting School of Engineering. 2012-present Director, Center for Computational Biology, Johns Hopkins University. 2005-2011 Director, Center for Bioinformatics and Computational Biology, University of Maryland Institute for Advanced Computer Studies 2005-2011 Horvitz Professor, Department of Computer Science, University of Maryland. (On leave of absence 2011-2012.) 1997-2005 Senior Director of Bioinformatics (2000-2005), Director of Bioinformatics (1998-2000), and Investigator (1997-2005), The Institute for Genomic Research (TIGR). 1999-2006 Research Professor, Departments of Computer Science and Biology, Johns Hopkins University 1989-1999 Associate Professor (1996-1999), Assistant Professor (1989-1996), Department of Computer Science, Johns Hopkins University. On leave 1997-99. 1988-1989 Associate in Research, Graduate School of Business Administration, Harvard University. Consultant to Ford Motor Co. of Europe and to N.V. Bekaert (Kortrijk, Belgium). 1985-1987 Research Scientist and Senior Knowledge Engineer, Applied Expert Systems, Inc., Cambridge, MA. Designed expert systems for financial services companies.

THE BIG CHALLENGES of BIG DATA As They Grapple with Increasingly Large Data Sets, Biologists and Computer Scientists Uncork New Bottlenecks

TECHNOLOGY FEATURE: THE BIG CHALLENGES OF BIG DATA. As they grapple with increasingly large data sets, biologists and computer scientists uncork new bottlenecks. BY VIVIEN MARX

EMBL–EBI: Extremely powerful computers are needed to help biologists to handle big-data traffic jams.

Biologists are joining the big-data club. With the advent of high-throughput genomics, life scientists are starting to grapple with massive data sets, encountering challenges with handling, processing and moving information that were once the domain of astronomers and high-energy physicists¹. With every passing year, they turn more often to big data to probe everything from ...

... and how the genetic make-up of different cancers influences how cancer patients fare². The European Bioinformatics Institute (EBI) in Hinxton, UK, part of the European Molecular Biology Laboratory and one of the world’s largest biology-data repositories, currently stores 20 petabytes (1 petabyte is 10^15 bytes) of data and back-ups about genes, proteins and small molecules. Genomic data account for 2 petabytes of that, a number that more than doubles every year³ (see ‘Data explosion’).

... year, particle-collision events in CERN’s Large Hadron Collider generate around 15 petabytes of data, the equivalent of about 4 million high-definition feature-length films. But the EBI and institutes like it face similar data-wrangling challenges to those at CERN, says Ewan Birney, associate director of the EBI. He and his colleagues now regularly meet with organizations such as CERN and the European Space Agency (ESA) in Paris to swap lessons about data storage, analysis and sharing.

UNIVERSITY of CALIFORNIA RIVERSIDE RNA-Seq

UNIVERSITY OF CALIFORNIA RIVERSIDE. RNA-Seq Based Transcriptome Assembly: Sparsity, Bias Correction and Multiple Sample Comparison. A Dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science by Wei Li, September 2012. Dissertation Committee: Dr. Tao Jiang, Chairperson; Dr. Stefano Lonardi; Dr. Marek Chrobak; Dr. Thomas Girke. Copyright by Wei Li 2012. The Dissertation of Wei Li is approved: Committee Chairperson, University of California, Riverside.

Acknowledgments: The completion of this dissertation would have been impossible without help from many people. First and foremost, I would like to thank my advisor, Dr. Tao Jiang, for his guidance and supervision during the four years of my Ph.D. He offered invaluable advice and support on almost every aspect of my study and research at UCR. He gave me the freedom to choose a research problem I’m interested in, and helped me do research and write high quality papers. Not only a great academic advisor, he is also a sincere and true friend of mine. I always feel grateful and fortunate to be one of his students. Many thanks to all committee members of my dissertation: Dr. Stefano Lonardi, Dr. Marek Chrobak, and Dr. Thomas Girke. I greatly appreciate the advice they offered on the dissertation. I would also like to thank Jianxing Feng, Prof. James Borneman and Paul Ruegger for their collaboration in publishing several papers. Thanks to Vivien Chan, Jianjun Yu and other bioinformatics group members for their support during my internship at the Novartis Institutes for Biomedical Research.

Bioinformatics: Computational Analysis of Biological Information

RESEARCH at the University of Maryland. Bioinformatics: Computational Analysis of Biological Information

Bioinformatics, the use of advanced computational techniques for biological research, is accelerating rates of scientific discovery and leading to new approaches to human disease. These computational methods enable researchers to tackle previously cumbersome analytical tasks, such as studying the entire genetic makeup of an organism. With the aid of the latest bioinformatics technology, researchers can interpret DNA sequences with greater accuracy, in less time, and at lower cost. The University of Maryland’s Center for Bioinformatics and Computational Biology (CBCB) is at the forefront of bioinformatics research. Directed by Horvitz Professor of Computer Science Steven Salzberg, the center coordinates the expertise of researchers working in computer science, molecular biology, mathematics, physics, and biochemistry. These researchers reduce complex biological phenomena to information units stored in enormous data sets. The analysis of this data reveals new answers to biological problems. CBCB projects include technological solutions for accelerating vaccine development, identifying the complex causes of epidemic diseases, and revealing previously unseen relationships between the biochemistry of our bodies and the symptoms of puzzling diseases. Steven Salzberg and Carl Kingsford are using bioinformatics to transform influenza research. Their work will yield better methods for tracking the spread of influenza and for designing vaccines. Mihai Pop uses computational tools to combat infant mortality in developing countries. Bioinformatics enables him to pinpoint causes with greater sophistication. Najib El-Sayed and Mihai Pop use computational statistics to detect correlations between gut bacteria and the symptoms of under-explained disorders, such as autism and Crohn’s disease.

The Triumph of New-Age Medicine Medicine Has Long Decried Acupuncture, Homeopathy, and the Like As Dangerous Nonsense That Preys on the Gullible

The Triumph of New-Age Medicine. Medicine has long decried acupuncture, homeopathy, and the like as dangerous nonsense that preys on the gullible. Again and again, carefully controlled studies done by outsiders of natural medicine have shown alternative medicine to work no better than a placebo. But now many doctors admit that alternative medicine often seems to do a better job of making patients well, and at a much lower cost, than mainstream care, and they’re trying to learn from it. By DAVID H. FREEDMAN. Image credit: Stephen Webster

I MEET BRIAN BERMAN, a physician of gentle and upbeat demeanor, outside the stately Greek columns that form the facade of one of the nation’s oldest medical-lecture halls, at the edge of the University of Maryland Medical Center in downtown Baltimore. The research center that Berman directs sits next door, in a much smaller, plainer, but still venerable-looking two-story brick building. A staff of 33 works there, including several physician-researchers and practitioner-researchers, funded in part by $35 million in grants over the past 14 years from the National Institutes of Health, which has named the clinic a Research Center of Excellence. In addition to conducting research, the center provides medical care. Indeed, some patients wait as long as two months to begin treatment there: referrals from physicians all across the medical center have grown beyond the staff’s capacity. “That’s a big change,” says Berman, laughing. “We used to have trouble getting any physicians here to take us seriously.” The Center for Integrative Medicine, Berman’s clinic, is focused on alternative medicine, sometimes known as “complementary” or “holistic” medicine.

Top 100 AI Leaders in Drug Discovery and Advanced Healthcare Introduction

Top 100 AI Leaders in Drug Discovery and Advanced Healthcare. www.dka.global

Introduction: Over the last several years, pharmaceutical and healthcare organizations have developed a strong interest in applying artificial intelligence (AI) in various areas, ranging from medical image analysis and elaboration of electronic health records (EHRs) to more basic research like building disease ontologies, preclinical drug discovery, and clinical trials. Demand for ML/AI technologies, as well as for ML/AI talent, is growing in the pharmaceutical and healthcare industries and driving the formation of a new interdisciplinary industry (‘data-driven healthcare’). Consequently, there is a growing number of AI-driven startups and emerging companies offering technology solutions for drug discovery and healthcare. Another important source of advanced expertise in AI for drug discovery and healthcare comes from top technology corporations (Google, Microsoft, Tencent, etc.), which are increasingly focusing on applying their technological resources to tackling health-related challenges, or providing technology platforms on a rental basis for life science professionals to conduct research analytics. Some of the leading pharmaceutical giants, like GSK, AstraZeneca, Pfizer and Novartis, are already taking steps towards aligning their internal research workflows and development strategies to start embracing AI-driven digital transformation at scale. However, the pharmaceutical industry at large is still lagging behind in adopting AI, compared to more traditional consumer industries (finance, retail, etc.). The above three main forces are driving the growth in AI implementation in pharmaceutical and advanced healthcare research, but the overall success depends strongly on the availability of highly skilled interdisciplinary leaders, able to innovate, organize and guide in this direction.

The Anatomy of Successful Computational Biology Software

FEATURE: The anatomy of successful computational biology software. Stephen Altschul, Barry Demchak, Richard Durbin, Robert Gentleman, Martin Krzywinski, Heng Li, Anton Nekrutenko, James Robinson, Wayne Rasband, James Taylor & Cole Trapnell

Creators of software widely used in computational biology discuss the factors that contributed to their success

The year was 1989 and Stephen Altschul had a problem. Sam Karlin, the brilliant mathematician whose help he needed, was so convinced of the power of a mathematically tractable but biologically constrained measure of protein sequence similarity that he would not listen to Altschul (or anyone else for that matter). So Altschul essentially tricked him into solving the problem stymying the field of computational biology by posing it in terms of pure mathematics, devoid of any reference to biology. The treat from that trick became known as the Karlin-Altschul statistics that are a key part of ...

What factors determine whether scientific software is successful?

Stephen Altschul: BLAST was the first program to assign rigorous statistics to useful scores of local sequence alignments. Before then people had derived many different scoring systems, and it wasn’t clear why any should have a particular advantage. I had made a conjecture that every scoring system that people proposed ...

... tant thing is that Cufflinks, Bowtie (which is mainly Ben Langmead’s work) and TopHat were in large part at the right place at the right time. We were stepping into fields that were poised to explode, but which really had a vacuum in terms of usable tools. You get two things from being first.

[Sidebar caption: Cole Trapnell developed the Tophat/Cufflinks suite of short-read analysis tools.]

Steven L. Salzberg

Steven L. Salzberg McKusick-Nathans Institute of Genetic Medicine Johns Hopkins School of Medicine 733 North Broadway, MRB 459, Baltimore, MD 20742 Phone: 410-614-6112 Email: [email protected] Education Ph.D. Computer Science 1989, Harvard University, Cambridge, MA M.Phil. 1984, M.S. 1982, Computer Science, Yale University, New Haven, CT B.A. cum laude English 1980, Yale University Research Areas: Genomics, bioinformatics, genome assembly, gene finding, sequence analysis algorithms. Academic and Professional Experience 2011-present Professor, Department of Medicine and the McKusick-Nathans Institute of Genetic Medicine, Johns Hopkins University. Joint appointments as Professor in the Department of Biostatistics, Bloomberg School of Public Health, and in the Department of Computer Science, Whiting School of Engineering. 2012-present Director, Center for Computational Biology, Johns Hopkins University. 2005-2011 Director, Center for Bioinformatics and Computational Biology, University of Maryland Institute for Advanced Computer Studies 2005-2011 Horvitz Professor, Department of Computer Science, University of Maryland. 1997-2005 Senior Director of Bioinformatics (2000-2005), Director of Bioinformatics (1998-2000), and Investigator (1997-2005), The Institute for Genomic Research (TIGR). 1999-2006 Research Professor, Departments of Computer Science and Biology, Johns Hopkins University 1989-1999 Associate Professor (1996-1999), Assistant Professor (1989-1996), Department of Computer Science, Johns Hopkins University. On leave 1997-99. 1988-1989 Associate in Research, Graduate School of Business Administration, Harvard University. Consultant to Ford Motor Co. of Europe and to N.V. Bekaert (Kortrijk, Belgium). 1985-1987 Research Scientist and Senior Knowledge Engineer, Applied Expert Systems, Inc., Cambridge, MA. Designed expert systems for financial services companies.