The Anatomy of Successful Computational Biology Software

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Biophysics, Structural and Computational Biology (BSCB) Faculty Administers the Ph.D

UNIVERSITY OF ROCHESTER SCHOOL OF MEDICINE AND DENTISTRY Graduate Studies in BIOPHYSICS, STRUCTURAL AND COMPUTATIONAL BIOLOGY Student Handbook August 2019 David Mathews, Program Director Joseph Wedekind, Education Committee Chair Melissa Vera, Graduate Studies Coordinator PREFACE The Biophysics, Structural and Computational Biology (BSCB) Faculty administers the Ph.D. degree program in Biophysics for the Department of Biochemistry and Biophysics. This handbook is intended to outline the major features and policies of the program. The general features of the graduate experience at the University of Rochester are summarized in the Graduate Bulletin, which is updated every two years. Students and advisors will need to consult both sources, though it is our intent to provide the salient features here. Policy, of course, continues to evolve in response to the changing needs of the graduate programs and the students in them. Thus, it is wise to verify any crucial decisions with the Graduate Studies Coordinator. TABLE OF CONTENTS I. DEFINITIONS II. BIOPHYSICS CURRICULUM A. Courses 1. Core Curriculum 2. Elective Courses B. Suggested Progress Toward the Ph.D. in Biophysics C. Other Educational Opportunities 1. Departmental Seminars 2. BSCB Program Retreat 3. Bioinformatics Cluster D. Exemptions from Course Requirements E. Minimum Course Performance F. M.D./Ph.D. Students III. ADDITIONAL DETAILS OF PROCEDURES AND REQUIREMENTS A. Faculty Advisors for Entering Students B. Student Laboratory Rotations C. Radiation Certificate D. Student Research Seminar E. First Year Preliminary Examination and Evaluation F. Teaching Assistantship G. -

A Sample Workload for Bioinformatics and Computational Biology for Optimizing Next-Generation High-Performance Computer Systems

BioSPLASH: A sample workload for bioinformatics and computational biology for optimizing next-generation high-performance computer systems David A. Bader∗ Vipin Sachdeva Department of Electrical and Computer Engineering University of New Mexico, Albuquerque, NM 87131 Virat Agarwal Gaurav Goel Abhishek N. Singh Indian Institute of Technology, New Delhi May 1, 2005 Abstract BioSPLASH is a suite of representative applications that we have assembled from the computational biology community, where the codes are carefully selected to span a breadth of algorithms and performance characteristics. The main results of this paper are the assembly of a scalable bioinformatics workload with impact to the DARPA High Productivity Computing Systems Program to develop revolutionarily-new economically-viable high-performance computing systems, and analyses of the performance of these codes for computationally demanding instances using the cycle-accurate IBM MAMBO simulator and real performance monitoring on an Apple G5 system. Hence, our work is novel in that it is one of the first efforts to incorporate life science application performance for optimizing high-end computer system architectures. 1 Algorithms in Computational Biology In the 50 years since the discovery of the structure of DNA, and with new techniques for sequencing the entire genome of organisms, biology is rapidly moving towards a data-intensive, computational science. Biologists are in search of biomolecular sequence data, for its comparison with other genomes, and because its structure determines function and leads to the understanding of biochemical pathways, disease prevention and cure, and the mechanisms of life itself.
Computational biology has been aided by recent advances in both technology and algorithms; for instance, the ability to sequence short contiguous strings of DNA and from these reconstruct the whole genome (e.g., see [34, 2, 33]) and the proliferation of high-speed micro array, gene, and protein chips (e.g., see [27]) for the study of gene expression and function determination. -

Biology (BA) Biology (BA)

Biology (BA) This program is offered by the College of Arts & Sciences/Biological Sciences Department and is only available at the St. Louis home campus. Program Description The bachelor of arts degree is designed for students who seek a broad education in biology. This degree is suitable preparation for a diverse range of careers including health science, science education and ecology-related fields. Students can earn the BA in biology alone, or with one of four emphases: biodiversity, computational biology, education or health science. Learning Outcomes Students who complete any of the bachelor of arts in biology will be able to: • Describe biological, chemical and physical principles as they relate to the natural world in writings and presentations to a diverse audience. […] • CHEM 1110 General Chemistry II (3 hours) and CHEM 1111 General Chemistry II: Lab (1 hour) • CHEM 2100 Organic Chemistry I (3 hours) and CHEM 2101 Organic Chemistry I: Lab (1 hour) • MATH 1430 College Algebra (3 hours) • MATH 2200 Statistics (3 hours) or STAT 3100 Inferential Statistics (3 hours) or PSYC 2750 Introduction to Measurement and Statistics (3 hours) • PHYS 1710 College Physics I (3 hours) and PHYS 1711 College Physics I: Lab (1 hour) • PHYS 1720 College Physics II (3 hours) and PHYS 1721 College Physics II: Lab (1 hour) BA in Biology (66 hours) The general degree offers the greatest flexibility, allowing students to select 12 hours of electives from any of our 2000+ level BIOL, CHEM or PHYS courses in addition to the 54 credits of core coursework in biology listed above. (Up to 3 credit hours of BIOL 4700/CHEM 4700/PHYS 4700 can be used toward these 12 credit -

BIOGRAPHICAL SKETCH NAME: Berger

BIOGRAPHICAL SKETCH NAME: Berger, Bonnie eRA COMMONS USER NAME (credential, e.g., agency login): BABERGER POSITION TITLE: Simons Professor of Mathematics and Professor of Electrical Engineering and Computer Science EDUCATION/TRAINING (Begin with baccalaureate or other initial professional education, such as nursing, include postdoctoral training and residency training if applicable. Add/delete rows as necessary.) EDUCATION/TRAINING (INSTITUTION AND LOCATION; DEGREE (if applicable); Completion Date MM/YYYY; FIELD OF STUDY): Brandeis University, Waltham, MA; AB; 06/1983; Computer Science. Massachusetts Institute of Technology; SM; 01/1986; Computer Science. Massachusetts Institute of Technology; Ph.D.; 06/1990; Computer Science. Massachusetts Institute of Technology; Postdoc; 06/1992; Applied Mathematics. A. Personal Statement Advances in modern biology revolve around automated data collection and sharing of the large resulting datasets. I am considered a pioneer in the area of bringing computer algorithms to the study of biological data, and a founder in this community that I have witnessed grow so profoundly over the last 26 years. I have made major contributions to many areas of computational biology and biomedicine, largely, though not exclusively through algorithmic innovations, as demonstrated by nearly twenty thousand citations to my scientific papers and widely-used software. In recognition of my success, I have just been elected to the National Academy of Sciences and in 2019 received the ISCB Senior Scientist Award, the pinnacle award in computational biology. My research group works on diverse challenges, including Computational Genomics, High-throughput Technology Analysis and Design, Biological Networks, Structural Bioinformatics, Population Genetics and Biomedical Privacy. I spearheaded research on analyzing large and complex biological data sets through topological and machine learning approaches; e.g. -

Computational Methods Addressing Genetic Variation In

COMPUTATIONAL METHODS ADDRESSING GENETIC VARIATION IN NEXT-GENERATION SEQUENCING DATA by Charlotte A. Darby A dissertation submitted to Johns Hopkins University in conformity with the requirements for the degree of Doctor of Philosophy Baltimore, Maryland June 2020 © 2020 Charlotte A. Darby All rights reserved Abstract Computational genomics involves the development and application of computational methods for whole-genome-scale datasets to gain biological insight into the composition and function of genomes, including how genetic variation mediates molecular phenotypes and disease. New biotechnologies such as next-generation sequencing generate genomic data on a massive scale and have transformed the field thanks to simultaneous advances in the analysis toolkit. In this thesis, I present three computational methods that use next-generation sequencing data, each of which addresses the genetic variations within and between human individuals in a different way. First, Samovar is a software tool for performing single-sample mosaic single-nucleotide variant calling on whole genome sequencing linked read data. Using haplotype assembly of heterozygous germline variants, uniquely made possible by linked reads, Samovar identifies variations in different cells that make up a bulk sequencing sample. We apply it to 13 cancer samples in collaboration with researchers at Nationwide Children's Hospital. Second, scHLAcount is a software pipeline that computes allele-specific molecule counts for the HLA genes from single-cell gene expression data. We use a personalized reference genome based on the individual’s genotypes to reveal allele-specific and cell type-specific gene expression patterns. Even given technology-specific biases of single-cell gene expression data, we can resolve allele-specific expression for these genes since the alleles are often quite different between the two haplotypes of an individual. -

Big Data, Moocs, and ... (PDF)

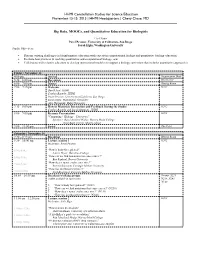

HHMI Constellation Studios for Science Education November 13-15, 2015 | HHMI Headquarters | Chevy Chase, MD Big Data, MOOCs, and Quantitative Education for Biologists Co-Chairs Pavel Pevzner, University of California- San Diego Sarah Elgin, Washington University Studio Objectives Discuss existing challenges in bioinformatics education with experts in computational biology and quantitative biology education, Evaluate best practices in teaching quantitative and computational biology, and Collaborate with scientist educators to develop instructional modules to support a biology curriculum that includes quantitative approaches. Friday | November 13 4:00 pm Arrival Registration Desk 5:30 – 6:00 pm Reception Great Hall 6:00 – 7:00 pm Dinner Dining Room 7:00 – 7:15 pm Welcome K202 David Asai, HHMI Cynthia Bauerle, HHMI Pavel Pevzner, University of California-San Diego Sarah Elgin, Washington University Alex Hartemink, Duke University 7:15 – 8:00 pm How to Maximize Interaction and Feedback During the Studio K202 Cynthia Bauerle and Sarah Simmons, HHMI 8:00 – 9:00 pm Keynote Presentation K202 "Computing + Biology = Discovery" Speakers: Ran Libeskind-Hadas, Harvey Mudd College Eliot Bush, Harvey Mudd College 9:00 – 11:00 pm Social The Pilot Saturday | November 14 7:30 – 8:15 am Breakfast Dining Room 8:30 – 10:00 am Lecture session 1 K202 Moderator: Pavel Pevzner 834a-854a “How is body fat regulated?” Laurie Heyer, Davidson College 856a-916a “How can we find mutations that cause cancer?” Ben Raphael, Brown University “How does a tumor evolve over time?” 918a-938a Russell Schwartz, Carnegie Mellon University “How fast do ribosomes move?” 940a-1000a Carl Kingsford, Carnegie Mellon University 10:05 – 10:55 am Breakout working groups Rooms: S221, (coffee available in each room) N238, N241, N140 1. -

Computational and Systems Biology

COMPUTATIONAL AND SYSTEMS BIOLOGY The field of computational and systems biology represents a synthesis of ideas and approaches from the life sciences, physical sciences, computer science, and engineering. Recent advances in biology, including the human genome project and massively parallel approaches to probing biological samples, have created new opportunities to understand biological problems from a systems perspective. Systems modeling and design are well established in engineering disciplines but are newer in biology. Advances in computational and systems biology require multidisciplinary teams with skill in applying principles and tools from engineering and computer science to solve problems in biology and medicine. To provide education in this emerging field, the Computational and Systems Biology (CSB) program integrates MIT's world-renowned disciplines in biology, engineering, mathematics, and computer […] quantitative imaging; regulatory genomics and proteomics; single cell manipulations and measurement; stem cell and developmental systems biology; and synthetic biology and biological design. The CSB PhD program is an Institute-wide program that has been jointly developed by the Departments of Biology, Biological Engineering, and Electrical Engineering and Computer Science. The program integrates biology, engineering, and computation to address complex problems in biological systems, and CSB PhD students have the opportunity to work with CSBi faculty from across the Institute. The curriculum has a strong emphasis on foundational material to encourage students to become creators of future tools and technologies, rather than merely practitioners of current approaches. Applicants must have an undergraduate degree in biology (or a related field), bioinformatics, chemistry, computer science, mathematics, statistics, physics, or an engineering discipline, with dual-emphasis degrees encouraged. -

Lior Pachter Genome Informatics 2013 Keynote

Stories from the Supplement Lior Pachter, Department of Mathematics and Molecular & Cell Biology, UC Berkeley. November 1, 2013, Genome Informatics, CSHL. The Cufflinks supplements • C. Trapnell, B.A. Williams, G. Pertea, A. Mortazavi, G. Kwan, M.J. van Baren, S.L. Salzberg, B.J. Wold and L. Pachter, Transcript assembly and quantification by RNA-Seq reveals unannotated transcripts and isoform switching during cell differentiation, Nature Biotechnology 28, (2010), p 511–515. Supplementary Material: 42 pages. • C. Trapnell, D.G. Hendrickson, M. Sauvageau, L. Goff, J.L. Rinn and L. Pachter, Differential analysis of gene regulation at transcript resolution with RNA-seq, Nature Biotechnology, 31 (2012), p 46–53. Supplementary Material: 70 pages. A supplementary arithmetic progression? • A. Roberts and L. Pachter, Streaming algorithms for fragment assignment, Nature Methods 10 (2013), p 71–73. Supplementary Material: 98 pages? The nature methods manuscript checklist Emperor Joseph II: My dear young man, don't take it too hard. Your work is ingenious. It's quality work. And there are simply too many notes, that's all. -

Modeling and Analysis of RNA-Seq Data: a Review from a Statistical Perspective

Modeling and analysis of RNA-seq data: a review from a statistical perspective Wei Vivian Li 1 and Jingyi Jessica Li 1,2,∗ arXiv:1804.06050v3 [q-bio.GN] 1 May 2018 Abstract Background: Since the invention of next-generation RNA sequencing (RNA-seq) technologies, they have become a powerful tool to study the presence and quantity of RNA molecules in biological samples and have revolutionized transcriptomic studies. The analysis of RNA-seq data at four different levels (samples, genes, transcripts, and exons) involves multiple statistical and computational questions, some of which remain challenging to date. Results: We review RNA-seq analysis tools at the sample, gene, transcript, and exon levels from a statistical perspective. We also highlight the biological and statistical questions of most practical considerations. Conclusion: The development of statistical and computational methods for analyzing RNA-seq data has made significant advances in the past decade. However, methods developed to answer the same biological question often rely on diverse statistical models and exhibit different performance under different scenarios. This review discusses and compares multiple commonly used statistical models regarding their assumptions, in the hope of helping users select appropriate methods as needed, as well as assisting developers for future method development. 1 Introduction RNA sequencing (RNA-seq) uses the next generation sequencing (NGS) technologies to reveal the presence and quantity of RNA molecules in biological samples. Since its invention, RNA-seq has revolutionized transcriptome analysis in biological research. RNA-seq does not require any prior knowledge on RNA sequences, and its high-throughput manner allows for genome-wide profiling of transcriptome landscapes [1,2]. -

Cloud Computing and the DNA Data Race Michael Schatz

Cloud Computing and the DNA Data Race Michael Schatz April 14, 2011 Data-Intensive Analysis, Analytics, and Informatics Outline 1. Genome Assembly by Analogy 2. DNA Sequencing and Genomics 3. Large Scale Sequence Analysis 1. Mapping & Genotyping 2. Genome Assembly Shredded Book Reconstruction • Dickens accidentally shreds the first printing of A Tale of Two Cities – Text printed on 5 long spools [Figure: five overlapping copies of the text "It was the best of times, it was the worst of times, it was the age of wisdom, it was the age of foolishness, …", each cut at different positions] • How can he reconstruct the text? – 5 copies x 138,656 words / 5 words per fragment = 138k fragments – The short fragments from every copy are mixed -
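
The fragment count in the shredded-book excerpt above can be checked with a few lines of Python. This is only an illustrative sketch, not code from the slides: the function names (`expected_fragments`, `shred`, `shred_copies`) and the choice of a random first cut per copy are assumptions made here to mimic five copies being cut at different positions.

```python
import random

def expected_fragments(copies, words_per_copy, words_per_fragment):
    """Total words across all copies divided by the fragment length."""
    return copies * words_per_copy // words_per_fragment

def shred(words, fragment_len, offset):
    """Cut one copy into consecutive fragments; the first cut falls after `offset` words."""
    cuts = [0] + list(range(offset, len(words), fragment_len)) + [len(words)]
    return [tuple(words[a:b]) for a, b in zip(cuts, cuts[1:]) if a < b]

def shred_copies(text, copies, fragment_len, seed=0):
    """Shred several copies at different (assumed random) offsets and mix the pieces."""
    rng = random.Random(seed)
    words = text.split()
    pile = []
    for _ in range(copies):
        first_cut = rng.randrange(1, fragment_len + 1)
        pile.extend(shred(words, fragment_len, first_cut))
    rng.shuffle(pile)  # fragments from every copy end up mixed together
    return pile

if __name__ == "__main__":
    # 5 copies x 138,656 words / 5 words per fragment
    print(expected_fragments(5, 138_656, 5))  # 138656, i.e. about 138k
    opening = ("It was the best of times, it was the worst of times, "
               "it was the age of wisdom, it was the age of foolishness,")
    for fragment in shred_copies(opening, copies=5, fragment_len=5)[:3]:
        print(" ".join(fragment))
```

Because each copy is cut at a different offset, fragments from different copies overlap one another, and that overlap is what makes reconstruction possible in the analogy.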

BENG181/CSE 181/BIMM 181 Molecular Sequence Analysis Instructor: Pavel Pevzner

COURSE ANNOUNCEMENT FOR WINTER 2021 BENG181/CSE 181/BIMM 181 Molecular Sequence Analysis https://sites.google.com/site/ucsdcse181 Instructor: Pavel Pevzner ● phone: (858) 822-4365 ● e.mail: [email protected] ● web site: bioalgorithms.ucsd.edu Teaching Assistants: ● Andrey Bzikadze ([email protected]) ● Hsuan-lin (Charlene) Her ([email protected]) Time: 6:30-7:50 Mon/Wed, Place: online (seminar Friday 4:00-4:50 online) Zoom link for the class: https://ucsd.zoom.us/j/99782745100 Zoom link for the seminar: https://ucsd.zoom.us/j/96805484881 Office hours: PP: (Th 3-5 online), TAs (online Tue 1-2 PM and 4-5 PM or by appointment online) PP zoom link: https://ucsd.zoom.us/j/96986851791 Andrey Bzikadze zoom link: https://ucsd.zoom.us/j/94881347266 Hsuan-lin (Charlene) Her zoom link: https://ucsd.zoom.us/j/95134947264 Prerequisites: The course assumes some prior background in biology, some algorithmic culture (CSE 101 course on algorithms as a prerequisite), and some programming skills. Flipped online class. Starting in 2014, the Innovative Learning Technology Initiative (ILTI) at University of California encourages professors to transform their classes into online offerings available across various UC campuses. Dr. Pevzner is funded by the ILTI and NIH to develop new online approaches to bioinformatics education at UCSD. Since 2014, well before the COVID-19 pandemic, all lectures in this class are available online rather than presented in the classroom. Multi-university class. This class closely follows the textbook Bioinformatics Algorithms: an Active Learning Approach that has now been adopted by 140+ instructors from 40+ countries. -

Spontaneous Generation & Origin of Life Concepts from Antiquity to The

SIMB News News magazine of the Society for Industrial Microbiology and Biotechnology April/May/June 2019 V.69 N.2 • www.simbhq.org Spontaneous Generation & Origin of Life Concepts from Antiquity to the Present Journal of Industrial Microbiology & Biotechnology Impact Factor 3.103 The Journal of Industrial Microbiology and Biotechnology is an international journal which publishes papers in metabolic engineering & synthetic biology; biocatalysis; fermentation & cell culture; natural products discovery & biosynthesis; bioenergy/biofuels/biochemicals; environmental microbiology; biotechnology methods; applied genomics & systems biotechnology; and food biotechnology & probiotics Editor-in-Chief Ramon Gonzalez, University of South Florida, Tampa FL, USA Special Issue Synthetic Biology; July 2018 Editors S. Bagley, Michigan Tech, Houghton, MI, USA; R. H. Baltz, CognoGen Biotech. Consult., Sarasota, FL, USA; T. W. Jeffries, University of Wisconsin, Madison, WI, USA; T. D. Leathers, USDA ARS, Peoria, IL, USA; M. J. López López, University of Almeria, Almeria, Spain; C. D. Maranas, Pennsylvania State Univ., Univ. Park, PA, USA; S. Park, UNIST, Ulsan, Korea; J. L. Revuelta, University of Salamanca, Salamanca, Spain; B. Shen, Scripps Research Institute, Jupiter, FL, USA; D. K. Solaiman, USDA ARS, Wyndmoor, PA, USA; Y. Tang, University of California, Los Angeles, CA, USA; E. J. Vandamme, Ghent University, Ghent, Belgium; H. Zhao, University of Illinois, Urbana, IL, USA [Chart: Impact Factor; plotted values 2.505, 2.439, 2.745, 2.810, 3.103] 10 Most Cited Articles Published in 2016 (Data from Web of Science: October 15, 2018) Senior Author(s) Title Citations L. Katz, R. Baltz Natural product discovery: past, present, and future 103 Genetic manipulation of secondary metabolite biosynthesis for improved production in Streptomyces and R.