The Doctor's Personality

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Doctor Who: Human Nature: the History Collection Pdf, Epub, Ebook

DOCTOR WHO: HUMAN NATURE: THE HISTORY COLLECTION PDF, EPUB, EBOOK Paul Cornell | 288 pages | 03 Mar 2015 | Ebury Publishing | 9781849909099 | English | London, United Kingdom Doctor Who: Human Nature: The History Collection PDF Book The plot was developed with fellow New Adventure novelist Kate Orman and the book was well received on its publication in Archived from the original on 23 June Select a valid country. Companion Freema Agyeman Martha Jones. Starfall - a world on the edge, where crooks and smugglers hide in the gloomy Edit Did You Know? User Reviews. It has a LDPE 04 logo on it, which means that it can be recycled with other soft plastic such as carrier bags. Added to Watchlist. Runtime: 45 min 45 min 50 episodes. Return policy. Back to School Picks. Sign In. The contract for sale underlying the purchase of goods is between us World of Books and you, the customer. Title: Human Nature 10 Oct Payment details. Although most praise for the script was directed at Cornell, a great deal of the episode had in fact been rewritten by executive producer Russell T Davies. No additional import charges at delivery! Item description Please note, the image is for illustrative purposes only, actual book cover, binding and edition may vary. The pioneers who used to be drawn by the hope of making a The Novrosk Peninsula: the Soviet naval base has been abandoned, the nuclear submarines are rusting Several years later, the revived Doctor Who television series included several people who had worked on the New Adventures. With the Doctor she knows gone, and only a suffragette and an elderly rake for company, can Benny fight off a vicious alien attack? Doorman Peter Bourke See all. -

Souveneir & Program Book (PDF)

1 COOMM WWEELLC EE!! NNVVEERRGGEENNCCEE 22001133 TTOO CCOO LCOOM WWEELC MEE!! TO CONVERGENCE 2013 starting Whether this is your fifteenth on page time at CONvergence or your 12, and first, CONvergence aims meet to be one of the best them celebrations of science all over the fiction and fantasy on course of the the planet. And possibly weekend. the universe as well, but Our panels are we’ll have to get back filled with other top to you on that. professionals and This year’s theme is fans talking British Invasion. We’ve about what they always loved British love, even if it is contributions to what they love to science fiction and hate. The conven- fantasy — from tion is more than H.G. Wells to Iain just panel discus- Banks or Hitch- sions — Check hiker’s Guide to out Mr. B. the Harry Potter. It’s Gentleman Rhymer the 50th Anni- (making his North versary of Doctor American debut Who as well (none on our Mainstage), of us have forgot- the crazy projects ten about that) and going on in Con- you’ll see that reflected nie’s Quantum Sand- throughout the conven- box, and a movie in Cinema Rex. tion. Get a drink or a snack in CoF2E2 or We have great Guests of Honor CONsuite, or visit all of our fantastic par- this year, some with connections to the theme and oth- ties around the garden court. Play a game, see some ers that represent the full range of science fiction and anime, and wear a costume if it suits you! fantasy. -

A Greeting from Paul Cornell President of the Board of Directors, Augustana Heritage Association

THE AUGUS ta N A HERI ta GE NEWSLE tt ER VOLUME 5 SPRING 2007 NUMBER 2 A Greeting From Paul Cornell President of the Board of Directors, Augustana Heritage Association hautauqua - Augustana - Bethany - Gustavus – Chautauqua...approximately 3300 persons have attended these first five gatherings of the AHA! All C have been rewarding experiences. The AHA Board of Directors announces Gatherings VI and VII. Put the date on your long-range date book now. 2008 Bethany College Lindsborg, Kansas 19-22 June 2010 Augustana College Rock Island, Illinois 10-13 June At Gathering VI at Bethany, we will participate in the famous Midsummers Day activities on Saturday, the 21st. We will also remember the 200th anniversary of the birth of Lars Paul Cornell, President of the Board of Directors Paul Esbjorn, a pioneer pastor of Augustana. We are planning an opening event on Thursday evening, the 19th and concluding on of Augustana - Andover, Illinois and New Sweden, Iowa, and Sunday, the 22nd with a luncheon. 4) Celebrating with the Archbishop of the Church of Sweden in Gathering VII at Augustana will include: 1) Celebrating attendence. the 150th anniversary of the establishment of the Augustana I would welcome program ideas from readers of the AHA Synod, 2) Celebrating the anniversary of Augustana College Newsletter for either Bethany or Augustana. I hope to be present and Seminary, 3) Celebrating the two pioneer'. congregations at both events. How about YOU? AHA 1 Volume 5, Number 2 The Augustana Heritage Association defines, promotes, and Spring 2007 perpetuates the heritage and legacy of the Augustana Evangelical Lutheran Church. -

MARCH 1St 2018

March 1st We love you, Archivist! MARCH 1st 2018 Attention PDF authors and publishers: Da Archive runs on your tolerance. If you want your product removed from this list, just tell us and it will not be included. This is a compilation of pdf share threads since 2015 and the rpg generals threads. Some things are from even earlier, like Lotsastuff’s collection. Thanks Lotsastuff, your pdf was inspirational. And all the Awesome Pioneer Dudes who built the foundations. Many of their names are still in the Big Collections A THOUSAND THANK YOUS to the Anon Brigade, who do all the digging, loading, and posting. Especially those elite commandos, the Nametag Legionaires, who selflessly achieve the improbable. - - - - - - - – - - - - - - - - – - - - - - - - - - - - - - - – - - - - - – The New Big Dog on the Block is Da Curated Archive. It probably has what you are looking for, so you might want to look there first. - - - - - - - – - - - - - - - - – - - - - - - - - - - - - - - – - - - - - – Don't think of this as a library index, think of it as Portobello Road in London, filled with bookstores and little street market booths and you have to talk to each shopkeeper. It has been cleaned up some, labeled poorly, and shuffled about a little to perhaps be more useful. There are links to ~16,000 pdfs. Don't be intimidated, some are duplicates. Go get a coffee and browse. Some links are encoded without a hyperlink to restrict spiderbot activity. You will have to complete the link. Sorry for the inconvenience. Others are encoded but have a working hyperlink underneath. Some are Spoonerisms or even written backwards, Enjoy! ss, @SS or $$ is Send Spaace, m3g@ is Megaa, <d0t> is a period or dot as in dot com, etc. -

The Ultimate Foe

The Black Archive #14 THE ULTIMATE FOE By James Cooray Smith Published November 2017 by Obverse Books Cover Design © Cody Schell Text © James Cooray Smith, 2017 Range Editor: Philip Purser-Hallard James Cooray Smith has asserted his right to be identified as the author of this Work in accordance with the Copyright, Designs and Patents Act 1988. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or in any form or by any means, without the prior permission in writing of the publisher, nor be otherwise circulated in any form of binding, cover or e-book other than which it is published and without a similar condition including this condition being imposed on the subsequent publisher. 2 INTERMISSION: WHO IS THE VALEYARD In Holmes’ draft of Part 13, the Valeyard’s identity is straightforward. But it would not remain so for long. MASTER Your twelfth and final incarnation… and may I say you do not improve with age1. By the intermediate draft represented by the novelisation2 this has become: ‘The Valeyard, Doctor, is your penultimate reincarnation… Somewhere between your twelfth and thirteenth regeneration… and I may I say, you do not improve with age..!’3 The shooting script has: 1 While Robert Holmes had introduced the idea of a Time Lord being limited to 12 regenerations, (and thus 13 lives, as the first incarnation of a Time Lord has not yet regenerated) in his script for The Deadly Assassin, his draft conflates incarnations and regenerations in a way that suggests that either he was no longer au fait with how the terminology had come to be used in Doctor Who by the 1980s (e.g. -

Download Doctor Who: Nemesis of the Daleks Free Ebook

DOCTOR WHO: NEMESIS OF THE DALEKS DOWNLOAD FREE BOOK Dan Abnett, Paul Cornell | 194 pages | 25 Jun 2013 | Panini Publishing Ltd | 9781846535314 | English | Tunbridge Wells, United Kingdom Doctor Who: Nemesis Of The Daleks Jan 30, Jeanette rated it really liked it Shelves: fictionscience-fictiongraphic-novel. I gave this book 3 stars out of 5, balancing good Daak and bad Hulk. Paul Cornell is a British writer of science fiction and fantasy prose, comics and television. In the 26 th century the Earth Empire is in a death struggle with voracious Dalek forces yet still riven Doctor Who: Nemesis of the Daleks home-grown threats. Doctor Who fans in the s and s were a pretty devoted lot. It's a magnificent illustration of why I despise multi-part storytelling with a passion. Davros has activated the long-dormant Doctor Who: Nemesis of the Daleks army hidden there, converting them to the white and gold colour scheme applied to the Daleks he created on the planet Necros in Revelation of the Daleks. He plays second fiddle during most of the Doctor Who: Nemesis of the Daleks. Goodreads is the world's largest site for readers with over 50 million reviews. Emperor of the Daleks Assimilation 2. So three and half stars. Are you happy to accept all Doctor Who: Nemesis of the Daleks But I wish they'd put them in a more accurate chronological order. Other books in this series. Doctor Who Magazine — The longer pieces were better, especially 'Train Flight' and 'Nemesis'. It was interesting to see some elements similar to later TV plots - for instance the idea of a planetary library this time infested with bugs rather than the more scary Vashta Neradaa graveyard of the Tardises and a Time Lord killer - and in 'Who's That Girl' there appears to be a female Doctor. -

Spring 2005 the Augustana Heritage Association (AHA) Co-Editors Arvid and Nancy Anderson Is to Define, Promote and Perpetuate

THE AUGUSTANA HERITAGE NEWSLETTER V O L U M E 4 S P R I N G 2 0 0 5 N U M B E R 2 A Letter from Paul Cornell President of the Augustana Heritage Association On Pearl Harbor Day, 2004 Many of our membership remember where we were and what we were doing on that day. Me? Playing ice hockey on Lake Nakomis in Minneapolis, Minnesota on a Sunday afternoon. I can better remember this year’s Gathering IV of the Augustana Heritage Association at Gustavus Adolphus. From the Jussi Bjoerling lecture Thursday through the luncheon on Sunday afternoon. It filled us with joy and thanksgiv- ing. Paul Cornell I realize more and more, and am thankful for, the legacy of Augustana that continues to guide and inspire me in the 7th decade of my life. The renewing of friendships, the words and music of “Songs from Two Homelands,” the presentations of pro- gram teachers, and the ambience (including the weather) of GA made for a great four days. Also to the GA committee for their pre- planning, their on-site operations, and all the behind-the-scenes – a very huge “tack så mycket”. I ask for your prayers and support as the second president of the AHA, stepping into the shoes of Reuben Swanson, is a momen- tous task. We are grateful for his four years of leadership. I am so pleased that he is continuing as a member of the AHA Board. Thank you, Reuben! The Program Committee for the September 14-17, 2006 Gathering V at Chautauqua Institute, Chautauqua, New York has met. -

Doctor Who's Feminine Mystique

Doctor Who’s Feminine Mystique: Examining the Politics of Gender in Doctor Who By Alyssa Franke Professor Sarah Houser, Department of Government, School of Public Affairs Professor Kimberly Cowell-Meyers, Department of Government, School of Public Affairs University Honors in Political Science American University Spring 2014 Abstract In The Feminine Mystique, Betty Friedan examined how fictional stories in women’s magazines helped craft a societal idea of femininity. Inspired by her work and the interplay between popular culture and gender norms, this paper examines the gender politics of Doctor Who and asks whether it subverts traditional gender stereotypes or whether it has a feminine mystique of its own. When Doctor Who returned to our TV screens in 2005, a new generation of women was given a new set of companions to look up to as role models and inspirations. Strong and clever, socially and sexually assertive, these women seemed to reject traditional stereotypical representations of femininity in favor of a new representation of femininity. But for all Doctor Who has done to subvert traditional gender stereotypes and provide a progressive representation of femininity, its story lines occasionally reproduce regressive discourses about the role of women that reinforce traditional gender stereotypes and ideologies about femininity. This paper explores how gender is represented and how norms are constructed through plot lines that punish and reward certain behaviors or choices by examining the narratives of the women Doctor Who’s titular protagonist interacts with. Ultimately, this paper finds that the show has in recent years promoted traits more in line with emphasized femininity, and that the narratives of the female companion’s have promoted and encouraged their return to domestic roles. -

Number 42 Michaelmas 2018

Number 42 Michaelmas 2018 Number 42 Michaelmas Term 2018 Published by the OXFORD DOCTOR WHO SOCIETY [email protected] Contents 4 The Time of Doctor Puppet: interview with Alisa Stern J A 9 At Last, the Universe is Calling G H 11 “I Can Hear the Sound of Empires Toppling’ : Deafness and Doctor Who S S 14 Summer of ‘65 A K 17 The Barbara Wright Stuff S I 19 Tonight, I should liveblog… G H 22 Love Letters to Doctor Who: the 2018 Target novelizations R C 27 Top or Flop? Kill the Moon J A, W S S S 32 Haiku for Kill the Moon W S 33 Limerick for Kill the Moon J A 34 Utopia 2018 reports J A 40 Past and present mixed up: The Time Warrior M K 46 Doctors Assemble: Marvel Comics and Doctor Who J A 50 The Fan Show: Peter Capaldi at LFCC 2018 I B 51 Empty Pockets, Empty Shelves M K 52 Blind drunk at Sainsbury’s: Big Finish’s Exile J A 54 Fiction: A Stone’s Throw, Part Four J S 60 This Mid Curiosity: Time And Relative Dimensions In Shitposting W S Front cover illustration by Matthew Kilburn, based on a shot from The Ghost Monument, with a background from Following Me Home by Chris Chabot, https://flic.kr/p/i6NnZr, (CC BY-NC 2.0) Edited by James Ashworth and Matthew Kilburn Editorial address [email protected] Thanks to Alisa Stern and Sophie Iles This issue was largely typeset in Minion Pro and Myriad Pro by Adobe; members of the Alegreya family, designed by Juan Pablo del Peral; members of the Saira family by Omnibus Type; with Arial Rounded MT Bold, Baskerville, Bauhaus 93, and Gotham Narrow Black. -

The Early Adventures - 5.4 the Crash of the Uk- 201 Pdf, Epub, Ebook

THE EARLY ADVENTURES - 5.4 THE CRASH OF THE UK- 201 PDF, EPUB, EBOOK Jonathan Morris | none | 31 Jan 2019 | Big Finish Productions Ltd | 9781781789667 | English | Maidenhead, United Kingdom The Early Adventures - 5.4 The Crash of the UK-201 PDF Book Textbook Stuff. View all Movies Sites. Some of these events are wonderful, some are tragic, but together they form our lives. First Doctor. The Black Hole. Series three saw the return of the First Doctor. Prev Post. Can she fix things so she gets back to the doctor. But it never made it. Steven , Sara. Thanks for telling us about the problem. Big Finish Originals. Short Trips 14 : The Solar System. The Dimension Cannon. You must be logged in to post a comment. Social connect: Login Login with twitter. Star Wars. Subscriber Short Trips. Cancel Save. Crashing on the planet Dido, a tragic chain of events was set in motion leading to the death of almost all of its crew and a massacre of the indigenous population. File:Domain of the Voord cover. The Eleventh Doctor Chronicles. Tales of Terror. The Scarifyers. Stockton Butler marked it as to-read Aug 31, Torchwood - Big Finish Audio. This has what seems to be a wonderful effect: the crew survives, the ship proceeds to arrive at the colony Astra, and Vicki goes on to live a long, happy life, first with her father and later with her husband and children. The baddies are a bit new series Doctor Who and would have been realised very differently in Tony rated it it was amazing May 31, For the first two stories, the role of Barbara Wright was recast, as Jacqueline Hill was deceased. -

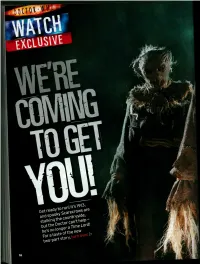

T 1913, I ^ * I No Longer A

XCLUSW-t 1913, ^ * I i no longer a • ' *• • *:• i li • r> . DOCTOR WHO Saturdays BBi DOCTOR WHO CONFIDENTIAL Saturdays THE FINAL STRAW "I'd say this is the scariest creature so far," says Ken Hosking (Scarecrow, far left) - and as a veteran monster-performer, he's well placed to know. "But it only takes about 20 minutes to put on, so it's not one of the more complicated costumes." "It's comfortable to wear because it's soft, not rigid, so you can do lots of action in it," adds Ruari Mears (Scarecrow, centre). "But when you start exerting yourself, ventilation becomes an issue. We've been given breathing ^W exercises by the choreographer." S M" %ff"r^. i*j$L "We breathe in for eight •'1 '~^?um-- seconds, breathe out for eight, breathe in for five, breathe out for F£A 'a eight - that sort of thing," adds •a jfl FJ«L ! 1 Hosking, "just generally varying 1 \ your breathing to slow it down •r~~.' . kd x 3 P \ iff/ consciously, so you don't panic " "*f»pr i and hyperventilate. But it's not f" ^' *^*^ as physically arduous as some of the other monsters have been." Maybe Scarecrow Hosking got -V off lightly - "You didn't have to run up that blooming country lane!" // Mears retorts. For exclusive video clips from our Scarecrow photo shoot, visit www.radiotimes.com/ doctor-who-scarecrows - The Doctor is forced ho regenerator Russell T Davies has flagged this two-parter as "a very human... but he different sort of a story", W isn't safe at all" and he isn't wrong. -

Celestial Toyroom 506 (Portrait)

why and how, we shall always remember EDITORIAL them. by Alan Stevens Fiona’s eulogy for David Collings closes this issue. “Take me down to Kaldor City Where the Vocs are green Lastly, I would like to thank JL Fletcher for yet and the girls are pretty.” another breathtaking, full-colour postcard, this time themed on… the Storm Mine Yes, we’ve gone Vocing mad! murders! So buckle up for a journey into the ‘Boucherverse’, as Fiona Moore and I tackle eugenics, religion and colonialism on an unnamed planet ruled by the great god Xoanon! Fiona then takes a look behind-the-scenes of The Robots of Death and shares some fascinating production details, before treating us to a sample chapter from her up-and-coming critical monograph on the aforementioned story, by way of The Black Archive book series. Issue 506 Edited by Alan Stevens But we haven’t finished yet. Ann Worrall Cover by Andy Lambert delves into the politics, strategies, and mind- Proofreading by Ann Worrall bending questions raised by Magic Bullet’s and Fiona Moore Kaldor City audio drama series, which leads us Postcard by JL Fletcher nicely into a presentation by Sarah Egginton and myself of a complete, annotated version Published by the of the warped game of chess that took Doctor Who Appreciation Society place between the psychostrategist Carnell Layout by Nicholas Hollands and possessed robot V31 for the Kaldor All content is © City Taren Capel adventure ; the episode, relevant contributor/DWAS incidentally, that inspired artist Andy Lambert to produce this issue’s magnificent No copyright infringement is intended wrap-around cover.